Executive Summary

Antisemitic and white supremacist groups and their supporters are using Facebook Pages, Instagram profiles, YouTube channels, and X accounts to raise money for their causes, according to a new review by ADL and Tech Transparency Project (TTP). Our findings highlight where these companies are falling short on their promises to moderate hate groups and how they are even, at times, providing tools to facilitate fundraising efforts.

We found that dozens of accounts, including some whose very existence violates platforms’ policies, are using social media to direct people to outside hate group websites; these sites solicit donations, sell memberships, or hawk hate-related merchandise like books, mugs, and T-shirts. Much of this activity is taking place in plain sight. In some instances, the companies’ own products are helping hate groups achieve greater fundraising reach. In one case, a hate group installed a “Shop Now” button on its Facebook Page. In another, on YouTube, a channel with “Ku Klux Klan” in its name was able to display a donation link on its cover photo of a Confederate flag.

Major social media platforms are not only hosting hate groups that violate their policies, but at times helping these groups generate streams of revenue to support their movements. Based on our findings, here are our recommendations for industry and government:

- Tech companies must effectively enforce existing policies against hate and extremism, transparently, equitably, and at scale.
- Tech companies should strengthen and robustly enforce policies prohibiting outbound links to hate group websites.
- Tech companies should stop allowing commerce-related product features to facilitate donations and purchases for hate-based merchandise.
- The government should mandate transparency regarding content policy and enforcement. This should include information about outbound links as well as commerce-related policies and product features.

This project was conducted in summer 2023, but researchers revisited each platform in October and found that hate groups and their supporters continued to use the platforms for fundraising purposes.

Meta and YouTube both removed most of the content highlighted in the report after it was shared with them prior to publication. YouTube said it would continue to investigate fixes for its review process, while Meta did not address the wider issues that the report identified. Following publication of the report, X responded that it had taken action under its rules. We found that one account has been removed, but we cannot verify whether others have been de-amplified or otherwise actioned.

Methodology

This project examined whether hate groups are able to carry out fundraising on Facebook, Instagram, YouTube, and X.

First, we compiled a list of hate groups and movements from ADL’s Glossary of Extremism that were tagged with all three of the following categories: “groups/movements,” “white supremacist,” and “antisemitism.” The list totaled 130 terms associated with current and historical hate groups and movements.

Second, we compiled a list of websites linked to the 130 hate groups and movements. We identified the sites via hate group social media accounts.

Third, we determined that 55 websites associated with 50 of the hate groups and movements raise funds through some combination of donations, membership fees, and merchandise sales. We entered these website addresses into the search bars of Facebook, Instagram, X, and YouTube, and examined the results to look for accounts promoting these sites.

Finally, we examined the Facebook Pages, YouTube channels, and X accounts that surfaced in the search results to determine if they directly solicited donations or sold memberships or merchandise.

Findings on Facebook, Instagram, X, and YouTube

Facebook and Instagram

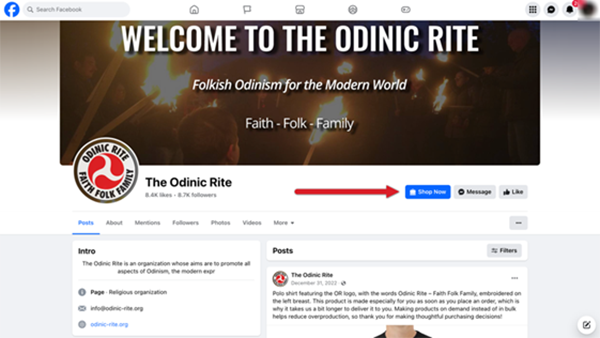

We found hate groups using Meta platforms to raise money, in some cases using Facebook’s own product features for fundraising. For example, the Facebook Page for Odinic Rite (an organization dedicated to the advancement of Odinism, a racist Norse pagan religious sect embraced by white supremacists) features a “Shop Now” button—a feature offered by Facebook—that links to the group’s off-platform website shop. The site sells Odinic Rite-branded clothing and merchandise, in addition to books and digital music about Odinism. Visitors to the site can click on a tab to join Odinic Rite or pay their membership renewal fee.

We searched for but did not find specific Facebook policies restricting who can use Shop Now and other commerce-related product features. However, Facebook’s Community Standards, which apply to all Pages, ban “content that praises, substantively supports or represents ideologies that promote hate, such as nazism [sic] and white supremacy.”

The Odinic Rite Facebook Page, which was created in 2009 and has more than 8,700 followers, features photos of members wearing Odinic swastika pins and merchandise with the sonnenrad symbol, the sun wheel associated with Nazis and white supremacy. The Odinic Rite’s website includes blog posts making statements like, “The two races are just not compatible … To blend these distinct differences is to propagate cultural genocide.”

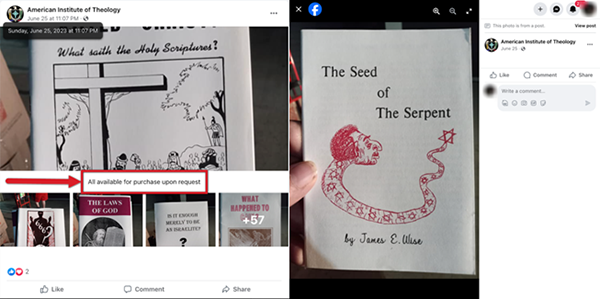

In another example, the Facebook Page for American Institute of Theology (Kingdom Identity Ministries), which identifies as part of the Christian Identity movement, features a link to its website, a single scrollable page selling books, music, and Bible study classes. The Christian Identity movement is a racist and antisemitic religious sect.

The American Institute of Theology website echoes Christian Identity beliefs in a “doctrinal statement,” calling Jews the “children of Satan” and an “evil race” and making pronouncements like, “Race-mixing is an abomination in the sight of Almighty God.”

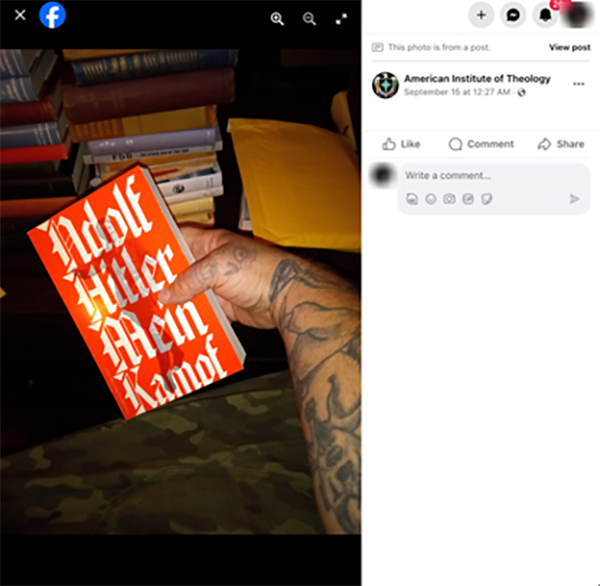

Books offered for sale on the group’s website and Facebook Page in June included “The Protocols of the Learned Elders of Zion,” a notorious political forgery about alleged secret plans of Jewish leaders to attain world domination, and “The International Jew,” an antisemitic work first published in the 1920s by Henry Ford. In September, a post on the group’s Facebook Page offered more racist and Nazi books for sale, including Adolf Hitler’s notorious 1925 autobiographical manifesto, Mein Kampf. The post refers to the books as “treasures.”

Facebook says that Pages on the platform and their content must comply with its Community Standards. Those standards prohibit content that “praises, substantively supports or represents ideologies that promote hate,” including Nazism and white supremacy.

The study also found that individual users on Facebook promote hate group websites that solicit funds from supporters.

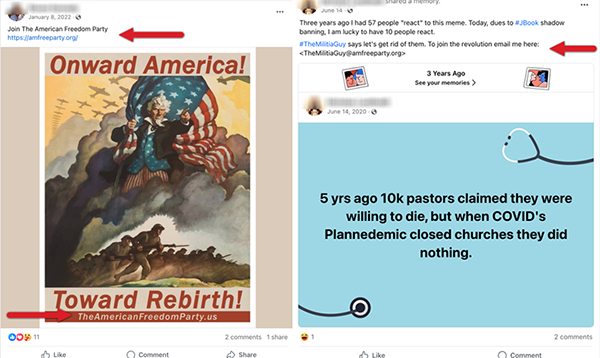

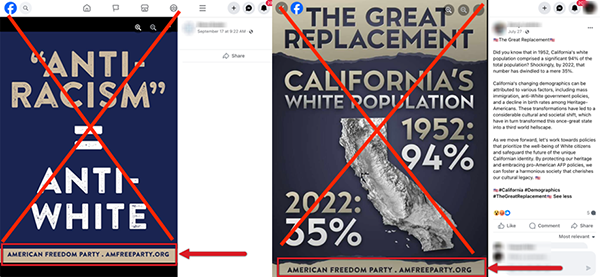

For example, one Facebook post from an individual user called on people to join the American Freedom Party, a white supremacist group. The post shared a link to the group’s website, which solicits donations and sells memberships, including lifetime membership for $1,000. Facebook has banned the American Freedom Party, according to an internal company list of “dangerous organizations” leaked by The Intercept in 2021.

In addition, researchers found individual Facebook users circulating images that promoted the American Freedom Party’s website. One image equated “antiracism” with being “anti-white,” while another invoked the “Great Replacement,” a conspiracy theory about whites being replaced by non-white immigrants.

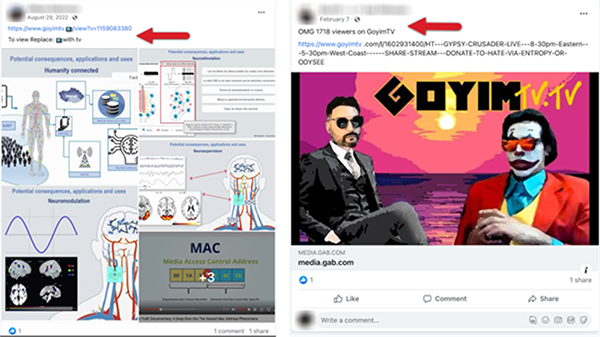

Some Facebook users shared a link to the website for GoyimTV, which is run by the hate group Goyim Defense League, a virulently antisemitic network known for stunts that troll and harass Jews. The GoyimTV site sells products like swastika-emblazoned T-shirts and a pack of soap to “wash the Jew away,” and gives options to donate by cryptocurrency and Amazon gift cards.

When we clicked on the GoyimTV link in the above posts, Facebook generated a message that read, “You won’t be able to go to this link from Facebook. The link you tried to go to does not follow our Community Standards.”

This shows the platform is able to identify and block outbound links to content that violates its policies. However, Facebook did not remove the links in question or the posts that shared them, meaning that users could simply copy and paste the links into a different browser window to reach the site.

Researchers found that Facebook did not consistently block violating links in this way. For example, the platform did not block links to the American Freedom Party website in the previously mentioned Facebook posts, despite including the group on its list of banned “dangerous organizations.”

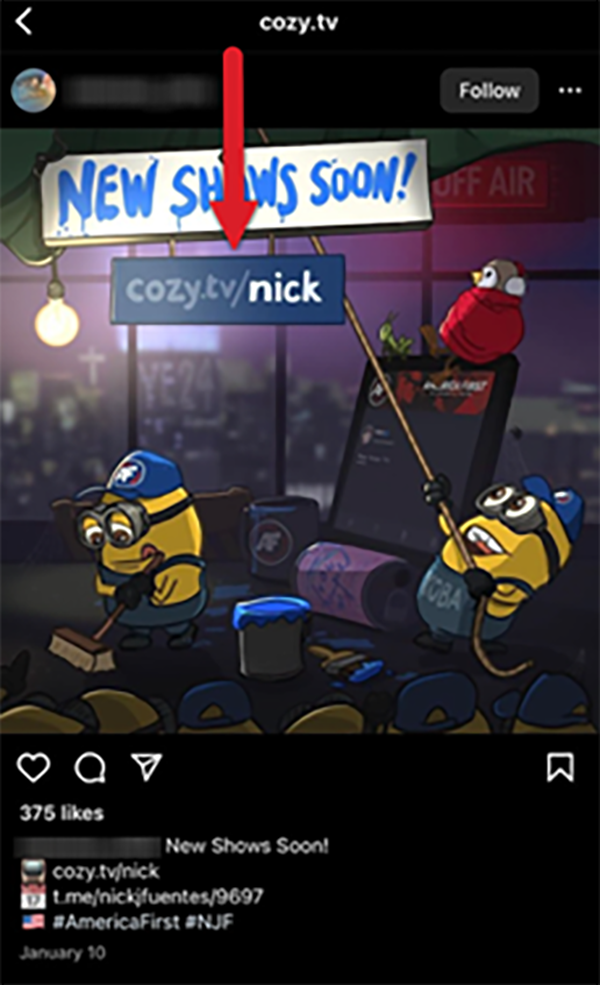

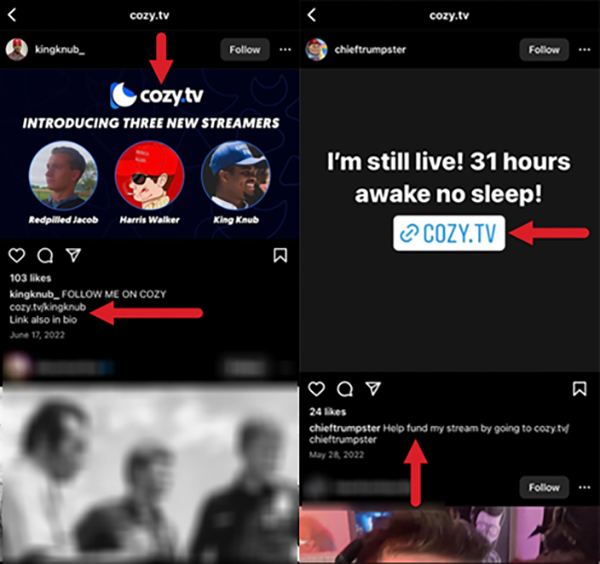

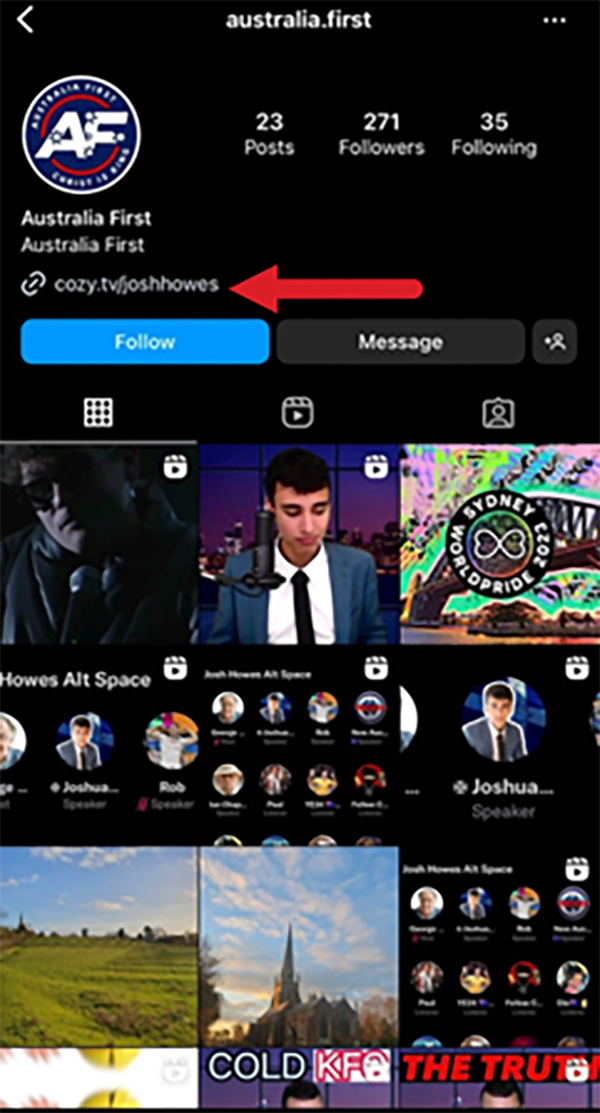

Meta’s other platform, Instagram, limits where users can share clickable hyperlinks, restricting them to a user’s bio section and Instagram Stories, which disappear in 24 hours. But we found Instagram accounts that embedded non-clickable hate group website addresses in images they posted to the platform. For example, multiple users posted images with the web address for cozy.tv, a streaming platform launched by white supremacist pundit and organizer Nicholas Fuentes. Cozy.tv collects donations and sells merchandise on its site.

Cozy.tv hosts numerous figures who broadcast racist and other kinds of hate speech that violate Facebook’s Community Standards, and Fuentes has appeared on Facebook’s list of banned dangerous individuals. But the platform is allowing users to repeatedly post cozy.tv links, even though Meta has said that its artificial intelligence technology can scan text in images to identify violating hate content.

Some Instagram users also linked to cozy.tv livestreams in their Instagram bios, including a user called Australia First, whose name appears to be an offshoot of Fuentes’ America First mantra.

YouTube

The study also found that YouTube enabled hate groups, which violate the platform’s policies, to solicit donations.

YouTube allows users to post links in the “about” section of a channel, in a channel’s banner photo, and in the description section for individual videos. We found that hate group supporters use all these options to raise money.

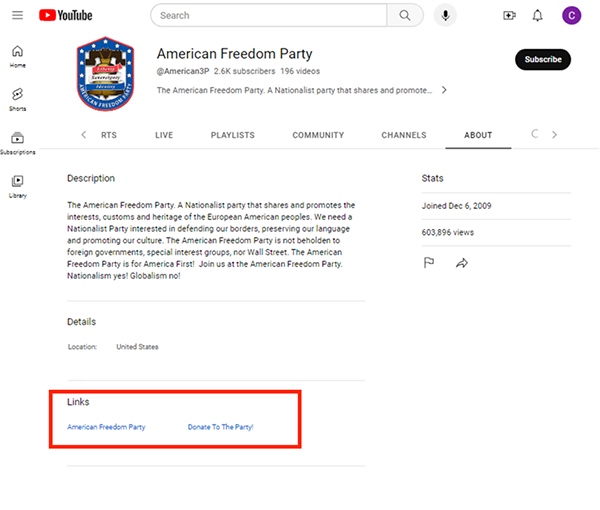

For example, we found a YouTube channel called American Freedom Party, the name of the white supremacist group. The channel has a “Donate To The Party!” link in its “About” section. The link leads to a now-defunct contribution page through payment processor Square.

The American Freedom Party channel, which has over 2,600 subscribers and over 600,000 views on YouTube, appears to be the official channel of the hate group. The channel links directly to the organization’s official website and has posted dozens of videos labeled “American Freedom Party Report.” ADL raised concerns about this channel in 2019, but it remains active on the platform and has gained more than 100,000 additional views since ADL’s report.

The channel’s videos promote antisemitic and white supremacist ideologies that violate YouTube’s hate speech policy. One video that has racked up over 4,300 views complains about “secretive globalist organizations that have taken over our society,” and shows an image of a burning Star of David while discussing how Europeans will retake the world from their “enemies.” This appears to refer to the antisemitic conspiracy theory that Jews are bent on world domination and control key institutions. Another video shows an interview with white supremacist Tom Sunic on Red Ice Radio. YouTube removed the channel for Red Ice Radio, a prominent video news outlet for white nationalists, in October 2019.

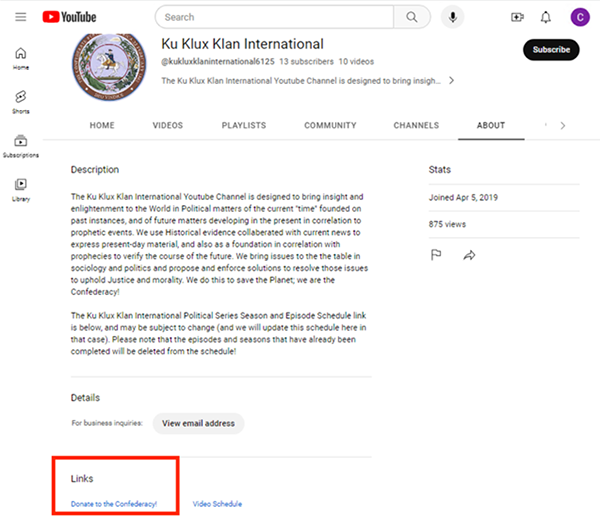

We also identified a YouTube channel called “Ku Klux Klan International” that had a hyperlink titled “Donate to the Confederacy!” in the bottom right corner of its Confederate flag banner photo and in its “About” section. The hyperlink, which was removed at some point after our last report mentioning the group, led to a PayPal account for @Klanfederacy where people could send funds to the group.

The content on this YouTube channel promoted the views of the Ku Klux Klan, the notorious American white supremacist organization, though it wasn’t clear if the channel represented an actual faction of the group. One video on the channel recites the “sacred vows” of the KKK, stating that members must be “opposed to Negro equality” and “in favor of a white man's government in this country”—clear violations of YouTube’s hate speech policy.

Though YouTube does have policies on external links, we searched for but did not find clarification as to whether a hate group can link to a payment account from its platform. YouTube prohibits linking to external sites that raise funds for terrorist organizations but does not say if the same rule applies to domestic hate groups. YouTube also prohibits links to hate speech content that would violate its own policies, but in this case, the channel linked to a PayPal account, not hate content.

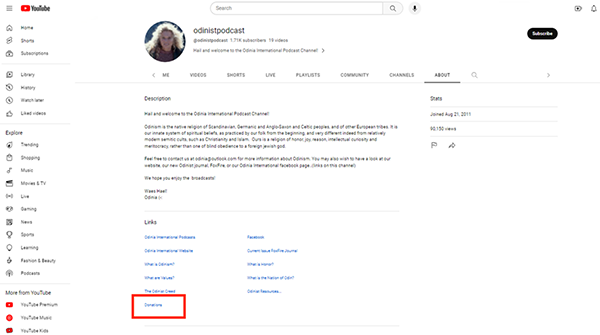

A YouTube channel called “odinistpodcast” links to a donations page for Odinia International, a self-described “Odinist religious organization.” As noted previously, Odinism is a racist variant of a Norse pagan religious sect. The donations page asks people to send cash or check by mail, saying its PayPal account was deactivated because “European ancestry people are particularly hated by supremacist Jews.”

Videos on the channel are rife with antisemitic and white supremacist rhetoric. One video refers to Charlemagne’s massacre of Saxons in the Middle Ages as “white genocide,” using the term for the white supremacist belief that the white race is dying due to a growing non-white population and a Jewish conspiracy to engineer forced assimilation. In another video, the narrator rails against “heathen organizations which are Zionist controlled,” a reference to the antisemitic conspiracy theory that Jews have too much power. The video closes with shoutouts to “real men and women and true heroes,” naming David Duke, America’s most well-known racist and antisemite, and David Irving, a Holocaust denier. These videos appear to violate YouTube’s hate speech policy, which bans content promoting hatred or violence against individuals on the basis of race, religion, ethnicity, and other characteristics.

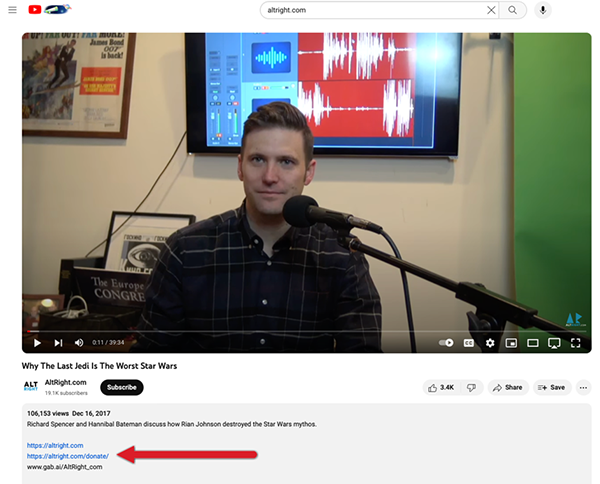

The YouTube channel AltRight.com, which features videos of white supremacist Richard Spencer, directs viewers to a donation page for AltRight Corp, which was formed through a merger between the National Policy Institute and a Scandinavian media platform that amplifies antisemitism. The donation link, which directs users to a Bitcoin QR code and mailing address, is listed in the description text for the channel’s most popular videos.

Some of the videos on the channel are blurred out with a warning that they contain content that “may be inappropriate or offensive to some audiences,” but users have the ability to click through to see them anyway. The channel’s most popular videos—which show Spencer discussing Star Wars and Black Panther movies, inserting racist commentary—include the link to the AltRight.com donation page.

The AltRight.com website links to only one social media platform: YouTube. That suggests that YouTube plays an important role for the organization. YouTube’s failure to effectively de-platform Spencer despite his ban means that his videos continue to generate views and solicit funding for his hate group.

X

In 2021, X (then Twitter) introduced a feature that enabled users to send money to their favorite accounts. None of the accounts identified in this report used this feature, and we did not find other product features that facilitated fundraising. We did, however, find X accounts posting links to hate group websites that raise money through donations and merchandise sales.

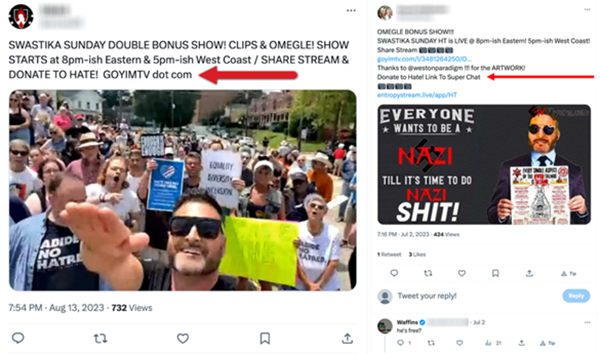

For example, we found users sharing links to donate on GoyimTV, the video platform of Goyim Defense League, the virulently antisemitic network. In August 2023, one user posted “DONATE TO HATE! GOYIMTV dot com.” Another user shared multiple links to GoyimTV livestreams and called on people to “Donate to Hate!” One of the posts declared it “Swastika Sunday” and included a meme with the text, “EVERYONE WANTS TO BE A NAZI TILL IT’S TIME TO DO NAZI SHIT” and an image of a man holding a placard stating that “EVERY SINGLE ASPECT OF THE JEWISH TALMUD IS SATANIC.”

According to X’s currently posted policies, the platform prohibits linking to content that “promotes violence against, threatens or harasses other people on the basis of race, ethnicity, national origin” and other attributes. The platform also bans “hateful entities” and “individuals who affiliate with and promote their illicit activities,” including by distributing their media and propaganda.

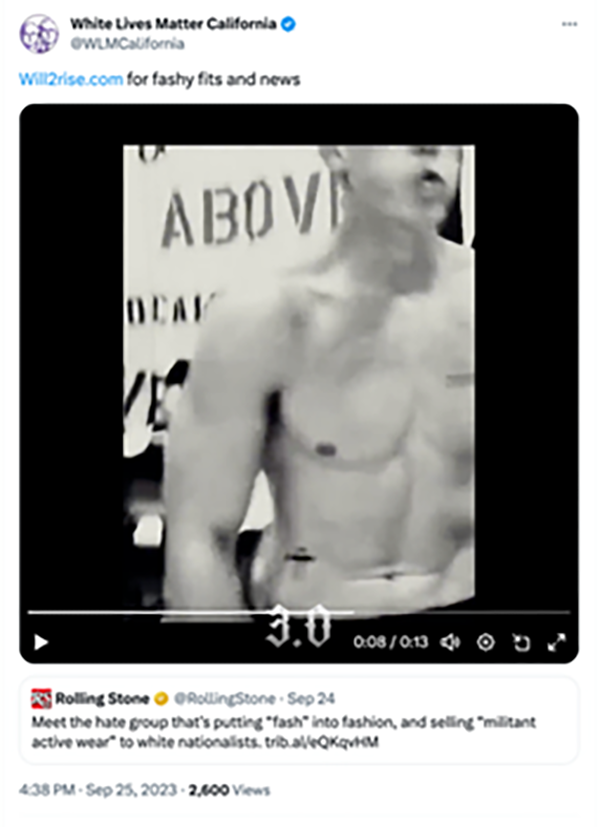

We also found X users posting links to an e-commerce site, Will2Rise. Some of the T-shirts for sale on the site are branded with the logo of Active Clubs, a localized network of crews inspired by the white supremacist Rise Above Movement. (Rolling Stone recently characterized Will2Rise as a “fascist fashion house” that is trying to “monetize” Active Club members.) The site also seeks donations for Robert Rundo, leader of the Rise Above Movement, who was recently extradited from Romania to the U.S. to face charges stemming from violent clashes with anti-fascist protestors in 2017. One of the X posts promoting the merchandise shop was viewed nearly 3,000 times and received 41 likes.

Some X users also posted links to an online store affiliated with the American Christian Dixie Knights, a Tennessee-based Ku Klux Klan group founded in 2014. One post showed a T-shirt with an image of a skull stamped with a Star of David and the text “Race Mixing is Death.” The online store appears to have been taken down since the research was conducted.

In September, we found X accounts promoting the above-mentioned Will2Rise shop and the site for Media2Rise, the media production arm of the Rise Above Movement.

Some of the accounts had a blue checkmark, indicating they paid a monthly fee for the platform’s premium subscription service. X gives these accounts a variety of perks, including featuring them in the platform’s “For You” feed.

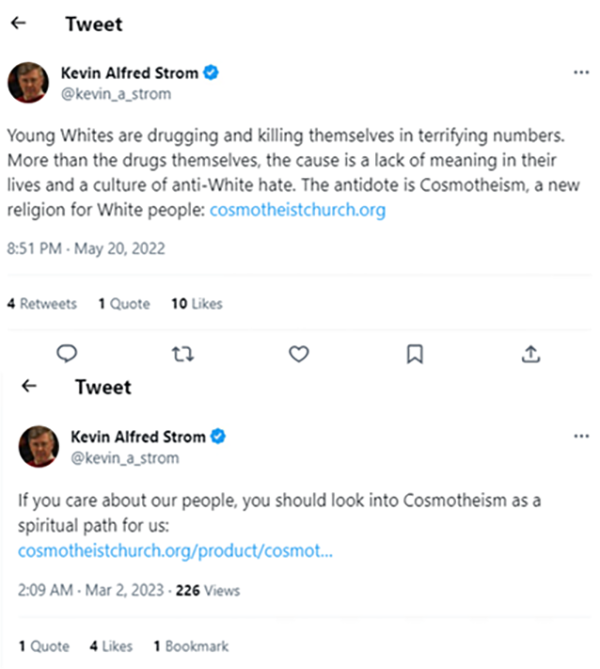

One premium account encouraged users to visit cozy.tv, the livestreaming platform of white supremacist leader Nicholas Fuentes, and donate to Fuentes. Another premium account that appears to belong to neo-Nazi Kevin Strom encouraged users to join the Cosmotheist Church, calling it “a new religion for White people.” The Cosmotheist Church was the brainchild of William Pierce, founder of the neo-Nazi group National Alliance. Shop and donate links on the National Alliance and National Vanguard websites take users to a Cosmotheist Church site to complete their transactions. The National Alliance website says, “Click here to easily donate online to the Cosmotheist Church, the parent and spiritual basis of all our work.”

Conclusion

Hate groups and their supporters are using Facebook, Instagram, YouTube, and X to direct people to outside websites that solicit donations and sell memberships and merchandise. YouTube and Facebook are also providing white supremacist and extremist groups with tools to help them improve their fundraising reach and capabilities. Until the tech companies tighten their policies around hate group fundraising and enforce them at scale, this practice will likely continue.

Policy Recommendations

Based on our findings, here are recommendations for industry and government:

- Enforce existing policies against hate and extremism. Enforce them transparently, equitably, and at scale. While platforms have created content policies that prohibit hate and extremism, research repeatedly shows that these policies are not being enforced effectively or consistently. In addition to prohibiting this content and establishing effective consequences for policy violations, a platform’s content policies should be clear and easy to understand. These policies should be enforced transparently, equitably, and at scale.
- Tech companies should strengthen and robustly enforce policies prohibiting outbound links to hate group websites. Tech companies must have strong policies that prohibit linking to websites that promote hate, extremism, antisemitism, and racism. As noted above, many of these websites prominently feature donation solicitations or other fundraising efforts (e.g., merchandise sales). Policies must account for the many ways hate groups take advantage of outbound linking on platforms to fundraise. For example, YouTube, following a previous ADL report on “Hate Parties,” updated its policy prohibiting links to content that violates its Community Standards. The platform added that this prohibited activity also includes “verbally directing users to other sites, encouraging viewers to visit creator profiles or pages on other sites, or promising violative content on other sites.” A policy, however, is only as good as its enforcement. Platforms must enforce these policies at scale.
- Tech companies should stop allowing commerce-related product features to facilitate donations and purchases for hate-based merchandise. Hate groups are taking advantage of commerce-related product features on platforms (e.g., Facebook’s “Shop Now” button) to fundraise and solicit money. Platforms should not only improve policies related to using these features but also consider other product-focused improvements to ensure that they are not enabling hate groups’ ability to attract financial supporters.
- The government should mandate transparency regarding content policy and enforcement, including information related to outbound links as well as commerce-related policies and product features. ADL has consistently pushed for more transparency regarding content policies and enforcement metrics. We need more information about how outbound links and commerce-related products are used on social media platforms. This could be done via third-party auditing, publishing transparency reports, or engaging in relevant risk assessments. Government-mandated disclosures, however, can and should be crafted in a way that protects private information about users.

This piece was updated at 3:45 p.m. ET on November 30, 2023, to include X’s response.