As the war between Israel and Hamas continues, people are turning to social media in search of reliable information about family members, events on the ground, and where the current crisis might lead. The introduction of Generative Artificial Intelligence (GAI) tools, which can produce deepfakes and synthetic audio, is further complicating how the public engages with and trusts online information in an environment already rife with misinformation and inflammatory rhetoric. The problem is twofold: bad actors intentionally exploit these tools to share misleading information, and the mere idea that content might be fake lets them cast doubt on any content at all. The result is a dangerous erosion of trust in online content.

Fake news stories and doctored images are not unique to the current conflict in the Middle East; they have long been an integral part of warfare, with armies, states, and other parties using the media as an extension of their battles on the ground, in the air, and at sea. What has changed radically is the advent of digital technology. Any reasonably tech-savvy person with access to the internet can now generate increasingly convincing fake images, videos (“deepfakes”), and audio using GAI tools (such as DALL-E, which generates images from text), and then distribute this fake material to huge global online audiences at little or no cost. Recent examples of such fakes include an AI-generated video supposedly showing First Lady Jill Biden condemning her own husband’s support for Israel.

By using GAI to spread mis- and disinformation, for example, bad actors are actively seeking not just to score propaganda victories, but also to pollute the information environment. Once a climate of general mistrust in online content takes hold, bad actors need not even actively exploit GAI tools; the mere awareness of deepfakes and synthetic audio makes it easier for them to manipulate certain audiences into questioning the veracity of authentic content. This phenomenon has become known as “the liar’s dividend.”

In the immediate aftermath of Hamas’s brutal invasion of Israel on October 7, we have witnessed an increase in antisemitism and Islamophobia online as well as in the physical world. Images of Israelis brutally executed by Hamas have been widely distributed on social media. While these images have helped document the horrors of the war, they have also become fodder for misinformation. So far, we have not been able to confirm that generative AI images are being created and shared as part of large-scale disinformation campaigns. Rather, bad-faith actors are claiming that real photographic or video evidence is AI-generated.

The perfect storm: An example from Israel

On Wednesday, October 11, a spokesperson for Israeli Prime Minister Benjamin Netanyahu said that babies and toddlers had been found decapitated in the Kfar Aza kibbutz following the Hamas attack on October 7. In US President Joe Biden’s address to the nation later that evening, he said that he had seen photographic evidence of these atrocities. The Israeli government and US State Department both later clarified that they could not confirm these stories, which unleashed condemnation on social media and accusations of government propaganda.

To counter these claims, the Israeli Prime Minister’s office posted three graphic photos of dead infants to its X account on Thursday, October 12. Although these images were later verified by multiple third-party sources as authentic, they set off a series of claims to the contrary. The images were also shared by social media influencers, including Ben Shapiro of The Daily Wire, who has been outspoken in his support for Israel.

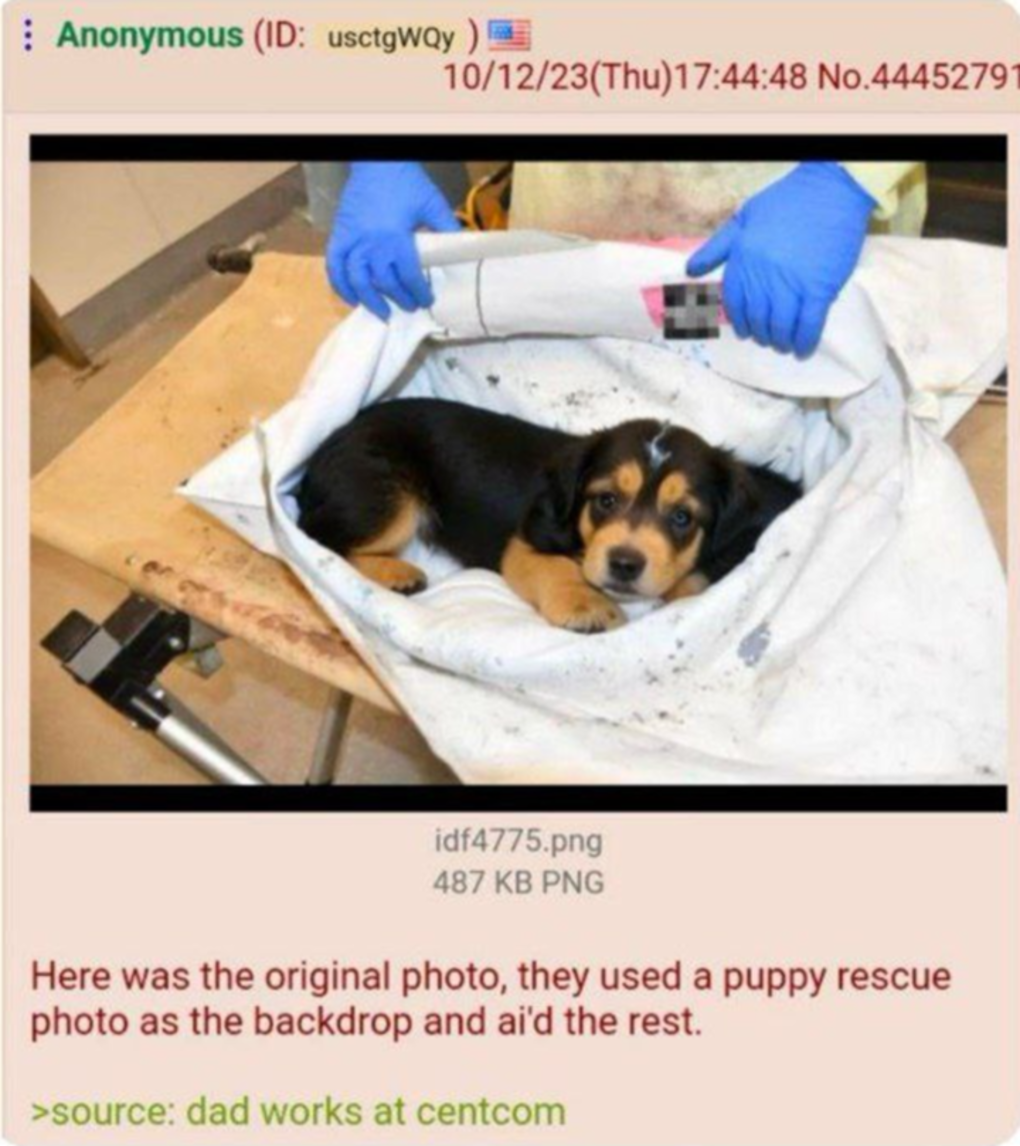

Critics of Israel, including self-described “MAGA communist” Jackson Hinkle, questioned the veracity of the images and claimed that they had been generated by AI. As supporting evidence, Hinkle and others showed screenshots from an online tool called AI or Not, which allows users to upload images and check if they were likely AI- or human-generated. The tool determined that the image Shapiro shared was generated by AI. An anonymous user on 4chan then went a step further and posted an image purporting to show the original image of a puppy about to undergo a medical procedure, alleging that the Israeli government had used this image to create the “fake” one of the infant’s corpse:

ADL took this screenshot on October 14, 2023.

The 4chan screenshot was circulated on Telegram and X as well.

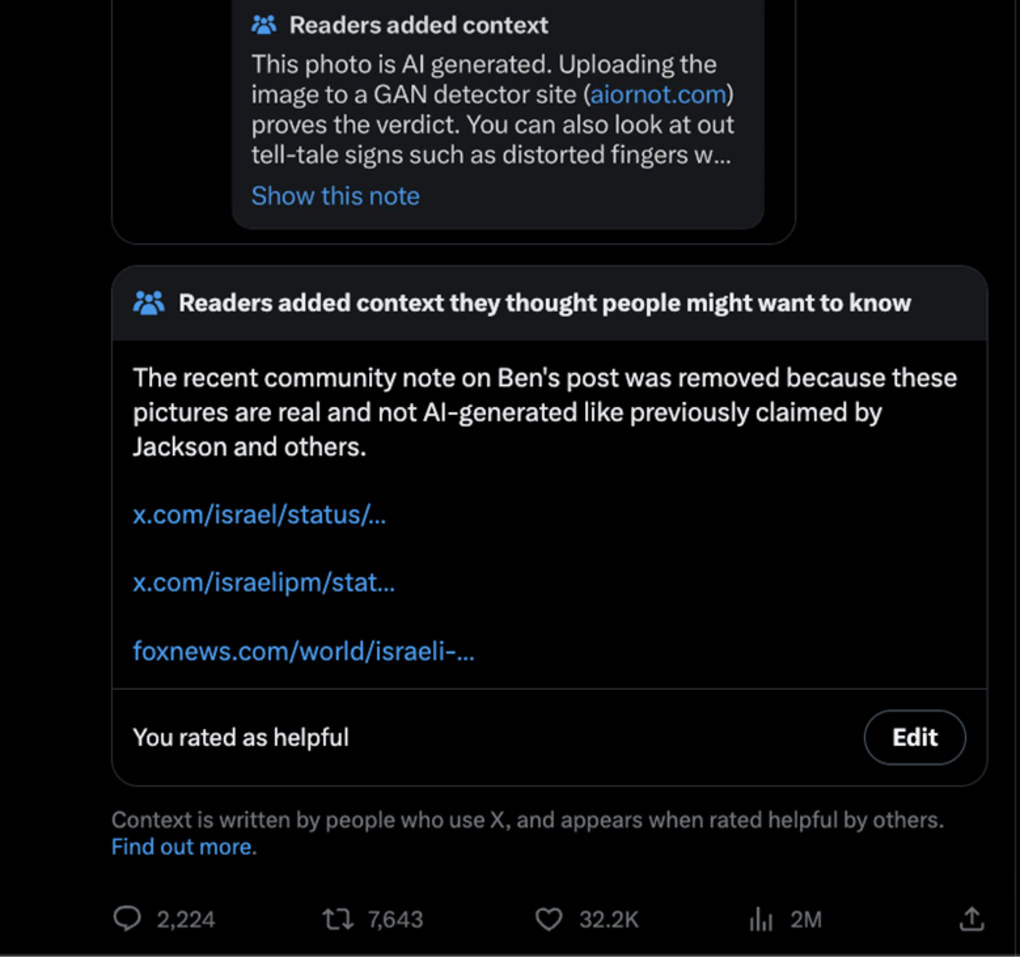

Other X users, including YouTuber James Klüg, disputed Hinkle’s assertion, sharing their own examples where AI or Not determined that the image was human-generated. Hinkle’s post has received over 22 million impressions at the time of this writing, whereas Klüg’s has only received 156,000.

Our researchers at ADL Center for Tech & Society (CTS) replicated the experiment with AI or Not and got both results: When using the photo shared by the Israeli PM’s X account, the tool determined it was AI. But when using a different version downloaded from Google image search, it determined the photo was human-generated. This discrepancy says more about the reliability of the tool than about any deliberate manipulation by the Israeli government. AI or Not’s inconsistencies are well documented, especially its tendency to produce false positives.

The Jerusalem Post confirmed the images were indeed real and had been shown to US Secretary of State Antony Blinken during his visit to the Israeli Prime Minister’s office on October 12. In addition, Hany Farid, a professor at the UC Berkeley School of Information, says his team analyzed the photos and concluded that AI was not used. Yet this is unlikely to convince social media users and bad-faith actors who believe the images were faked. X’s Community Notes feature, which crowdsources fact-checks from users, applied labels to some posts supporting the claim of AI generation and other labels refuting the claim:

Dueling Community Notes on posts that shared the image. ADL took this screenshot on October 17, 2023.

This incident exposes a “perfect storm” of an online environment rife with misinformation and the impact that many experts feared generative AI could have on social media and the trustworthiness of information. The harm caused by the public’s awareness that images can be generated by AI, together with the so-called “liar’s dividend” that lends credibility to claims of real evidence being faked, outweighs any attempts to counter these claims. Optic’s AI or Not tool includes warning labels stating that the tool is a free research preview and “may produce inaccurate results.” But this warning is only as effective as the public’s willingness to trust it.

CTS has already commented on the misinformation challenges that the Israel-Hamas war poses to social media platforms. Generative AI adds another layer of complexity to those challenges, even when it is not actually being used.

Recommendations for social media companies and GAI developers

Amid an information crisis that is exacerbated by harmful GAI-created disinformation, social media platforms and generative AI developers alike have a crucial role to play in identifying, flagging, and if necessary, removing synthetic media disinformation. The Center for Tech & Society recommends the following for each:

Impose a clear ban on harmful manipulated content: In addition to regular content policies that ban misinformation in cases where it is likely to increase the risk of imminent harm or interference with the function of democratic processes, social media platforms must implement and enforce policies prohibiting synthetic media that is particularly deceptive or misleading, and likely to cause harm as a result. Platforms should also ensure that users are able to report violations of such a policy with ease.

Prioritize transparency: Platforms should maintain records or audit trails of both the instances of harmful media that they detect and the steps that they take once they discover that such a piece of media is synthetically created. They should also be transparent with users and the public about their synthetic media policy and enforcement mechanisms. Similarly, as noted in the Biden-Harris White House’s recent executive order on artificial intelligence, AI developers must be transparent about their findings as they train AI models.

Proactively detect GAI-created content during times of unrest: Platforms should implement automated mechanisms to detect indicators of synthetic media at scale, and use them even more robustly than usual during periods of war and unrest. During times of crisis, social media platforms must increase resources to their trust and safety teams to ensure that they are well-equipped to respond to surges in disinformation and hate.

Implement GAI disclosure requirements for developers, platforms, and users: GAI disclosure requirements must exist in some capacity to prevent deception and harm. Requiring users to disclose their use of synthetic media, and requiring social media platforms to identify it, can play a significant role in promoting information integrity. Disclosure could include a combination of labeling requirements, prominent metadata tracking, or watermarks to demonstrate clearly that a post involved the creation or use of synthetic media. As the Department of Commerce develops guidance for content authentication and watermarking to clearly label AI-generated content, industry players should prioritize compliance with these measures.

Collaborate with trusted civil society organizations: Now more than ever, social media platforms must be responsive when their trusted fact-checking partners and civil society allies flag GAI content, and they must make consistent efforts to label that content, and moderate it if necessary, before it can exacerbate harm. Social media companies and AI developers alike should engage consistently with civil society partners, whose research and red-teaming efforts can help reveal systemic flaws and prevent significant harm before an AI model is released to the public.

Promote media literacy: Industry should encourage users to be vigilant when consuming online information and media. Companies may consider developing and sharing educational media resources with users, and creating incentives for users to read them. For instance, platforms can encourage users in doubt about a piece of content to consider the source of the information, conduct a reverse image search, and check multiple sources to verify reporting.

What is generative artificial intelligence (GAI)? And why does it matter?

GAI is a subset of artificial intelligence (AI). Whereas AI-based systems, like credit scorers or fraud detectors, use algorithms to process large datasets and automatically render decisions, GAI systems “generate” text, images, video, audio, etc., by making probabilistic assessments about their training data. GAI cannot create content out of whole cloth because it cannot think the same way that a human does: GAI tools do not understand meaning, cannot reason, and are incapable of assessing ground truths. It is more accurate to say that GAI “remixes” elements it has encountered before into novel combinations. In other words, GAI “does its best to match aesthetic patterns in its underlying data to create convincing content”; it is more Girl Talk than Bach.
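
The idea of “remixing” can be made concrete with a toy sketch. The Python example below is not how tools such as DALL-E or ChatGPT are built; it is a deliberately tiny word-pair model, used here purely as an illustration, and the function names and the training sentence are hypothetical. It shows the basic principle described above: the program only records which word followed which in its training text, then samples from those observed patterns to produce output that looks new but contains nothing it has not already seen.

```python
import random
from collections import defaultdict


def build_bigram_model(text):
    """Map each word to the list of words that followed it in the training text."""
    words = text.split()
    model = defaultdict(list)
    for current_word, next_word in zip(words, words[1:]):
        # Repeated followers appear more than once, so frequent pairs
        # are proportionally more likely to be sampled later.
        model[current_word].append(next_word)
    return dict(model)


def generate(model, start_word, max_words, rng):
    """Build text by repeatedly sampling a word that followed the current one."""
    output = [start_word]
    while len(output) < max_words and output[-1] in model:
        output.append(rng.choice(model[output[-1]]))
    return " ".join(output)


# Hypothetical training text, invented for this illustration.
training_text = (
    "the tool flagged the photo as fake "
    "the photo was real and the tool was wrong"
)

model = build_bigram_model(training_text)
sample = generate(model, "the", 8, random.Random(0))
print(sample)
```

Every pair of adjacent words in the output already occurs somewhere in the training text, yet the sentence as a whole may never have been written. The program has no notion of whether the resulting statement is true, which is the same limitation, at a vastly smaller scale, that the paragraph above attributes to GAI.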

This emphasis on aesthetics is one of the reasons why GAI is receiving so much attention right now: GAI outputs look and sound remarkably similar to content that humans can make. Another reason is democratized access to GAI with the release of public-facing, experimental tools like DALL-E, Midjourney, ChatGPT, and Bard. Most of us encounter AI systems frequently in our daily routines (e.g., Google Maps, search engines, and payment applications), but these systems and their operations mostly go unnoticed because they run in the background and “below the surface” of our attention. GAI, on the other hand, invites active engagement from users via prompts.

Aesthetics and democratization make GAI tools useful—not to mention profitable—for tasks like product design, medical imaging, and copywriting. But these same qualities also drive concerns about how bad-faith actors could use GAI to undermine trust in online content and spread disinformation, hate, and harassment.