
Meta’s ‘Twitter killer,’ Threads, launched on July 6 to media fanfare. Thanks to integration with Instagram’s massive user base, Threads became the fastest consumer app to reach 100 million downloads, doing so in just five days. Meta executive Adam Mosseri, who now runs both Instagram and Threads, says the new text-based social app isn’t for news or politics. The goal of the app, he says, is to create a public square for other types of communities.

Although Meta is promising some forward-thinking safety features, such as blocking users with one tap and filtering out replies with certain words, the company has a history of failing to make good on its promises to protect targets of online hate and harassment.

With another politically charged U.S. election on the horizon, online hate and harassment at record highs, and a rise in antisemitic and extremist incidents both online and offline, a new social media product of this scale will present serious challenges. Meta cannot simply wish them away by claiming its goal is to create “a positive and creative space to express your ideas.”

Here are six things ADL will be watching as Threads continues to grow, along with some concerning initial observations:

1. Policies on Hate, Harassment and Extremism

Creating a new app was an opportunity for Meta to approach Terms of Use and Community Guidelines from a fresh perspective and consider the types of interactions the product encourages. Instead, Meta tacked on a few changes to Instagram’s existing policy without addressing the fundamental differences between Instagram and Threads.

For example, Instagram’s explanation of how it mitigates the spread of false information is not relevant to Threads. It focuses on Instagram-specific features such as Explore, hashtags, and Stories, none of which are available on Threads. Meta should explain whether and how it will filter false information on Threads, and it should be clear about its approach to other harmful media, such as deepfakes and other synthetic media.

Meta’s suite of apps has created a labyrinth of policies on hate, harassment, and extremism. Unfortunately, they are not particularly user-friendly. In some instances, Facebook, Instagram, and Threads have distinct policies, but in others, they refer back to an overarching Meta policy. A policy is only as good as its clarity and enforcement, and Threads’ hate policy is concerningly hard to find.

Threads’ policy on hate appears to refer to Instagram’s policy, which in turn refers to Meta’s policy. If Meta’s policy is, in fact, fully applicable to both Instagram and Threads, the policy at the end of the maze is a strong one. The Meta policy “define[s] hate speech as direct attack against people – rather than concepts or institutions – on the basis of…protected characteristics: race, ethnicity, national origin, disability, religious affiliation, caste, sexual orientation, sex, gender identity and serious disease.”

Users of all of Meta’s platforms, including Threads, deserve clear, accessible policies that are tailored to the design of the app they are using and that prioritize keeping people safe. ADL has repeatedly urged platforms not only to have clear and comprehensive policies but also to communicate with their users about their content moderation decisions. Users deserve to know that platforms will thoughtfully review their reports of hateful content. At present, Threads’ applicable hate policy is difficult to find without a concerted search, and it is unclear whether and how it will be enforced on Threads.

2. Protections for Targets of Identity-Based Hate

In the ADL Center for Tech and Society’s (CTS) 2023 report on Online Hate and Harassment, Facebook was the site where most adult respondents had experienced harassment, even accounting for time spent on the platform. Instagram was the next highest, tied with Twitter. Of those who were harassed online, 54% reported that the harassment happened on Facebook.

So far, Threads has some promising product-based protections for targets of harassment, like easily accessible tools to unfollow, block, restrict or report a profile. Additionally, Threads automatically blocks any accounts a user has blocked on Instagram, sparing targets new or additional exposure to previously-blocked harassers. CTS has advocated for more intuitive, user-friendly, and effective safety designs, such as these, and has created a social pattern library that designers can use. Threads appears to have made some positive steps in this regard, especially given Meta’s history of falling short in providing users with adequate protections.

What is problematic, however, is that within days of launch, Threads has already exposed vulnerable targets to hate and harassment. One troubling incident involved a user discovering that Meta’s automated system had scraped her legal name and altered her display name on Threads accordingly without her notice or consent. That user, a sex worker, relies on a pseudonym online to protect her identity.

This feature violates users’ privacy, potentially jeopardizes their safety, and can trigger identity-based harassment. For example, changing users’ profile names to their legal names without giving advance notice and an opportunity to challenge the decision before it takes effect could result in deadnaming queer and transgender users. Transgender individuals, who already disproportionately experience online harassment, according to ADL data, could face outing and severe harassment as a result. While it remains unclear whether this was a hasty design oversight or a deliberate policy choice, this practice is dangerous to any user who relies on anonymity for their safety.

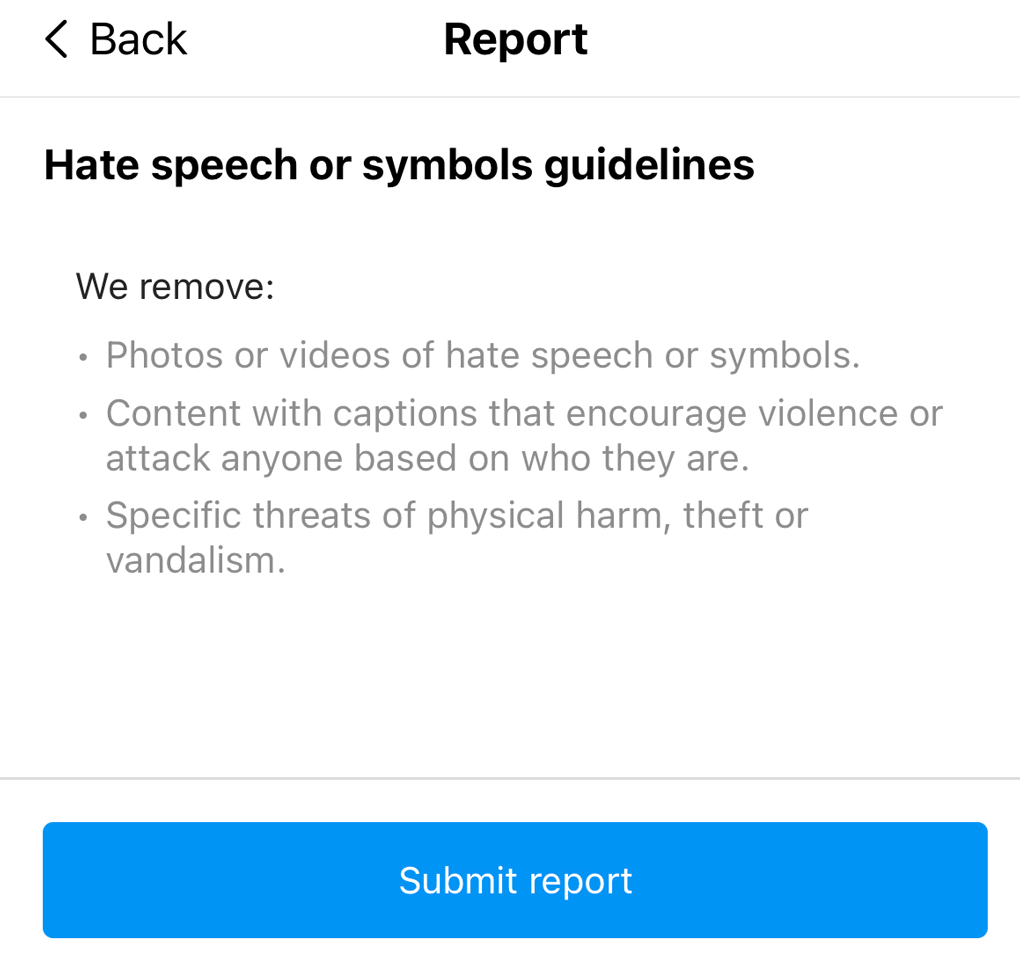

For those who are targets of online hate, the current reporting mechanisms also appear inadequate. Threads’ reporting form uses vague language in its hate speech reporting flow that does not center the experience of marginalized groups, instead defining hate as attacking “anyone based on who they are.”

Protecting targets also requires a robust Trust and Safety operation. Adding a new, highly downloaded app to its suite makes a fully resourced Trust and Safety team even more urgent for Meta. Yet in recent months, Meta has gutted its Trust and Safety workforce. We will continue to observe Meta’s practices and encourage it to invest in the backend support that targets need.

3. Extremists’ and Antisemites’ Use of the Platform

In addition to structural concerns with Threads, bad actors are already taking to the app to spread their messages. White supremacist and antisemite Nick Fuentes, banned from Instagram after the January 6 insurrection, said he made a fake account on Threads and urged his followers to do the same. There is evidence that many of those followers, the so-called Groypers, have done so. Although Threads’ search capabilities are rudimentary, it is already possible to find antisemitism on the platform with a little effort. The Tech Transparency Project found that accounts filled with Nazi imagery and language were already populating Threads within 24 hours of the app’s launch.

Libs of TikTok, a ruthless purveyor of stochastic harassment and anti-LGBTQ+ extremist narratives, has already accused Threads of censorship for removing one of its posts under the platform’s hate speech guidelines. Meta’s quick action in this case is encouraging, but other Threads users have commented that their reports against Libs of TikTok are quickly rejected. That suggests an account known for spreading hate and harassment may actually be receiving special treatment on Threads, a practice known as whitelisting. Meta must maintain a strong stance against users like Libs of TikTok, who will happily nurture a hateful following on Threads and direct harassment at vulnerable targets.

Extremists often take advantage of platforms during periods of turnover. For example, we observed “stress tests” from users pushing the content moderation envelope when Elon Musk acquired Twitter in October 2022. Fuentes and others appear to be doing something similar with Threads. Less extreme users with histories of spreading misinformation and encouraging harassment have already posted content that could violate Meta’s Community Guidelines, such as election conspiracy theories and insults against transgender people. We will closely monitor Meta’s efforts to moderate hate, harassment, and misinformation on Threads, as well as how extremists and others use the platform.

4. Elections Integrity Policies in the Run-up to 2024

Despite Mosseri’s assertion that Threads is not intended for “news and politics” and that the associated integrity risks are not worth the “incremental engagement or revenue,” the platform will undoubtedly be used by both political campaigners and other actors seeking to weigh in on political topics. In our previous analysis of Meta’s elections integrity policies in the lead-up to the 2022 U.S. midterm elections, we found that policies for both Facebook and Instagram lacked necessary measures. Both provided platform-wide misinformation policies and tools, such as flagging, but these policies did not cover all forms of mis- and disinformation. Moreover, they had not been updated since 2019. When we audited social media platforms’ enforcement of election misinformation policies during the 2022 midterm elections, we found inconsistent enforcement on both Facebook and Instagram. Facebook, in particular, was vulnerable to misinformation spreading via screenshots, while Instagram allowed unrestricted searches for problematic election conspiracy theory terms.

Threads must face the reality that, with such a large user base, public figures will take to the platform to spread election-related messages and gain followers. While Threads can and should house important discourse, some bad actors have already imported their misinformation tactics to the app. Threads quickly appended labels to several accounts, including Donald Trump Jr.’s, warning users that the account “repeatedly posts false information.” After these accounts claimed censorship on rival platform Twitter, Threads quickly removed the labels.

Additionally, except in cases such as sharing child exploitation imagery, Meta’s moderation actions against users on Threads will not affect their associated Instagram accounts, giving those users more opportunities to spread false claims. Meta has also cut staff from teams essential to election integrity, including those focused on disinformation and coordinated harassment campaigns. While we hope that Threads and Meta’s other platforms will address these shortcomings ahead of the 2024 elections, the company’s history does not inspire much confidence.

5. Transparency

Like Instagram’s, Threads’ Terms of Use prohibit data scraping. After restricting API access to its services’ data in the wake of the Cambridge Analytica scandal, Meta released its FORT API to qualified academic researchers in 2021, but that API provides access only to Facebook data. Meta’s Instagram Graph API lets Instagram’s business and creator partners access some platform data, but it is designed primarily for managing account interactions, not for trust and safety research. Meta has not yet announced any plans for API access to Threads. Access to platform data is a crucial tool for academic and civil society researchers, enabling threat monitoring and audits of trust and safety mechanisms. We will continue to advocate for researcher access to Threads as we do for Meta’s other products and services.

Meta, like other social media companies, publishes voluntary transparency reports every quarter. We expect that Threads will be included in future reports, specifically regarding community standards enforcement. While we are glad Meta regularly publishes transparency reports, trust would be significantly strengthened if independent auditors had the ability to conduct regular audits.

6. What will a decentralized future mean for Threads?

Threads is meant to integrate with the "fediverse," a decentralized network of social media services that communicate through the ActivityPub protocol, and it remains to be seen how trust and safety will operate once that happens. Such a decentralized system could give users options to tailor their content moderation experiences and provide safe havens for vulnerable users. Still, some worry that Threads’ entry will crush the burgeoning fediverse, leaving it in Meta’s dominant grip. CTS will monitor Threads’ integration in the coming months to ensure that targets’ needs are prioritized.