
Introduction

Researchers at the ADL Center for Tech and Society have found that bad actors appear to be sidestepping TikTok’s moderation policies to spread antisemitic content through slideshows (Photo Mode) and hashtags. While the platform remains difficult to study due to the lack of data it shares with civil society organizations, we were able to identify a serious problem that, at a time when antisemitism and online hate are particularly concerning, warrants enforcement fixes from the company.

TikTok is a highly popular social media platform with an estimated 150 million active users in the U.S. alone. The platform ranks second in popularity to YouTube among U.S. teens, and sixth worldwide in terms of the number of monthly users among social media platforms, behind peers such as Facebook and YouTube. Despite TikTok's strong anti-hate policies, hate and harassment on the platform have risen sharply in recent years. Between 2021 and 2023, the share of adults who have been harassed on TikTok rose from 5% to 19%, according to ADL's annual survey.

Photo Mode:

ADL researchers manually searched for antisemitic content on TikTok. We found a few instances of obviously violative content in videos, which make up the bulk of posts on the site. However, we found more explicit antisemitic content on the slideshow feature, “Photo Mode.” Photo Mode is a slideshow format that lets users display picture posts one after another and set them to music or sound effects. The prevalence of hateful content in Photo Mode suggests that TikTok enforces its policies more effectively in videos than in slideshows.

Hashtags:

We also identified a key loophole that allows hatemongers, trolls and extremists to find—and spread—disturbing and virulent content, including outright slurs. Hashtags are words or phrases preceded by a hash sign (#) to tag and search for content on TikTok. TikTok bans certain hashtags from being searched through the platform’s search bar. However, a user can still click on a violative hashtag and view videos labeled with that hashtag, circumventing the search ban.

Findings

Antisemitism Spread Through Photo Mode

Despite a relatively robust set of community guidelines, toxic users have found numerous ways to evade the platform's content moderation efforts. Photo Mode provides another opportunity to spread hate with seemingly little pushback from the platform.

TikTok announced its new “Photo Mode” feature on October 6th, 2022, publicizing this feature as a new format designed to spur creativity and allow users to “more deeply connect with others.” In the year since, however, Photo Mode has become another vector for antisemitism and hate on the platform, and there is no indication that TikTok’s moderation is equipped to distinguish what is and is not hate.

Antisemitic Memes Unchecked in Photo Mode

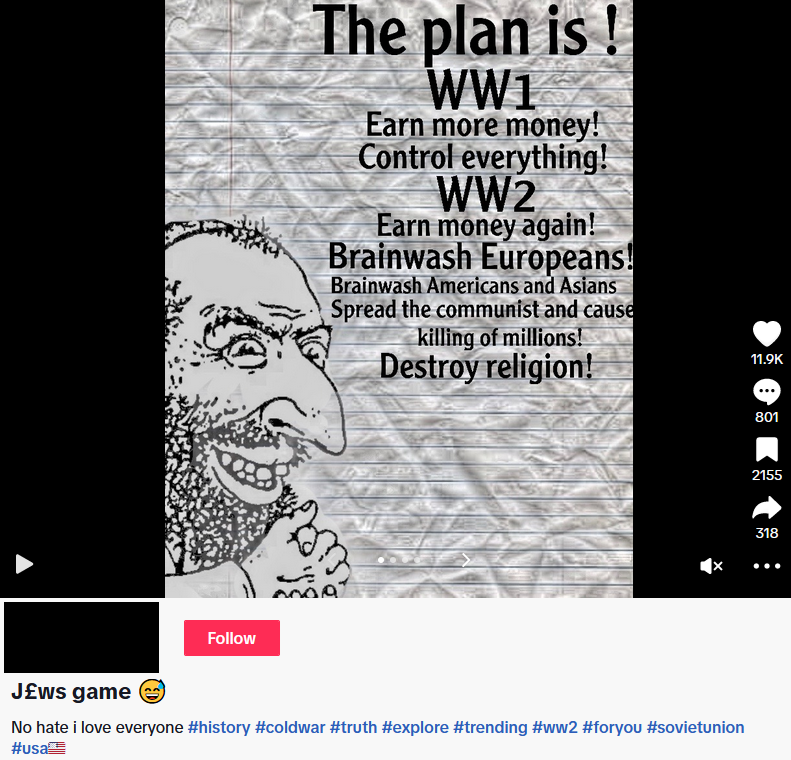

We collected a sample of nine antisemitic memes and pictures using TikTok’s Photo Mode. Many of these posts have garnered hundreds of thousands of views and have not been taken down, though they are in violation of TikTok’s community guidelines. The meme shown in Image 1 has over 800 comments and has been up since September 9th, despite its blatant antisemitism and hatemongering.

Image 1: Antisemitic slideshow posted on TikTok on August 9. Screenshot taken by ADL researcher, October 5.

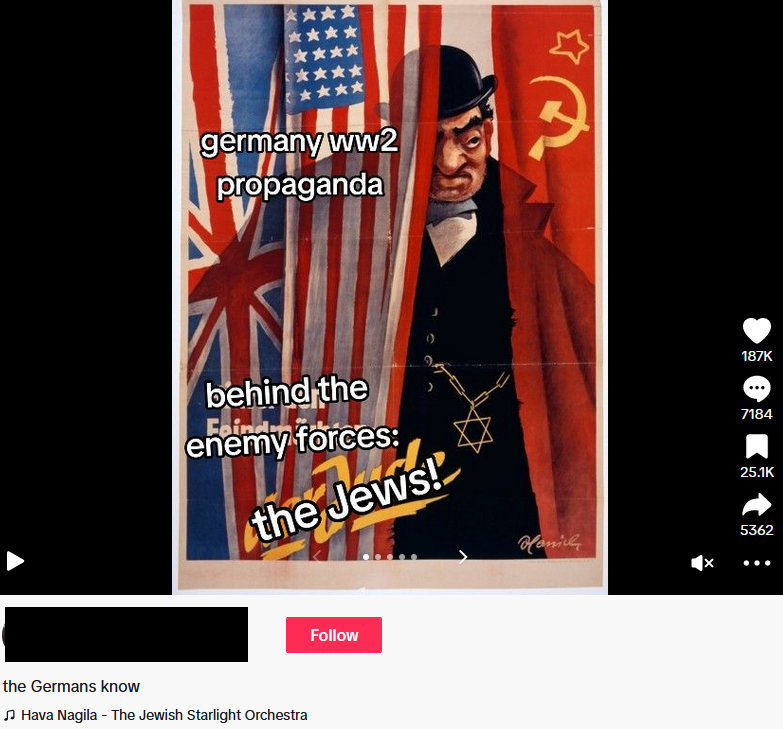

In Image 2, a slideshow that has been available since August 14 shows a series of antisemitic memes set to the tune of the traditional Jewish song Hava Nagila. This slideshow has over 7,000 comments and 187,000 likes.

Image 2: Antisemitic slideshow, set to the tune of “Hava Nagila,” from August 14. Screenshot taken by ADL researcher, October 5.

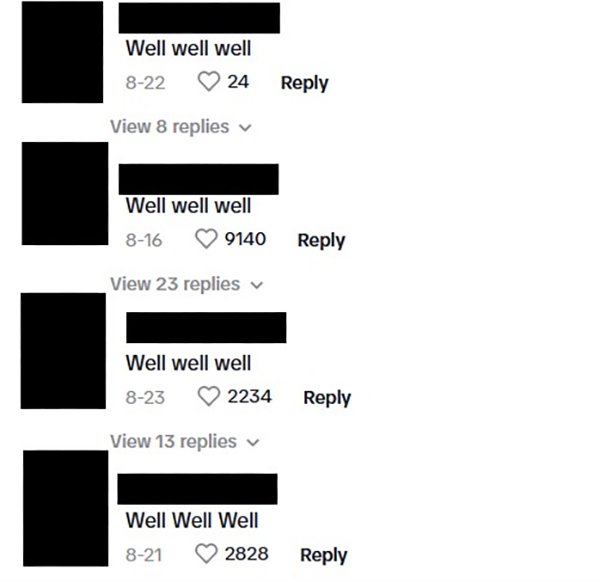

In addition to the hateful slideshows themselves, the comments underneath are rife with hate. For example, one comment we saw repeatedly was “Well, well, well.” This is a racist and antisemitic dog-whistle posted repeatedly under TikToks with Jewish and Black/African American themes. Image 3 shows a sample of the comments posted under the previous slideshow.

Image 3: Comments posted under the slideshow in Image 2. Screenshot taken by ADL researcher, October 5.

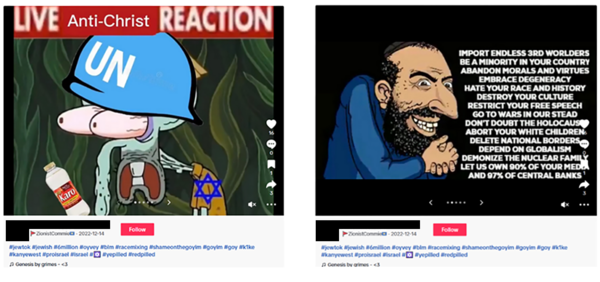

The oldest slideshow found in our data, in Image 4, has been available since December 14, 2022. Its lack of engagement compared to the other slideshows (16 likes compared to 187,000 for the one in Image 2) may indicate such low amplification that TikTok’s moderation tools or moderators have not detected it. However, it is still concerning as the slideshow is tagged with explicitly hateful hashtags.

We are unable to evaluate whether TikTok is adequately filtering hateful slurs in hashtags and the slideshows that use them. Unlike the previous slideshows, which often use popular hashtags like #fyp or #trending, this slideshow’s hashtags include slurs like #k1ke. Such hashtags presumably violate TikTok’s Hate Speech and Hateful Behaviors policy, which prohibits “Using a hateful slur associated with a protected attribute.”

Image 4: Antisemitic slideshow available since 2022. Screenshot taken by ADL researcher, October 5.

The Hateful Hashtag

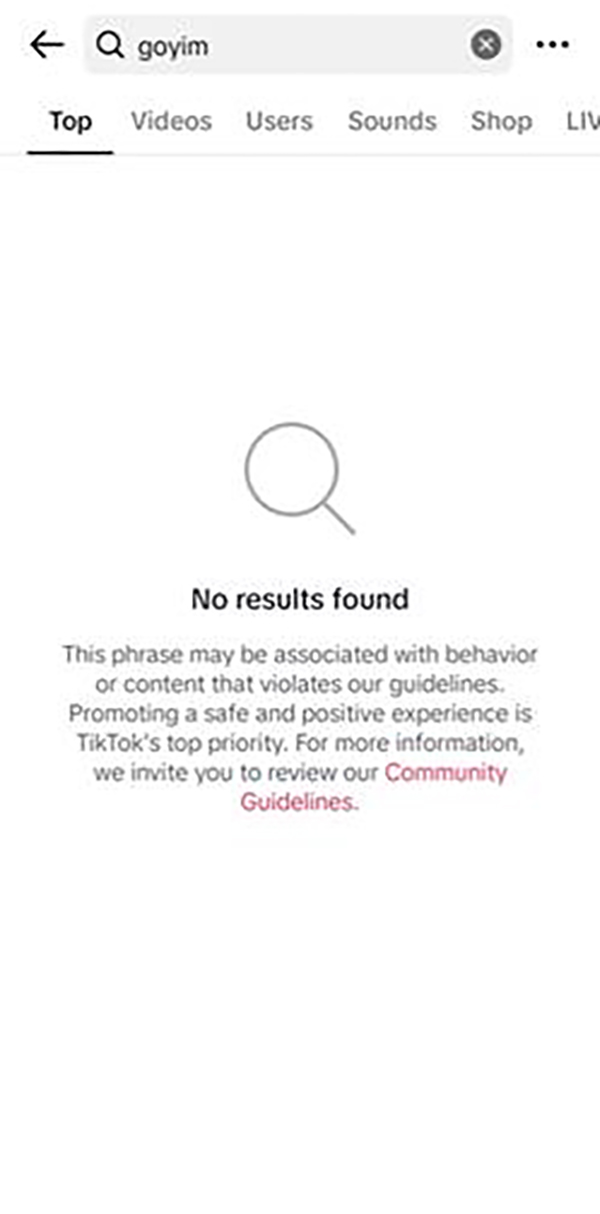

In addition to their Hate Speech and Hateful Behaviors policy, TikTok bans searching for terms that, in their words, “may be associated with behavior or content that violates our guidelines.” Despite this ban, it is still possible to find content under such terms. For example, TikTok prevents users from searching for “goyim.” Goyim, a Hebrew term for non-Jews, can reference the hateful trope “the Goyim know,” implying non-Jews are aware of Jewish conspiracies.

Image 5: Results after entering “goyim” in the TikTok search bar. Screenshot taken by ADL researcher, October 5.

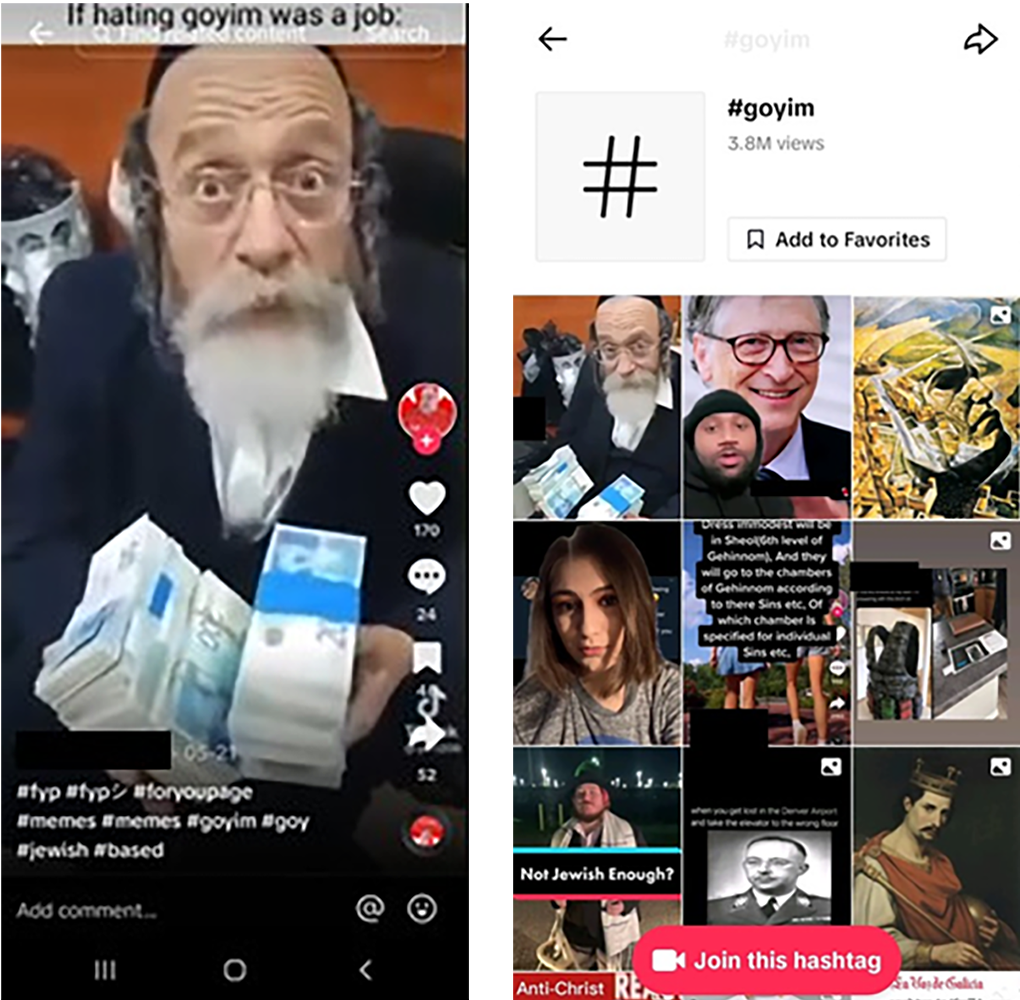

If a user finds a video tagged with #goyim, they can easily click through to find other content with the same hashtag. One of the hateful TikTok accounts we identified, for example, featured videos tagged with #goyim. When we viewed this user’s profile and scrolled through their videos, we clicked on the hashtag #goyim, which returned a list of videos with the same hashtag. The hashtag #goyim has, according to TikTok, over 3.8 million total views, despite the platform banning it from search.

Image 6: Results after clicking on the hashtag #goyim within a video. Screenshot taken by ADL researcher, October 5.

Consequences for Users

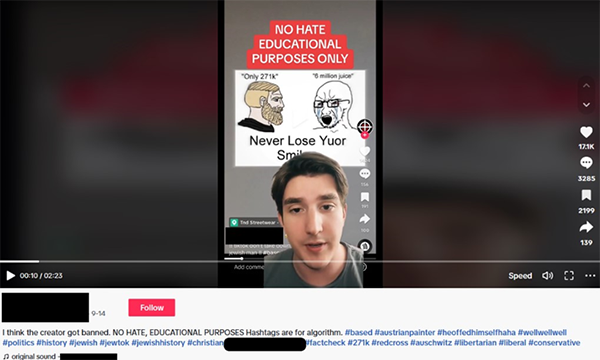

TikTok claims that its algorithm amplifies content that users are interested in and minimizes other content. However, there is evidence that even users who don’t espouse hate are exposed to hateful content, such as antisemitic memes, either because TikTok’s algorithm surfaces it or because they seek it out to critique it. In a video debunking one of these slideshow memes (Image 7), a user labels his video with the same hashtags as many hateful memes. Examples include #271k (a reference to the conspiratorial claim that only 271,000 Jews died in the Holocaust) and #austrianpainter (a reference to Hitler), with the additional hashtag #factcheck. We do not know how or whether TikTok can differentiate between these debunking videos (i.e., counterspeech) and hate.

Image 7: Video debunking hateful memes on TikTok. Screenshot taken by ADL researcher, October 5.

Image 8: Comment beneath the video in Image 7 deploring the availability of hateful content on TikTok. Screenshot taken by ADL researcher, October 5.

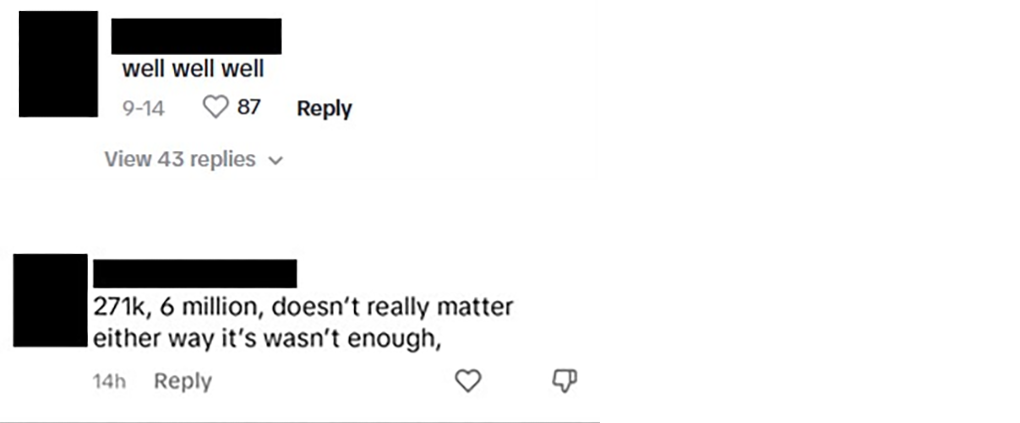

The counterspeech videos ADL researchers found attract further hate and harassment. The comments under the debunking video in Image 7 include both support (Image 8) and implicit hate (Image 9). Comments such as “well, well, well,” as well as more explicit language (“271k, 6 million, doesn’t really matter, either way it’s wasn’t enough” [sic]), are widespread in the comments section.

Image 9: Hateful comments posted under the video in Image 7. Screenshot taken by ADL researchers, October 5.

Recommendations

Based on Our Findings, We Recommend That TikTok:

Improve moderation for slideshows and other video categories that use static imagery. The more prevalent occurrence of hateful images, in contrast to hateful audio or video content, suggests that static content evades TikTok’s moderation filters. TikTok should employ Optical Character Recognition (OCR) to extract text from images for moderation purposes. If it is already doing this, it should improve its system. If TikTok and other platforms were more transparent about their content moderation process, we would be able to provide more specific suggestions for mitigating harmful, antisemitic content in static imagery.
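As a rough illustration of the OCR recommendation above, the sketch below runs a text-extraction step over each slide and checks the extracted words against a term list. Everything in it is an assumption made for illustration: `extract_text` stands in for whatever OCR engine a platform might use, `BLOCKED_TERMS` is a placeholder list, and none of this describes TikTok's actual system, which is not public.

```python
from typing import Callable, Iterable

# Hypothetical placeholder list; a real system would rely on a maintained policy lexicon.
BLOCKED_TERMS = {"blockedterm"}


def flag_slideshow(
    slides: Iterable[bytes],
    extract_text: Callable[[bytes], str],
    blocked_terms: set = BLOCKED_TERMS,
) -> list:
    """Return the indexes of slides whose extracted text contains a blocked term."""
    flagged = []
    for index, image in enumerate(slides):
        # extract_text is an injected OCR step (assumption: it returns plain text).
        words = extract_text(image).lower().split()
        # Strip surrounding punctuation so "term!" still matches "term".
        if any(word.strip(".,!?\"'#") in blocked_terms for word in words):
            flagged.append(index)
    return flagged
```

Passing the OCR step in as a function keeps the matching logic separate from any particular engine. Exact word matching like this would miss deliberate misspellings and images without text, so it is at best one signal among several.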

Close the loophole that allows searching for hateful hashtags. It is simple for users to circumvent TikTok’s ban on searching for hateful hashtags. Although TikTok removes certain hashtags from search results, users can still click through on those hashtags when they find them on a video. If TikTok bans searching for hashtags “associated with behavior or content that violates our guidelines” (as per its user guidelines), it must also prevent those hashtags from being clickable anywhere on the site. Better still, hashtags that violate TikTok’s user guidelines should be removed entirely.

Remove the ability for users to attach slurs as hashtags. In addition to closing the hateful hashtag loophole above, TikTok should enforce its policy on hate speech and make it impossible to tag videos with certain hashtags altogether. Hateful slurs like #k1ke violate TikTok’s user guidelines and should not appear at all.
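One way to picture the two hashtag recommendations above is a single policy check shared by every entry point: search, hashtag pages reached by clicking a tag, and tagging at upload. The sketch below is a hypothetical illustration, not TikTok's implementation; the function names, the `BANNED_HASHTAGS` set, and the character-substitution table are all assumptions. The normalization step is meant to catch simple character swaps of the kind seen in the slur hashtag on the slideshow in Image 4.

```python
# Hypothetical ban list; "goyim" is the search-banned term discussed in this report.
BANNED_HASHTAGS = {"goyim"}

# Assumed table of common character swaps used to dodge filters.
SUBSTITUTIONS = str.maketrans(
    {"1": "i", "0": "o", "3": "e", "4": "a", "5": "s", "@": "a", "$": "s"}
)


def normalize(tag: str) -> str:
    """Lowercase a hashtag, drop the leading '#', and undo simple character swaps."""
    return tag.lstrip("#").lower().translate(SUBSTITUTIONS)


def is_banned(tag: str) -> bool:
    """Single policy check reused by every entry point below."""
    return normalize(tag) in BANNED_HASHTAGS


def search(query: str) -> str:
    return "blocked" if is_banned(query) else "results"


def open_hashtag_page(tag: str) -> str:
    # Same check as search, so clicking a tag cannot bypass the search ban.
    return "blocked" if is_banned(tag) else "results"


def allowed_tags(tags: list) -> list:
    # Applied at upload time: banned tags are never attached to a post.
    return [tag for tag in tags if not is_banned(tag)]
```

For example, `allowed_tags(["#fyp", "#g0yim", "#trending"])` keeps only `#fyp` and `#trending`, and `open_hashtag_page("#goyim")` is blocked just as `search("goyim")` is. The substitution table is deliberately crude and would need care to avoid blocking legitimate tags.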

Provide API access for civil society organizations. Currently, there is no straightforward way to gather data on TikTok. An API (Application Programming Interface) provides access to a platform's data, which allows researchers to evaluate how much hate is on the platform and whether the platform is enforcing its rules. TikTok only provides access for researchers through an academic API for non-profit universities in the U.S. and Europe. TikTok should provide API access to civil society organizations with relevant expertise to allow independent researchers to verify claims that images on TikTok are well moderated.

Methodology

ADL researchers manually reviewed antisemitic hashtags on TikTok from September 9 to October 10, 2023, to find and identify antisemitic content. We conducted these searches for approximately one hour every two days. During this period, we found nine slideshows containing explicit antisemitism. Two of the nine had been removed as of October 31, 2023; as of November 20, TikTok had removed all of them. The relatively small sample size reflects the fact that hateful content is still a small percentage of total social media posts. This content can, however, have outsized harms. We only began logging these hateful memes after discovering their prevalence in the course of broader research into antisemitism on TikTok.
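The removal figures above can be restated as shares of the sample. This is a minimal sketch using only the counts given in this section; the variable names are ours, and the year for the November 20 check is assumed to be 2023.

```python
from datetime import date

SAMPLE_SIZE = 9  # slideshows logged during the review period

# Cumulative removals at each check date, as stated in the methodology.
# Assumption: the November 20 check took place in 2023.
removed_by = {date(2023, 10, 31): 2, date(2023, 11, 20): 9}


def removal_share(removed: int, total: int = SAMPLE_SIZE) -> float:
    """Fraction of the logged sample that had been removed."""
    return removed / total


for check_date, removed in sorted(removed_by.items()):
    share = removal_share(removed)
    print(f"{check_date.isoformat()}: {removed}/{SAMPLE_SIZE} removed ({share:.0%})")
```

With a sample this small, these shares describe only the posts we logged and should not be read as a platform-wide removal rate.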

Without a research API, it is prohibitively difficult to collect quantitative and qualitative data at scale. We were not, for example, able to automate browsing or auto-log results to create larger samples efficiently. These limitations in data access mean we cannot assess whether the slideshows we found are outliers, whether TikTok is not moderating antisemitism in Photo Mode at all, or whether it is moderating Photo Mode less effectively than videos. It is possible there are fewer hateful videos than slideshows, or that users are more likely to post hateful content in comments (for example, the antisemitic comments in Images 3, 8, and 9). It is also possible TikTok has moderated Photo Mode slideshows, and that we found a few that its detection missed. The contrast between hateful videos and hateful slideshows, however, suggests TikTok is not moderating the latter adequately, possibly because it is not using tools that are effective at detecting hateful text in images. Finally, though we did not observe many hateful videos, that does not mean they do not exist. API access would allow us to more fully measure the number of antisemitic videos on TikTok.

Not having API or data access also means that ADL and other civil society organizations cannot test or verify these potential explanations. Finally, the slur hashtags we identified clearly evade moderation, and TikTok has not taken adequate steps to prevent users from exploiting the hashtag search loophole. This lets users find hateful content through hashtags otherwise banned in search.