An investigation from ADL’s Center for Technology and Society (CTS) found Twitter did not remove over 200 blatantly antisemitic tweets accusing Jewish people of pedophilia, invoking Holocaust denial, and sharing oft-repeated conspiracy theories. To test Twitter’s enforcement of its policies on antisemitism, CTS reported 225 strongly antisemitic tweets over nine weeks through ongoing communications with the platform. Of the reported tweets, Twitter removed only 11, or about 5% of the content. Some additional posts have been taken down, presumably by the user, yet 166 tweets of the 225 we initially reported to Twitter remain active on the platform.

The tweets ADL reported to Twitter were obvious examples of antisemitism, as determined by our experts and our newly created Online Hate Index antisemitism classifier. We found examples of classic antisemitic tropes, such as myths of Jewish power and greed, as well as hate against other marginalized communities.

Twitter has taken some positive steps in the recent past. However, this report shows it has significantly more to do. Twitter must enact its most severe consequences and remove destructive, hateful content when reported by experts from the communities most impacted by such content.

Methodology and Results

To evaluate Twitter’s content moderation efforts around antisemitism, researchers at ADL’s Center for Technology and Society retrieved 1% of all content on Twitter for a 24-hour window twice a week over nine weeks from February 18 to April 21, 2022. We then passed that content through the Online Hate Index and selected the tweets our classifier indicated were at least 50% likely to be antisemitic. Tweets given the highest confidence level of being antisemitic were then manually reviewed. Only posts that were deemed antisemitic by two experts were reported to Twitter with sets limited to around 20 tweets per report for a total of 225 tweets. During the selection process, we eliminated any tweets that were not obviously antisemitic or could reasonably be debated.
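The selection steps described above can be sketched in a few lines of Python. This is only an illustration of the stated rules (a 50% classifier cutoff, agreement by two experts, and reports of around 20 tweets); it is not the Online Hate Index itself. The `Tweet` class, its `score` and `expert_votes` fields, and the function names are hypothetical and are introduced here purely for the example.

```python
from dataclasses import dataclass, field
from typing import List

SCORE_THRESHOLD = 0.5   # "at least 50% likely to be antisemitic"
REQUIRED_REVIEWERS = 2  # both experts had to deem the tweet antisemitic
BATCH_SIZE = 20         # "around 20 tweets per report"


@dataclass
class Tweet:
    """Hypothetical record for one sampled tweet."""
    tweet_id: str
    score: float  # assumed classifier probability in [0, 1]
    expert_votes: List[bool] = field(default_factory=list)  # assumed review results


def select_candidates(tweets: List[Tweet]) -> List[Tweet]:
    """Keep tweets at or above the cutoff, highest classifier score first."""
    flagged = [t for t in tweets if t.score >= SCORE_THRESHOLD]
    return sorted(flagged, key=lambda t: t.score, reverse=True)


def confirmed_by_experts(tweet: Tweet) -> bool:
    """True only if enough experts reviewed the tweet and all of them agreed."""
    return len(tweet.expert_votes) >= REQUIRED_REVIEWERS and all(tweet.expert_votes)


def build_reports(tweets: List[Tweet]) -> List[List[Tweet]]:
    """Split expert-confirmed tweets into reports of at most BATCH_SIZE."""
    confirmed = [t for t in select_candidates(tweets) if confirmed_by_experts(t)]
    return [confirmed[i:i + BATCH_SIZE] for i in range(0, len(confirmed), BATCH_SIZE)]
```

In this sketch a single dissenting reviewer is enough to drop a tweet, which mirrors the report's statement that anything not obviously antisemitic, or reasonably debatable, was eliminated.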

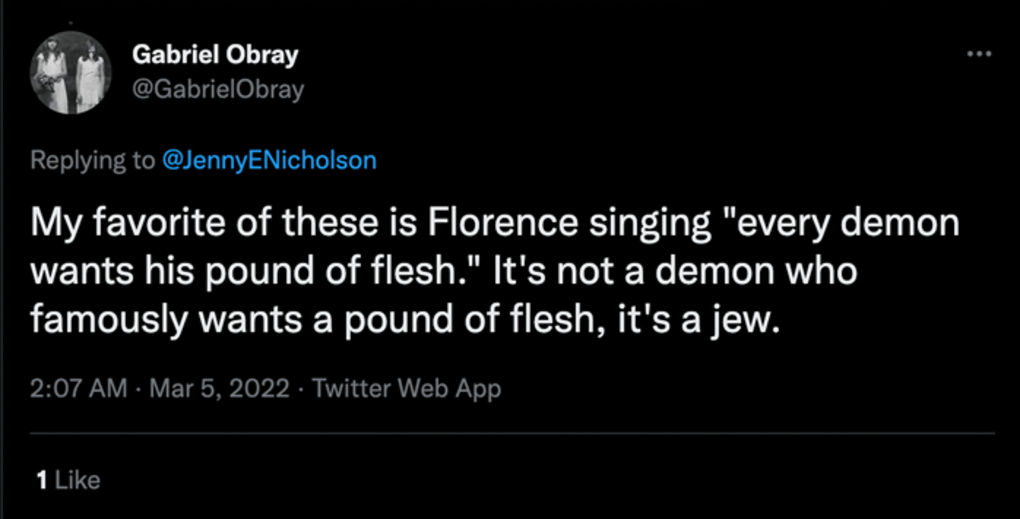

The 225 tweets we reported included a variety of long-standing antisemitic tropes and conspiracies. For example, 74 included myths of Jewish power and greed, while 18 included accusations of pedophilia and sexual misconduct along with antisemitism. Some of the tweets also included hate against other marginalized communities. For example, 12 of the antisemitic tweets we reported also included racist sentiment, and three of the antisemitic tweets expressed hate against the LGBTQ+ community. According to the company, none of these tweets were found violative enough for removal. Below is a sampling of tweets that we reported to Twitter.

[Examples 1 through 8: sample tweets not reproduced here.]

As of May 24, 2022, 166 tweets of the 225 we initially reported to Twitter remain active on the platform. The remaining tweets have a potential reach of 254,455 users. While we know that Twitter removed 11 of the original 225 due to our directly reporting them, the reasons behind the removal of the other 48 are less clear. Fifteen of the 48 tweets currently display a message that indicates “No status found with that ID,” while 29 state that they belong to a suspended account. One tweet states, “Sorry, you are not authorized to see this status.”
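The figures in this paragraph can be cross-checked with simple arithmetic. The short Python sketch below uses only the counts stated in the report; the variable names are ours. It shows how the 48 tweets with unclear removal reasons are derived, and that the three status messages quoted above account for 45 of them, so three are not itemized in the text.

```python
REPORTED = 225           # tweets reported to Twitter
STILL_ACTIVE = 166       # still on the platform as of May 24, 2022
REMOVED_BY_TWITTER = 11  # removed as a result of the reports

# Tweets no longer visible, and those whose removal reason is unclear.
unavailable = REPORTED - STILL_ACTIVE                 # 59
other_unavailable = unavailable - REMOVED_BY_TWITTER  # 48

# Status messages quoted in the report for the 48 unclear cases.
status_messages = {
    "No status found with that ID": 15,
    "belongs to a suspended account": 29,
    "not authorized to see this status": 1,
}
itemized = sum(status_messages.values())   # 45
unitemized = other_unavailable - itemized  # 3 not broken out in the text

removal_rate = REMOVED_BY_TWITTER / REPORTED
active_rate = STILL_ACTIVE / REPORTED

print(f"Removed by Twitter: {removal_rate:.1%}")  # 4.9%
print(f"Still active:       {active_rate:.1%}")   # 73.8%
print(f"Unclear removals:   {other_unavailable} ({itemized} itemized, {unitemized} not)")
```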

Twitter’s Response

Twitter staff provided two reasons why the company did not remove these tweets. First, they stated that some of the content had not been removed but instead had been de-amplified, made impossible to share or engage with, or down-ranked behind a low-quality “show more replies” content filter in a thread. We are unable to verify whether these actions took place.

Second, Twitter told ADL that some of the content was purposely not actioned because it did not meet a particular threshold of hateful content on the platform. According to Twitter, tweets with one hateful comment were not subject to content moderation action by the platform, but if a tweet had repeated hateful comments, it would be subject to content moderation.

In both of these cases, the decisions made by Twitter significantly minimize the impact that antisemitism, and hate more broadly, has on individuals from targeted communities. It is not enough to de-amplify hate, nor is it enough to wait for hate to rise to a certain threshold before taking action. If a swastika were painted on a public building, we would not tell a community to put up a sign in front of it telling passersby to avert their eyes, or to wait to take action until a few additional swastikas had been painted on the same building. Hateful content, once identified, and especially if identified by members or groups who represent the impacted community, should be removed from tech platforms under their hate policies so that not even one person has to experience it.

Recommendations

Twitter first implemented its hateful conduct policy in late 2015. In the years since, it has expanded that policy significantly, including its most recent expansion around dehumanization in 2021. However, a platform policy is only as good as its enforcement, which must be meaningful, equitable, and centered on the experiences of vulnerable and marginalized communities.

As ADL has noted in its pyramid of hate, unchecked bias can become “normalized” and contribute to a pattern of accepting discrimination, violence, and injustice. By not implementing its harshest penalties around obviously hateful content, Twitter is not sufficiently pushing back against the normalization of hate online and is putting the burden on marginalized communities to counter forms of bias and hate directed at them.

On June 1, 2022, Twitter shifted its focus away from new features such as audio-only Twitter Spaces and Twitter communities and toward a focus on “areas that will have the greatest positive impact to the public conversation.” We would hope that improving the platform’s enforcement around hate against marginalized communities, and better incorporating the perspective of those communities, would be at the top of the list in terms of steps that can positively impact the public conversation.

For Tech Platforms

Enforce platform policies equitably at scale

Platform policies on their own are insufficient; they require enforcement that is consistent and equitable across the full scale of every digital social platform. ADL recommends that tech companies hire more content moderators and train them well. Companies should develop automated technologies that recognize cultural and linguistic contexts and commit as few mistakes as possible when enforcing policies around violative content. While perfection is impossible, the sheer inadequacy of enforcement against antisemitism is unacceptable.

Be transparent about non-removal content moderation actions

Beyond removing content, tech companies must also provide information to the public, researchers, civil society, and lawmakers about when they take other actions on content that violates their policies. These stakeholders should be able to understand, for example, when an action is taken to de-amplify content and why that action was taken. Researchers and civil society should also be able to independently verify that these types of actions have been taken.

Center the experiences of communities targeted by hate in design and development of tech products

Tech companies should design and update their products with the communities most often targeted by hate, centering their experiences in the development and improvement of their platforms. ADL has modeled this approach in creating our Online Hate Index antisemitism machine learning classifier. If a nonprofit can develop an effective tool to detect online antisemitism that incorporates the perspective and experience of Jewish volunteers, then technology companies with their vast resources have no excuse not to do the same. Moreover, if a platform creates a trusted flagger program so that experts from affected communities can escalate content to the platform, it should trust the judgment of those experts.

For Government

Support legislative solutions requiring platform transparency

The results of this research are yet another example of why the public, civil society, and lawmakers need further visibility into how tech companies implement their policies around hate and harassment on their platforms. It is no longer sufficient to rely on what platforms say publicly. We need legislative means to require platforms to be more transparent around how they conduct content moderation, such as AB 587 in California, which would standardize transparency reporting across all digital social platforms. The California legislature is working to pass AB 587, a bipartisan bill supported by more than 60 community organizations that will help ensure the transparency and accountability of social media platforms. If AB 587 passes, it will require social media companies to submit transparency reports that disclose how a platform defines hate speech, moderates harmful content, and evaluates the efficacy of enforcement. AB 587 would help create a safe and transparent environment for social media platform users.