
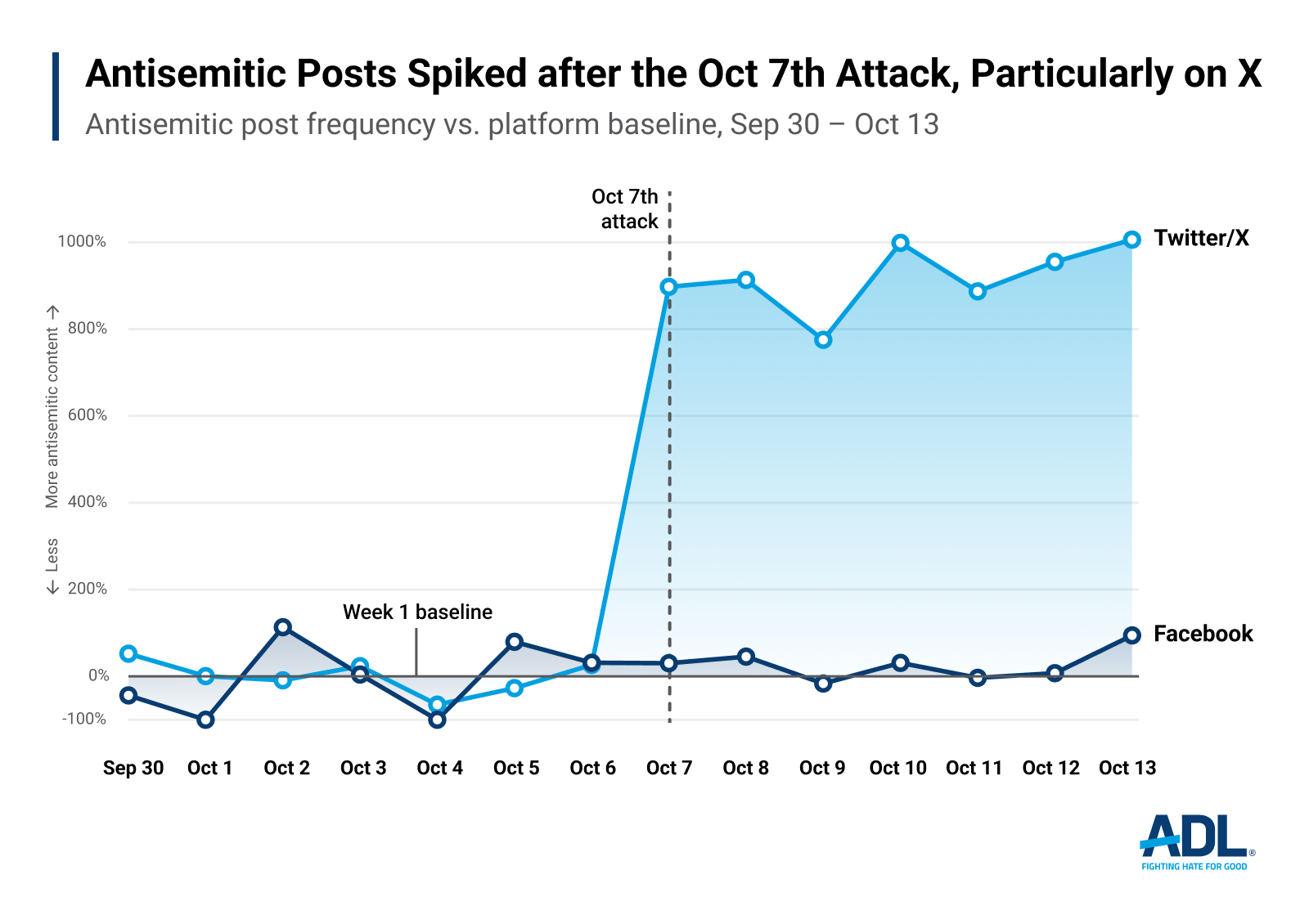

Since Hamas’ attacks on Israel on October 7, the Center for Tech and Society at ADL has documented a surge in online antisemitic hate and provided recommendations for moderating content during the war. Using computational data collection and analysis, ADL researchers have assessed the incidence of antisemitic hate on two mainstream platforms, X (formerly Twitter) and Facebook. Antisemitic posts increased dramatically on X beginning October 7, while the frequency of antisemitism on Facebook remained relatively stable. Although the data sets are not directly comparable, these differences likely illustrate the central role content moderation plays in preventing hate online, particularly in times of crisis and conflict.

Quantifying online antisemitism helps convey the magnitude of the last month’s spike in hate. ADL has already recorded a year-over-year increase of more than 300% in antisemitic incidents in the U.S. since October 7. Online, the Global Project Against Hate and Extremism noted a nearly 500% increase in both antisemitism and Islamophobia on fringe platforms such as 4chan, Gab, and BitChute. Our latest findings show that online hate is increasing on mainstream platforms as well, sometimes dramatically.

Findings: Surging Antisemitism on X, Small Upticks on Facebook

We analyzed a sample of 162,958 tweets and 15,476 Facebook posts from September 30 to October 13 (a week before to a week after the initial Hamas attacks) and found a surge in antisemitism on X amounting to a 919% week-over-week increase. Our Facebook data showed a more modest 28% increase. Because of limitations in data access, we could not gather a Facebook sample large enough for that increase to reach statistical significance, but the difference from X is stark enough to warrant comparison. Researchers used ADL’s antisemitism classifier, the Online Hate Index (OHI), to detect antisemitic content in these samples (see Methodology). We could not collect truly random samples because no major platform currently makes them readily available to researchers.
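The percentages above translate directly into ratios: an increase of p% means the second week's level is (1 + p/100) times the first. The minimal Python sketch below applies that arithmetic to the reported figures. The function names are illustrative, and the sketch makes no assumption about whether the change was computed on raw counts or on the share of posts flagged as antisemitic.

```python
def percent_change(before: float, after: float) -> float:
    """Percent change from a first-week value to a second-week value."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100.0


def ratio_from_percent(pct: float) -> float:
    """Convert a percent increase into the ratio of second week to first week."""
    return 1.0 + pct / 100.0


# Reported week-over-week increases from the findings above.
for platform, pct in [("X", 919.0), ("Facebook", 28.0)]:
    # Prints "X: +919% -> 10.19x the first week" and "Facebook: +28% -> 1.28x the first week"
    print(f"{platform}: +{pct:.0f}% -> {ratio_from_percent(pct):.2f}x the first week")
```

So the X figure corresponds to roughly ten times the first week's level, while the Facebook figure corresponds to about 1.28 times.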

In the public posts we were able to analyze, Facebook saw only a minor increase in antisemitic content in the week after the outbreak of violence compared with the week before. It’s possible that antisemitic content posted publicly on Facebook genuinely increased less, but it’s also possible that Facebook enforced its hate speech policy more robustly and/or that its content moderation tools were more effective at removing violative content (we could not assess private content on Facebook). In response to the start of the current crisis, Meta established a “special operations center staffed with experts, including fluent Hebrew and Arabic speakers.” The slight uptick in antisemitism on October 13 could reflect content Facebook had not yet moderated: all data for this research were collected at midnight on October 14, so that uptick may simply represent antisemitic content Meta had not yet removed at the time of collection. Without access to moderated content from Facebook, independent researchers cannot make such determinations.

On X, we saw a roughly tenfold surge in antisemitic content in the week after the attacks, even though the baseline rate of antisemitism in the first week's sample was similar to Facebook's. It is possible that X users began posting more antisemitic content after the attacks than Facebook users did, but a lack of effective content moderation is equally likely. If the latter, it is unclear whether X is simply not moderating antisemitic content or whether the platform lacks the enforcement tools and capacity to do so. We cannot verify whether X downranked or deamplified any of these posts, only whether they were still live on the platform when we collected the data. On October 12, X CEO Linda Yaccarino posted that the company had redirected resources and staff to address the crisis. Over the past year, following X/Twitter’s repeated layoffs of trust and safety workers and the disbanding of its Trust and Safety Council, we have reported on X not enforcing its rules on antisemitic content.

Conclusion

Given the surge of antisemitic incidents offline and the swell in antisemitism on X, it is plausible Facebook also saw an increase in public antisemitic posts but detected and removed many of them, either through automated systems or with human assistance (we were unable to assess the prevalence of antisemitic content in private Facebook posts). Alternatively, it’s possible Facebook users are less likely to post content they expect will be removed.

This surge in antisemitism on X also highlights concerns we have previously written about. After drastically cutting its Trust and Safety department, X reinstated accounts previously banned for extremism and conspiracy theories, which often become “nodes of antisemitism,” and has failed to enforce its community standards, especially during times of crisis.

Recommendations

We have published multiple articles since October 7 with recommendations for how social media companies should moderate content during times of war. Key recommendations include:

- Tech companies should proactively create guidelines for better-resourced and more intensive content moderation during crises, including increasing the number of moderators and experts with cultural and subject matter expertise related to the crisis at hand.

- X should re-invest in content moderation and its Trust and Safety team and enforce its Hateful Content policy.

- Tech companies should ensure that neither users nor the companies themselves profit from posting hate. As we have previously written, monetization systems, such as the one in place for premium subscribers on X, create incentives to post high-engagement content regardless of its accuracy. Enforcing policies like X’s monetization standards, which do not permit monetization of content that violates X’s rules (including those on Hateful Content) or of content relating to “sensitive events” such as the ongoing war, may counteract this.

- Tech companies should share platform data with independent researchers and civil society organizations. X removed its free API access for researchers, making it cost-prohibitive for civil society organizations and researchers to access the quantity of data needed to monitor how harmful content proliferates on X and which mitigation tactics are most effective. Although ADL researchers were able to collect a sample of general Facebook and X posts for this study using our data tools, such tools are expensive and imperfect. Transparency is crucial to understanding and improving the effects platforms have on society.

Methodology

ADL researchers initially sampled Facebook, Instagram, and X/Twitter to quantify the surge in antisemitic content after the October 7 Hamas invasion of Israel. Truly random samples of such content, such as through Twitter’s “decahose” (once a free, random 10% sample of all tweets) or Reddit’s developer API, are no longer readily available to civil society researchers; X, for example, made the decahose prohibitively expensive in May of this year. ADL’s current data tools rely on keyword searches, so to approximate a random sample, we searched for public posts containing any of 20 of the most common words in the English language. Although this sample was not truly random, it was collected consistently before and after October 7, and we do not believe it introduced systematic bias. We cannot verify this, however, without access to random samples from the platforms. Because our antisemitism classifier was built to analyze text, we did not examine video-sharing platforms like YouTube or TikTok. We also excluded Instagram from this analysis, both because of data access limitations and because it is primarily image-based.
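The keyword-based approximation described above can be pictured as a simple filter: a post enters the sample if it contains at least one word from a fixed list of very common English words. The Python below is a minimal illustration, not ADL's data tooling. The five-word `COMMON_WORDS` set is a hypothetical placeholder (the article does not publish its 20 words), whole-word matching on lowercase tokens is an assumption, and in practice the matching happens through platform search rather than local filtering.

```python
import re
from typing import Iterable

# HYPOTHETICAL keyword list: the article does not publish its 20 words,
# so these common English words are placeholders only.
COMMON_WORDS = {"the", "be", "to", "of", "and"}

# Lowercase word tokens (letters and apostrophes).
_TOKEN = re.compile(r"[a-z']+")


def matches_any_keyword(text: str, keywords: set[str]) -> bool:
    """True if any whole-word token in `text` is one of `keywords`."""
    return any(tok in keywords for tok in _TOKEN.findall(text.lower()))


def keyword_sample(posts: Iterable[str], keywords: set[str]) -> list[str]:
    """Keep posts containing at least one keyword, de-duplicated in order."""
    seen: set[str] = set()
    sample: list[str] = []
    for post in posts:
        if post not in seen and matches_any_keyword(post, keywords):
            seen.add(post)
            sample.append(post)
    return sample


posts = ["The war began today", "gm", "Theology class", "The war began today"]
print(keyword_sample(posts, COMMON_WORDS))  # ['The war began today']
```

The intuition is that extremely common words appear in most English-language posts, so the filter admits a broad cross-section of content; it still misses posts that use none of the words, which is one reason the result is only an approximation of a random sample.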

Using our data collection tools, ADL researchers searched for English-language posts containing these keywords in 8-hour periods from midnight EDT on September 30 (one week before the initial Hamas attack) to 11:59 p.m. EDT on October 13. All data were collected just after the period of interest, at midnight EDT on October 14. We collected a total of 162,958 unique tweets, 15,746 unique Facebook posts, and 28,011 unique Instagram posts. Instagram was ultimately excluded from analysis because the collected data skewed heavily toward recent posts (largely from October 12 and 13), which made comparison against a pre-war baseline impossible, and because Instagram is primarily image-based. We used Pearson’s chi-square test to make week-to-week comparisons of antisemitism frequency on each platform.
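A week-to-week comparison of this kind can be set up as a Pearson chi-square test on a 2×2 table: the rows are the two weeks, and the columns are posts flagged as antisemitic versus not flagged. The Python sketch below is a minimal, dependency-free illustration. The function name and the choice to omit Yates' continuity correction are assumptions, since the article does not say how the test was configured. For one degree of freedom, the upper-tail probability equals erfc(√(χ²/2)).

```python
import math


def chi_square_2x2(flagged_a: int, total_a: int,
                   flagged_b: int, total_b: int) -> tuple[float, float]:
    """Pearson's chi-square test (no continuity correction) comparing the
    share of flagged posts in period A with the share in period B.

    Returns (statistic, p_value) for 1 degree of freedom.
    """
    if not (0 <= flagged_a <= total_a and 0 <= flagged_b <= total_b):
        raise ValueError("flagged counts must lie between 0 and the totals")
    observed = [
        [flagged_a, total_a - flagged_a],  # period A: flagged, not flagged
        [flagged_b, total_b - flagged_b],  # period B: flagged, not flagged
    ]
    row_totals = [sum(row) for row in observed]
    col_totals = [observed[0][j] + observed[1][j] for j in range(2)]
    grand = sum(row_totals)

    stat = 0.0
    for i in range(2):
        for j in range(2):
            # Expected count under the null hypothesis of equal shares.
            expected = row_totals[i] * col_totals[j] / grand
            if expected == 0:
                raise ValueError("an expected count is zero; test undefined")
            stat += (observed[i][j] - expected) ** 2 / expected

    # Chi-square with 1 df is Z**2, so P(X >= stat) = erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value
```

For example, `chi_square_2x2(10, 100, 20, 100)` (hypothetical counts, not ADL data) gives χ² ≈ 3.92 and p ≈ 0.048. Halving every count while keeping the same proportions halves the statistic. This is why a real increase in a small sample, such as the Facebook data here, can fail to reach statistical significance.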

Antisemitism scores were calculated for the text of each post using the OHI (Online Hate Index), ADL’s proprietary antisemitism classifier. The OHI is a uniquely sophisticated tool that uses machine learning and a large dataset of over 80,000 pieces of content from Reddit and Twitter that Jewish volunteers annotated for antisemitism, using a codebook developed by ADL experts. Combining data annotated for hate by the affected identity group with natural language processing gives the OHI a deeper and more nuanced understanding than other automated classification tools achieve. For example, internal Facebook documents leaked by whistleblower Frances Haugen and submitted to the SEC appear to show that Facebook trains its classifiers only on broad “hate” or “not hate” categories, without focusing on the specific experiences of targeted communities or the particular ways hate against specific communities manifests. We then manually reviewed a subset of posts the OHI identified as antisemitic and checked for obvious counterspeech, which we did not find.
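The scoring workflow above involves several bookkeeping steps. Each post is scored, and posts above a cutoff are flagged. The flagged share is then compared across the week before and the week after the attack, and a subset of flagged posts is drawn for human review. The Python sketch below shows only that bookkeeping. The OHI itself is proprietary and is not reproduced here; each post's `score` stands in for its output. The 0.5 `THRESHOLD`, the `ScoredPost` type, and the function names are hypothetical.

```python
import random
from dataclasses import dataclass
from datetime import date


@dataclass
class ScoredPost:
    text: str
    posted: date
    score: float  # HYPOTHETICAL stand-in for a classifier score in [0, 1]


# HYPOTHETICAL cutoff: the article does not state how OHI scores are thresholded.
THRESHOLD = 0.5
ATTACK_DAY = date(2023, 10, 7)


def flagged_share_by_week(posts: list[ScoredPost],
                          threshold: float = THRESHOLD) -> dict[str, float]:
    """Share of posts at or above `threshold`, before vs. on/after the attack day."""
    counts = {"before": [0, 0], "after": [0, 0]}  # [flagged, total]
    for p in posts:
        week = "before" if p.posted < ATTACK_DAY else "after"
        counts[week][1] += 1
        if p.score >= threshold:
            counts[week][0] += 1
    return {wk: (f / t if t else 0.0) for wk, (f, t) in counts.items()}


def review_subset(posts: list[ScoredPost], k: int, seed: int = 0,
                  threshold: float = THRESHOLD) -> list[ScoredPost]:
    """Randomly pick up to k flagged posts for manual review (e.g., for counterspeech)."""
    flagged = [p for p in posts if p.score >= threshold]
    rng = random.Random(seed)  # seeded so the review subset is reproducible
    return rng.sample(flagged, min(k, len(flagged)))
```

Returning shares rather than raw counts keeps the two weeks comparable when the number of collected posts differs between them. The flagged and total counts behind those shares are what a chi-square comparison would take as input.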

One limitation of this study was the size of the Facebook sample, which was too small for the increase in antisemitism to reach statistical significance. In future research, we will be able to increase our sample size and test our hypothesis that the slight uptick in antisemitism on Facebook on October 13 was due to content that had not yet been moderated.

Note: This piece was updated on November 9 to add the sentence about the X CEO's post.