Since Hamas invaded Israel and massacred more than 1,400 Israelis, including babies and children, we have seen hate speech and inflammatory language against both Jews and Muslims proliferate online. As ADL has long documented, online hate can inspire offline violence, which is especially concerning right now. And though many social media companies have publicly announced efforts to stem extremist content and mis- and disinformation related to the war, their tools, policies, and moderation efforts remain inadequate.

Platforms are struggling to prevent hate and harassment from proliferating in four main areas:

Failure to deal with niche or emerging hateful terms and acronyms.

Inadequate enforcement against dehumanizing and stereotyping language.

Difficulty moderating video and image content.

Difficulty curbing influential accounts’ use of tools, such as promoted posts, to amplify harmful content.

Extremist violence, such as mass shootings, often correlates with, and generates, spikes in online hate and harassment. For example, some coverage attributed a Nashville shooting in March 2023 to a transgender perpetrator, which led to a surge in anti-trans hate. We have also observed a correlation between discussion of Israel, especially the Israeli-Palestinian conflict, and increases in antisemitic hate and harassment online.

The inverse is also true: online hate and harassment have in some cases led to offline violence. For example, the shooter who attacked a Tops supermarket in Buffalo in May 2022 was fed a stream of hate on platforms such as Discord and 4chan. There is also evidence that both the Tree of Life synagogue shooter and the Christchurch shooter were radicalized online, on platforms like Gab and 8chan.

Hamas’s invasion on October 7, and Israel’s counter-action, have led to multiple violent incidents in the U.S., reminiscent of the days and weeks after 9/11. An Israeli student at Columbia University was assaulted on October 12 while putting up posters of the hostages taken by Hamas. That same day in Brooklyn, attackers carrying Israeli flags and shouting anti-Palestinian remarks assaulted a group of men. In Chicago, a six-year-old Palestinian-American boy was stabbed to death by his landlord, who reportedly yelled, “you Muslims have to die.” The landlord had reportedly been listening to conservative radio and had become “obsessed” with news about the conflict. In an already tense environment, these attacks spread more fear among Jewish and Muslim communities, which in turn could fuel even more harmful online rhetoric.

ADL researchers manually reviewed posts from October 7 to October 18 to detect hateful and harassing content on popular mainstream sites: Facebook, Instagram, TikTok, X/Twitter, YouTube, and Reddit. Our initial search found both anti-Jewish hate and Islamophobic content. Much of the online content about the war appears on video- and image-based platforms like TikTok and Instagram, which adds further challenges for detection and moderation. Although some content is directed at state actors (like Israel) or terror groups (like Hamas), ADL found extensive hateful content, such as dehumanizing or stereotyping language, targeting Jews, Israelis, Palestinians, Arabs, and Muslims.

Niche or emerging hateful terms and acronyms

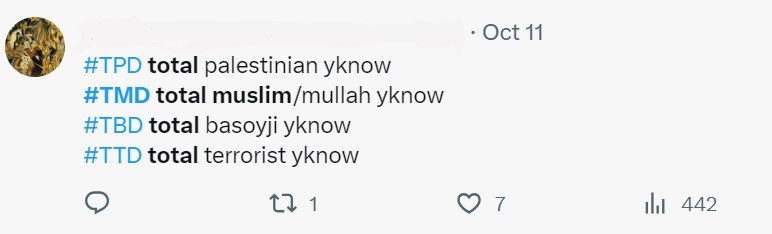

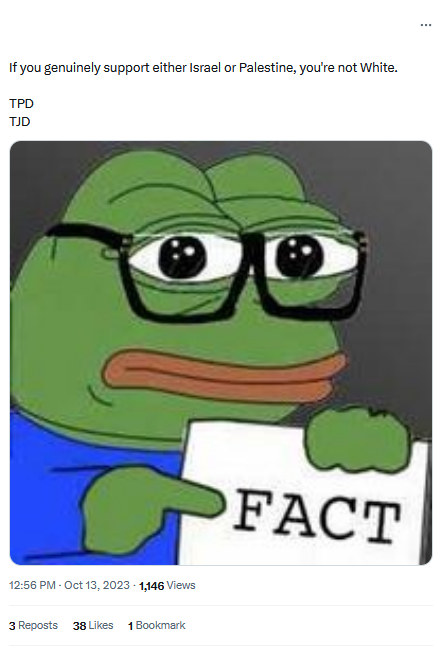

The language of hate and harassment often evolves rapidly online, making it difficult for some automatic detection tools (and human reviewers) to keep up. Many violative accounts across platforms use niche terms and acronyms like “TJD” (Total Jewish Death), “TMD” (Total Muslim Death), or “TPD” (Total Palestinian Death) to advocate for genocidal violence, as in the examples in Images 1 and 2.

Image 1: TPD (Total Palestinian Death), TMD (Total Muslim Death), and related acronyms as hashtags on X/Twitter.

Image 2: TPD (Total Palestinian Death), TMD (Total Muslim Death), and related acronyms as hashtags on X/Twitter.

Recommendation:

Platforms must continually update their lists of hateful terms and use machine learning tools trained not only on toxic or hateful content but also on extremist language datasets more broadly. Such tools are better able to detect extremist speech even when buzzwords or coded language change.

Dehumanizing and stereotyping language

We found a proliferation of dehumanizing and stereotyping language against Jews and Muslims, such as language conflating all Jews with the Israeli state or blaming all Palestinians or all Muslims for Hamas’s violence. Islamophobic violence rooted in this kind of dehumanizing or stereotyping language has, both historically and recently, spilled over to non-Muslims such as Sikhs.

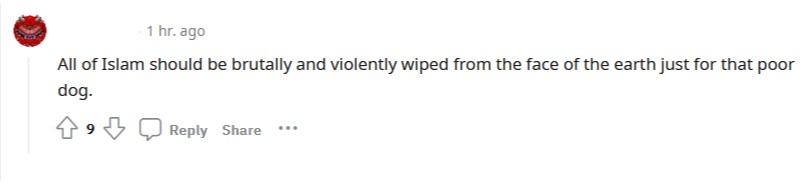

On Reddit, a post proclaimed that “All of Islam should be brutally and violently wiped from the face of the earth just for that poor dog” (Image 3). The post, which referred to a video purportedly showing a Hamas terrorist shooting a dog, has since been removed.

Image 3: A Reddit post arguing that “all of Islam” should be punished for the death of a dog.

In other instances, Jews and Muslims are described in language that portrays them as uncivilized or less than human, such as “barbarians,” “savages,” “parasites,” or “animals.” While such language can be applied to specific acts of violence, generalizing all members of an ethnic group or nationality this way denies their full humanity and has been used to legitimize further violence against them.

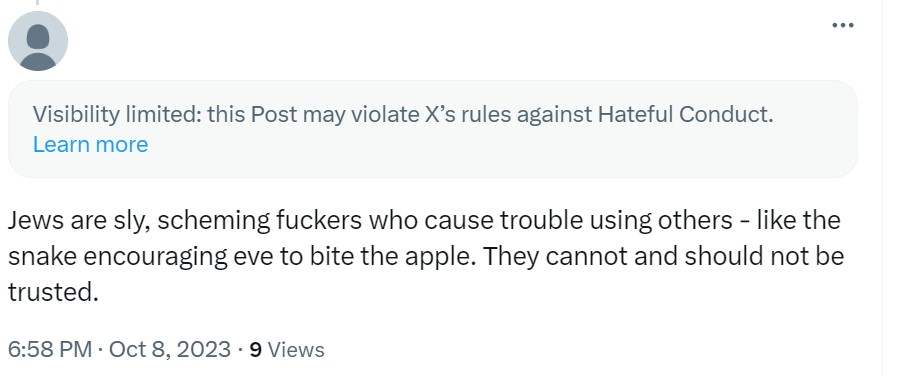

For example, a post on X/Twitter described all Jews as “sly” and “scheming” and likened them to snakes (Image 4). This kind of language makes it easier to justify violence against an entire group.

Image 4: Dehumanizing language against Jews on X/Twitter.

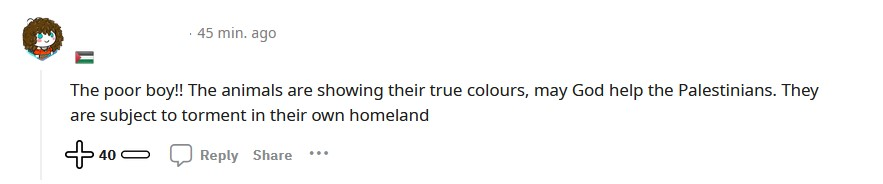

Similarly, a post on Reddit called all Jews “animals” and claimed they had shown their “true colors,” in reference to an unsubstantiated video of a Palestinian boy allegedly burned by Israelis (Image 5). This comment was still active when this article was first published but has since been removed.

Image 5: An example from Reddit of dehumanizing language against Jews.

Platforms often ban slurs denigrating ethnic or racial groups, but their automatic moderation tools do not effectively distinguish dehumanizing uses of otherwise non-violative words like “animals” or “parasites” from benign ones. Moderation tools need to distinguish language that is harmful to a group of people, like referring to all Muslims as “demons” or all Israelis as “animals,” from language that describes specific actions. And while AI-based moderation tools are necessary, they are not sufficient. Platforms need to ensure that AI does not replace human moderators, who must remain a core component of the content moderation pipeline. Both automated detection and human review must take into account not just the literal meaning of words, but their context.

Recommendation:

The language of hate is always evolving to evade content policies, and that process does not stop when a war begins. Niche hate terms are still hate terms, and platforms must act on them in accordance with their hate speech policies. As the situation on the ground moves quickly, so do the narratives and language used online. Platforms should stay alert to dehumanizing language and keep abreast of new and niche slurs and insults spreading on their sites. To do this, they need a well-resourced, robust trust and safety team that can respond to threats quickly.

Moderating video and image content

Image- and video-based platforms have emerged as key sites for posting about the war, including both firsthand accounts and responses to the violence. But these sites, for a variety of reasons, struggle to moderate hateful and harassing images and videos. We found more hateful content in videos and images than in text. This may be partly because image- and video-based sites are a more popular means of sharing content about the war, but platforms may also face technical challenges in quickly adapting video- and image-detection tools to new expressions of hate. Perpetrators often exploit loopholes, such as sharing screenshots of hateful text rather than copying and pasting it, embedding hateful text in images where it is less likely to be detected, or pairing implicit language and references in audio with non-violative video to evade detection.

Image 6: Likely AI-generated antisemitic meme image.

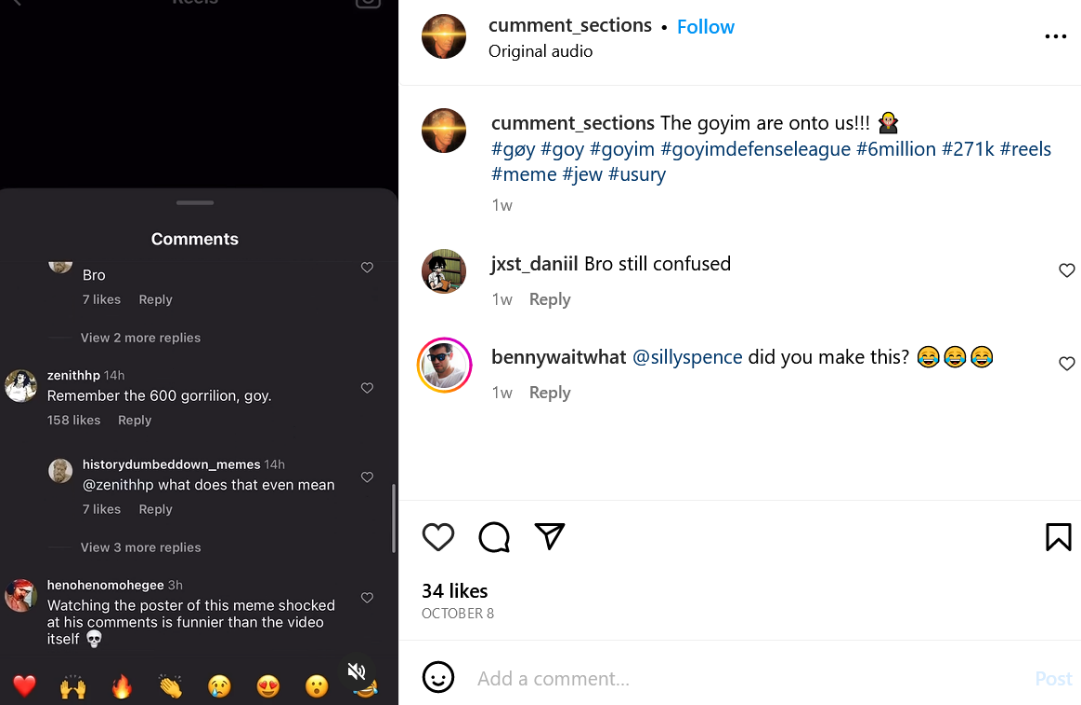

Image 6, for example, was found on Instagram and appears to be AI-generated. The hyper-realistic style of the art, as well as the blurring on the hat and other features, suggests that this image was not created by a human. It includes not only an antisemitic caricature of a Jewish man but also overlaid text reading “the goyim doesn’t know,” an antisemitic catchphrase.

While tools for moderating image-based content, such as OCR (Optical Character Recognition), transcription, and AI, have come a long way, they struggle with judgment calls. Human annotators are still required to teach AI moderation tools what is and is not actionable content. Videos pose the same difficulty. For example, Image 7 is a screenshot of an Instagram reel. The content is blatantly antisemitic, implying that Jews have caused all of Europe’s problems throughout history, and it is overlaid with comments that are also antisemitic, such as “they still are.” The audio itself, however, is benign: a soundbite of a man saying, “You’re a real, real problem.” There is currently no way for audio analysis alone to determine that this reel is hateful.

Image 7: Screenshot of an antisemitic Instagram reel.

Recommendation:

Much of the content relating to the war is in video or images, so platforms should boost trust and safety resources, both human and AI, for reviewing those types of content. Video and images have been notoriously hard to moderate for years. We understand that automating content moderation to this level requires substantial fiscal and human resources. But that means the onus is on platforms to surge their trust and safety teams to review this content.

Influential accounts amplify harmful content

Bad actors take advantage of amplification features, such as promoted posts, to increase the reach of their content. By the time a user reports the content or a platform takes action, it has often already been widely shared and viewed, amplifying the harm.

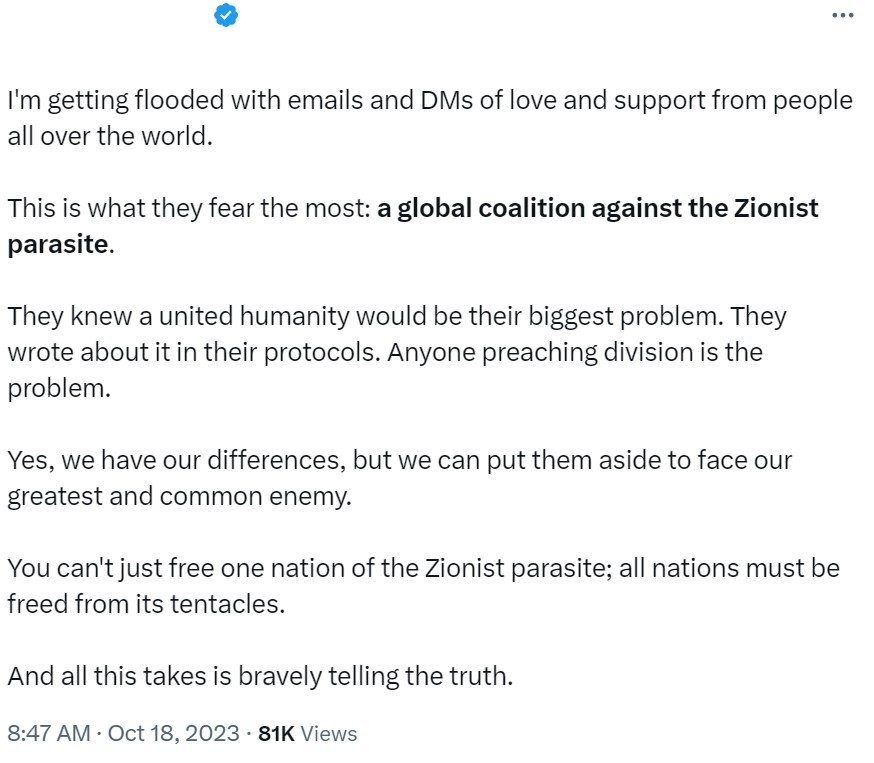

This X/Twitter post (Image 8) references The Protocols of the Elders of Zion, a fabricated antisemitic text at the heart of a long-running conspiracy theory, and refers to Zionists as a “parasite.” The poster applauds the support they are receiving for other antisemitic posts they have made. As of October 18, the post was still viewable and had attracted more than 45.9K views, 715 reposts, and 38 bookmarks. Because this poster pays for a blue check mark, their posts are promoted across X/Twitter.

Image 8: Dehumanizing language targeting Zionists as a “parasite,” likely amplified by X/Twitter’s paid Twitter Blue subscription.

Recommendation:

Influential accounts can take advantage of amplification features (e.g., promoted posts), and if and when they are moderated, the damage is already done. To avoid this, platforms should minimize incentives for posting hate, for example by limiting hateful accounts’ access to promoted posts and premium memberships. Once these accounts find that their posts are being taken down and they are losing influence, they may be less likely to spread hate.