Executive Summary

We examined whether four major social media platforms, namely YouTube, X (formerly Twitter), Facebook, and Instagram, are potentially profiting from ad placements alongside searches for hate groups and extremists. This new review by ADL and the Tech Transparency Project (TTP) found wide variation among the platforms.

The most problematic of the four was YouTube. Not only did the company profit from searches for hate groups on its platform, but it is also creating, and profiting from, music videos for white supremacist bands. The review found that YouTube ran ads in search results for dozens of antisemitic and white supremacist groups, allowing the platform to likely earn money from hate-related queries. These included ads for Walmart, Amazon, and Wayfair.

We also identified an issue specific to YouTube: The platform is serving ads for brands like McDonald’s, Microsoft, and Disney alongside music videos for white supremacist bands. Even more disturbing, YouTube itself generated these videos as part of an automated process to create more artist content for its platform.

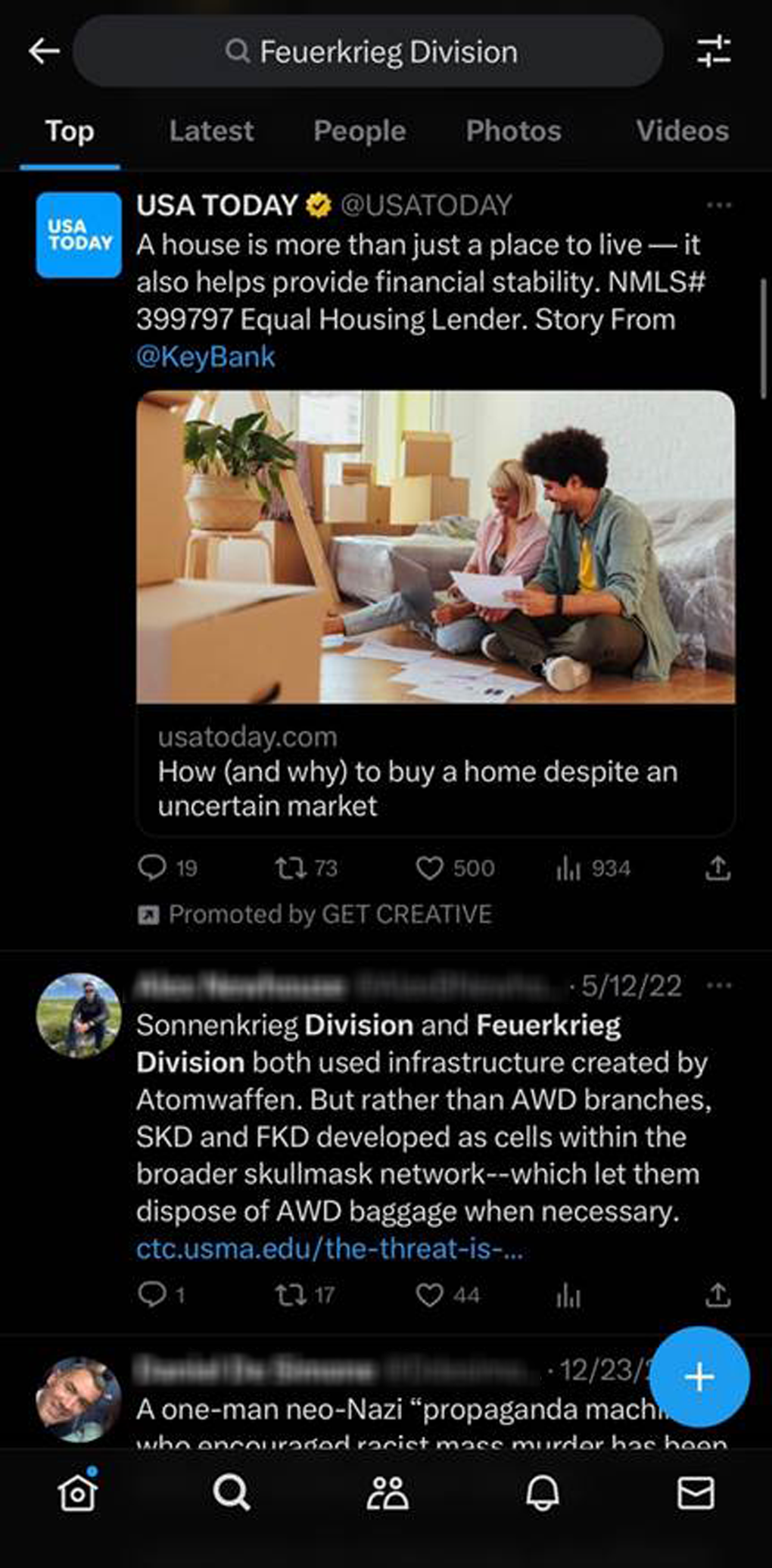

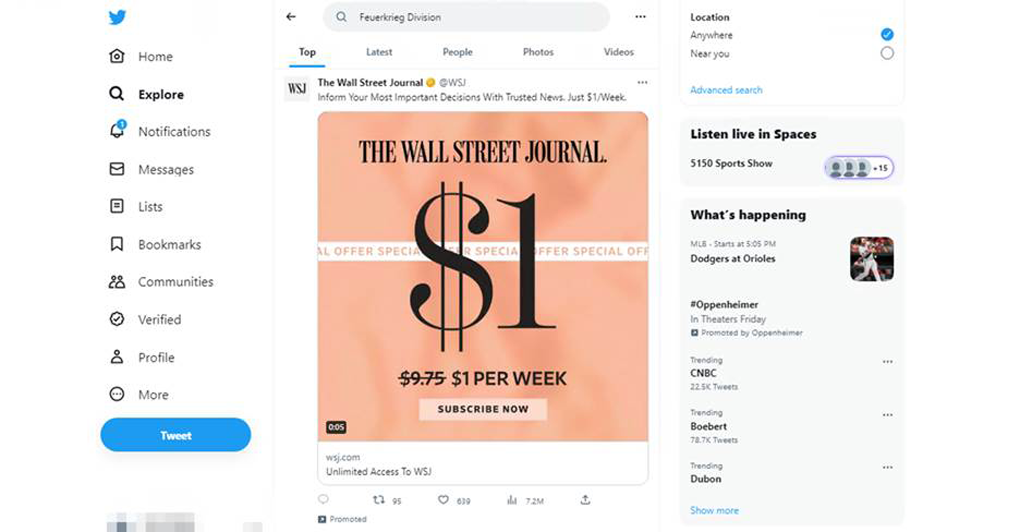

Our review found that X has also placed ads alongside searches for hate groups, but for a smaller share of hate group searches than YouTube (40 of 130 terms, compared with 67 on YouTube).

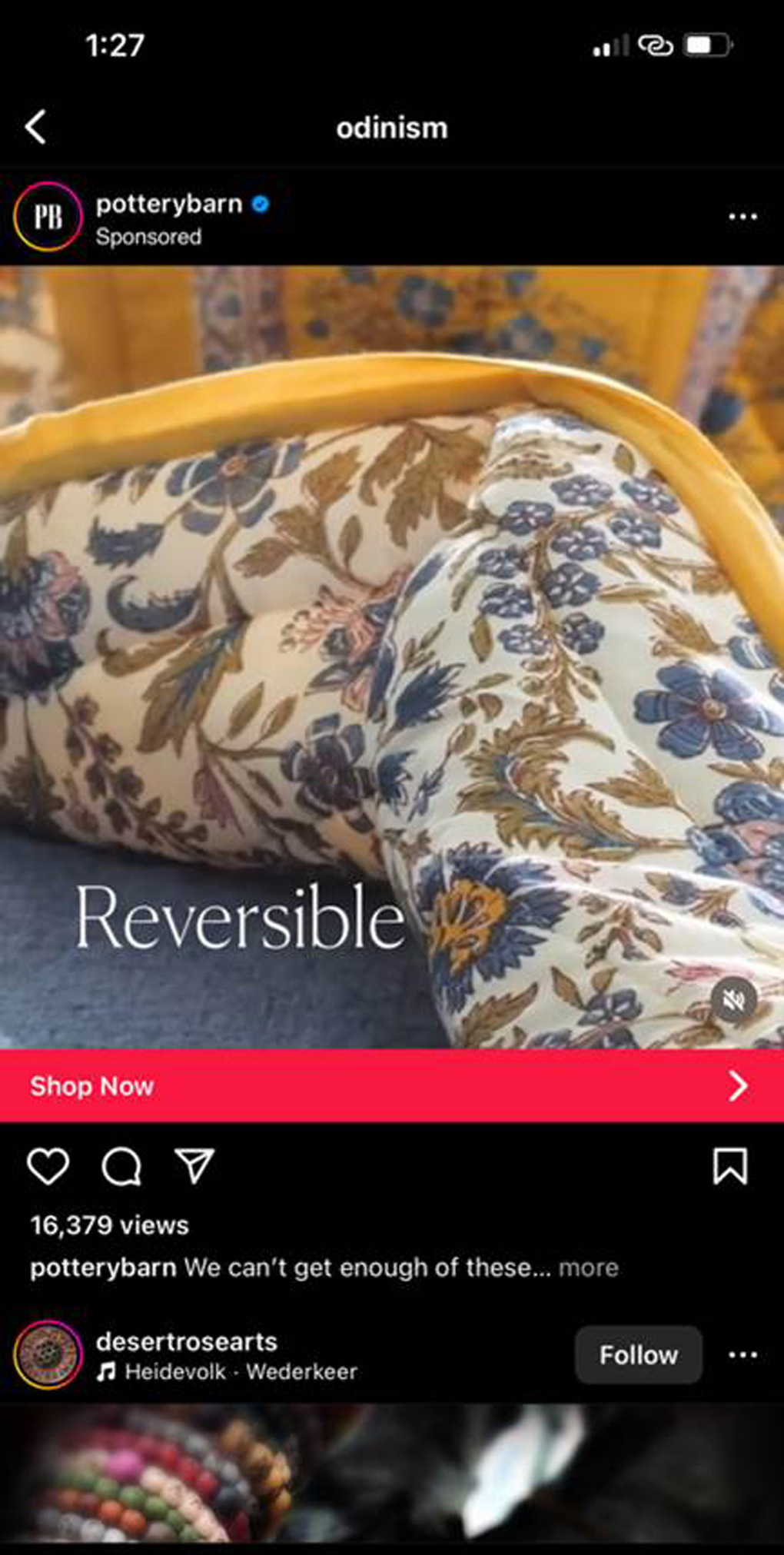

Meta-owned Facebook and Instagram, by contrast, served ads in only a handful of hate group searches, showing that it is possible for tech platforms to avoid this problem.

These findings show how YouTube and X are potentially generating ad revenue from content produced by hate groups, content that would violate the platforms' own policies. In the process, major brands are unwittingly associated with groups that stir up antisemitism and hate online and, in some cases, inspire violence in the physical world.

Notably, all ads displayed a label such as “Ad,” “Promoted,” or “Sponsored,” which is meant to signal that the advertiser is not associated with the searched content. Despite these labels, in our opinion, monetizing searches for this content is problematic.

Note: These reviews were conducted in Summer 2023, but we ran spot checks in mid-September on both YouTube and X, using some of the same search terms. Our results showed that hate group searches continued to be monetized with advertising. Although some of the desktop searches for YouTube no longer produced ads, the mobile ones did. On X, hate group searches continued to produce ads on mobile and desktop.

Based on our findings, here are our recommendations:

For Industry

- Improve Ad Placement Features. Platforms must ensure advertisements are not placed in close proximity to hateful or antisemitic content.

- Stop Auto-Generating Hate. Platforms should either program their tools to distinguish hateful from innocuous content before auto-generating anything, or pull down auto-generation features until the risk of creating hateful content is mitigated.

- Share More Information About Advertising Policies. Many of the monetization policies and practices on platforms are opaque or unclear. Platforms should be more transparent about revenue-sharing practices and policies around ad placement.

For Government

- Mandate Transparency Regarding Platform Advertising Policies and Practices. Congress should require tech companies to share more information about how platforms monetize content and what policies drive advertising decisions.

- Update Section 230. Congress should update Section 230, the law that provides tech companies with near-blanket immunity from liability for user-generated content, to better define what type of online activity should remain covered and what type of platform behavior should not be immune. These updates can help ensure that social media platforms more proactively address how they monetize content.

- Regulate Surveillance Advertising. Congress must focus on how consumers and advertisers are impacted by a business model that optimizes for engagement. Congress should restrict ad targeting and increase data privacy so that companies cannot exploit consumers' data for profit, a practice that too often can result in monetizing antisemitism and hate.

Methodology

This project, conducted in Summer 2023, consisted of two reviews examining whether and when four major social media platforms display ads alongside hate group content.

We compiled a list of hate groups and movements from ADL’s Glossary of Extremism that were tagged with all three of the following categories: “groups/movements,” “white supremacist,” and “antisemitism.” The list totaled 130 terms.

For the first review, we typed the name of each of the 130 hate groups and movements into the search bars of YouTube, X, Instagram, and Facebook, on both the mobile and desktop versions of the apps, and recorded any advertisements that the platforms ran in the search results. We then examined the content of the ads, including what companies, products, or services they advertised.

For the second review, we compiled a list of white supremacist bands from prior research conducted by ADL (as well as from the Southern Poverty Law Center and media reports). Using this list, we identified YouTube channels and videos for the bands that were auto-generated by YouTube. We then looked at whether YouTube was displaying ads alongside these channels and videos.

Search Monetization

The first review looked at whether YouTube, X, Facebook, and Instagram display ads in search results for hate groups on their platforms. Notably, all four platforms insert advertising into search results to generate ad revenue. Two of them, X and Instagram, introduced the feature in 2023.

YouTube

YouTube began offering the option for ads in search results on its platform in 2019. The company initially called them “video discovery ads” and later changed the name to “in-feed” ads. In-feed ads run above search results on YouTube, and YouTube says the ads are “relevant” to the content surfaced in searches.

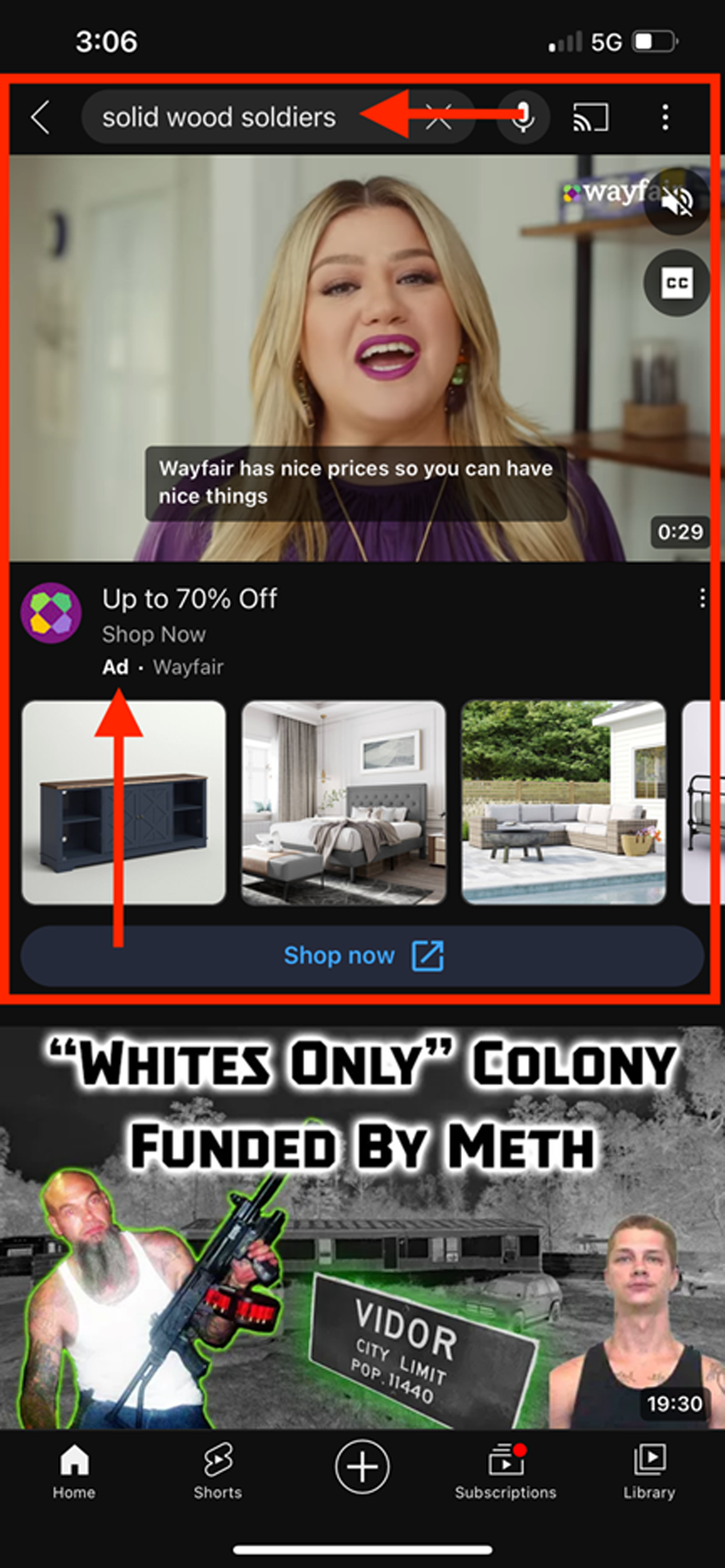

We entered the 130 hate groups and movements from ADL’s Glossary of Extremism into YouTube’s search bar on both the mobile and desktop versions of the app and found that the company served “in-feed” ads in search results for 67 of the terms.1 In some cases, YouTube displayed ads for well-known brands in hate group search results. For example, a search on YouTube’s mobile app for Solid Wood Soldiers, a Texas-based white supremacist prison gang, surfaced an ad for the furniture retailer Wayfair, featuring singer Kelly Clarkson. The Wayfair ad appeared directly above a YouTube video about a “‘Whites Only’ Colony Funded by Meth” that came up in the search results.

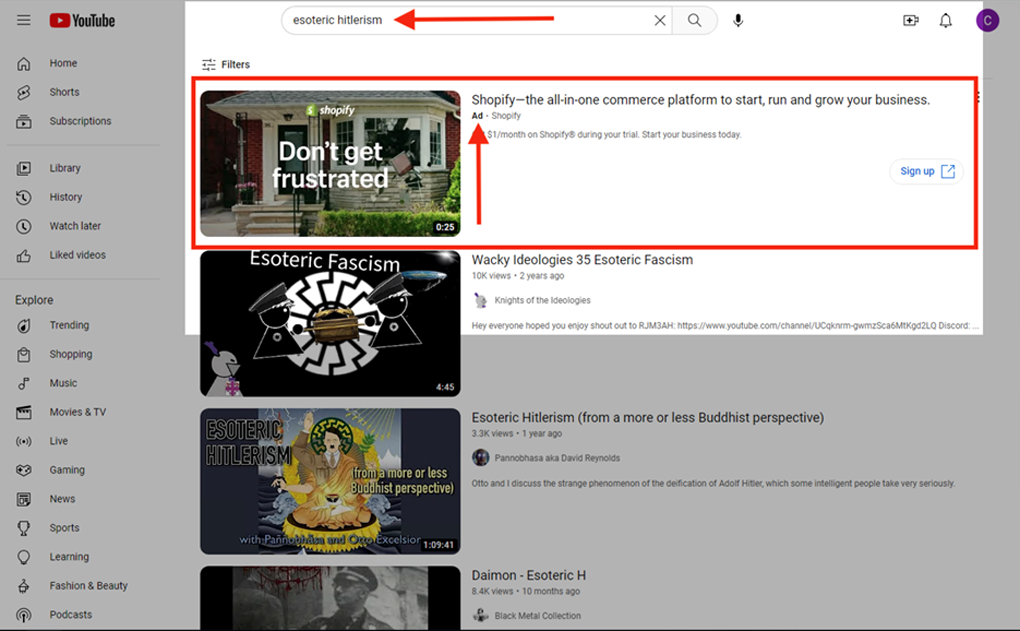

In another case, search results on YouTube desktop for Soldiers of Aryan Culture, a Utah-based white supremacist prison gang, and Esoteric Hitlerism, a pseudo-scholarly take on Nazi doctrine that synthesizes Nazism with Hinduism, included an ad for e-commerce site Shopify. The Shopify ad appeared above two informational videos on Esoteric Hitlerism as well as a video featuring music from the National Socialist black metal band Daimon and their album “Esoteric Hitlerism.” The album’s cover features a black-and-white stylized image of what appears to be a Nazi SS officer.

YouTube says its “policies ensure that certain types of content can’t be monetized, automatically preventing your ads from showing on content that isn’t appropriate for most advertisers.” That includes content that “incites hatred against, promotes discrimination of, or disparages an individual or group on the basis of their race or ethnic origin, religion … or other characteristic that is associated with systemic discrimination or marginalization.” But it is unclear whether YouTube applies this standard to “in-feed” ads that appear in search results. We could find no mention of brand safety in YouTube’s policies for “in-feed” ads, and YouTube says that its Media Rating Council accreditation, which certifies that it meets industry standards on brand safety, excludes “discovery” ads, the former name for in-feed ads. If YouTube does not apply brand safety standards to ads inserted into search results, advertisers may be exposed to the risk of their messages appearing next to hateful or otherwise inappropriate content.

The review also found examples of ads that appeared to be generated by YouTube to match the text of search queries for hate groups. These appear to be “Dynamic Search Ads,” which YouTube’s parent company Google explains this way:

When someone searches on Google with terms closely related to the titles and frequently used phrases on your website, Google Ads will use these titles and phrases to select a landing page from your website and generate a clear, relevant headline for your ad.

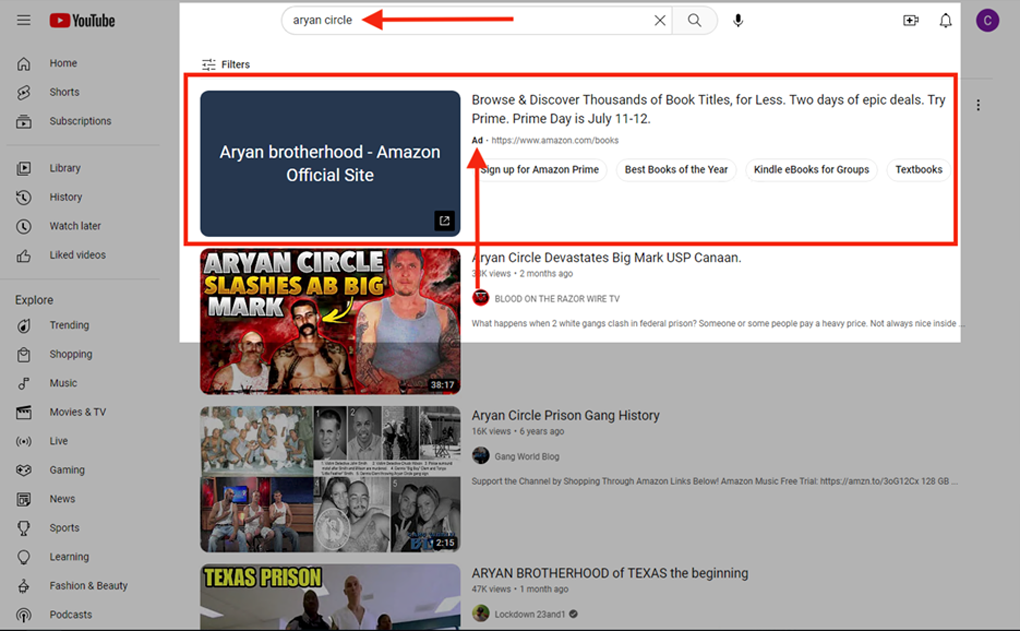

Notably, YouTube generated this type of ad for major brands even when the search queries involved hate groups. For example, a search on YouTube’s desktop app for Aryan Circle, a white supremacist prison gang in Texas, produced an ad with the text “Aryan brotherhood – Amazon Official Site” and a link to amazon.com/books.

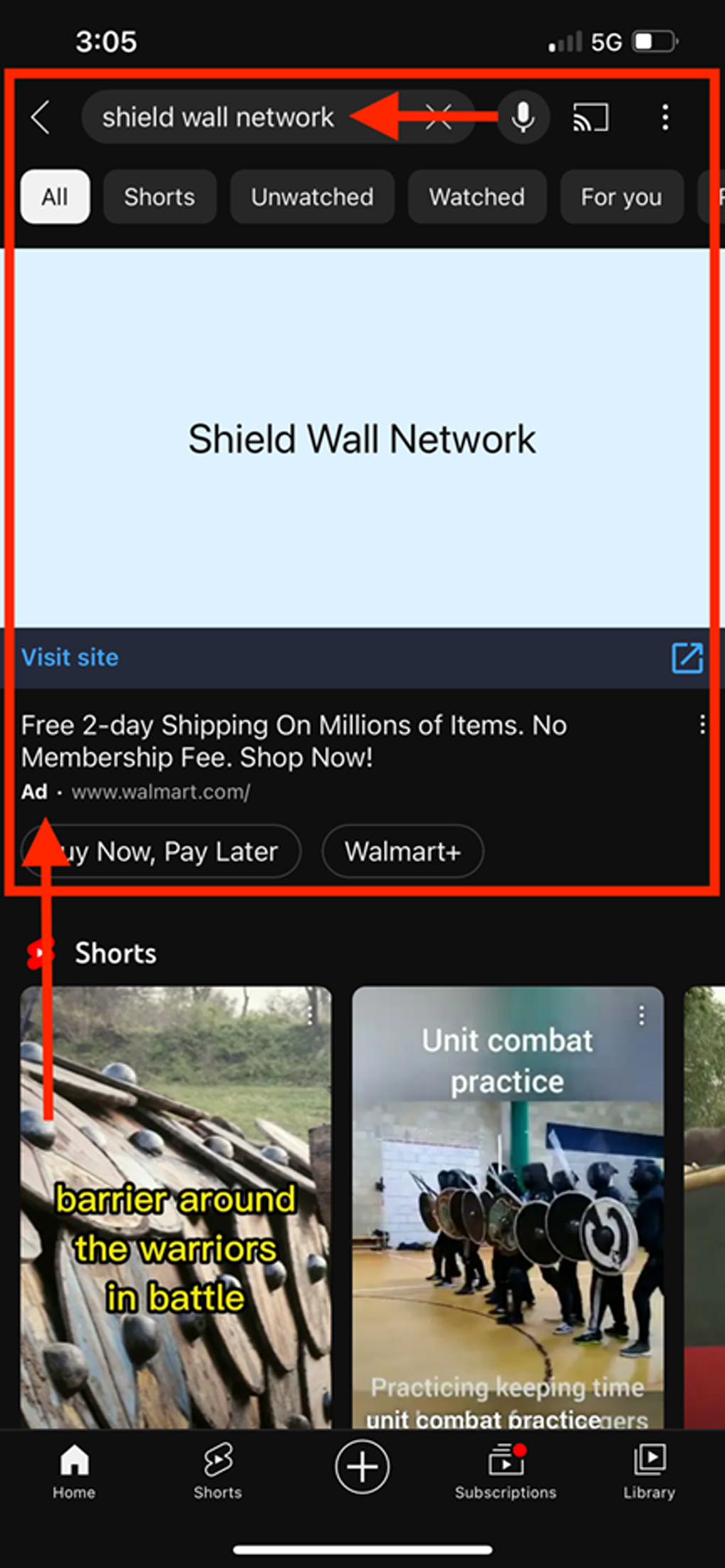

Likewise, a mobile search on YouTube for Shield Wall Network, a small Arkansas-based neo-Nazi group whose primary goal is to build a white ethnostate, produced an ad for Walmart with the text “Shield Wall Network” and a link to Walmart.com.

With Dynamic Search Ads, Google says it “may make modifications to better align the headline” with the company’s “ad integrity policies.” But it did not do so in the cases above, even though the generated headlines named hate groups whose activities appear to violate Google policies.

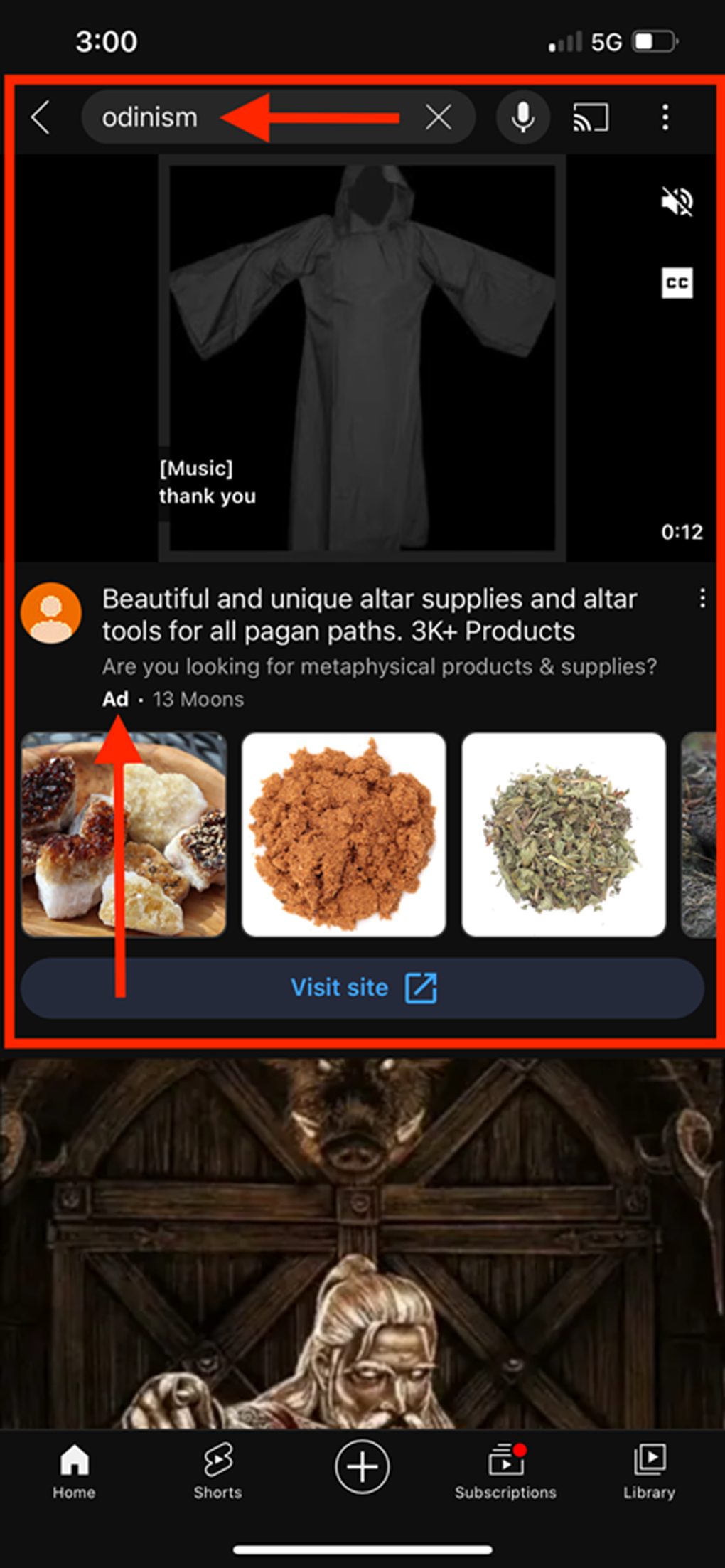

We also found examples of YouTube inserting ads with questionable content into hate group searches. For example, a mobile YouTube search for Odinism, a racist variant of a Norse pagan religion that’s been embraced by white supremacists, surfaced an ad offering “pagan” supplies that included a white-hooded robe reminiscent of the traditional garb of Ku Klux Klan members. This appears to violate YouTube’s prohibition against ads that “promote hatred” or “hate group paraphernalia.”

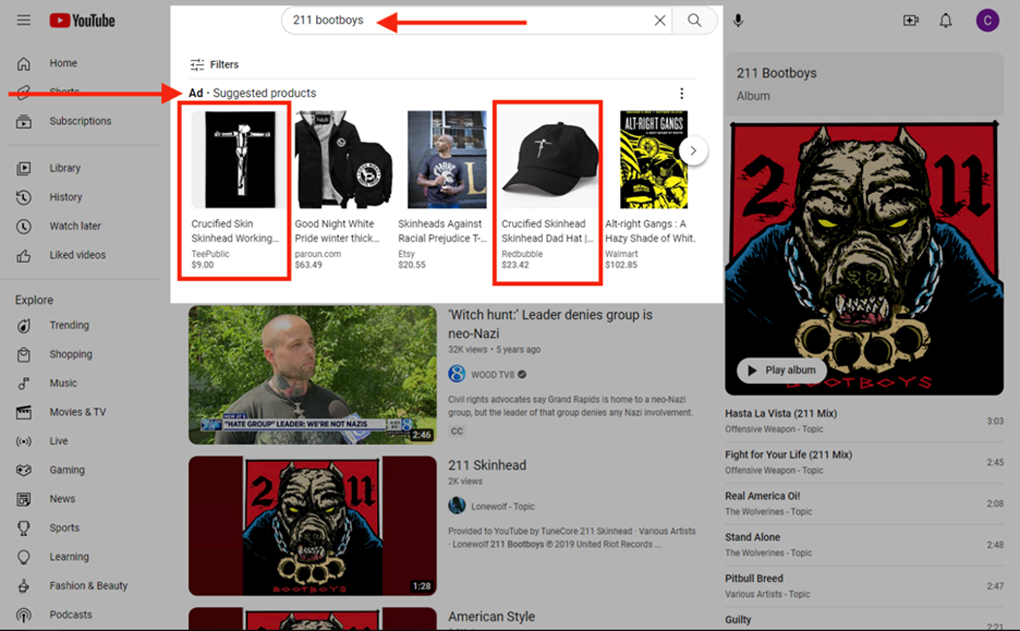

Another search on YouTube’s desktop app for 211 Bootboys, an ultranationalist skinhead group active in the hardcore punk scene in the Northeast, produced a “shopping ad” with a scroll of products that included a crucified skinhead T-shirt and baseball cap. The crucified skinhead image, which is meant to convey a sense of persecution and alienation, is used by the entire skinhead movement, including both racist and non-racist factions.

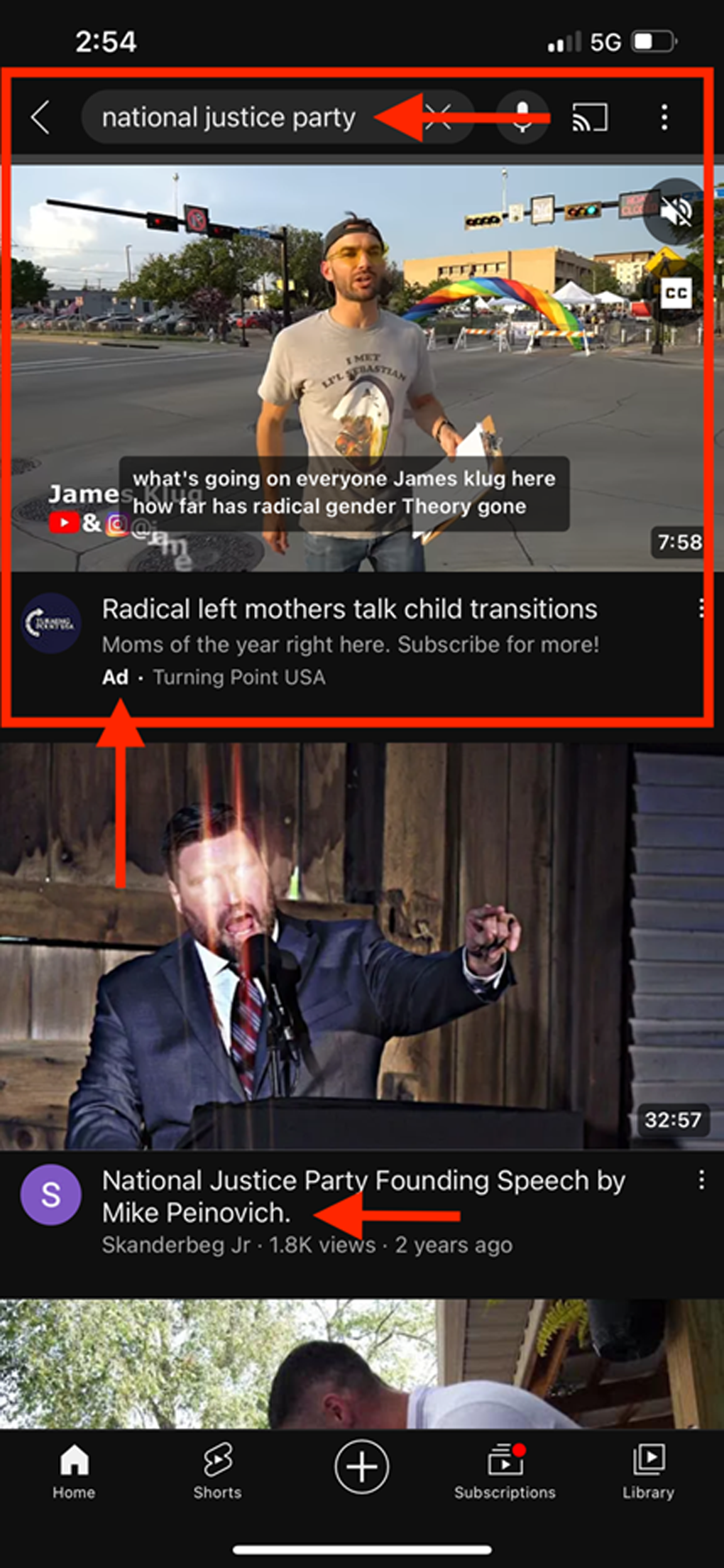

Another search on YouTube’s mobile app for the National Justice Party, a virulently antisemitic white supremacist group, surfaced an ad for a video by the right-wing student organization Turning Point USA that mocked parents who support transgender children. Turning Point USA is not on the list of 130 antisemitic and white supremacist groups cited in this report, but the ad’s anti-transgender message appears to violate Google policy, which bans ads that “promote hatred, intolerance, [or] discrimination.”

X

X (formerly known as Twitter) began offering advertisements in search results in January 2023. The feature, called “Search Keywords Ads,” enables advertisers to pay for their ads to be placed in search results. We found that X ran ads in search results for 40 of the 130 hate groups and movements from ADL’s Glossary of Extremism. In the 40 cases where ads were displayed, X ran the ads on both mobile and desktop.2

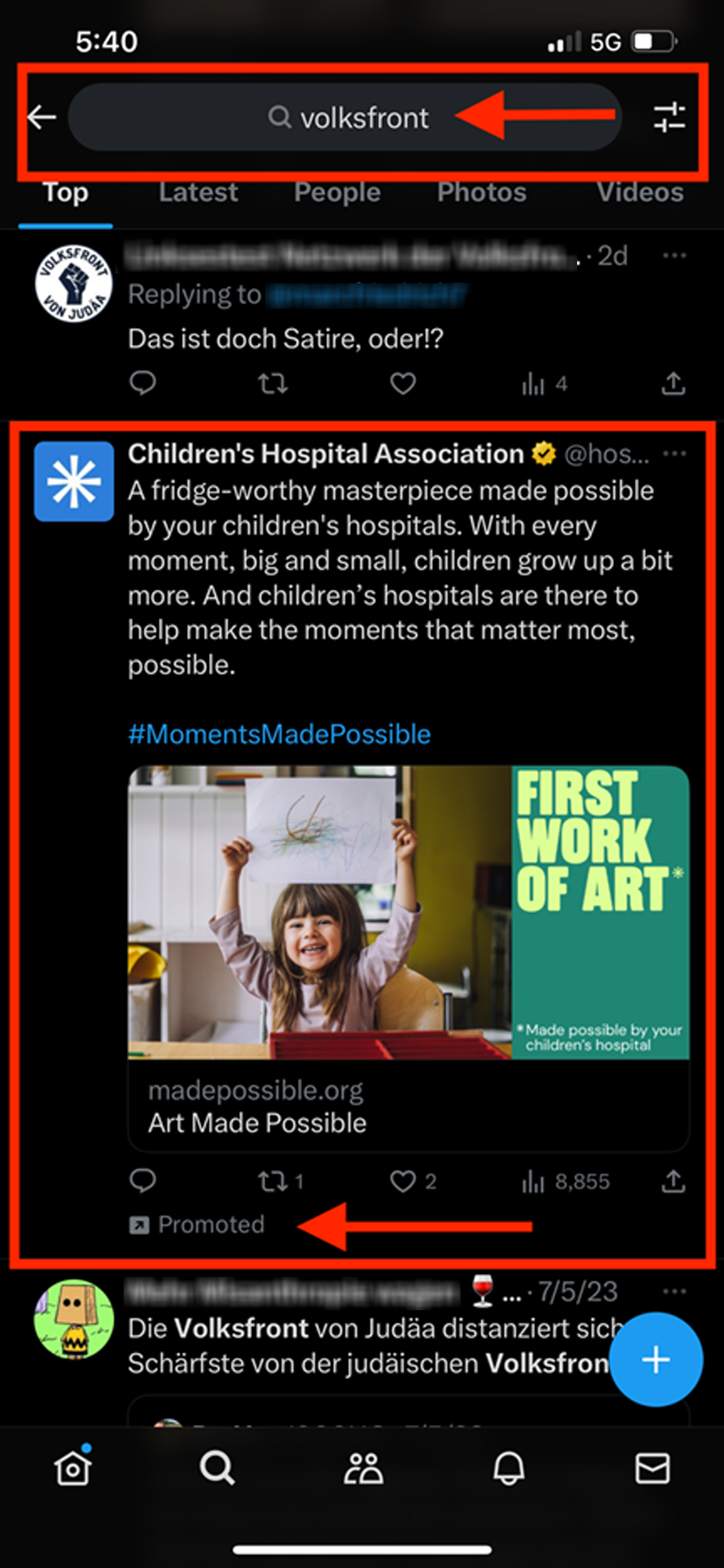

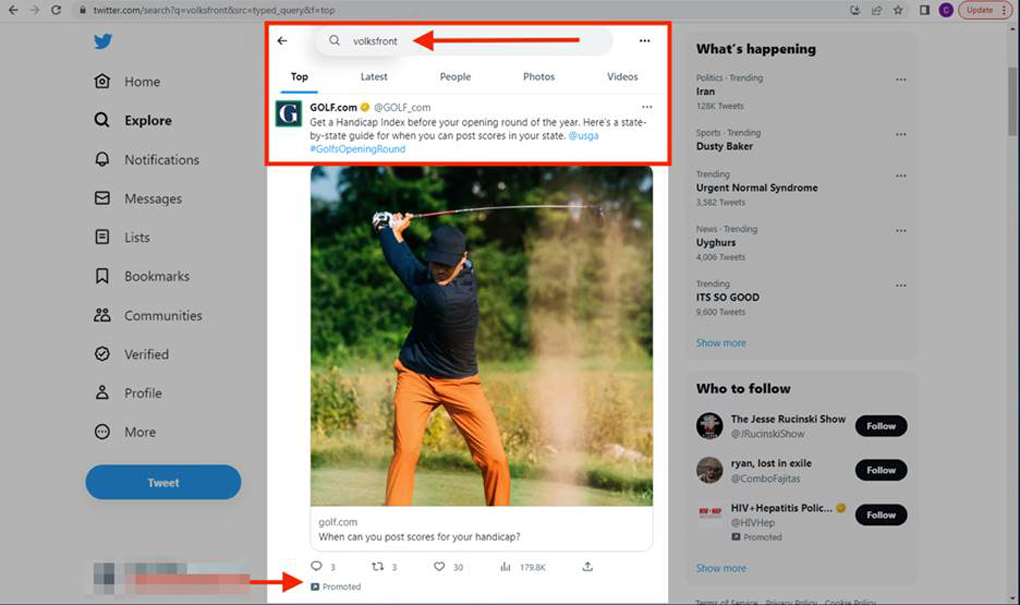

For example, when we searched X for Volksfront, a combination racist skinhead gang and neo-Nazi group created by prison inmates, the platform displayed an ad for the Children’s Hospital Association in the mobile results and an ad for the website Golf.com in the desktop results.

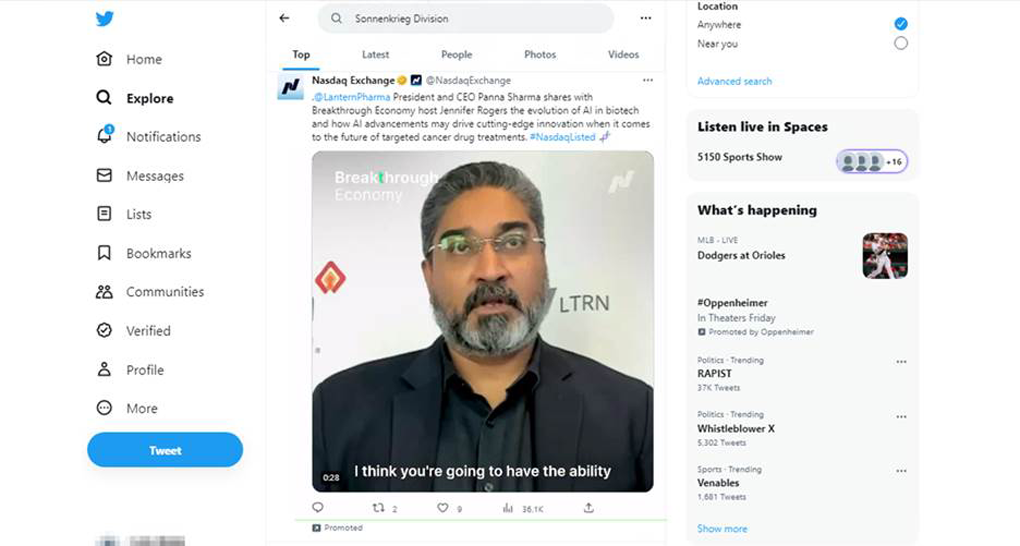

When we searched X for Sonnenkrieg Division, a white supremacist organization that launched online in the United Kingdom in 2018, the desktop results included an ad for the Nasdaq Exchange, and the mobile results included an ad for Local Chevy Dealers.

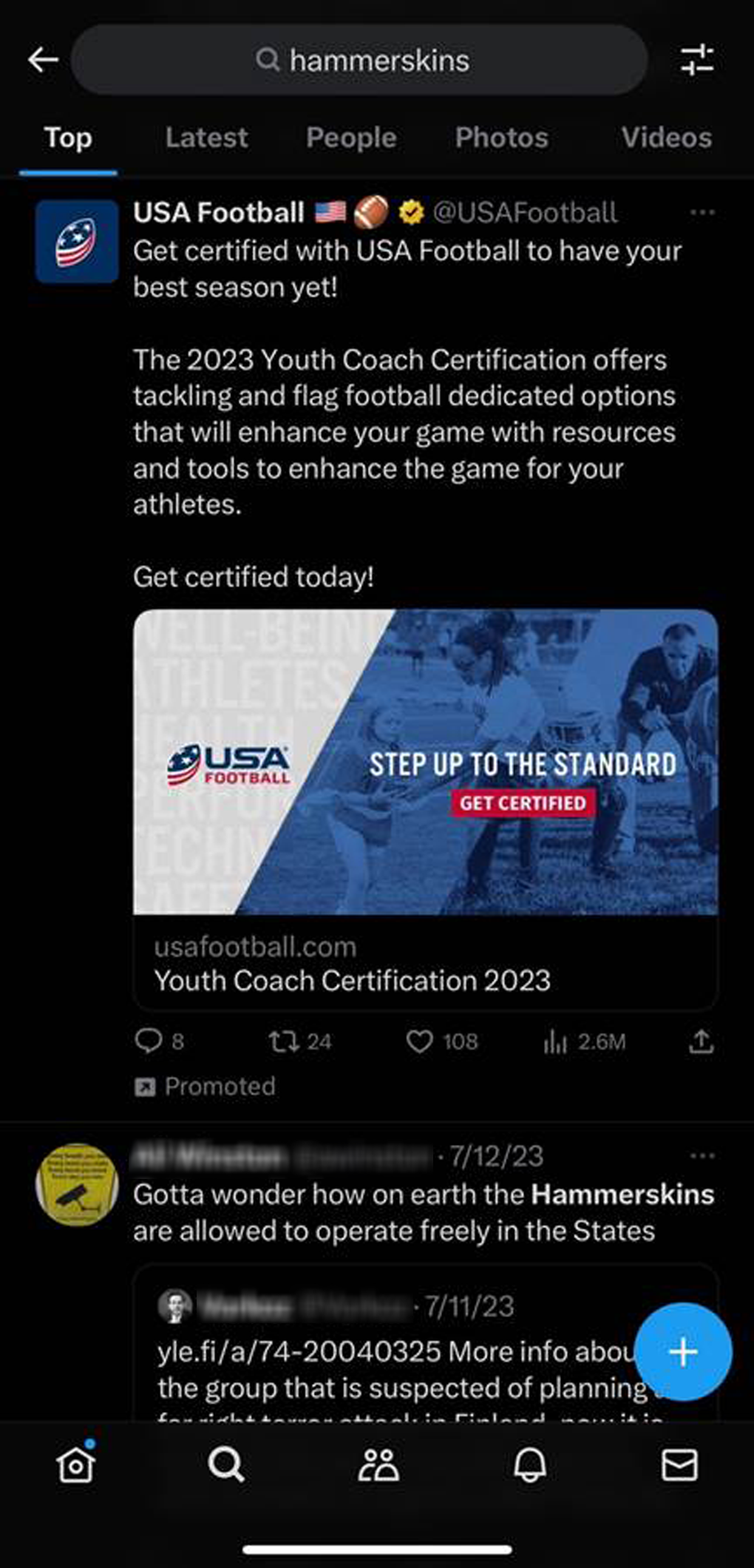

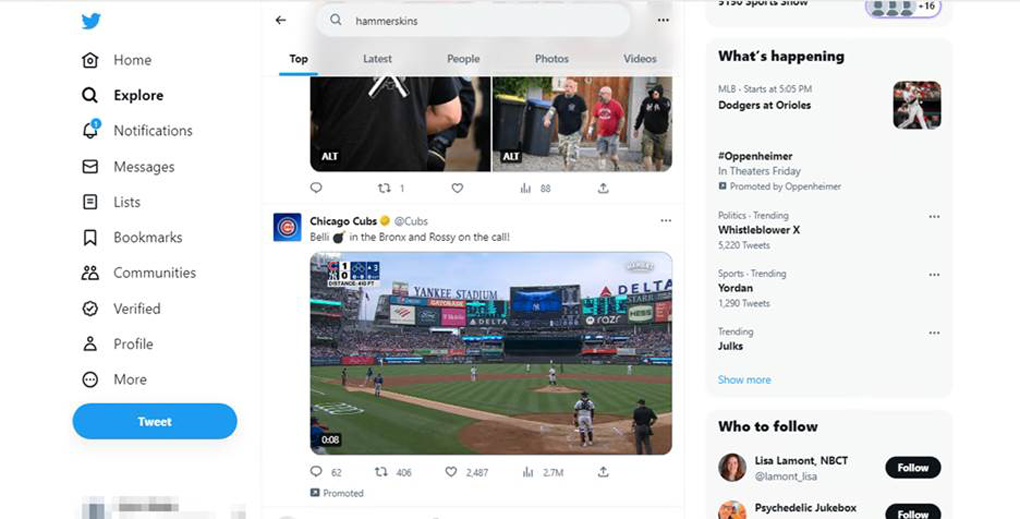

It was a similar situation with a search on X for Hammerskins, one of the oldest hardcore racist skinhead groups in the U.S. with a history of violence. The mobile search displayed an ad for USA Football, the national governing body for amateur football, and the desktop search surfaced an ad for the Chicago Cubs baseball team.

Meanwhile, a mobile X search for Feuerkrieg Division, an international neo-Nazi group that advocates for a race war and holds some of the white supremacist movement’s most extreme views, included an ad for USA Today in the results; the same search on desktop yielded an ad for the Wall Street Journal.

Instagram and Facebook

Instagram announced the ability to place ads in search results in May 2023. The feature, which is available only on the mobile app and not on desktop, pushes ads to users when they scroll through Instagram posts that come up in a search. The ads have a “Sponsored” label to distinguish them from regular posts. We searched Instagram’s mobile app for the 130 hate groups and movements from ADL’s Glossary of Extremism and found that only four searches produced ads in the results. That is roughly one-sixteenth as many as on YouTube (67) and one-tenth as many as on X (40).

It is unclear why the Instagram hate group searches produced fewer ads. But Instagram parent company Meta has addressed this issue before. In August 2022, Meta-owned Facebook said it began “resolving” an issue of ads appearing in search results for banned hate organizations after an investigation by TTP covered by the Washington Post. Meta says its policies “protect your business from appearing next to harmful content,” and the company touts its relationship with the Global Alliance for Responsible Media (GARM), an advertiser initiative that works to address harmful content on digital platforms.

Still, two of the four ad-generating searches on Instagram did raise potential reputational concerns for a major brand. We found that searches for Odinism and Bound for Glory, a white power music band, produced ads for Pottery Barn in the results. (Bound for Glory could also refer to a book and movie about singer-songwriter Woody Guthrie.)

Facebook performed even better. When we entered the 130 hate groups and movements into Facebook’s search bar, on both mobile and desktop, just one search (a mobile search for Odinism) was monetized with advertising.

The findings suggest that Meta may have taken steps to restrict advertising on searches for hate organizations. Still, a clear understanding of why Meta performed differently than the other platforms requires more transparency from the companies about their monetization tools.

YouTube’s Auto-Generated Videos

We conducted a second review focused specifically on whether YouTube is monetizing auto-generated hate channels and videos.

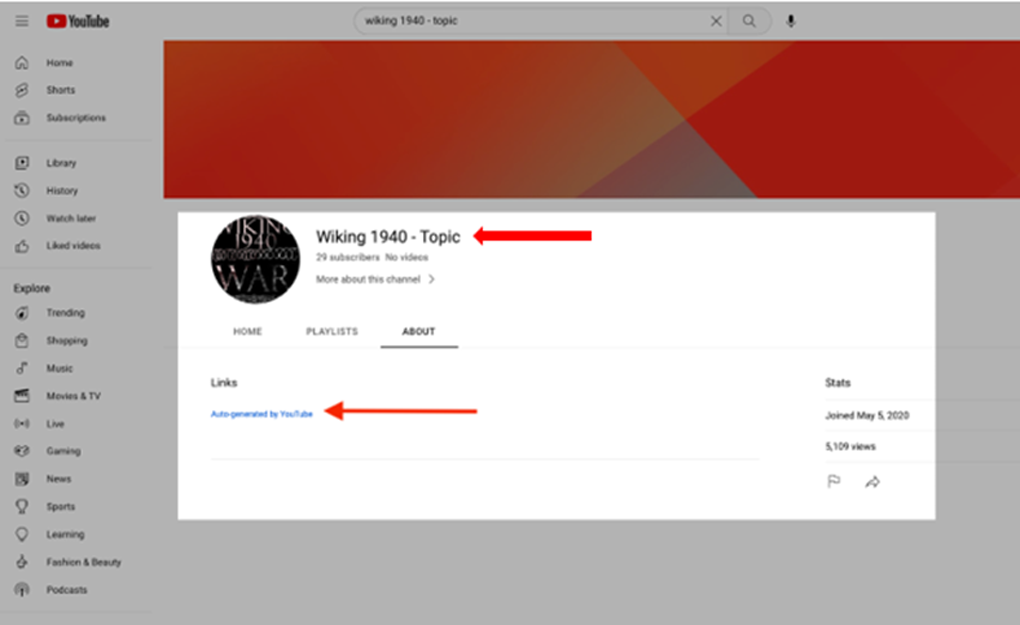

As ADL and TTP found and explained in a previous report, YouTube automatically generates “topic” channels for artists who have a “significant presence” on the platform, and even creates videos for them. It is not clear why YouTube does this. The auto-generated videos feature a song recording and a static image of album art. Concerningly, YouTube auto-generates this kind of content for white supremacist bands that violate the platform’s hate speech policy.

For this review, we examined whether advertisements appeared with YouTube’s auto-generated content for white supremacist bands.

We compiled a list of white supremacist bands that have been identified in reports by ADL, the Southern Poverty Law Center, and media outlets. We then typed the name of each band into YouTube’s search bar along with the word “topic,” which YouTube uses to mark channels that it has auto-generated. These topic channels frequently include videos that are also auto-generated by YouTube.

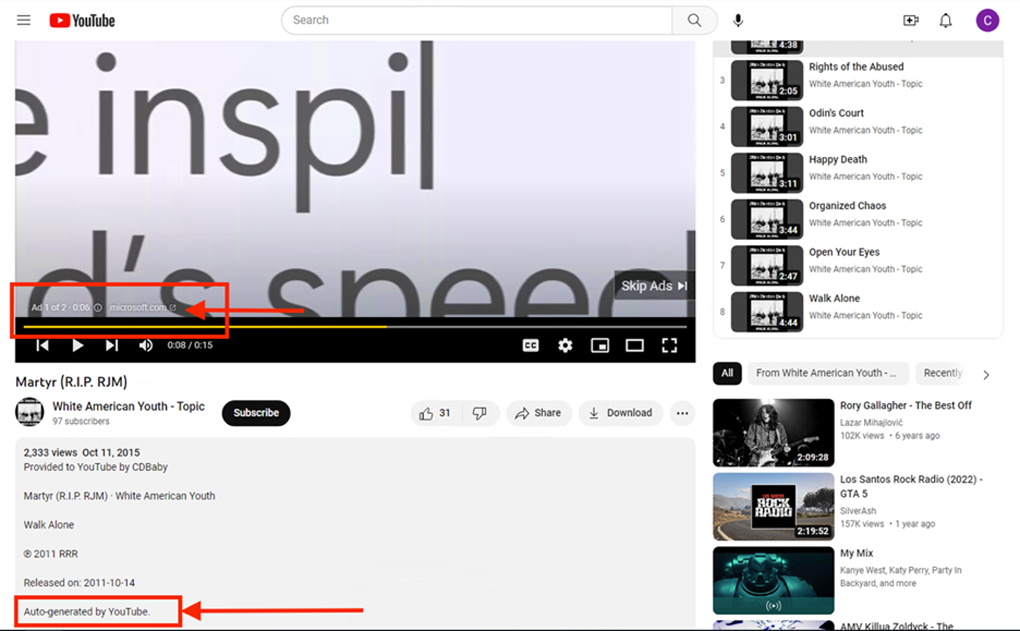

For example, YouTube ran a 15-second ad for Microsoft before an auto-generated video for the song “Martyr (R.I.P. RJM)” by the white power punk band White American Youth. The “RJM” in the title appears to refer to Robert Jay Mathews, the leader of a white supremacist terrorist group who was killed in a shootout with the FBI in the 1980s. The song comes from the band’s album, which contains tracks titled “White Pride,” “White Power,” and “AmeriKKKa For Me.” The video, and the channel on which it appears, are labeled as auto-generated by YouTube.

It is not clear why YouTube would generate content for White American Youth, especially as it appears that YouTube has previously taken down other content associated with the band. A once-active link to a YouTube playlist for White American Youth, which was cited in a 2017 media article, now leads to a screen that states, “This video has been removed for violating YouTube's policy on hate speech.”

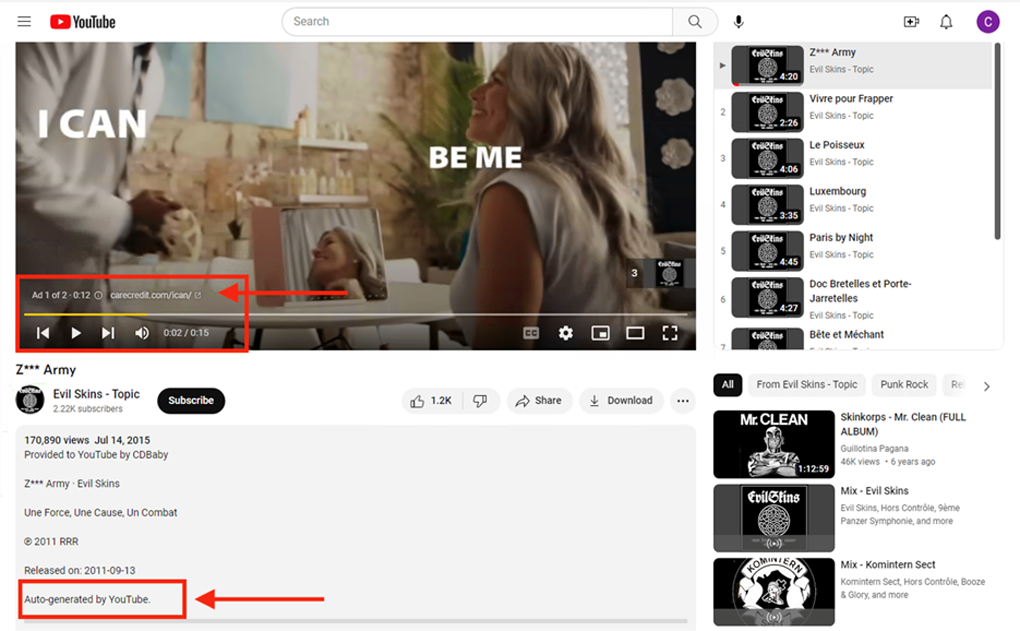

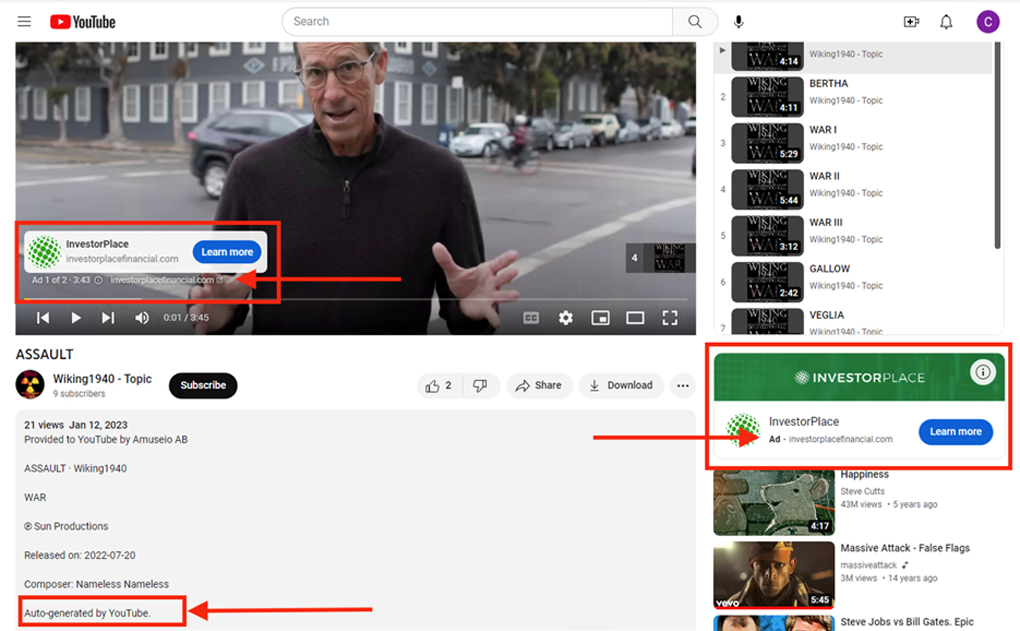

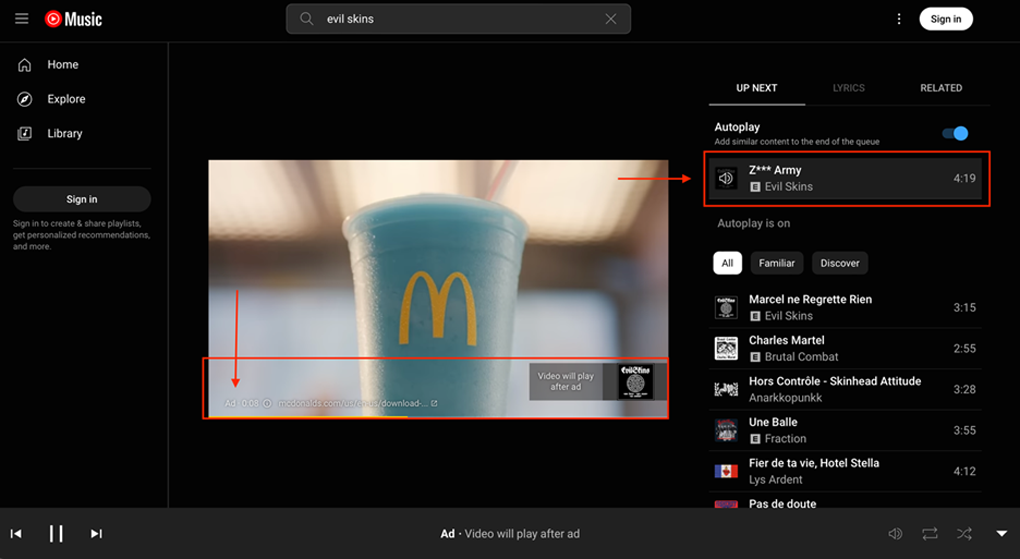

YouTube also ran a 15-second ad for CareCredit, a health care credit card, on an auto-generated video for the song “Zyklon Army,” a reference to the Zyklon B gas that Nazis used for mass murder in concentration camps during the Holocaust. (The song’s title was partially blanked out, appearing as “Z*** Army.”) The song, by Evil Skins, which has been described as a racist skinhead and neo-Nazi band, opens with a recitation of “Sieg Heil,” a phrase adopted by the Nazi Party that means “Hail Victory” in German.

The video, which had 169,000 views, appeared on a YouTube-generated “topic” channel for Evil Skins that was created in 2011. YouTube created the channel and video even though they appear to violate YouTube’s policies on hate speech, which ban content that promotes hatred against individuals or groups based on attributes like race, ethnicity, religion, and nationality.3 YouTube says that its Community Guidelines apply to “all types of content” on the platform.

YouTube refers to the above auto-generated videos—which feature the song recording and a static image of album art—as “art tracks.”

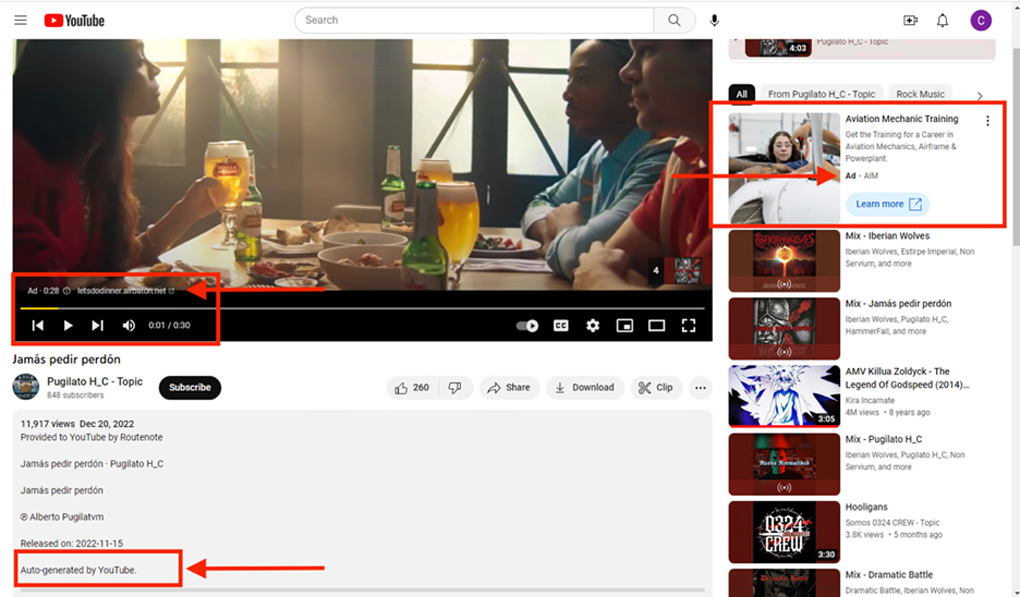

The platform does not disclose whether it shares revenue from ads that run on these auto-generated art tracks with the bands that created the music. But YouTube often appeared to monetize these videos in multiple ways. For example, YouTube ran a 30-second, non-skippable video ad for Stella Artois beer on an apparently auto-generated art track from the Spanish white supremacist band Pugilato H_C, formerly known as Pugilato NSHC. Non-skippable video ads require users to watch them in full before they can see the video content. YouTube also ran an ad for the Aviation Institute of Maintenance in the same track’s “watch feed” of recommended videos.

The review found YouTube running ads on other auto-generated content for white supremacist bands:

-

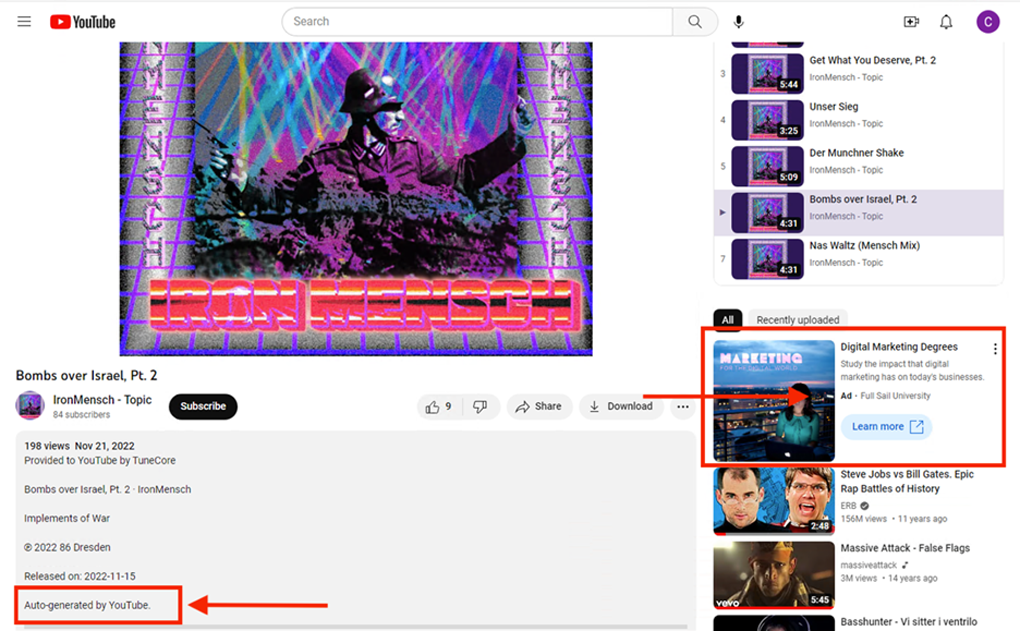

YouTube displayed an ad for Full Sail University, a for-profit vocational school in Florida, on an auto-generated video for the song “Bombs Over Israel, Pt. 2” by the band IronMensch. The band is part of the Fashwave (a mashup of “fascism” and “vaporwave”) genre of white supremacist music. The cover art used for the auto-generated video includes a colorized image of a dancing soldier in a Nazi SS-style uniform.

-

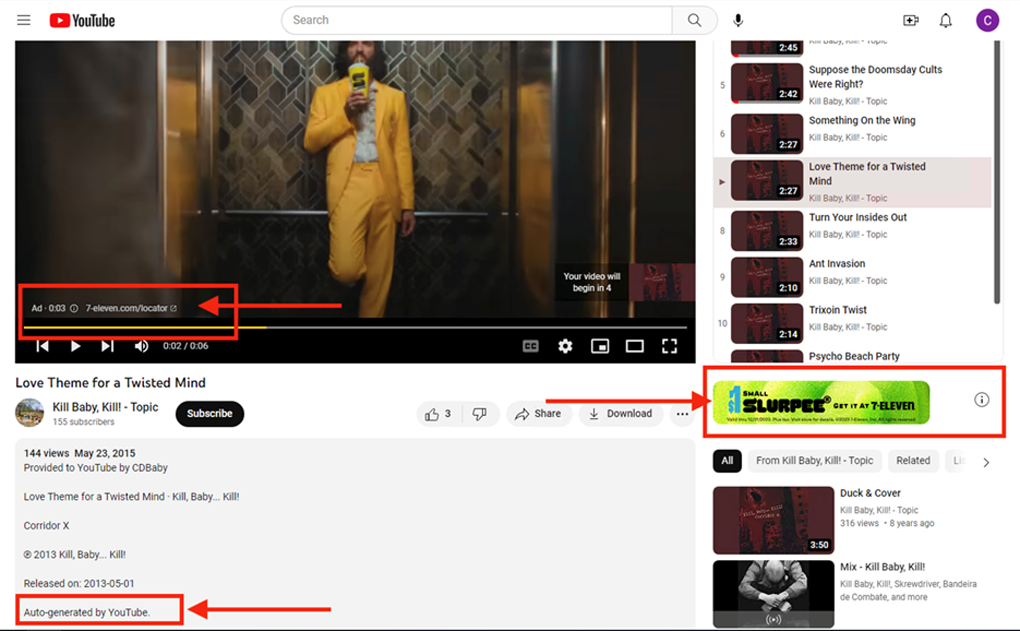

YouTube ran a non-skippable video ad for the convenience store chain 7-Eleven on an auto-generated video for the song “Love Theme for a Twisted Mind” by the band Kill Baby, Kill!, which the Southern Poverty Law Center has categorized as racist.

-

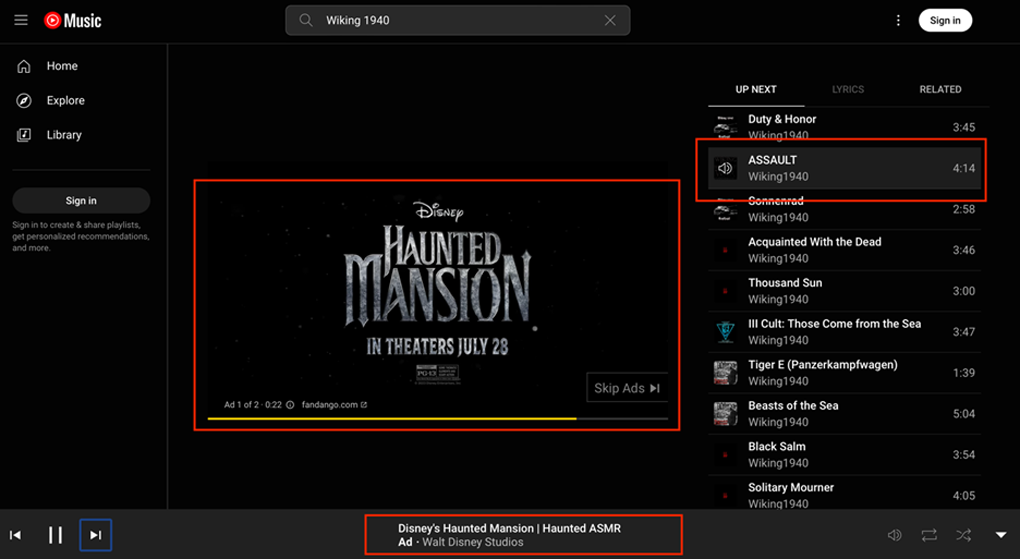

YouTube also ran a pair of ads, including one for financial advisory firm InvestorPlace, before an auto-generated video for the song “ASSAULT” by the National Socialist black metal band Wiking 1940.

These findings raise questions about YouTube’s enforcement of its policies around music. The platform has been criticized in the past for giving a megaphone to white power bands that promote hate and racism through their lyrics.

Our review found that YouTube is serving ads alongside some auto-generated hate band content on both its flagship site and YouTube Music, a separate music streaming service launched in 2018 to compete with Spotify and Apple Music.

YouTube Music offers a free, ad-supported streaming service and a paid version with no ads, currently $10.99 per month. Of note, on YouTube Music, these hate band channels and videos are not labeled as auto-generated, even though they are identical to content that is marked as auto-generated on the main YouTube site.4

The YouTube-generated video for “Zyklon Army” provides a good illustration of this dynamic. As noted previously, YouTube served an ad for CareCredit on the video on the main YouTube site. We found that YouTube also served an ad for McDonald’s on the video on YouTube Music. The McDonald’s ad, which was non-skippable and promoted the fast-food chain’s frozen Fanta drinks, ran before the video, which kicks off with chants of “Sieg Heil” and heavy metal guitar music.

Another example is the auto-generated video for the song “ASSAULT” by the National Socialist black metal band Wiking 1940. As noted previously, YouTube ran an ad for InvestorPlace ahead of the video on the main YouTube site. We found that YouTube also displayed a video ad for Disney’s “Haunted Mansion” movie ahead of the same track on YouTube Music.

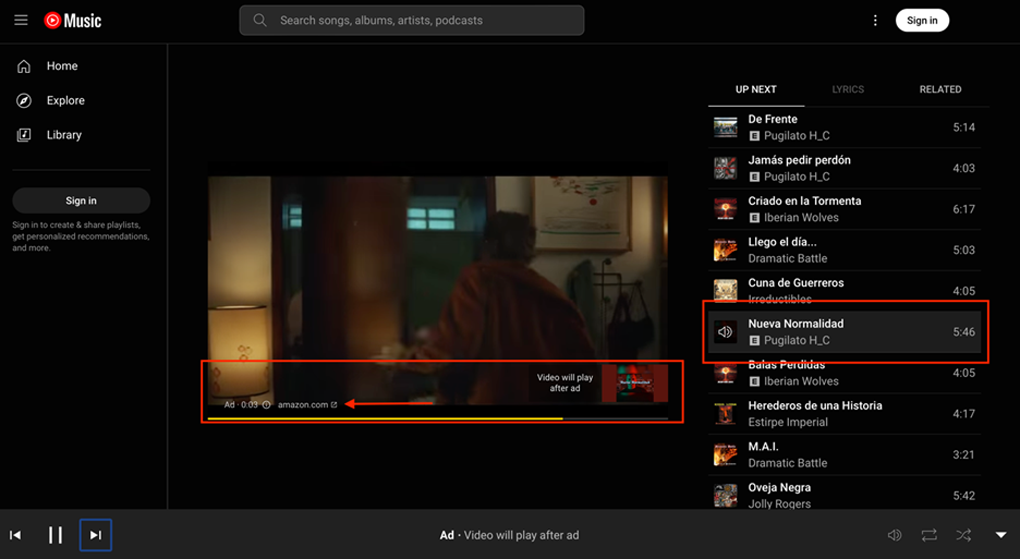

Additionally, we found that YouTube Music ran a non-skippable Amazon ad before an auto-generated track for the song “Nueva Normalidad” (“New Normal”) by the Spanish white supremacist band Pugilato H_C. The song’s lyrics speak of an “obsession of the masters of the bank to destroy the white race in the West,” according to one music website. This appears to be a reference to baseless conspiracy theories about Jewish control over financial systems and the “Great Replacement” of white people by non-white immigrants.

Recommendations

These investigations raise a host of questions about how the major social media platforms, particularly YouTube and to a lesser extent X, are profiting from hate groups’ content through ad placement. These platforms say they are dedicated to rooting out hate speech. Unfortunately, the machinery of their advertising business indicates otherwise.

Based on our findings, here are recommendations for industry and government:

Industry

- Improve Ad Placement Features. Platforms must ensure advertisements are not placed in close proximity to hateful or antisemitic content. First, to the extent content that is tied to advertising violates platform policy, it should not be on the platform to begin with. Second, ad placement near hateful or antisemitic content is a risk to brand safety for advertisers. Third, ad placement may at times provide opportunities for hateful content creators to benefit from profit-sharing agreements with major platforms.

- Stop Auto-Generating Hate. Platforms should not be auto-generating hate content. If platforms are unable to program their tools to distinguish hateful from innocuous content before auto-generating it, they should pull down the auto-generation feature until the risk of creating such content is mitigated. There is no reason platforms should be proactively producing hate-filled or antisemitic content.

- Share More Information About Advertising Policies. Many of the monetization policies and practices on platforms are opaque or unclear. Tech companies should be more transparent about revenue-sharing practices and policies around ad placement. In addition to providing updated information about their policies, tech companies should conduct risk assessments and engage in red teaming exercises to ensure that their advertising practices are safe for brands and users. They should share information about these exercises with advertisers and researchers. Increasing transparency around advertising product features, policies, and practices would allow advertisers, regulators, researchers, civil society, and other stakeholders to better understand the impact of monetizing hate and to improve the ad tech ecosystem.

Government

- Mandate Transparency Regarding Platform Advertising Policies and Practices. We need more information about how platforms monetize content and what policies drive advertising decisions. Government-mandated disclosures are an important first step to understanding how to protect consumers and prioritize brand safety. Mandates, however, can and should be crafted in a way that protects private information about both advertisers and social media users. California has been successful in passing transparency legislation related to content moderation policies and practices. Still, Congress must pass comprehensive transparency legislation that specifically includes disclosures related to advertising policies and metrics.

- Update Section 230. Congress must update Section 230 of the Communications Decency Act to fit the reality of today’s internet. Section 230 was enacted in 1996, before today's social media and search platforms existed. Decades later, however, it continues to be interpreted to provide billion-dollar platforms with near-blanket legal immunity for user-generated content. We believe that by updating Section 230 to better define what type of online activity should remain covered and what type of platform behavior should not, we can help ensure that social media platforms more proactively address how they monetize content. Congress should clarify the specific kinds of content that fall under Section 230’s current liability shield. If user-generated or advertising content plays a role in enabling hate crimes, civil rights violations, or acts of terror, victims deserve their day in court.

- Regulate Surveillance Advertising. We urge Congress to focus on how consumers and advertisers are impacted by a business model that optimizes for engagement. Congress should enact legislation that ensures companies cannot exploit consumers' data for profit, a practice that too often can result in monetizing antisemitism and hate. We know companies’ hands aren’t tied, and that they have a choice in what content they prioritize. Congress should pass the Banning Surveillance Advertising Act. Legislation like this is crucial to breaking the cycle and, ultimately, de-amplifying hate. ADL applauds the reintroduction of this vital legislation to disrupt Big Tech’s weaponization of our data.

Footnotes

1 Of the 67 search terms that produced in-feed ads, 41 produced ads only on mobile, 24 produced ads on both mobile and desktop, and two produced ads only on desktop.

2 At the time of the experiment, the ads were identifiable by a small “Promoted” label in the bottom left corner. News reports indicate the platform later began using the label “Ad” in the upper right corner. A mid-September spot check found that X used the “Promoted” label on mobile and the “Ad” label on desktop.

3 ADL and TTP first identified the auto-generated video for “Zyklon Army” in a previous report published in August 2023. Since that time, YouTube has removed the video, but the auto-generated “topic” channel for the band, Evil Skins, and the other auto-generated videos in that channel remained on YouTube as of mid-September. Some of those videos were monetized with advertising.

4 The hyperlinks for auto-generated artist channels and videos on YouTube Music are nearly identical to those on the main YouTube site, though the YouTube Music links start with “music.” For example, the channel for Evil Skins on YouTube is “youtube.com/channel/UCexG-ipS00OL2TmB34g6rSg,” while the channel on YouTube Music is “music.youtube.com/channel/UCexG-ipS00OL2TmB34g6rSg.”