This Gen AI illustrative image was made using Canva’s Magic Media

Co-produced with Builders For Tomorrow

ADL has identified extensive issues with antisemitic and anti-Israel bias on Wikipedia in multiple languages. These issues include 1) a coordinated campaign to manipulate Wikipedia content related to Israel, the Israeli-Palestinian conflict, and related topics, in which a group of editors systematically evade Wikipedia’s rules to shift balanced narratives toward skewed ones, spotlighting criticism of Israel and downplaying Palestinian terrorist violence and antisemitism; and 2) pro-Hamas perspectives informing Arabic-language Wikipedia content on Israel and the Israeli-Palestinian conflict.

ADL has found clear evidence that a group of at least 30 editors circumvent Wikipedia’s policies in concert to introduce antisemitic narratives, anti-Israel bias, and misleading information.

On average, these 30 editors were at least twice as active as comparable groups of editors, based on total edits made over the past 10 years.

In addition to this multiyear campaign by bad-faith editors to revise Wikipedia’s content on Israel and the Israeli-Palestinian conflict, ADL found evidence of biased and extremist content in Arabic-language Wikipedia. Wikipedia’s rules are not applied consistently outside of some English-language pages, allowing biased pro-Hamas content in the Arabic-language edition.

Pages related to Hamas in Arabic glorify the Palestinian terrorist organization and perpetuate pro-Hamas propaganda, while failing to follow Wikipedia’s rules for neutrality.

This report makes clear that Wikipedia needs to do far more to address anti-Jewish and anti-Israel bias and coordination. Until it has done that, those who rely on Wikipedia – from Google Search to large language models like ChatGPT – must deprioritize Wikipedia’s content on issues related to Jews, Israel and the Middle East conflict so that they do not perpetuate this bias.

Wikipedia has the distinction of being the most visited non-commercial site on the web, with approximately 4.4 billion visitors per month in 2024 (Statista). Since its origins as a decentralized encyclopedia produced and maintained by volunteer users, it has earned a reputation for reliable information. With the advent of AI tools based on large language models (LLMs), its extensive collection of entries now informs the outputs of many generative AI applications such as Google Gemini and ChatGPT.

Despite Wikipedia’s efforts to ensure neutrality and impartiality, malicious editors frequently introduce biased or misleading information, which persists across hundreds of entries, if not more. Unlike commercial social media platforms built on user-generated content, Wikipedia is not centrally managed. There is no top-down content moderation or uniform enforcement of its rules or principles. Wikipedia’s non-profit foundation, the Wikimedia Foundation, oversees Wikipedia but does not govern its content or moderate the site. Instead, volunteer contributors (“editors”) collaborate to draft and edit content. These volunteers determine which edits to keep or reject, according to principles of decentralized decision-making and self-governance. A two-tier system of elected moderators, administrators and arbitrators, monitors the site, sanctions users, and enforces policy decisions. There are millions of regular users on Wikipedia, but only 840 administrators and 15 arbitrators. Wikipedia has not developed large-scale or advanced technical solutions, such as automated detection, to enforce its policies against bias or harassment.

Wikipedia’s decentralized model, dependence on human review, and rudimentary tools for analysis limit its ability to address widespread abuse or manipulation. Scale remains one of the primary challenges for Wikipedia in mitigating bias. Prior research shows that Wikipedia does not have the capacity to apply its principle of “neutral point of view” (NPOV) at scale (Hube and Fetahu 2018). Only a small percentage of entries meet this standard; the 2018 study found tens of thousands of entries still awaiting review at the time of publication (only new pages formally submitted are reviewed, while thousands of new pages created directly by confirmed editors receive only a cursory review afterward). Less urgent or newsworthy entries are therefore more vulnerable to manipulation. Core, highly visible articles tend to attract more attention (and sometimes, more conflict), and are therefore often more thoroughly vetted, requiring a higher bar to change, although even these can be manipulated with concerted effort. Current event pages related to breaking news typically receive sufficient attention from administrators, but once the news cycle moves on, administrators do not have the capacity to monitor these pages and they become vulnerable to manipulation. Bad-faith editors also use tactics such as bullying and intimidation to overwhelm good-faith editors and push through edits. A 2023 analysis of user “talk” pages found that toxic comments were associated with reduced participation by volunteers, who became less active and were at risk of leaving altogether (Smirnov et al. 2023).

Although Wikipedia’s policies and processes should ensure that entries are impartial and high-quality, in practice, neutrality is difficult to maintain and malicious actors can game these systems. More details on Wikipedia’s processes and their limitations can be found in the appendix to this report.

The centrality of Wikipedia content to Google search and knowledge panels, and more recently, to LLMs, means that inaccurate or misleading content is included or featured in search results and other applications, such as AI chatbots. Google has been incorporating Wikipedia articles into its “knowledge panels” since at least 2012 and favors Wikipedia content in its search results. Wikipedia pages that might not have had much public visibility, and which are less thoroughly vetted or secured than high-profile ones, can be equally favored by auto-indexing, despite their less reliable content.

This report lays out findings from an extensive investigation into deceptive, manipulative practices, in violation of Wikipedia’s policies, targeting entries related to Jews and Israel, as well as bias and extremism in Arabic-language pages related to Hamas. Prior investigations have uncovered similar campaigns to seed bias and disinformation, including efforts by some of these same editors to coordinate large-scale anti-Israel and pro-Iranian revisions off Wikipedia. These efforts were largely coordinated on Discord and intensified after the Hamas-led October 7, 2023, attack on Israel. This campaign was further supported by the advocacy group Tech for Palestine, which appears to have recruited volunteers to make such edits systematically, reshaping the narrative on Israel and the Israeli-Palestinian conflict to promote pro-Hamas, pro-terrorist views, until one lead editor was brought before Wikipedia’s arbitration committee (ArbCom) and received an indefinite site ban.

Recently, ArbCom banned multiple editors from the Israeli-Palestinian topic area (a “topic ban,” which is narrower than a comprehensive site-wide ban). A World Jewish Congress report on Wikipedia has also detailed ongoing issues of anti-Israel and antisemitic bias on English-language Wikipedia, such as Holocaust revisionism. In one instance, a group of Polish editors appears to have engaged in coordinated efforts to insert a Polish nationalist narrative into articles on the Holocaust in Poland, downplaying Polish responsibility and blaming Polish Jews. Separately, there have also been similar campaigns to erase Native American history.

As part of this report, we identify key recommendations for Wikipedia to adopt so that it can safeguard against these kinds of campaigns and address them effectively while ensuring that editors adhere to its standards for neutrality, accuracy and reliability.

ADL has found evidence of bad-faith coordination among actors editing and revising pages related to Israel and the Israeli-Palestinian conflict, to amplify criticism of Israel and minimize evidence of violence and antisemitism by Palestinian terrorist groups. Wikipedia prohibits editors from privately coordinating to make changes to pages, both on and off Wikipedia. Public deliberation is encouraged, however, to reach consensus and ensure impartiality. We conducted a large-scale investigation of Wikipedia pages and editors (Wikipedia’s volunteer contributors), collecting all the contributions from four sets of 30 highly active editors. We collected data going back to 2002 (the earliest date one of the bad-faith editors joined), across multiple subjects. Based on their edits, we identified a group of 30 editors whose activity suggests a coordinated campaign to modify pages on Israel and the Israeli-Palestinian conflict, often with false or misleading information. We compared their activities to those of three other groups of editors and observed far higher rates of activity and collaboration, including usage patterns that suggest some editors engage in this work full-time.

We identified the group of 30 editors who appear to be in close coordination by calculating the minimum time between edits made on pages related to Israel, Jews, or the Palestinians, such as the 1948 Palestine War and Israeli war crimes articles. We then analyzed all pages on which they commented or were mentioned, including backend discussion (“talk”) pages and user talk pages (discussion pages for individual editors).

Once we identified this set of 30 editors, we assembled comparable sets of editors to evaluate whether the suspicious group’s activities were typical. We selected several Wikipedia pages likely to have similarly active communities of editors, including the 30 most active editors who contribute to the main Israel-Hamas war page (now called Gaza war) and the 30 most active from the China-United States relations page, plus 30 random editors from Wikipedia’s overall set of 5,000 most active editors across all pages (the “Pro editors”). The Israel-Hamas war page editors engaged with similar topics as the suspicious editors, but appear to do so genuinely: they do not make nearly as many edits, nor do they appear to be collaborating in violation of Wikipedia’s rules. The 30 top editors we suspect of coordinating to bias Wikipedia content on Israel and the Israeli-Palestinian conflict were more than twice as active on average as these other groups of editors, based on total edits made over the past 10 years.

Chart 1: Bad-faith editors make more edits than other highly active editors

Chart 2: Average edits per day for the top 5 editors per group

Additionally, this high degree of activity among the bad-faith editors increased substantially following the October 7, 2023, attack on Israel.

Chart 3: Number of edits increased most among bad-faith editors since October 7, 2023

Along with unusually high editing activity among the bad-faith editors, we found strong evidence of coordination in two primary areas: communication within the group and tandem editing.

Group communication

To measure group communication, we counted messages on talk pages over the past 12 months in which a member of a group mentioned at least one other member of the same group. We found 1,012 such instances among the 30 bad-faith editors: more than four times the 234 among the 30 most active editors on the Israel-Hamas war page, 28 times the 36 among the China-US relations editors, and 168 times the six among the Pro editors. This frequency suggests these editors were in much closer communication than is typical on other Wikipedia pages, even among editors who work on similar content.
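To make the group-communication measure concrete, here is a minimal Python sketch of one way such a count could be computed. It is not ADL’s code: the `TalkMessage` record, the `count_in_group_mentions` helper, and the rule that a “mention” is a `[[User:Name]]` link or an `@Name` ping are all hypothetical assumptions, since the report does not publish its matching rules.

```python
import re
from dataclasses import dataclass


@dataclass
class TalkMessage:
    author: str  # username of the editor who posted the message
    text: str    # raw wikitext of the message


def _mentions(text, name):
    # Assumed mention rule: a [[User:Name]] or [[User:Name|...]] link, or an @Name ping.
    escaped = re.escape(name)
    pattern = rf"\[\[User:{escaped}(\||\]\])|@{escaped}\b"
    return re.search(pattern, text) is not None


def count_in_group_mentions(messages, group):
    """Count messages posted by a group member that mention at least one
    other member of the same group (hypothetical reconstruction)."""
    group = set(group)
    count = 0
    for msg in messages:
        if msg.author not in group:
            continue  # only messages written by group members are counted
        others = group - {msg.author}
        if any(_mentions(msg.text, name) for name in others):
            count += 1
    return count
```

Applied separately to each group’s talk-page messages, a count like this yields one figure per group, and the comparisons in the text are simple ratios of those figures (for example, 1,012 / 234 is about 4.3).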

Chart 4: Messages among editors in the past 12 months

Tandem editing

Next, we analyzed the time interval between an edit (such as a cut or addition) by one editor in a group and an edit to the same page by another editor in that group. Again, the 30 suspicious editors stood out, although in this case we saw similar activity among the Israel-Hamas war editors across all their edits, likely due to the Israel-Gaza conflict.

The bad-faith editors made 71,855 edits within an hour of each other on the same page over the past 10 years; the Israel-Hamas war page editors made 45,925, the China-US relations editors 4,961, and the Pro editors 486. In the past 12 months, the bad-faith editors made 19,605 such edits, the Israel-Hamas editors 16,063, the China-US editors 815, and the Pro editors only 42 (the Pro editors group is a random sample of the 5,000 most active Wikipedia editors, so the lack of overlap is not surprising). In the past year, the Israel-Hamas war editors’ tandem editing came closer to that of the bad-faith editors, but over the longer period, the 30 bad-faith editors were much more likely to edit in tandem.
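The tandem-editing measure can be sketched the same way. The Python below is an illustrative assumption, not the report’s method: `count_tandem_edits` is a hypothetical helper that counts each edit falling within one hour of an edit by a different member of the same group on the same page. The report may instead have counted pairs of edits or used a different windowing rule.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def _has_partner(page_edits, i, ts, editor, window):
    # Walk backward, then forward, stopping once the time gap exceeds the window.
    for step in (-1, 1):
        j = i + step
        while 0 <= j < len(page_edits) and abs(page_edits[j][0] - ts) <= window:
            if page_edits[j][1] != editor:
                return True  # a different group member edited nearby
            j += step
    return False


def count_tandem_edits(edits, window=timedelta(hours=1)):
    """Count edits made within `window` of an edit by a different editor
    on the same page. `edits` is an iterable of (page, editor, timestamp)
    tuples, all by members of one group (hypothetical helper)."""
    by_page = defaultdict(list)
    for page, editor, ts in edits:
        by_page[page].append((ts, editor))

    tandem = 0
    for page_edits in by_page.values():
        page_edits.sort()  # chronological order within each page
        for i, (ts, editor) in enumerate(page_edits):
            if _has_partner(page_edits, i, ts, editor, window):
                tandem += 1
    return tandem
```

Because each page’s edits are sorted once and the scan stops as soon as the gap exceeds the window, this stays practical even for groups with tens of thousands of edits.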

Chart 5: Edits made in tandem in the past 10 years

Chart 6: Edits made in tandem in the past 12 months

The coordination is especially evident when looking at both front-facing (“main”) pages and internal discussion (“talk”) pages for editors. Main pages are the article pages the general public views, while talk pages are where editors propose and discuss changes. Both are publicly viewable, as are past versions of main pages. On both main and talk pages, editors from this group were much more likely to make edits to the same pages and to make edits within the same period of time as one another, suggesting a high degree of coordination. The short time between edits raises the likelihood that these edits are coordinated off Wikipedia, potentially on sites like Discord.

Chart 7: Number of edits by at least 2 editors to the same page highest among bad-faith editors across main and talk pages

Time between edits

Looking even more granularly, the bad-faith editors also had the shortest time between edits of any group. The average time between edits for this group was one-tenth that of the Israel-Hamas editor group and 1/4,294 that of a random sample of top Wikipedia editors. This activity on talk pages is particularly concerning because these pages are where editors argue for their changes; the suspicious editors use talk pages to bully and intimidate others, and six were topic-banned for doing so.

Chart 8: Minimum time between edits (in hours) by group of editors

Taken together, this high degree of communication and tandem editing suggests that this group of editors is working together, likely off-site and potentially in violation of Wikipedia’s policies, to edit pages related to Israel, Jews, and the Palestinians.

Harassment and intimidation

Among this group of 30 bad-faith editors, a smaller core regularly engaged in harassment and bullying against other editors, often spending more time reporting other edits than actually editing. Six were ultimately banned from posting to pages about Israel and the Israeli-Palestinian conflict as a result. This harassment took place on talk pages as well as on administrator and arbitration notice boards. One editor, for example, spent more time editing article talk pages (44% of their edits) than actually editing article pages (38% of edits). Others engaged regularly in explicitly hostile attacks, denigrating other editors and berating them, such as calling their edits “bullshit” and using “Zionist” as a slur.

Along with direct attacks and bullying, these bad-faith editors used frequent reporting as a means to harass and intimidate. One editor made 37% of their total edits to Wikipedia admin pages (over 13,000 edits in total), more than 5,000 of which were reports against other editors on administrative notice boards. This high volume of reports suggests these editors focused more on attacking those they viewed as opponents rather than contributing to articles. Such harassment can be a form of area denial, making it impossible for good-faith editors to participate, and can lead to editors participating less or leaving altogether (Smirnov et al. 2023).

Manipulation and disinformation

To avoid detection, these editors do not only make changes to pages on Israel, Jews, and the Palestinians; they appear to spend time across Wikipedia editing unrelated pages as well, and pages related more generally to the Middle East or Jews but that do not fall under the protective umbrella of ARBPIA (Arbitration of Palestine/Israel Articles). ARBPIA typically only applies protection to pages directly related to the Israeli-Palestinian conflict since the advent of modern Zionism in 1885, which means historically related or relevant articles have typically not been protected and are more easily subject to vandalism and manipulation.

This additional activity can camouflage potentially violative edits by burying biased edits in a flurry of less consequential changes, which are harder to track and identify for the purposes of censure. These accumulated edits also help bad-faith actors quickly gain credibility on Wikipedia, which requires a minimum number of edits for contributors to be allowed to make edits to certain articles. There are not enough administrators to monitor so many pages and edits, and editors must self-report to instigate review. Editors who do report may also be subject to harassment.

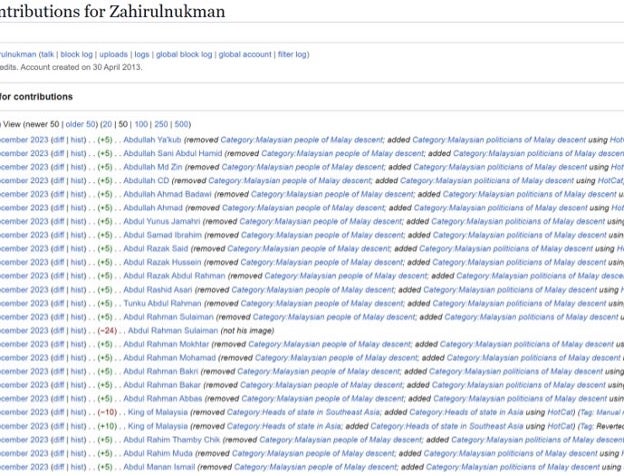

Image 1: One editor made hundreds of minor edits, removing categories across many articles. In other instances, they added categories or “+” to “LGBTQ” in hundreds of places. These numerous small edits allow bad-faith editors to “game the system,” in Wikipedia parlance, racking up the necessary number of edits (500 in at least 30 days) to gain editing privileges on protected pages about Israel and the Israeli-Palestinian conflict.

In one analysis, we charted how much time each editor spent making edits. We found that many of these 30 editors spent long blocks of unbroken time editing, often as many as eight hours in a day. While we cannot know the circumstances under which they edit, this pattern resembles full-time work and raises the question of whether they are being paid to do so.

Image 2: Edit activity among the bad-faith editors in two 24-hour periods, December 6, 2023 (above) and February 4, 2024 (below). Dots represent edits by user. Some are active for approximately six- to eight-hour blocks, such as those in blue, green, and red.
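The “long blocks of unbroken time” described above can be approximated by splitting an editor’s edit timestamps into sessions wherever the gap between consecutive edits exceeds a threshold. In this sketch the 30-minute threshold and the `longest_session` helper are assumptions chosen for illustration; the report does not state how it defined an unbroken block.

```python
from datetime import datetime, timedelta


def longest_session(timestamps, max_gap=timedelta(minutes=30)):
    """Return the duration of the longest run of edits in which no two
    consecutive edits are more than `max_gap` apart (assumed threshold)."""
    if not timestamps:
        return timedelta(0)
    ts = sorted(timestamps)
    best = timedelta(0)
    start = ts[0]
    for prev, cur in zip(ts, ts[1:]):
        if cur - prev > max_gap:
            # The gap ends the current session; record its length.
            best = max(best, prev - start)
            start = cur
    # Account for the final session, which has no closing gap.
    return max(best, ts[-1] - start)
```

A shorter threshold splits activity into more, shorter sessions, so any such measure should be reported together with the gap it assumes.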

These suspicious editors have made revisions to over 10,000 articles related to Israel, the Israeli-Palestinian conflict, or similar topics. Within these articles, they have made more than a million edits. At least two members of this group have contributed to each of these articles, yet they only overlap on topics related to Israel and the Israeli-Palestinian conflict (these editors do contribute to unrelated topics as well, but they do not appear to coordinate there). They engage in multiple types of manipulation and falsification, adding or cutting content, removing citations and moving content to other pages, in ways that shift the narrative on the Israeli-Palestinian conflict to downplay Palestinian violence and antisemitism and heighten criticism of Israel. While many of these editors have been active for years (in some cases more than a decade), there was a significant increase in edit activity in 2022, with many notable changes made between 2022 and 2024.

Image 3: Edits to the Wikipedia page for Samir Kuntar, a Lebanese member of the Palestine Liberation Front who participated in a 1979 terrorist attack in the Israeli city of Nahariya that killed multiple Israeli citizens. The edits removed multiple citations for his conviction on murder and terrorism charges in Israel, as well as references to his accolades in Syria and Iran and his designation as a terrorist by the U.S., while adding mention of his claims to innocence on some of the charges.

The following examples illustrate the editors’ efforts to remove supporting evidence for political violence and explicit antisemitism. One editor, for example, appears to have systematically removed references across multiple articles documenting terrorist violence, such as academic studies of suicide attacks during the Second Intifada:

Image 4: Edits to “Palestinian political violence” by one of the 30 suspicious editors in November 2023. The highlighted text on the left, consisting of citations to multiple peer-reviewed academic journal articles, was removed.

References were also removed to media coverage of perpetrators calling for the destruction of Israel:

Image 5: Edits to “Palestinian political violence” by one of the 30 suspicious editors in November 2023, removing references to calls for the destruction of Israel.

The main Wikipedia entry on Hamas underwent changes to de-emphasize the organization’s terrorist actions. A sentence indicating that multiple governments designate Hamas as a terrorist group was moved from the beginning of the second paragraph to near the end of the fourth. The first reference to Hamas as a terrorist organization now appears further down in the lead section, which also no longer mentions the more than 1,100 fatalities of the October 7 attack. Instead, the lead section focuses on Hamas’s role as a political, social, and military organization that promotes “Palestinian nationalism in an Islamic context.” A lengthy discussion of attacks against Israeli civilians and military targets was also removed, including a list of rocket attacks, a description of Hamas’s rocket arsenal, and language describing Hamas attacks on Israeli civilians as retaliation for assassinations of Hamas leaders.

Image 6: Main Hamas page, difference between revisions, October 19, 2023 10:45am and 11:14am.

In an example from August 2024, the same editor mentioned earlier removed references to reports of widespread sexual violence perpetrated by Hamas during the October 7 attacks:

Image 7: Edits to “Gaza war,” August 2024. The yellow highlighting indicates deleted text.

Other edits by members of this group minimize incidents of explicit antisemitism. In an example from March 2024, the same editor removed the following description of a Palestinian flying a kite with a Nazi swastika: “In an interview with NPR, one young Palestinian preparing to launch an incendiary kite emblazoned with a swastika explained that they used the symbolism and embraced antisemitism and Nazism so that the Israeli's [sic] would know ‘that we want to burn them.’” References to mainstream media coverage of Hamas getting a “propaganda boost” following mass protests in Gaza in 2018 were also removed.

Image 8: Edits to “2018–2019 Gaza border protests,” March 2024, showing removed text.

Edits to the main Zionism page since 2022, meanwhile, seek to reframe Israel’s founding. The lead section now characterizes Zionism as an “ethnocultural nationalist movement” with an explicitly colonialist, ethnonationalist goal: “Zionists wanted to create a Jewish state in Palestine with as much land, as many Jews, and as few Palestinian Arabs as possible.” Multiple bad-faith editors were involved in this revision, including the six who were topic-banned for their behavior. Other editors attempted to restore the previously neutral language, but in February 2025, an admin with no previous involvement with the topic area or the editors (some of whom had recently been sanctioned for their behavior) imposed a 12-month moratorium on further discussion in a Request for Comment (RFC), locking the current version and silencing further debate. This incident raises concerns about the efficacy of arbitration sanctions (such as topic-banning) and other remedies intended to alleviate these problems.

Image 9: Current version of Zionism main page, as of February 11, 2025.

This pattern of edits suggests not an attempt to ensure a balance of perspectives, but a coordinated effort by multiple bad-faith editors to selectively remove or downplay Palestinian incidents of antisemitic hate, calls to abolish the Israeli state, and brutal acts of violence, especially on the part of Hamas, while leaving in place criticism of Israel. These changes cumulatively shift the narrative around Israel and the Israeli-Palestinian conflict to one in which explicit antisemitic themes are intentionally omitted, such as the use of Nazi imagery in Palestinian protests in Gaza in 2018, while calling into question negative characterizations of terrorist actors and elevating positive ones. Removing references to academic research and mainstream media coverage (while favoring biased and politicized sources) further delegitimizes discussion of anti-Jewish or anti-Israel violence.

These edits openly suppress examples of antisemitism and attacks on Israel’s right to exist, while being part of a broader pattern of more subtle efforts to elevate anti-Israel perspectives on Wikipedia. Extensive edits to multiple articles illustrate this strategy. The 1948 Palestine War article, for example, underwent significant shifts in tone, content, and perspective from 2022 to 2024, including an extensive section on historiography that introduces concepts such as "Zionist narrative as not history in the proper sense" and "Neo-Zionist narratives."

In these examples, the larger pattern of changes demonstrates a systematic effort to skew numerous Wikipedia entries to promote a set of narratives critical of Israel, often delegitimizing Israel’s existence and actions. In many cases, these changes shift the entries from balanced historical accounts to a narrative that skews toward militant Palestinian perspectives, likely violating Wikipedia’s policies against advocacy. These edits are part of a concerning trend of gradually obscuring historical Jewish presence in the Middle East through unjustified expansions and modifications.

Voting patterns

This pattern of misleading edits was evident in how the bad-faith editors voted on which articles to keep and which to delete. Editors weigh in on decisions about which topics should constitute separate entries, which should be merged with other entries, and which should be removed. They make arguments for or against the quality or impartiality of a given article, which administrative editors then consider in making a final determination. The bad-faith editors frequently voted in lockstep on these decisions. These editors voted on keeping or removing 5,745 entries to date overall, nearly five times as many as the Israel-Hamas page editors (1,165). They were more than twice as likely to vote on the same entries as others in their group (they voted on 2.7% of pages) compared with the Israel-Hamas editors who voted together on 1% of pages. Among those voting, they were also much more likely to be in consensus: they voted the same way 90% of the time on average compared with 68% of the time for the Israel-Hamas editors.
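One plausible way to compute an agreement rate like the 90% and 68% figures is to compare every pair of group members who voted in the same deletion discussion. The sketch below assumes votes have already been reduced to simple labels such as “keep” and “delete”; `pairwise_agreement` is a hypothetical helper, and the report may instead have averaged agreement per editor or per discussion.

```python
from itertools import combinations


def pairwise_agreement(votes):
    """`votes` maps a discussion name to {editor: vote label}.

    For every pair of editors who voted in the same discussion, record
    whether they voted the same way; return the share of pairings that
    agree (0.0 if no discussion had two or more voters)."""
    same = total = 0
    for ballots in votes.values():
        for a, b in combinations(sorted(ballots), 2):
            total += 1
            same += ballots[a] == ballots[b]  # True counts as 1
    return same / total if total else 0.0
```

Counting pairs rather than discussions gives more weight to discussions with many group voters, which is one reason the exact aggregation choice matters when comparing groups.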

As with edits that minimize Palestinian violence and terrorism while delegitimizing Israel, their voting patterns reflect a broader agenda to highlight criticism of Israel, endorsing topics such as “Genocide against Palestinians,” “Human rights violations against Palestinians by Israel,” “Nakba denial,” and alleged massacres by Israel. At the same time, these bad-faith editors repeatedly voted to delete topics detailing Palestinian or anti-Israel violence, calls for the destruction of Israel, and criticism of Palestinian violence or terrorism, such as “Jerusalem Light Rail stabbing,” “Glorification of martyrdom in Palestinian society,” “Free Palestine phrase,” “List of Islamist terrorist attacks,” “Alleged Palestinian genocide of Israelis,” and “Denial of atrocities during the 2023 Hamas attack on Israel.” Many of these votes explicitly targeted pages documenting the denial of Hamas’s atrocities against Israelis on October 7, 2023, or the denial of other violent attacks.

Sources

The sources bad-faith editors use in their citations are another cause for concern and a flag that these editors are trying to shift the narrative in violation of Wikipedia’s standards. Wikipedia places great emphasis on citing reputable sources and notes when articles contain claims without appropriate supporting evidence. Reliable sources include peer-reviewed academic publications (such as academic books or journal articles), coverage of current events in reputable news outlets, and first-hand witness accounts, unless these sources have been proven unreliable. Editors vote on which sources are generally considered reliable (such as news outlets or authors), but individual editors can include sources of their own choosing on articles that are not locked. If others question those sources, they can challenge them. There is no procedure to flag sources that may be reliable on some topics but not others.

The most common sources the bad-faith editors use in their contributions, however, include unreliable or highly biased resources and media outlets (while they frequently remove reputable sources, such as mainstream media or academic publications). We pulled the 50 sources these bad-faith editors most often cite in their edits to the 5,611 pages we analyzed, along with their 50 most-cited links, and found that the thirteenth most common source among the bad-faith editors was “PalestineRemembered.com,” an independent website critical of Zionism that contains mostly opinion and commentary rather than first-hand reporting or evidence. For example, the site includes an FAQ on why Palestinians purportedly want to “destroy Israel and drive Israeli Jews into the sea.” This site does not meet Wikipedia’s criteria for a credible source.

The third most-cited source among these editors is the Qatari news site AlJazeera.com; Wikipedia considers Al Jazeera a credible source, but it has been banned in Israel during the war for its close ties with Hamas, and was previously sanctioned by the Palestinian Authority and by multiple Mideast countries amid accusations of inciting violence and platforming Islamist extremists. The fourth is the Wafa News Agency, the official state-run news agency of the Palestinian Authority. Google Books is the most-cited source; it links to a range of books, some of which are also biased.

Among these editors’ contributions since October 7, 2023, Al Jazeera becomes the most-cited source, while PalestineRemembered.com no longer appears among the top sources.

Among the top links (rather than domains) cited within these sources, the fourth most cited was a PalestineRemembered.com page on 1945 village statistics, cited 51 times in 47 articles over 10 years. Almost all of the other top links among these editors were to census records of varying kinds, including the second most cited, a set of village statistics from 1945 (cited 57 times), and a 1922 census of Palestine (cited 50 times). Wikipedia policy explicitly bans users from adding “original research” to articles based on primary sources such as census records that are subject to interpretation. Only four links are to news outlets at all (the BBC, Democracy Now, and the New York Times). Multiple links are also broken, including ones to radio.islam and jafi.org.

The activities and modifications outlined in this case study highlight the problem of biased, antisemitic and anti-Israel narratives spreading on Wikipedia through intentional manipulation and coordination. Wikipedia has processes in place to mitigate these risks, but they apply only in a small number of cases. There are also neither enough administrators nor adequate technical tools to enforce these rules consistently. Articles on contested topics should, by policy, be locked automatically to direct editing by inexperienced editors. Instead, delays between such designation and the locking of these pages allow bad-faith editors to make changes: roughly 13% of article edits on locked Israel/Palestine articles were made by editors who should not have had editing privileges (because they had not earned “extended confirmed” status), according to user-generated Wikipedia statistics cited by arbitrators (itself an example of the limited data tools available to evaluate these issues).

Wikipedia’s policies are not enforced uniformly or consistently, both because there are not enough administrators or arbitrators to supervise protected pages over long periods of time and because its technical tools are inadequate to the scale of the problem. Wikipedia should apply its policies consistently and should designate most if not all articles related to Israel and the Israeli-Palestinian conflict as contested, to prevent manipulation on peripheral articles. It should ban editors engaged in advocacy (what Wikipedians, Wikipedia’s contributors or “editors,” call “point-of-view pushing”) from making changes to related topics (topic banning), and only administrators should be able to supervise contentious topics.

In addition to this campaign of anti-Israel manipulation and disinformation found on English-language Wikipedia pages, Wikipedia’s policies seem inadequate to prevent widespread bias and partiality on its Arabic-language pages related to Hamas. ADL analyzed pages on the terrorist organization in Arabic and found that both the primary page and related pages glorify Hamas, perpetuate pro-Hamas propaganda, and flout Wikipedia’s rules for neutrality.

Wikipedia has 340 active language “editions,” including multiple editions for languages with major dialects. The Arabic-language edition of Wikipedia comprises 1.2 million articles and over 4,000 volunteer editors. By comparison, the English edition has almost seven million articles and more than 120,000 active monthly users, approximately 6,000 of whom were active in the past six months with more than 100 total edits. The Arabic-language edition is widely used: between January 2023 and January 2025, the project had between 180 million and 250 million monthly views from roughly 50 million unique devices, according to Wikipedia’s statistics. Top articles for December 2024 included the pages for ousted Syrian dictator Bashar al-Assad and Ahmed al-Sharaa, the head of the Islamist-rooted Syrian group HTS and the country's current president.

The Arabic-language page for Hamas, perhaps even more egregiously than the English-language version, glorifies the terrorist organization, minimizes its atrocities, and, in places, appears to function as propaganda that does not abide by Wikipedia’s NPOV requirements or its content source standards.

Examples

The opening paragraph of the Hamas article, for example, glorifies the terrorist organization, using phrases such as “from the river to the sea” and describing its goals as the “liberation” of “Palestine.”

Under the subhead “On military action,” the Arabic-language page highlights how Hamas “expends enormous energy from its members” “24 hours a day, all year round” to build the tunnels from which the terror group launched the October 7 attack and where hostages taken the day of the attack are held. This language lauds the violent actions of the terrorist organization.

Under the subhead “Social and charitable work,” the Wikipedia contributors uncritically recite Hamas’s social credo, e.g.: “Hamas, within its vision for reform, has also established local reconciliation committees to help in resolving conflicts between individuals; in order to maintain the strength of society, Hamas did not neglect the workers and fishermen....” The entire section has only one citation, and its link is broken, whereas other Wikipedia entries typically have one or two citations per sentence. This lack of supporting evidence does not meet Wikipedia’s typical standards for citation.

The Hamas article addresses the terror group’s relationship with Fatah, a political rival in the West Bank that administers the Palestinian Authority, from the perspective of Hamas. For example, the section describes Hamas’s views without counterpoint and characterizes its rival’s views as “absurd.” Again, there are few citations, and the links to them do not work.

Additional examples

Beyond the Hamas page, we found numerous examples of biased or unreliable content related to Hamas on Arabic-language Wikipedia pages.

For example, the page for the Shalit Deal, which praises the 2011 prisoner exchange for the release of Israeli soldier Gilad Shalit (who was taken captive in 2006), argues that the situation “was unique because the Palestinians were able to keep the Israeli soldier captive for about five years despite Israel waging two wars on the Gaza Strip.” Alongside this text, the page displays a Hamas photo with the caption: “Our heroic prisoners, every year a new Gilad.”

The page for “The killing of Yahya Sinwar,” the Hamas leader in the Gaza Strip from 2017 and the architect of the October 7 attack who was killed by the Israeli military in October 2024, similarly presents Hamas’s perspective, glorifying his legacy and death.

Lastly, the Arabic-language page for the Al-Qassam Brigades, Hamas’s military arm, includes a list of violent actions under the title “the lead in the Palestinian resistance,” and nearly 50 bulleted references to terrorist acts. Highlighted examples include:

“The first to involve resistance fighters in carrying out martyrdom operations in the Sbarro Restaurant operation”

Wikipedia’s design as a peer-edited encyclopedia is grounded in principles of neutrality through shared consensus. These principles depend on the participation of good-faith actors to succeed. Although Wikipedia’s policies and processes help prevent most Wikipedia content from becoming explicitly erroneous or hateful, it is difficult to maintain them at scale, especially against coordinated bad-faith actors targeting contentious topics.

ADL’s research shows that with enough time and resources, concerted efforts to skew content can succeed, such as a group of editors dedicated to revising narratives about Jews and Israel. The research to uncover this coordination was painstaking and time-intensive, requiring resources that neither most Wikipedians (or “editors”) nor the Wikimedia Foundation have available. The level of coordination – and time involved in making these edits, both in terms of years spent gaining credibility and hours spent making changes – suggests some may be paid agents. We are not suggesting simply that people critical of Israel are systematically revising Wikipedia. Good-faith editors with multiple points of view, for example, contribute to Wikipedia’s Israel-Hamas (now Gaza war) page and don’t appear to be engaged in intentional, coordinated efforts to skew content in antisemitic or anti-Israel ways. While more explicit antisemitic sentiments rarely made it through Wikipedia’s deliberation process, the scale of antisemitic and anti-Israel bias is concerning.

The advent of LLM-based AI tools trained on Wikipedia has also raised the stakes of reliable, impartial articles, as content from articles that may be highly biased is now incorporated into search, chatbot, and other tools. Google has been favoring Wikipedia content in its search algorithm and highlighted knowledge panels (short summaries of people and topics) since at least 2012. But neither LLMs nor Google limit their use of Wikipedia content to vetted entries. Tech companies should exclude contentious topics (and articles with a disputed neutrality tag) from these applications, unless they can verify their content and sources. Curbing the use of unreliable content from Wikipedia will both reduce its spread and visibility, and disincentivize such manipulation.

These concerns arise on Arabic-language Wikipedia pages as well, where many articles on Israel and the Israeli-Palestinian conflict replicate Hamas’s own language and perspective. Wikipedia must take steps to ensure its high standards are met across the site, especially on pages that are more likely to be contested.

To address this abuse of Wikipedia and the harms of widespread biased content, we make the following recommendations for Wikipedia, for companies using LLMs, and for search tools.

Policymakers across the country should prioritize raising additional awareness of antisemitism – and structural issues – within Wikipedia. Because of the diffuse nature and content of the project and its pages, direct regulation may not be practical, advisable, or constitutional. But that does not mean that policymakers should turn away from the important role Wikipedia plays in our daily lives, the risks it presents, and opportunities for improvement.

Policymakers who focus on fighting antisemitism online should consider:

How Wikipedia Prevents Abuse

The Wikipedia model

Wikipedia provides generally robust safeguards to ensure neutrality and reliability on the platform. These safeguards are intended to minimize bias through deliberation and consensus making. But there are still ways around these safeguards for bad-faith actors. To understand how editors can introduce bias into Wikipedia entries, it’s important to understand the Wikipedia model for creating and maintaining content.

First, a Wikipedia editor creates a new article. From a type of bean, to a deputy prime minister, to a description of a prehistoric tool, these topics would not likely have articles without Wikipedia contributors creating them, citing them, and discussing their relevant merits.

Often, Wikipedia contributors (“Wikipedians” or “editors”) disagree on what should be included in a public-facing article on a particular topic. This discussion takes place on a “talk” page associated with each article. When contributors can’t quickly agree on a controversial question, such as whether a tomato is a vegetable or a fruit, they hash it out on the talk page, where a dispute resolution mechanism called a “request for comment” may be initiated.

This “request for comment,” also referred to as a “request for help,” elevates the topic and discussion so that, once a period of time has passed and comments have been made, an experienced Wikipedian makes a judgment call on the question at issue. This experienced Wikipedian may be an “uninvolved editor,” one who has not taken part in the discussion thus far, or an “administrator” (typically a longtime contributor, selected by other users, with more extensive site privileges). On Wikipedia, administrators are tasked more with ensuring that contributors follow the rules than with being arbiters of truth.

What Wikipedia requires

While a new user can begin making edits on most pages soon after signing up, experienced contributors gain additional privileges. For example, after 500 edits and 30 days of account tenure, an editor receives the special designation of “extended confirmed” and may weigh in on otherwise closed topics. As another example, contributors who have over 3,000 edits and six months of user history can be designated as “page movers,” who can consolidate or relocate Wikipedia articles. Page movers can only be designated by administrators, and there are only 837 administrators on English-language Wikipedia for the hundreds of millions of Wikipedia pages. Administrators are granted privileges to block accounts, delete pages, and more, and one can only be selected as an administrator by consensus and thorough review by the Wikipedia community.
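These privilege thresholds amount to simple rules over an account's edit count and age. The sketch below is a hypothetical illustration, not MediaWiki's implementation. It assumes the criteria listed in English Wikipedia's user-access-levels documentation: 500 edits and 30 days for the automatically granted extended confirmed status, and 3,000 edits and roughly six months before an administrator may grant page mover rights. The class and function names are invented for the example.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumed thresholds based on English Wikipedia's published criteria;
# the real values are site configuration and may change over time.
EXTENDED_CONFIRMED_EDITS = 500
EXTENDED_CONFIRMED_AGE = timedelta(days=30)
PAGE_MOVER_EDITS = 3000
PAGE_MOVER_AGE = timedelta(days=182)  # roughly six months


@dataclass
class Account:
    edit_count: int
    registered: date


def is_extended_confirmed(acct: Account, today: date) -> bool:
    """Automatic path only: both edit count and account age must qualify."""
    return (acct.edit_count >= EXTENDED_CONFIRMED_EDITS
            and today - acct.registered >= EXTENDED_CONFIRMED_AGE)


def may_request_page_mover(acct: Account, today: date) -> bool:
    """Eligibility only: an administrator still has to grant the right."""
    return (acct.edit_count >= PAGE_MOVER_EDITS
            and today - acct.registered >= PAGE_MOVER_AGE)


today = date(2025, 3, 1)
newcomer = Account(edit_count=600, registered=date(2025, 2, 20))  # 9 days old
busy = Account(edit_count=4000, registered=date(2025, 1, 15))     # 45 days old
veteran = Account(edit_count=3500, registered=date(2024, 1, 1))   # 425 days old

for name, acct in [("newcomer", newcomer), ("busy", busy), ("veteran", veteran)]:
    print(name, is_extended_confirmed(acct, today), may_request_page_mover(acct, today))
```

Because each rule requires both conditions, a high edit count alone does not unlock protected pages: the hypothetical "newcomer" account has enough edits but is too young, which is one reason a gap between designating a topic as contested and locking its pages matters.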

Wikipedia relies on its community of editors. There are no full-time, paid Wikipedia staff, although the Wikimedia Foundation, a non-profit, oversees the administrative aspects of managing the project and furthering the foundation’s mission, such as advocating against regulations that would harm Wikipedia. According to its Wikipedia page, the Wikimedia Foundation had approximately 700 employees and contractors in 2023, in communications, legal, community engagement, fundraising, administrative and advocacy roles.

At its core, Wikipedia is the product of the collaborative work of its volunteer contributors (often simply called editors). It’s been said that Wikipedia could never work in theory, but manages to in practice. Wikipedia editors are supposed to be kind to one another, operate in good faith, and be bold. They’re supposed to contribute to noteworthy topics, and they’re allowed to create communities of like-minded contributors who meaningfully contribute to each other’s work. Wikipedians are encouraged to hold each other accountable to Wikipedia’s guidelines, and they’re allowed to do so in a manner that is sometimes considered brusque. Wikipedia maintains lists of guidelines its contributors should follow, up to and including a procedural article on the policies and guidelines themselves.

In theory, anyone can edit Wikipedia. In practice, however, the community has created a network of policies and guidelines that can be difficult to follow, creating a barrier to entry for the average user.

What Wikipedia prohibits

While Wikipedia’s guidelines provide editors with significant autonomy, certain activities are serious violations of Wikipedia’s code that can result in a user’s account being blocked. Because contributors often spend so much time on Wikipedia and are deeply invested in their efforts, the threat of a block carries real weight. As on social media, harassment and bullying take place on Wikipedia, and research shows they can chill the participation of those targeted. Engaging in harassment can result in loss of privileges, though Wikipedia relies primarily on human review, and most harassment goes unreported (Wulczyn et al. 2017).

One major violation that is more-or-less unique to Wikipedia is known as “canvassing.” Canvassing refers to the practice of enlisting other Wikipedians to support one’s position in a discussion. This could be on a vote in a request for comment or just within a discussion on a talk page. Canvassing off Wikipedia is referred to as “stealth canvassing,” and includes conduct whereby one editor uses a third-party service, such as Discord, to affect the outcome of edits to a Wikipedia article.

Canvassing is particularly anathema to Wikipedia’s process because consensus is supposed to be built through discussion on its pages, using its rules and sources. Whether those gaming the system are supporters of a sporting club looking to elevate their profile, paid consultants for a brand, or nation-state actors influencing a global narrative, Wikipedia’s rules are built around keeping its discussions, decisions, and effects internal to the platform, for the sake of transparency and consistency. Allowing coordinated behavior, whether authentic or inauthentic, could diminish the credibility of its entries.

Builders for Tomorrow (BFT) is a venture philanthropy and research organization focused on combating anti-Jewish and anti-West ideologies. It is led by industry leaders from the tech community who have built some of the most consequential technology companies. As a venture philanthropy platform, BFT provides grants and accelerates the most promising teams and ideas. In terms of research, BFT leverages cutting-edge advances in generative AI and large language models (LLMs) to address pressing global challenges. The group conducts research across three core areas: combating misinformation online, identifying bad actors engaged in criminal activities, and helping to scale the most promising emerging news and social media accounts that shape public discourse.