With recent ADL research showing that 15% of adults and 9% of young people have been exposed to white supremacist ideology while playing online games, ADL has examined three leading game companies’ policies to see how they prohibit content that promotes extremism and terrorism in their online multiplayer games. Despite widespread exposure to such content, game companies have generally been slower than social media companies to address this issue.

ADL recommends that all gaming companies adopt policies that explicitly prohibit support for extremism and terrorism, especially the glorification of violent acts and the spreading of extremist ideology.

Online games rely on policies that set the rules players are expected to follow, in order to guide behavioral norms and curb online hate and harassment. But few of these policies address extremism (such as white supremacy) or terrorism. All major social media platforms, in contrast, have policies against content that promotes terrorism and extremism, in many cases in response to past problems with extremist groups using their platforms to recruit.

Many players of online games such as League of Legends or Call of Duty use these games to find other players and make friends. Unfortunately, these same platforms can also be used to normalize extremist rhetoric, or even be exploited by extremist and terrorist groups to recruit members and possibly plan violent offline activities. Young people who are still forming their worldview are more susceptible to extremist propaganda and ideology, which makes them prime targets for extremist groups. Furthermore, many online games are popular with young men, another group that extremists actively seek to recruit. Even when the online activities of extremist and terrorist groups do not lead directly to offline violence, these groups are dangerous and detrimental to online community safety.

Activision Blizzard, Microsoft, and Roblox Corporation stand out for specifically addressing extremism and terrorism in their platform policies. Each of the three companies in ADL’s most recent research approaches the issue differently: Activision Blizzard has incorporated it into Call of Duty’s player-facing code of conduct, Roblox discusses extremism in its platform rules, and Microsoft includes the issue in both its global policy and its player-facing codes of conduct. These can serve as examples for other game companies, though they still have room for improvement.

Fighting Extremism Through Policy

Public-facing platform policies are crucial for establishing a welcoming community for players in online games. Through these policies, gaming companies set out the moderation standards players can expect in the community. While moderation is important for policing communities and for deterring and punishing bad behavior, it cannot cover every interaction in any community. Setting a baseline of expectations encourages members of the community to act in ways that benefit everyone. An effective policy is clear about the community’s expected standards, the game publisher’s values, what constitutes bad behavior, and the consequences of breaking the rules.

The three examples discussed here show the different ways game companies can use policy to protect against the dangers of extremism and terrorism in online games. The Call of Duty code of conduct is meant to be read by all players and gives a concise explanation of expected conduct in the game. Roblox outlines specific examples of extremist and terrorist behavior that can occur in online games. Microsoft has policies at several levels: one covering all of its products, one for Xbox, and one for Minecraft. Minecraft’s code of conduct is similar to Call of Duty’s and is meant to be read before playing the game, while the Xbox and Microsoft-wide policies address terrorism and extremism in more detail.

Ultimately, addressing extremism and terrorism in online games is a practice that should be adopted by more game companies. This includes policies that make players aware of threats and alert them to which behaviors to avoid. Online games continue to be spaces where white supremacist rhetoric and narratives are normalized, and sometimes potentially even where white supremacists and other extremists recruit. Game companies have a responsibility to protect their players and their online game spaces from these threats.

Activision Blizzard: Call of Duty

Call of Duty is a major first-person shooter franchise published by Activision Blizzard that has run for more than twenty years. As of mid-2022, the series had sold 425 million units since its 2003 debut. Like many online games, it has developed a large and robust community of players and has had issues with moderation and with maintaining safe environments for players, especially members of marginalized communities.

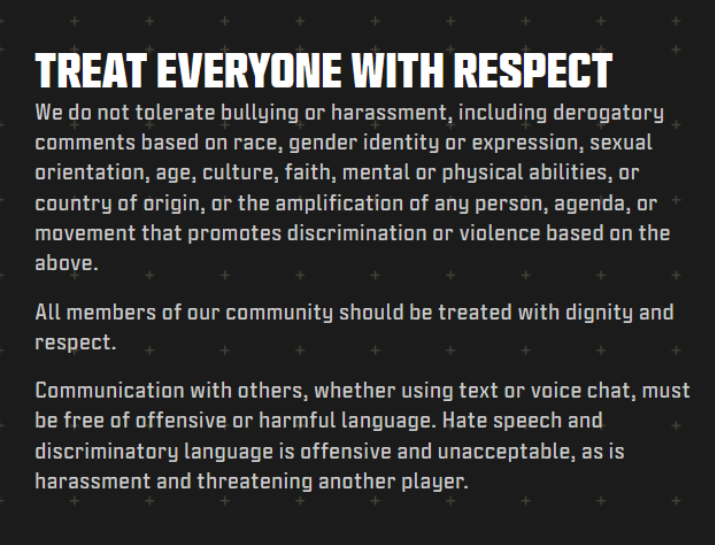

According to ADL’s 2023 survey on hate and harassment in online games, Call of Duty is the game in which the most adult gamers were exposed to white supremacist ideology in 2022 and 2023. Activision Blizzard has been consulting with ADL, and in early 2024 it updated the Call of Duty code of conduct, adding to its existing policies against hate speech a prohibition on “the amplification of any person, agenda, or movement that promotes discrimination or violence based on the above,” where “the above” refers to the identity characteristics listed in the existing hate speech rules. All players must accept this code of conduct before they play.

This language addresses extremism in a broad sense by expanding Call of Duty’s definition of hate. Many kinds of extremism and terrorism are rooted in hate and can include, for example, celebrating individuals who committed hateful violent acts or calling for such acts. A standard hate policy might cover slurs or aggressive actions against individuals based on their identity, but it may not prohibit, for example, celebrating a well-known white supremacist or neo-Nazi.

Figure 1. The new and revised policy on Call of Duty’s Code of Conduct page (screenshot, March 3, 2024)

Roblox Corporation: Roblox

Roblox has had numerous issues in the past with extremist groups using its platform to target the game’s core audience of children and teens for recruitment. Its game platform also presents particular challenges. Roblox allows players to create their own game worlds (which Roblox calls experiences) within its online ecosystem, and extremist groups have used this feature to build their own experiences that promote extremist ideology. The white supremacist mass shooter who attacked a Buffalo grocery store in 2022 referenced Roblox as being crucial to his radicalization.

Roblox Corporation has been particularly sensitive to safety concerns because its player base is primarily children. Leaked documents have shown high-level discussions within the company in response to problems such as extremist content, grooming, and stalking.

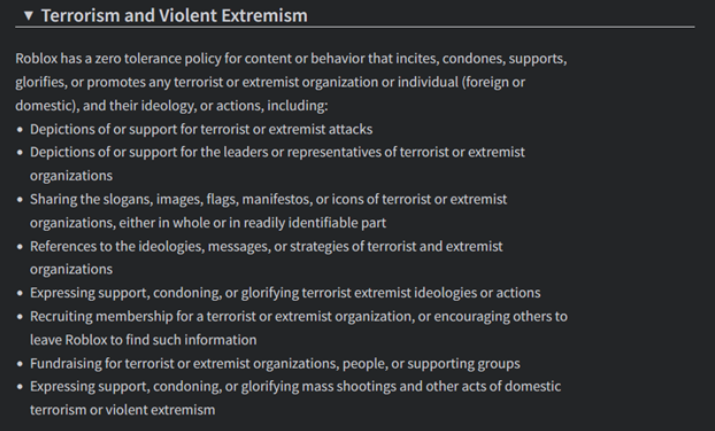

Figure 2. Section on Terrorism and Violent Extremism on Roblox’s Community Standards page (screenshot, February 29, 2024)

The section of Roblox’s policies (called Community Standards) that covers terrorism and extremism on the platform lists numerous examples of prohibited behavior: endorsing terrorist or extremist activities; supporting the leaders of such organizations; spreading ideology related to these organizations; and fundraising for extremist and terrorist causes.

Unlike Call of Duty’s concise code of conduct, this policy page is a comprehensive guide to safety on the platform, expected behavior, and the game itself.

Microsoft: Minecraft

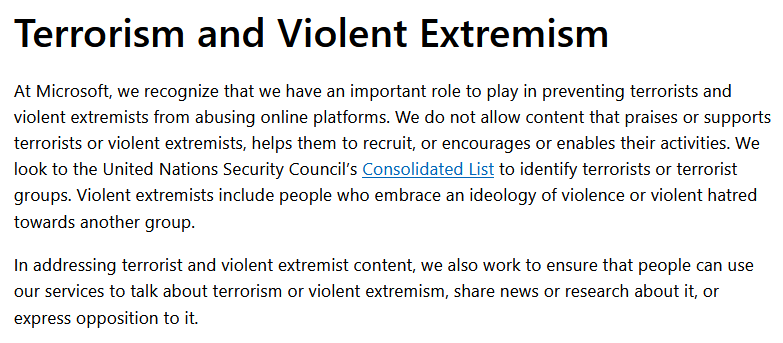

Microsoft has three levels of codes of conduct: a global level, an Xbox level, and a game-specific level. Minecraft’s policy is brief, while the Xbox and Microsoft global standards are longer and more detailed. At the highest level, Microsoft’s global Digital Safety policy addresses extremism and terrorism. Its focus is broader than Roblox’s, covering extremism and terrorism beyond organized groups; this matters because many white supremacists act individually, without membership in a specific group. (Figure 3)

Figure 3. Excerpt from Microsoft’s Digital Safety page (screenshot, February 29, 2024)

Microsoft’s policy makes an important distinction between support for terrorism and extremism and discussion of them. This matters because many researchers and other interested parties use online platforms to discuss and exchange information about terrorist and extremist threats in order to counter such groups.

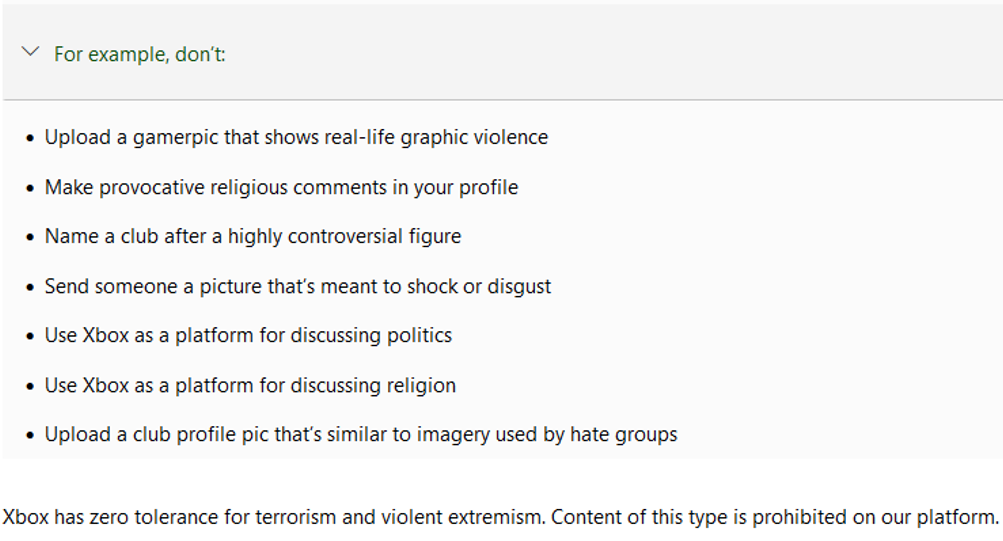

Figure 4. Section called “Be yourself, but not at the expense of others” on Xbox’s Community Standards page (screenshot, March 12, 2024)

At a lower level, Microsoft’s Xbox console platform has its own Community Standards that focus on games, and these standards also address terrorism. They limit political and religious discussions, especially any discussion meant to shock or offend others. They also specifically forbid support for violent extremism and terrorism, and prohibit related behavior such as using images linked to hate groups, depicting violence in one’s profile image, and naming groups after controversial figures linked to hate.

At the lowest, game-specific level, Minecraft, one of Microsoft’s most popular games, has a shorter page for Community Standards that establishes basic community values.

Figure 5. Top of Minecraft’s Community Standards page (screenshot, March 1, 2024)

Minecraft’s Community Standards focus more on general conduct and emphasize that the game aims to foster an inclusive community.

This page also links to a Player Reporting FAQ that explains in depth how to report other players for abuse, what players can be banned for, and the consequences of abusing the reporting feature to falsely accuse other players.

Ultimately, addressing extremism and terrorism in online games is a practice that should be adopted by more game companies. Policies that explain the threat can inform players and alert them to which behaviors to avoid. Online games continue to be spaces where extremist rhetoric and narratives are normalized, and sometimes potentially even where extremists recruit. Game companies have a responsibility to protect their players and their online game spaces from these threats.