Online Hate and Harassment: The American Experience 2024


Executive Summary

A group of moderators who were struggling to cope with antisemitism in their communities on the social media platform Reddit reached out to the ADL Center for Technology and Society (CTS) for support. After ADL engaged with Reddit, drawing on research into these moderators’ experiences, the platform took several important steps to support these moderators and stem instances of antisemitism on the site.

Some of these steps include:

• Providing refresher training to safety staff on Reddit’s policies on hate, including antisemitism.

• Onboarding identity communities, including Jewish community representatives, into its Partner Communities Program.

• Starting Moderator Code of Conduct proceedings over interference with some Jewish communities.

• Instituting new workflows for handling outreach from sensitive or identity-based communities to ensure that the concerns of these communities are reliably escalated.

For Reddit’s complete response and list of changes, see “Reddit Public Statement” at the end of the report. For the recommendations to the platform made by the moderators of Jewish subreddits who participated in this research study, see “Moderator Recommendations” at the end of the report.

Background

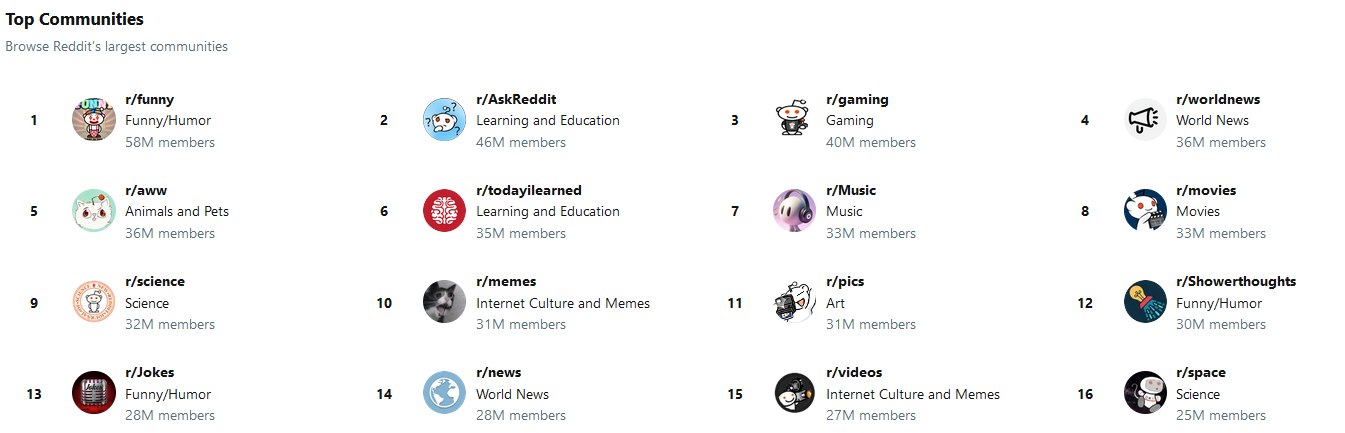

Reddit is a unique social media platform: it acts both as a news and information aggregator and as a forum, with 73 million daily users. On platforms like X/Twitter or TikTok, posts are available to all users, and an algorithm moves them higher or lower based on views and likes. Reddit, in contrast, is divided into thousands of “subreddits” covering topics ranging from news to location-based communities, hobbies, and everything in between (see Image 1). Users join subreddits based on their own interests rather than being governed by an algorithmic feed. Within subreddits, posts are ‘upvoted’ or ‘downvoted,’ and the most popular posts are given more prominence on a subreddit’s page. There is also a popular page where users can see the most upvoted content across all subreddits. These posts usually come from the top communities (see Image 1).

Each subreddit is run by moderators, volunteer users who set and enforce the rules of their individual communities. Alongside Reddit’s internal Safety teams, they keep subreddits clear of policy-violating content such as spam and hate speech. They enforce both the broader sitewide rules and subreddit-specific rules, which are developed by moderators and their communities, and they cultivate each community’s culture. Moderators are a critical component not only of their subreddits but also of the fabric of Reddit as a site. In contrast to moderators, Reddit administrators, or “admins,” are paid Reddit employees. Their job is to enforce Reddit’s sitewide rules and the Moderator Code of Conduct. They often work with moderators to enforce rules and advise on Reddit’s policies and tools.

Image 1: List of the top subreddits on Reddit by subscriber count

CTS ran a focus group with six moderators from a variety of Jewish-focused subreddits. To protect the moderators’ privacy and safety, we are keeping the names and identifying information of these subreddits private and are instead calling the moderators Andrew, Ellison, Jeff, Taylor, Peter, and Shaun. By their own estimate, the subreddits they moderate have an average of 78,000 subscribers and each receive approximately 50 posts and 1,400 comments per day.

The moderators described a site where they regularly encounter antisemitism and hate speech. They say that before their engagement with ADL and Reddit, they received insufficient support from admins. They report that this antisemitism has increased sharply since October 7th and describe a concerted effort by some subreddits to harass and punish users of Jewish subreddits.

Antisemitism on Reddit

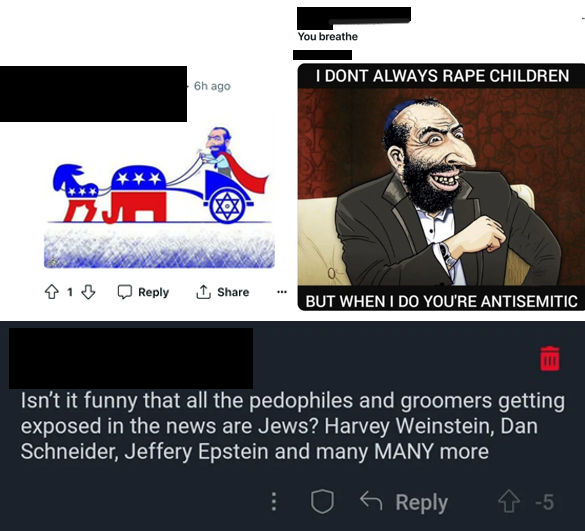

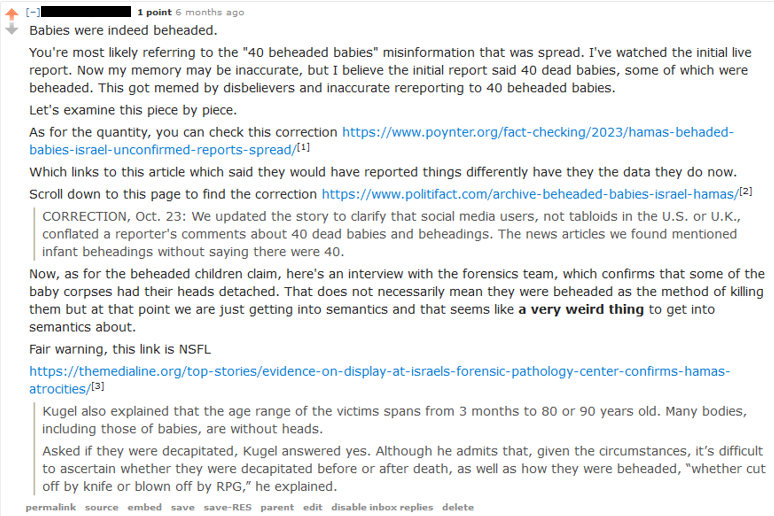

Jewish subreddits provide users with a space to talk about their unique experiences. These spaces have taken on increased importance since October 7th. The mods we spoke with, however, describe a situation in which even these safe spaces have become inundated with antisemitism that they must constantly keep in check (see Image 2).

The subreddit that Taylor moderates, for example, is a space that they say has “a lot of people with a lot of trauma that they're working through…We have a lot of people who are very scared, we have a lot of people who are…dealing with antisemitism, in their real lives, and antisemitism online in various places.” At the same time, antisemites purposefully seek out subreddits like theirs to harass users and spread hate. This requires Taylor to remain constantly vigilant about the subreddit’s content and users.

Image 2: Examples of the types of content that moderators must act on, and that Reddit safety employees also actioned in parallel

Taylor has no shortage of examples. “Yesterday somebody posted a bunch [about] pogroms and attacks, and the Holocaust and exiles the Jewish community has faced and was like ‘There's a reason for all of that. The Jews are unacceptable’…And then we get a lot of blood libel… the Jews killed Jesus, and this is just constant. Not once a day, not once a month. This is just constant.”

The other moderators echoed Taylor’s sentiment. They explained that they deal with slurs, footage of the October 7th massacre, hate speech, and blood libel, among other hateful words and acts. One of the moderators, Peter, sums up the experience of moderating a Jewish subreddit as having seen, “literally anything that's listed, for example, in the IHRA working definition of antisemitism—we probably experience each of them multiple times a week in some form.”

The already substantial amount of hate they must filter has increased since October 7th. Ellison says that the situation has “gotten a lot worse recently,” especially around topics related to Israel, Palestine, and the war. This has included removing videos of the massacre recorded by Hamas. Andrew states that after October 7th he had to remove “a lot of gross stuff,” including “a lot of the GoPro footage that Hamas had recorded and that got shared.” This footage was shared not only by those who wanted to revel in the terror but also by those who were shocked by the attack. Yet mods must deal with it all the same because, as Andrew says, “all those things violate the terms of service.”

This has taken a toll on the moderators themselves, who must not only manage the perpetual hate but also face harassment directed at them personally. Moderators volunteer out of personal interest and investment in their communities. But the moderators of Jewish subreddits must devote so much time to keeping the space safe that they have no time to participate in the communities they love. “I can't really participate because I'm so busy dealing with the moderator end of things,” says Taylor.

The moderators’ workload is sometimes the result of intentional acts by bad actors on the platform. Users can report comments on posts, even posts that are months old, and moderators then have to review these reports one by one.

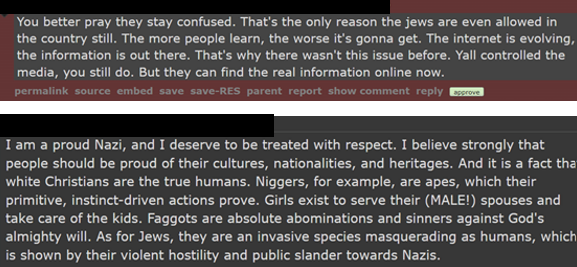

Image 3: A comment that was flagged by AutoMod and Crowd Control, two automatic moderation functions available from Reddit

Some users deliberately exploit this feature. Jeff describes a typical start to a moderator’s day as logging on and facing “a queue of things that were either flagged or automatically reviewed that we have to remove or make some kind of decision about,” and explains how “one user can definitely go into, let's say, a post that was made six months ago and just report every single comment for some spurious reason and you have to go in and clear all those out if you want to have a sense of what's actually going on in your community.” This report abuse substantially increases mods’ workload, taking precious time away from handling current problems or even participating on the site.

Moderators have a report abuse button to flag users who report benign posts or comments just to hassle moderators. This may work for other subreddits, but because of the volume of hate that Jewish subreddits face, the tool is less effective there. According to Peter, “if you get flooded with tens or dozens or hundreds of these reports at once… from multiple individuals,” there simply isn’t enough time to report them all as abuse. Ultimately, the amount of harassment “really discourages you from reporting them . . . because it's just way too much to do. You have to effectively ignore the rest of your responsibilities as a moderator.”

Moderators also face steady personal harassment from users, ranging from slurs to pointed personal attacks. Peter recalled a recent situation where a user targeted the mods individually, insinuating that the user knew where they lived and was searching for them. This user “suggested that they want to meet with us in person, and that they're hoping that they're closer to being able to talk to us, and just kind of suggesting that they're trying to find us.”

The moderators feel that they must be careful not to share any personal information about themselves on their subreddits. “A lot of us have to be careful when we do our moderation duties,” Andrew explains. “We're taking on this administrative stuff; we don't really post too much about our personal lives in case it could come back, and somebody could track us down. That's a real fear I have.”

Subreddit Antagonism

The moderators described having to deal not only with hateful users but also with antagonistic subreddits and their mods. They discussed how some subreddits are, in their experience, openly hostile to Jewish users. This creates friction because the mods of different subreddits often need to communicate or work together when dealing with hate speech. When mods of other subreddits are hostile, working with them is not only impossible but outright dangerous.

Taylor and their peers keep a “running list” in their heads of which subreddits are safe to report antisemitism on. This is because if a mod reports a comment or post as ‘antisemitic,’ that subreddit’s mods could retaliate. According to Shaun, “if you're in an unfriendly subreddit, then there's a higher likelihood that not only will they ignore it [antisemitism reports], but they will report you to the admins as falsely reporting content in order to get you banned.” Jeff echoes Shaun and Taylor: “it's kind of a known thing that you can only report antisemitism on subreddits where the moderators are friendly.”

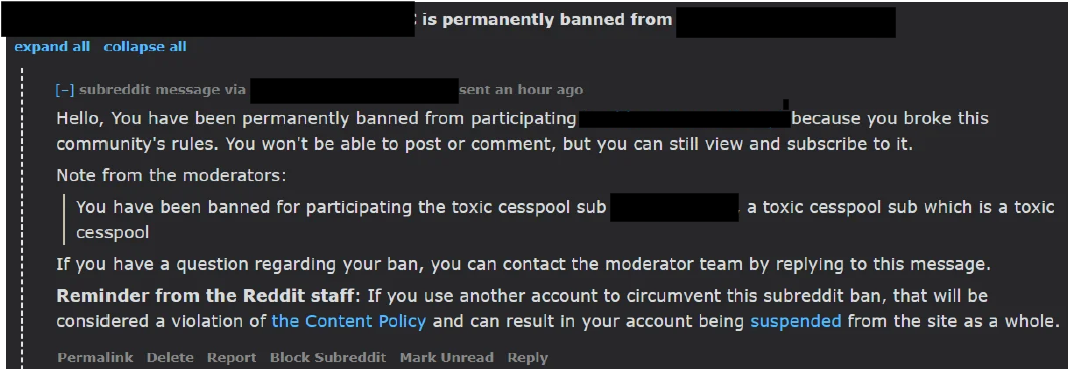

Moderators can auto-ban users who participate in certain other subreddits. This tool was ostensibly created to keep users of hate subreddits out of their communities. But the moderators described it being used to punish those who engage with Jewish topics. Shaun, who moderates a debate subreddit, says that the moderators of another subreddit “would send users an automated message that they were banned for participating in a ‘toxic cesspool,’” which is how they described his subreddit.

Image 4: An example of a permanent ban from r/therewasanattempt

The moderators say these actions are not restricted to small subreddits with few users. Andrew states that he was banned from two large subreddits, r/news (27.7 million members) and r/worldnews (35.8 million members). The first ban, on r/news (Image 5), came shortly after October 7th over a post that, in his words, “was trying to debunk October 7th propaganda” and that, he says, “violated a rule, an unspecified rule.” The second ban, on r/worldnews, was for ban evasion, which occurs when a banned user creates a second account to get around the ban. But Andrew says he doesn’t have multiple accounts: “I just use this one.”

Image 5: Andrew’s comment that caused him to be banned from r/news, and the follow-up conversation asking for the ban to be lifted

Lack of Support from Admins

In the face of antisemitism and hostility from other subreddits, the mods we spoke to described frustration with the support they received from Reddit. Before engaging through ADL, most of them rarely interacted with admins. “I have had virtually no direction [from] them on a regular basis. I think that once in a while [they have] removed stuff …They've done close to nothing,” says Ellison. Reddit prides itself on community moderation: admins are Reddit employees, but most day-to-day moderation decisions are left to the mods, with little oversight from admins. However, some subreddits do have regular admins they can work with.

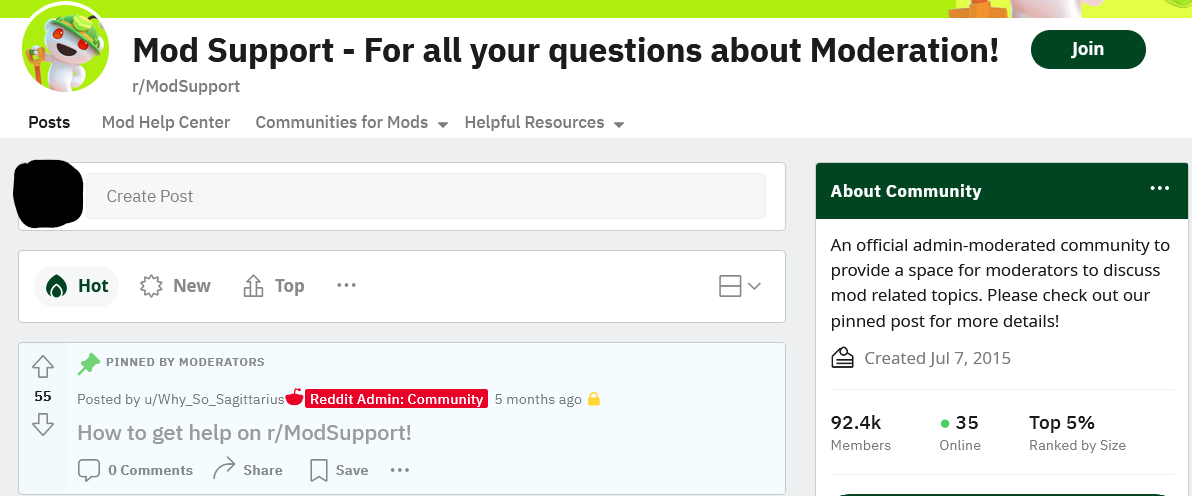

Image 6: The Mod Support Subreddit

Peter and the other mods have contacted admins directly through channels such as the Mod Support subreddit (Image 6), but while they may “get the same admin contacting us,” it is “in no way a kind of ongoing dialogue.” The admins give yes-or-no answers to questions and forward questions or concerns to ‘the appropriate team’ when they cannot answer them themselves, but otherwise mods receive “no feedback or follow up, or anything at any point.” Jeff agrees that the admins provide no follow-up support. He describes one incident in which his team reported harassment, only to receive an automated message saying that the admins had reviewed the claim and determined that there was no harassment. When he went to Mod Support to contest that determination, he says, it took a week for the admins to respond. In the end, he still does not know what happened to the claim: “I don't think we ever heard a resolution about what happened.”

There is also concern that admins are not aware of the particular circumstances of Jewish users and moderators. For example, Andrew says that every few months the mods deal with harassment around Leo Frank, a Jewish factory superintendent who in 1913 was wrongfully convicted of the murder of a 13-year-old employee, Mary Phagan, and was lynched in 1915. According to the moderators, admins do not address these recurring antisemitic tropes. Andrew suspects this is because admins “are not in the know enough about that [antisemitism] to understand that basically that's a huge dog whistle, for antisemitism.”

Antisemitism, and the way reports of it are handled, has had a chilling effect on the mods and the users of their subreddits. Jeff says that the response to his antisemitism reports has stopped him from reporting antisemitism: “I’ve had my account suspended for report abuse, reporting what I earnestly thought was antisemitism on other subreddits.” Taylor agrees that not only are they discouraged from reporting, but “Jewish users are discouraged from reporting things. I'm discouraged from reporting things. I know I am. I know other Jewish users are.”

The increase in hate that moderators have faced since October 7th is not an isolated phenomenon. We have seen the same increase across the online and offline world. Global antisemitism has soared; in the United States, antisemitic incidents have increased by 140%. We have seen similar increases on other platforms, notably X/Twitter and TikTok. The challenge for Reddit and all platforms is to continue the ongoing work of addressing antisemitism to keep online spaces safe for Jewish users.

Methodology

ADL was contacted by six moderators of Jewish subreddits seeking support in handling antisemitism on their subreddits. On March 20th, 2024, we conducted a 90-minute group interview with these mods to discuss the antisemitism they have experienced on the platform and the interventions that Reddit has, or has not, taken to support them. All of the moderators’ names have been anonymized. To protect them from harassment, we have kept references to their personal information (age, gender, etc.) and to the subreddits they moderate to a minimum.

Moderator Recommendations

Based on their experiences on the platform, the Reddit moderators who participated in this research study made the following recommendations, which we support:

Create an Ambassador for Minority Communities: The moderators want an ambassador or admin who is solely dedicated to minority communities and will respond quickly to concerns. As it stands, their concerns are passed from admin to admin, and no one seems to take their reports seriously. There are also concerns that admins lack the subject matter expertise to understand the antisemitism that the moderators face. A dedicated ambassador could engage directly with the community so that its members feel heard and know that someone is advocating on their behalf.

Clear Definitions of Hate Speech and Antisemitism: Reddit’s definition of hate speech is vague and is abused by other subreddits to harass Jewish subreddits. There is also no working definition of antisemitism, which leaves moderators and admins to decide on their own what content violates the rules. The mods would like Reddit to adopt the IHRA working definition of antisemitism.

A Limit on Auto-Banning Users: Mods can punish users by blocking them from certain subreddits for no reason other than holding a different viewpoint. The mods we spoke to do not want to get rid of the auto-ban tool. Instead, they want to work with a Reddit admin to address the problem of other subreddits punishing those who participate in Jewish subreddits.

Reddit Public Statement

Reddit’s mission is to create community, belonging, and empowerment for everyone in the world, so we were alarmed to hear that some of the moderators of a small number of our Jewish communities shared negative experiences. We work hard to enforce our Rules, which make clear that everyone has a right to use Reddit free of harassment, bullying, and threats of violence, and we do not tolerate communities and users that incite violence or that promote hate, including antisemitism or other types of identity or religious-centered hate. We are grateful to several moderators and the ADL for bringing this matter to our attention, and we are happy to share the steps that we have taken so far, while recognizing that our efforts are continuing, and this work is never done.

• We reached out to the mods concerned and had several conversations with them to understand their specific experiences.

• We also had several conversations with the ADL.

• Based on the information they both shared, we started workstreams in several areas, including safety tooling, human operational processes, Moderator Code of Conduct enforcement, and product design.

Reddit’s Processes for Combating Hate

▪ Hateful content, including antisemitism, is prohibited on our platform, and our dedicated internal Safety teams use automated tooling and human review to action this content across the platform.

▪ As we’ve shared, we saw a ~9% increase in hate and abuse at the start of the Israel/Hamas conflict, as well as a correspondingly large (~12%) uptick in admin-level account sanctions (e.g., user bans and other enforcement actions from Reddit employees) throughout Q4 2023. This reflects the rapid response of our teams in recognizing and effectively actioning this content.

▪ As noted in our latest Transparency Report, we removed over 68,000 posts and comments under our Rule 1 against hate from July – December 2023 and will continue to enforce our policies across the platform in collaboration with mods. Specifically, we can confirm that all of the content depicted in Image 2 was removed contemporaneously through the regular processes of our Safety Team prior to being brought to our attention in this report.

Safety Tooling

▪ We reviewed the safety settings of the communities in question and talked through with the mods the full range of tools available, including the Modmail Harassment Filter (which filters potentially hateful or harassing messages from Modmail) and the Harassment Filter (which filters such content from posts and comments). We also took their feedback on their experiences with the different tools and how we can improve them, including the identification and resolution of a bug.

▪ Because the mods were concerned that cross-posting their communities’ content into other parts of Reddit was a vector for brigades, we disabled external cross-posting for those communities that requested it.

▪ Reddit has zero tolerance for the dissemination of terrorist content on our platform, and we constantly monitor the platform to detect and remove it. This includes using tools such as hashing, AI detection tools, and automated URL alerts provided by partners like Tech Against Terrorism. Our goal is for terrorist content to be automatically removed before a human encounters it. We share detailed statistics on these efforts in our Transparency Report (see “Legal Content Removals”).

Operational Process Improvements

▪ We provided refresher training to our safety staff on Reddit’s policies regarding hate, including antisemitism, to ensure that violating content is addressed appropriately.

▪ We instituted new workflows regarding outreach from any sensitive or identity-based communities and reiterated training for the teams doing this work to ensure that the concerns of our communities are reliably escalated.

▪ We onboarded several religious identity-based communities, including Jewish community representation, into our Partner Communities Program. This is a program available to various mod teams to enable their success through higher-touch support and programs to address mod challenges.

Moderator Code of Conduct Enforcement

▪ Our Moderator Code of Conduct applies to all Mod teams. We are committed to enforcing it equitably and transparently, and these details are disclosed in our Transparency Report (see the “Communities” section).

▪ In this instance, we received and investigated four reports of interference with some Jewish communities, and instituted Moderator Code of Conduct proceedings where violations were found.

▪ We heard allegations from the mods that discriminatory auto-banning might be happening in other communities based on participation in Jewish communities, which would be a clear violation of both the Moderator Code of Conduct and Reddit’s rules against identity-based abuse. While we did not find evidence of this being a widespread phenomenon, we did note one temporary, small-scale, unintentional incidence of misuse of a moderation bot in one community, which we’ve since addressed. We will continue to monitor for this activity and will take action if we detect it.

Engagement with Mod Feedback

▪ We took extensive mod feedback on various user experience and interface issues. We shared this feedback with our product team to review and remain committed to continuously developing and improving our product safety offerings and mod tool functionality.

ADL gratefully acknowledges the supporters who make the work of the Center for Technology and Society possible, including:

Anonymous

The Robert A. Belfer Family

Crown Family Philanthropies

The Harry and Jeanette Weinberg Foundation

Modulate

Quadrivium Foundation

The Tepper Foundation