Executive Summary

Some Facebook groups in local communities have become toxic sites of harassment, particularly identity-based harassment against Jews, women, LGBTQ+ advocates, immigrants, and people of color.

ADL has investigated online hate and harassment occurring on Facebook groups or pages in three local regions through interviews with community members, targets, and regional directors, combined with online observation, content review, and quantitative data analysis. In all three cases, the harassers—often part of the local political establishment, whether anonymous or not—excluded those they perceived as threatening outsiders to consolidate their control over civic life.

In a suburban town outside New York City, neighbors organize against a growing Orthodox Jewish community, claiming new synagogues and yeshivas (Jewish schools) are undermining their quality of life. In a large town near Boston, a backlash ensues when an outsider, a woman of color, wins a municipal election, triggering a spate of harassment on Facebook and other online spaces. In small-town Ohio, a local reproductive rights activist is hounded out of town when she runs for city council, harassed online and in person by residents and extremist groups. These incidents all share a common feature: hate-based harassment taking place on neighborhood Facebook pages and groups.

Researchers at ADL Center for Tech and Society (CTS) investigated online hate and harassment in local regions, identified through reports to ADL’s regional offices. Through interviews with regional directors, community members, and targets, combined with online observation, content analysis, and data scraping, we found that some Facebook groups in these communities have become toxic sites of harassment, particularly identity-based harassment against Jews, women, immigrants, and people of color. In some cases, this harassment was overt, while in others, harassers deployed levers of public governance — zoning laws, FOIA requests, community hearings — to harry newcomers, intimidate critics, and ultimately, consolidate political power.

ADL has previously found that most Americans who experience online harassment are harassed on Facebook: 54% in the previous year, according to our 2023 annual survey of online hate and harassment. Facebook is the most popular social media platform in the US by number of users; even so, the number of people who report harassment there is out of proportion to its popularity. That 54% share is twice that of the next most common platform for harassment, X/Twitter. You are more likely to be harassed on Facebook than on other platforms, yet this harassment is rarely visible to researchers. Facebook provides very limited data access, making it understudied compared to platforms like X/Twitter and Reddit, which until 2023 provided public tools (called APIs) for studying their data (see ADL’s 2023 report on data accessibility for evaluations of each major platform). So where is this harassment happening and who is targeted?

Unlike other forms of online harassment, such as trolling campaigns where targets don’t typically know their perpetrators (the most notorious of which was dubbed “Gamergate” in 2014), targets of local harassment are more likely to interact with their harassers in everyday life, inhabiting the same town or region, and often know people in common. Harassment where you live can be even more damaging. Not only can it cause emotional and reputational harm (as well as lead to physical violence), but many targets withdraw from participating in civic life, effectively silencing critics.

Addressing online harassment in local communities requires concrete steps from platforms and policymakers and broader investments in public life. Facebook must close loopholes that allow moderators to remain anonymous on community Groups and Pages, given its “real names” policy elsewhere; it must also apply network analysis to moderating content in these spaces, where harassment typically involves cumulative attacks that may not be obviously harmful in each individual comment or post. All targets we interviewed said they reported the harassment to Facebook but were told it didn’t violate Facebook’s community guidelines. Yet we found many posts and comments that used hateful language or targeted others based on a protected characteristic like religion. Such reports should serve as signals to Facebook that particular Groups and Pages have a harassment problem. Often, residents were targeted in private groups where the harassment was visible to others in their community but not to the targets themselves if they weren’t members. This allows perpetrators to identify targets and coordinate harassment, leaving targets without recourse. Facebook must also allow targets to escalate reports of harassment, especially ongoing campaign harassment or threats of violence, which are often implicit, as ADL advocates in our 2023 report card on abuse reporting tools, Block/Filter/Notify: Support for Targets of Online Hate Report Card.

Beyond Facebook policies and enforcement, broader changes are needed in local communities to support targets, educate law enforcement, and build trust in public institutions. Targets need better information on how to respond to local online harassment: how to document it, how to report it to platforms and law enforcement, and how to protect their safety, for example by increasing privacy protections on their social media accounts. Local law enforcement, too, needs to understand local laws, the nature of online harassment, and their jurisdiction in order to provide meaningful help for targets.

Background

The rise of community and neighborhood pages on social media coincides with a decline in local news media (and daily newspapers generally) and accelerated during the pandemic, when online community spaces became a central alternative to in-person communication. The rise of social media and digital news contributed to the consolidation of national news at the cost of local newspapers, which have disappeared dramatically over the past two decades, as numerous researchers and journalists have chronicled (see, for example, the annual State of Local News report by researchers at Northwestern University). Social media can serve as a new “public square,” but the mainstream platforms are privately owned by tech companies, meaning such public spaces are privately controlled.

In this context, Facebook has become a key platform for neighborhood news and discussion groups. Communities across the U.S. typically have multiple Facebook Groups or Pages, some open, some closed, devoted to local topics, from neighborhood parent groups and “Buy Nothing” groups to community discussion pages. For many residents, these pages are important sources of information about local happenings, including community hearings, elections, livestreaming board or council meetings, etc. In many cases, one or two area groups fragment into multiple ones over political polarization and disagreements about whether or not to engage in “political” discussions, such as party politics, national conversations about race or sexuality, etc. Facebook has also declined in the past decade in popularity among young people (who prefer mobile video and image-based apps like Instagram and TikTok), even though it remains the second most popular social media site overall, after YouTube (according to Pew, 68% of American adults used Facebook in 2023). Many American adults use Facebook for community connection and engagement, while neighborhood platforms like Nextdoor have been introduced to address these needs as well, although they have not been adopted as widely.

Findings: Case Studies & Quantitative Evidence

Case Studies of Localized Harassment

We identified a shared pattern of harassment across the diverse locales we studied. This report describes examples from each of the three regions/communities we studied, showing how they illustrate this larger pattern. We have removed identifying details to protect the privacy of targets and others we interviewed.

- An event or series of events unfolds when someone from a marginalized group gets involved in local civic life, such as running for office, bringing to the surface ongoing tensions over demographic change in the community

- These tensions erupt in existing or newly created community Facebook Pages and Groups, involving online hate and harassment, often anonymously

- Disgruntled community members pursue public actions such as FOIA requests, allegations of corruption, doxing those they see as interlopers, in-person protests, and other forms of online and offline harassment like hateful memes and messages

Example #1: The New Group on the Block

A new group, often from a marginalized community, begins arriving in a suburban town.

- Some local residents object to perceived neighborhood changes

- Disgruntled residents post to neighborhood social media pages, or create new pages or blogs, to complain or harass newcomers

- Residents use levers of local governance (zoning laws, local elections, other regulations) to make life difficult for newcomers but deny identity-based harassment is a factor

Since the 1970s, Orthodox Jewish communities from NYC (mainly Brooklyn) and Philadelphia have been moving to suburban towns and villages in New York and New Jersey, such as Kiryas Joel, founded in the 1970s and established as a separate town in 2017. This process intensified in the past decade as these communities, like many others, found themselves priced out of bigger metropolises and looked for more affordable places to live. In their new towns, these communities seek to recreate necessary features of observant Jewish life, building yeshivas and synagogues, or hosting community events in their homes.

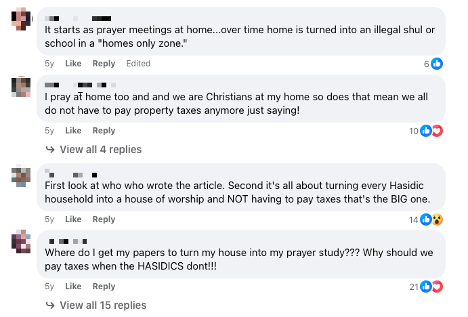

Jewish organizers fought back and succeeded in getting Facebook to pull down the group in February 2020 for hate speech, but only after lobbying public officials. These takedowns did not end the harassment; numerous similar websites and Facebook pages sprouted up to cover similar issues in nearby towns, complaining about density and overdevelopment, alleging corruption on a local planning board (whose president was an Orthodox Jew), and raising other “quality of life” concerns. Although these concerns can reflect legitimate issues, the rhetoric disproportionately targeted Jewish homes and buildings and drew on antisemitic imagery and tropes. One Facebook group started in 2022 lamented that building new houses of worship (in this case, synagogues) would lead to unwanted parking in residential areas and would cut into property tax revenue (since houses of worship are exempt). In the following example, residents argue that Hasidic households are trying to avoid paying taxes, evoking antisemitic tropes of Jewish greed (Figure 2).

Figure 2. Discussion on a Facebook community page where residents claimed that Hasidic families were converting homes into synagogues and schools to evade taxes, drawing on antisemitic stereotypes

These new sites and pages regularly linked back to the original ones. Though they similarly focused on issues of overdevelopment and zoning, many of the comments took aim at Orthodox residents, whom commenters viewed as having “destroyed” quality of life in another town that has a larger Orthodox population. This town has since become synonymous with decline allegedly caused by the longstanding Orthodox community. As one commenter wrote in September 2023: “[the hearing] was moved to [redacted] so it’s closer to [redacted] and more of that community will attend, just so it looks like they have more support, DISGUSTING.” “That community” explicitly refers to the Orthodox.

Although comments on these public Facebook pages rarely crossed into explicit hate speech, they regularly invoked classic antisemitic tropes and themes about Jewish greed and secret control over finance and politics in the U.S. (Other incidents of antisemitic harassment that residents reported to ADL were explicitly hateful.) A post last June by the group admin illustrates this typically veiled antisemitism:

[NAME OF TOWN] RESIDENTS ARE BEING VICTIMIZED BY A POLITICAL BLOC WHO WANTS POWER. Sorry [Name] and [Name], we call you out with your AG, DOJ and [NAME] prosecutor friends who promote a "UNITY" project that has ONE agenda, and it is not for the average AMERICAN family, its a sham. The agenda to destroy a town, and its public-school children, at the hands of [name] and [name] is all about ...... $$$$$$ That benefits a Conglomerate that hides behind SHELL companies.

The two men named both have recognizably Jewish names; it’s also intimated that the average “American” family is not Jewish, and that Jewish greed will destroy the town. When members of one group backed off of the more inflammatory language and acknowledged that it bordered on hate, there appears to have been a schism, which led to another new website. A post on that site called out the first group for failing to be loyal:

It wasn’t too long ago that [first group] was on the same team, that Quality of Life matters. Now [first group] has turned on [town] Residents. What we all consider common sense, he labels it, “to stop orthodox Jewish families from moving to [town] “& “..bordering on hatred, fueled by pent up frustration” NO LOYALTY or TRUST

This second group shared the same GoFundMe and PayPal accounts as yet a third group, suggesting that these groups were closely linked. When we searched for references to these groups on fringe platforms like Gab and Telegram, we found support from more explicitly hateful groups, such as a European “identitarian” group based in New Jersey, with the tagline “Men of European descent coming together to preserve their heritage, race, and nation. Located in New Jersey.” Their Gab page openly endorses anti-Jewish, anti-immigrant, racist views, accusing Black Lives Matter and antifa activists of being “Jewish terrorists” and asserting that “mass immigration is white genocide,” a classic example of the great replacement theory.

Antisemitic themes and harassment didn’t only take place online in these towns. A rash of attacks took place in these New York and New Jersey towns between 2019 and 2020, including doxing, burglary, vandalism, and threats. In one particularly egregious incident one Shabbat, the tires of cars parked at Jewish homes were slashed (57 sets in all, only in front of houses with mezuzot) and a dead pig was left on a rabbi’s porch. An even more violent assault took place in 2022, when an assailant stole a victim’s car and used it to attack four Jewish men wearing traditional clothes, striking three of the men and stabbing a fourth (all survived). The attacker has since been charged with a hate crime.

Example #2: Resisting The New Town Order

A historically white, suburban or exurban town becomes more ethnically diverse over time, leading to backlash when a perceived outsider gains political power.

- An anonymous group or groups form on Facebook and privately run news-style blogs to target women, people of color, immigrants, and/or LGBTQ+ members of the community, especially those visible in public life

- Harassers imitate the style of local news reporting, covering plans for new developments, crime incidents, and updates about local businesses, often publishing documents acquired through extensive FOIA requests that are burdensome for municipal government

- Some targets continue their efforts and resist intimidation, but in many cases, critics are silenced and the political establishment maintains power

In 2017, a large suburban town in central Massachusetts elected its first mayor of color, a highly educated and accomplished woman not originally from the area. This town had also seen steady Latin American immigration over the years and has a large bilingual population as a result. More recently, housing pressure from Boston has led to developers proposing large residential buildings in the town center, triggering concerns about density, traffic, parking, and other quality-of-life issues.

In 2018, an anonymous person or group launched a news-style website, which was eventually replaced by a similar site and a number of Facebook groups. They posted under a single pseudonym, but press coverage and those we interviewed suggest multiple authors. The pages and websites presented themselves as sites for community news, but posts targeted the mayor and non-white residents, mainly a Latin American immigrant community (Figure 3), as well as women and LGBTQ+ people who involved themselves in local politics.

Figure 3. Anti-immigrant comment on a community website in suburban Massachusetts

One woman who was involved in the effort to recruit an alternative to the establishment’s chosen mayoral candidate in 2017 said the city council reacted negatively immediately, organizing to “sink” the newly elected mayor. Some participants of the town’s civic Facebook pages were banned from those spaces for expressing “virulent” views against Black people, women, and LGBTQ+ people. First, they targeted the mayor, especially during the pandemic when they could “hijack” Zoom meetings, posting offensive, harassing comments on Facebook under pseudonyms. When the woman spoke out against the abuse, she was herself targeted, through hate memes comparing her to a witch and even a line of t-shirts. Eventually one of the harassers doxed her on Facebook, sharing her cell phone number and address, leading someone to crash into her yard and others to come and take videos of her. She shrugged off the attacks, refusing to let them bother her, but others said they “would never say anything negative about these people” to avoid being targeted.

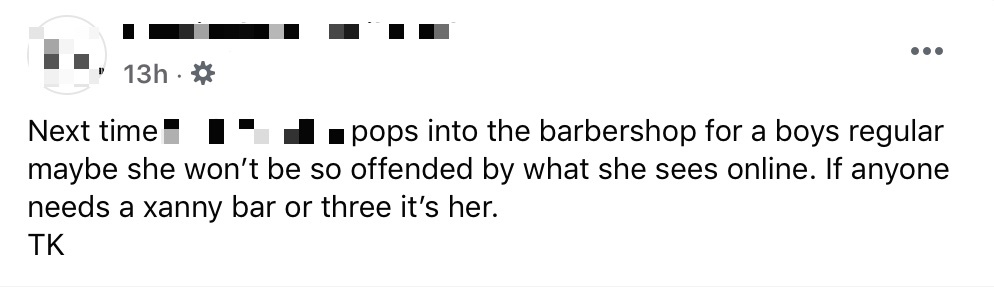

Figure 4. Harassment targeting a Massachusetts woman who criticized harassment happening on her town’s community Facebook pages. The text accompanied a hateful meme with the target’s face.

The mayor ultimately lost re-election; she had not been a politician previously and the harassment led her to become increasingly frustrated with the city council, including in leaked messages. But the harassment didn’t end there. The next mayor appointed people affiliated with one of the websites, including the person who had doxed the former mayor and another whose involvement later became public. The former mayor had not documented the harassment adequately and found it difficult to contest their appointments.

Another person we interviewed was targeted after becoming involved in post-Roe reproductive rights activism; she described these sites (and the people behind them) as “antisemitic, homophobic; they’re everything: pro-life, pro-gun, anti-DEI, everything I’m not.” Although she wasn’t sure who was behind the posts, she suspected people with positions in the town government: “Upstanding citizens, just like with KKK, polite to your face, doing all this stuff behind the scenes.” Her conviction was supported by the frequent, and often rapid, publication of city documents. Someone got ahold of confidential responses to a survey she completed, for example, and threatened to release the data, hoping to embarrass her.

These women found themselves targeted after getting involved in local issues, such as through activism or speaking up at a town council meeting. But they were also visible in community spaces on Facebook, such as “town community chat,” created for discussing topics of civic and community interest. One suspects her support for the former mayor put her on harassers’ radar. Often, more direct harassment took place in private groups and pages, and she only found out about it when someone shared screenshots with her. Not being a member of these private Facebook groups made it all the more difficult to report. Even though these were private Facebook groups, others in her community were members and witnessed the harassment, causing harm even if she wasn’t there.

While they refused to be intimidated, the harassment succeeded in chilling the speech—and civic participation—of others. By the spring of 2023, a person alleged to be one of the founders of the hateful site was appointed to a town committee. Although some local leaders objected, he insisted he had stepped away from the site and apologized to city council members. Overriding the concerns of some council members, a majority voted in favor of his appointment.

Example #3: Trouble in a Small Town

A small town drives out an activist who gets involved in local elections:

- The pandemic sent many urban professionals seeking more space to the suburbs and small towns, leading to a spike in housing prices and a decline of inventory in 2021-2022 that has not let up

- The end of Roe in 2022 sparked renewed reproductive rights activism across the U.S., further polarizing local communities

- School boards and other local elections have become targets for activism, especially attacks on LGBTQ+ rights and teaching race in schools

- Individuals who speak out in favor of causes like LGBTQ+ or reproductive rights are harassed online and offline, including by local chapters of extremist groups

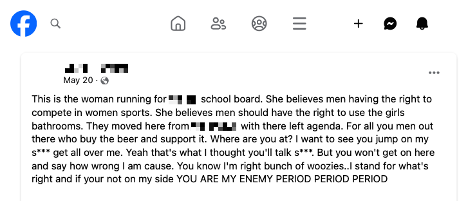

A white woman in her late 30s moved from urban Ohio to a small town with her family after the 2020 COVID pandemic, seeking more green space and lower crime rates. In 2023, following the end of Roe v. Wade and the constitutional right to abortion, she also became active in reproductive rights advocacy and supported queer visibility such as drag performances. In her new town, which she initially found welcoming to more progressive politics, she joined local civic Facebook groups, similar to those described in the previous case studies. Motivated by issues such as problems with the town water, she announced a run for City Council on the town’s Facebook page, while her husband similarly announced a run for school board. She was also engaged in gathering signatures for a women’s health initiative that welcomed transgender and nonbinary people.

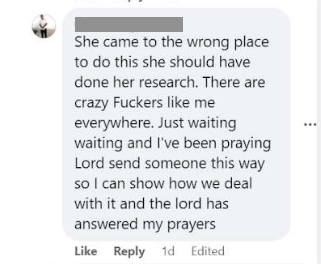

Figure 5. Facebook comment targeting an Ohio woman who advocated for LGBTQ+ and reproductive rights

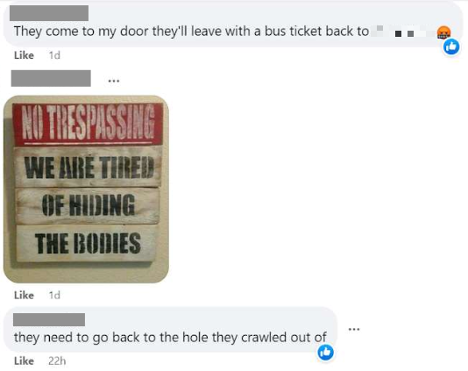

Although in daily life they felt they had found a supportive online community (new friends for her daughter, sharing vegetables from neighbors’ gardens), they faced an “onslaught” of “degrading and vile” attacks because of their advocacy efforts and their left-wing politics (Figure 5). When they had privately supported liberal causes, such as putting up a pride flag in their yard, they found others in the community who were like-minded. But when she sought to have a pride flag flown in town, it led to “all hell… breaking loose.” A local public official allegedly led much of the harassment, often supported by other residents, primarily on these Facebook community discussion groups (Figures 6 and 7).

Figure 6. Facebook comment by Ohio town residents implicitly threatening violence (“we are tired of hiding the bodies”). Using an image instead of text makes it more difficult for Facebook to detect.

Figure 7. Facebook comment threatening violence implicitly, skirting Facebook’s rules

The harassment escalated quickly, including threats on Facebook’s messaging app, harassers sharing photos of her child, and threats to call Child Protective Services. She continued her involvement with reproductive rights and LGBTQ+ causes, even as conflict over drag and transgender visibility reached a fever pitch in Ohio and across the U.S.; at one drag brunch in another town, neo-Nazi groups showed up to protest. Not long after, she was informed by a friend that she was being targeted by multiple far-right groups accusing her of being a “groomer”: “a groomer is running for office in [small town], be prepared to offer resistance.” She was supposed to speak at a town meeting, asking the mayor to resign over corruption, but instead, she and her family fled when they learned an extremist group would be attending the meeting to “protect straight people.”

The personal and financial costs of this harassment were severe, including likely bankruptcy, lost earnings, the stress of moving and finding somewhere to stay on short notice, and emotional challenges for their young child, not to mention giving up the small-town life they otherwise loved.

Antisemitism on Facebook: Quantitative Evidence

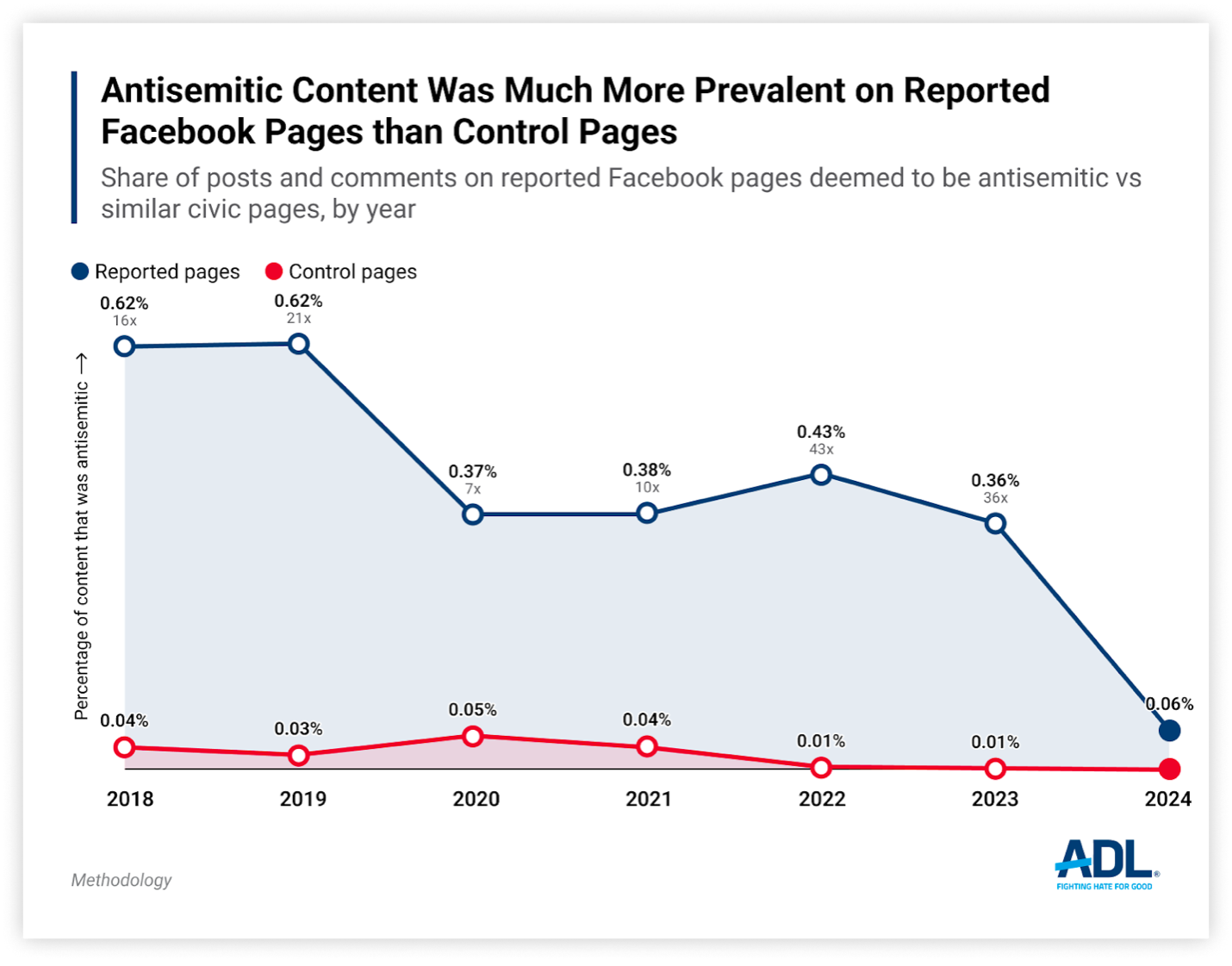

In addition to interviews and qualitative analysis for the three case studies, ADL researchers analyzed the quantity of antisemitic content on Facebook communities where harassment took place, using ADL’s own antisemitism classifier. Our findings supported the firsthand experiences of those we interviewed: the reported Facebook communities had 5-10 times as much antisemitic content as control pages.

Chart 1. Antisemitic Facebook content higher on reported Pages than control Pages

We compared neighborhood Facebook pages where harassment took place to similar civic pages (such as town Facebook pages), collecting a total of 46,290 posts and 82,723 comments for the control pages from January 1, 2018, to February 14, 2024. For the same time period, we collected 10,172 posts and 72,087 comments for the reported Facebook pages. We analyzed the data using ADL’s antisemitism classifier, a machine learning model which rates content for being antisemitic, and found that the reported pages had up to ten times more antisemitic content compared to the control pages. Most antisemitic content was in comments rather than posts.
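
As a rough illustration of the comparison described above, the following Python sketch computes the share of classifier-flagged items on two sets of pages and the ratio between those shares. This report does not describe the output format or cutoff of ADL's classifier, so the score lists, the 0.5 threshold, and the function names are all hypothetical, and the numbers are toy values rather than the study's data.

```python
from typing import Sequence

# Hypothetical cutoff: scores at or above this count as antisemitic.
# The report does not state what threshold ADL's classifier uses.
THRESHOLD = 0.5


def count_flagged(scores: Sequence[float], threshold: float = THRESHOLD) -> int:
    """Count items whose classifier score meets the threshold."""
    return sum(1 for s in scores if s >= threshold)


def rate_ratio(flagged_a: int, total_a: int, flagged_b: int, total_b: int) -> float:
    """Return how many times higher the flagged rate is in group A than in group B.

    Equivalent to (flagged_a / total_a) / (flagged_b / total_b), rearranged
    into a single division to limit floating-point rounding.
    """
    if total_a == 0 or total_b == 0:
        raise ValueError("both groups need at least one item")
    if flagged_b == 0:
        raise ValueError("ratio is undefined when group B has no flagged items")
    return (flagged_a * total_b) / (flagged_b * total_a)


# Toy scores, invented for illustration only.
reported_scores = [0.9, 0.1, 0.7, 0.2, 0.6]
control_scores = [0.1, 0.8, 0.2, 0.3, 0.0, 0.1, 0.2, 0.4, 0.3, 0.1]

ratio = rate_ratio(
    count_flagged(reported_scores), len(reported_scores),
    count_flagged(control_scores), len(control_scores),
)
print(f"Reported pages have {ratio:.1f}x the flagged rate of control pages")
```

Normalizing by the number of items matters here because the two samples differ in size (the control set contains more posts and comments than the reported set), so raw counts of flagged items would not be directly comparable.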

We were only able to scrape data from public Facebook Pages, and not from Facebook Groups (which are structured differently than Pages). Based on interviews, hateful and harassing content is likely even more prevalent in private Groups and Pages. In one instance, we discovered a Facebook Page that was not reported to us that had a similarly high frequency of antisemitic content; the rest of the control Pages, however, had no antisemitic posts and little to no antisemitic comments.

We also found that some of the reported Pages had overlapping users: two pages in NY/NJ, one ostensibly a historic preservation group and one of the Pages notorious for targeting Orthodox residents, shared 65 (out of 135 total) unique users. A much smaller number of users accounted for a disproportionate number of antisemitic comments: across all the reported pages, we found that almost a quarter, 24%, came from just five unique users.
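
The two measures in this paragraph, user overlap between Pages and the concentration of comments among a few accounts, can be sketched as follows. This is a minimal illustration and not ADL's analysis code: the user IDs are invented and the function names are hypothetical.

```python
from collections import Counter
from typing import Iterable, Set


def shared_users(page_a: Iterable[str], page_b: Iterable[str]) -> Set[str]:
    """Return the users who appear on both pages."""
    return set(page_a) & set(page_b)


def top_k_share(comment_authors: Iterable[str], k: int = 5) -> float:
    """Fraction of comments written by the k most prolific authors."""
    counts = Counter(comment_authors)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    top = sum(n for _, n in counts.most_common(k))
    return top / total


# Invented user IDs, one entry per active user on each page.
page_a_users = ["u1", "u2", "u3", "u4"]
page_b_users = ["u3", "u4", "u5"]
overlap = shared_users(page_a_users, page_b_users)

# Invented author list, one entry per flagged comment.
flagged_authors = ["u1"] * 3 + ["u2"] * 2 + ["u3", "u4", "u5"]
share = top_k_share(flagged_authors, k=2)

print(sorted(overlap), f"{share:.1%}")
```

A high top-k share means a small number of accounts drives most of the flagged content, which is the pattern the report describes for the five most active users.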

Discussion: Chilling Online Civic Participation

As ADL’s previous research has shown, women, people of color, Jews, and LGBTQ+ people are disproportionately targeted for identity-based online harassment. Campaign or networked harassment discourages already marginalized or minority groups from having a public voice in online spaces. Often, just being a woman of color on social media or a trans woman gamer is enough to trigger severe online abuse. When networked harassment takes place on local community discussion pages and forums, such as Facebook groups or independent blogs, it chills not only targets’ public voice, but their participation in local civic and political life.

We illustrate three main examples from our case studies: 1) harassment when a minority group arrives in a relatively short period of time (as in suburban NY/NJ); 2) harassment when existing groups gain political power, influence, and visibility (as in suburban MA); and 3) harassment and conflict when a newly transplanted urban professional advocates for abortion and LGBTQ+ rights in a small town in Ohio. Despite their differences, these three incidents shared multiple features, including economic pressures, demographic transitions, the growing importance of Facebook groups and pages to community life, and failures by platforms and law enforcement to handle reports of harassment adequately.

In all three cases, the harassers—often part of the local political establishment, whether anonymous or not—consolidated their control over civic life, excluding those they perceived as threatening outsiders.

Platforms Fail to Prevent Harassment

In each set of incidents, extensive harassment took place on Facebook, often across multiple public and private Facebook groups. But when targets reported to Facebook, Facebook routinely determined that the content “does not violate our community standards.” As one person put it, “Facebook never does anything.” Most people we interviewed reported that Facebook never took any action. Only in one case did Facebook eventually remove a hateful page, after community members enlisted the help of a state district attorney. Some we spoke with wished for greater transparency from Facebook, such as more dialogue about why a piece of content—such as an explicit slur—doesn’t violate their rules. Although Facebook reports that it removed 94.5% of hate speech it found proactively in the fourth quarter of 2023, ADL’s annual survey of online hate and harassment found that most people who are harassed experience harassment on Facebook (54% of people who were harassed were harassed on Facebook).

For Facebook, the individual pieces of content users report may not violate their policies or may require additional context for a human reviewer to understand why the content is violative. In these types of networked harassment, however, it is the accumulation and repeated nature of ugly comments that constitutes harassment, or implicit references that invoke hate, such as complaining about one town becoming more like a nearby town known for having a majority Jewish population. Facebook Groups also allow moderators to remain anonymous, despite the platform otherwise requiring “real names.” While ending anonymity would not be a panacea for harassment (many harassers in our study used their real names), anonymity makes it possible for public figures to evade responsibility for engaging in hate or harassment.

Local Law Enforcement Limited in Ability to Respond

Besides reporting to Facebook, many people we spoke with contacted local law enforcement, especially targets of more severe harassment. One woman in MA spoke with her town’s chief of police as well as other detectives and the county DA, who concluded there wasn’t sufficient evidence to charge one of her harassers for allegedly hacking city records. Another target in MA suspected that town officials were behind some of the harassment. In Ohio, the target went to the police, and while some officers were sympathetic, she felt that others were siding with the harassers. Police told her they would have a threatening comment removed but were ultimately unable to do so. Another time, when she learned far-right protesters would attend a town meeting to protest her, she claims the police would not take a report as no direct threats were made.

Even when police or other law enforcement are sympathetic, their jurisdiction may be limited, or at least they may believe it is. Most police departments have limited training and inadequate policies on cybercrimes like swatting, doxing, or harassment. One man in MA had a more positive experience with local police, who offered their protection and got federal investigators involved. He also had the support of the mayor, however, who had contacted the police on his behalf. This community and institutional support provided a greater sense of safety and mitigated some of the harm.

Local Online Harassment Harms Targets & Chills Civic Participation

In all cases localized online harassment took a toll on those who were targeted, burdened their families, friends, and other witnesses, and dampened their participation in civic life. Orthodox Jews in NY and NJ were made to feel like pariahs for being observant Jews in historically non-Jewish areas. A rabbi and community representative told us about a Hasidic resident who was confronted in line at a hardware store by someone who believed of Hasidic Jews that “you don’t pay taxes, you live on welfare” and so forth, based on what others said online. In other incidents, local non-Jewish candidates for office described the Orthodox as “parasites” to be exterminated, the type of rhetoric that ultimately underpins and justifies genocidal violence.

In suburban MA, the targets we spoke with refused to be intimidated, and wanted to share their experiences with us to call more attention to the problem. As one woman explained: “It didn’t affect how I operated… I never replied on their page, I just looked at it.” But it concerned her family, who were worried it could lead to in-person violence at their home. Her adult children asked her to quiet down and not risk further ire. Her friends were upset as well, and were sometimes harassed themselves, causing them to withdraw from public engagement: “I know, because people told me, [that] it chilled free speech of other people, ‘cause no one wanted that target on their back.” Meanwhile, the alleged harassers, even after being outed, succeeded in gaining positions in local governance, such as committee appointments.

The most dramatic example of online harassment curtailing civic participation took place in small-town Ohio. The family targeted with an ongoing harassment campaign learned that an extremist group planned to protest at a town meeting where they were planning to speak against the mayor. Neo-Nazi groups at the time were regularly protesting events in other Ohio cities and towns, such as at a drag queen brunch. The family decided for their safety to leave town and contacted the FBI and local elected representatives. After a harrowing time packing up themselves and their young daughter and scrambling for somewhere safe to stay, they left their dream home and never returned. They watched the town meeting on Facebook instead, and saw one of their harassers and multiple members of a far-right group on the town’s livestream. The personal costs were extremely high, including emotional distress (especially for their young daughter) and bankruptcy, but they remain committed to their politics, even if they can no longer pursue them in their small town.

Extremist Groups Target Local Sites of Polarization

In addition to the harms described so far, we found evidence of extremist groups in two of the three sites, and potential but more marginal ties in the third. In Ohio, multiple extremist groups were actively protesting LGBTQ+ and reproductive rights activism, including the group that showed up at the town meeting. As they were leaving, the targeted family wanted to report the threats to the town police, but a watch group that had tipped them off about the protest alleged that some officers were involved with known extremist groups.

In NY and NJ, we found ties online to at least one white identitarian group, promoting the harassing websites and groups on the fringe platform Gab. In a post from June 2019, the group described flyering in New Jersey in support of their efforts:

[Identitarian Group] activists distributed and posted flyers in [Town Name] NJ in support of [Community Group]. These brave Patriots are being targeted for repression by the Zionist supremacist social media monopolies Twitter and Facebook. The Goldman Sachs puppet Governor of NJ and his foreign-born Attorney General are persecuting this group for daring to exercise their First Amendment rights. [Community Group] has a right to organize!

While we couldn’t find direct ties back from the community group to the white supremacist group, the group had a Gab account, as did one of the organizers.

In suburban MA, ties to extremists were weaker but still evident. Interviewees mentioned a pair of well-known area white supremacists who ran their own hateful, antisemitic website; one person suspected they were affiliated. Another person mentioned a far-right radio personality who embraced Trump and antisemitic conspiracy theories.

Recommendations

For Tech Companies

Close anonymity loopholes. Facebook Groups and Pages can be created without linking to a specific Facebook user; only an email address is necessary. Admins can post to their groups under the Group or Page name, effectively allowing anonymous, or at least, pseudonymous, posts. Ending anonymity will not end harassment; in some cases, harassers posted on Facebook under their legal names anyway. But many targets suspected that local officials and community leaders were behind these anonymous groups, who were able to avoid public censure or responsibility for their actions. If Facebook requires users to use their “real names,” why are Pages and Groups not subject to this rule? This loophole is doubly frustrating for the users on these pages who cannot be anonymous as they endure harassment—policies should clarify situations in which anonymity is no longer permissible, like when harassment occurs. The bar should also be higher for public figures than for private citizens; civic leaders should not be able to hide behind anonymity on community social media groups.

Use network analysis to detect local harassment campaigns. Facebook uses network analysis to identify campaigns in other contexts, but does not appear, from what we found, to apply the same methods to local Pages and Groups. All the targets we spoke with said they reported harassment to Facebook multiple times, but were told the individual posts didn’t violate Facebook’s Community Standards, a clear downside of our digital ‘public spaces’ being controlled by private companies. Most platforms review reported posts and comments individually, even though the context is often necessary to understand the harassment, such as multiple harassing comments that accumulate, or implicitly hateful language and references. Facebook and other platforms should also use multiple reports in the same group or against the same user as a signal that human review by subject matter experts is needed.
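As a rough illustration of treating repeated reports as a signal, the sketch below flags any group that receives several reports within a short period so that it can be routed to human review. It assumes a simple list of (group, timestamp) report records; the function name, the threshold of three reports, and the 30-day window are hypothetical choices for the example, not a description of how any platform works.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def groups_needing_review(reports, min_reports=3, window=timedelta(days=30)):
    """Return group IDs with at least `min_reports` reports inside any `window`.

    `reports` is an iterable of (group_id, reported_at) tuples.
    """
    by_group = defaultdict(list)
    for group_id, reported_at in reports:
        by_group[group_id].append(reported_at)

    flagged = set()
    for group_id, times in by_group.items():
        times.sort()
        start = 0
        for end in range(len(times)):
            # Move the left edge forward until the span fits inside `window`.
            while times[end] - times[start] > window:
                start += 1
            if end - start + 1 >= min_reports:
                flagged.add(group_id)
                break
    return flagged


# Toy data (hypothetical): g1 is reported three times in one month,
# g2 three times spread across a year.
reports = [
    ("g1", datetime(2023, 1, 1)),
    ("g1", datetime(2023, 1, 10)),
    ("g1", datetime(2023, 1, 20)),
    ("g2", datetime(2023, 1, 1)),
    ("g2", datetime(2023, 6, 1)),
    ("g2", datetime(2023, 12, 1)),
]
needs_review = groups_needing_review(reports)
```

The same grouping could be keyed on the reported user instead of the group. In either case the output is only a prompt for expert human review, not an automated enforcement decision.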

Provide user-friendly tools to report & prevent harassment. Our Abuse Reporting Scorecard lays out specific recommendations for fixing abuse reporting tools, while our Social Pattern Library demonstrates how these tools would work. For local online harassment campaigns, we recommend batch reporting, real-time support for targets, related activity/cross platform reporting, and delegated access, among other key tools. Targets also need better tools for documenting abuse to ensure effective reporting. Most interviewees took screenshots, but these did not always include necessary information such as user IDs, dates, or comment thread histories.

Make transparency reports accessible and understandable to non-experts. Users should be able to make informed decisions about the risks of social media, including how well the platform enforces its rules against hate and harassment, especially to contextualize their own experiences with localized online harassment. Although the majority of users may not read these reports, they should be aware of their existence and able to make sense of the insights. Reports or hubs should be easily discoverable (reachable in one click from the landing page or from a relevant policy page) and include clear explanations of key metrics and their significance. Making transparency reports more prominent also sends a strong signal about a platform company’s commitment to trust and safety.

Give greater access to data for researchers and third-party auditors. Academic and civil society researchers can be partners to tech companies in identifying gaps in policy enforcement and making online social spaces safer, and in deepening understanding of localized online harassment. Independent auditing is valuable for building trust with the public and holding platforms accountable for addressing harms. That work is only possible when researchers have access to platforms’ data—typically via open APIs—in a responsible way that protects user privacy. Yet recently most social media platforms have been moving in the opposite direction by restricting access to APIs.

For Government

As discussed in this report, too often researchers don’t have adequate access to platforms, and platforms themselves take approaches to transparency that are hard to compare across the industry. Additionally, policymakers can update laws to provide more accountability for hate and harassment online.

We urge federal legislators and lawmakers in other states to follow California’s example and enact policies similar to California’s A.B. 587, a landmark bill requiring publication of aspects of platforms’ terms of service and the actions they take against content that violates those terms.

Furthermore, we support and urge passage of current legislative efforts that aim to address important issues highlighted throughout this report, including: H.R. 6463 - STOP HATE Act, which aims to require social media companies to publicly post their policies on how they address content generated by Foreign Terrorist Organizations (FTOs) or Specially Designated Global Terrorists (SDGTs), and S. 1876 - Platform Accountability and Transparency Act, which would require social media companies to share more data with the public and researchers.

Finally, we urge lawmakers to enhance access to justice for victims of online abuse. As this report discusses, hate and harassment exist online and offline alike, but our laws have not kept up with increasing and worsening forms of digital abuse. Many forms of severe online misconduct, such as doxing and swatting, currently fall under the remit of extremely generalized cyber-harassment and stalking laws. These laws often fall short of securing recourse for victims of these severe forms of online abuse. Policymakers must introduce and pass legislation that holds perpetrators of severe online abuse accountable for their offenses at both the state and federal levels. ADL’s Backspace Hate initiative works to bridge these legal gaps and has had numerous successes, especially at the state level.

For Law Enforcement

- Consider setting up a task force, sub-unit, or committee on digital abuse. Members of this unit should establish a model for supporting victims of abuse while investigating a crime.

Method

ADL researchers conducted research for this study in two phases from October 2022 through December 2023, collecting data from three different geographic regions. In phase one, we reviewed ADL’s internal incident reporting data to see where antisemitic incidents were concentrated and interviewed staff at nine of ADL’s regional offices whose constituents had reported local online harassment campaigns. Comparing ADL incident data to firsthand reports from regional offices, we identified locales with online harassment that met the following criteria: (1) the harassment was ongoing, not a one-off incident; (2) the harassment involved multiple perpetrators and/or targets; and (3) the harassment involved local digital platforms such as Facebook groups. We then recruited targets and community members to participate in in-depth interviews about their experience and interviewed five people who volunteered. One interviewee reached out to us through our recruitment efforts and we added her area to our locales. We also removed one set of incidents that happened primarily on private blogs and websites; the perpetrator’s main website had been taken down and he was ultimately arrested.

Along with interviews, we analyzed social media pages and screenshots that targets shared with us. We reviewed the content of social media pages manually, using thematic analysis to identify key topics, and analyzed them computationally, using ADL’s antisemitism classifier. We manually selected five Facebook pages reported to us as sites of hateful content and antisemitic harassment. We then selected a set of eight control Facebook pages from the same geographic areas, such as town Pages. For each of these pages, we collected all public posts and comments from January 1, 2018, to February 14, 2024. We then used ADL’s antisemitism classifier to calculate scores for each post and comment. This classifier uses a machine learning model ADL built by annotating more than 80,000 pieces of text-based content from Reddit and Twitter (which previously had public APIs for researchers). The data were annotated by Jewish volunteers using a codebook developed by ADL experts.
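The comparison between reported and control pages can be pictured with a short Python sketch. It is an illustration only: ADL’s classifier is not public, so `toy_score` below is a hypothetical stand-in, and the 0.5 threshold is an assumed value that the report does not state. Only the collection window is taken from the text above.

```python
from datetime import date

# Collection window stated in the report.
START, END = date(2018, 1, 1), date(2024, 2, 14)


def flagged_rate(items, score_fn, threshold=0.5):
    """Share of in-window items whose score meets the threshold.

    `items` is an iterable of (posted_on, text); `score_fn` maps text to a float.
    The threshold is an assumption made for this example.
    """
    in_window = [text for posted_on, text in items if START <= posted_on <= END]
    if not in_window:
        return 0.0
    flagged = sum(1 for text in in_window if score_fn(text) >= threshold)
    return flagged / len(in_window)


def toy_score(text):
    """Hypothetical stand-in for a classifier; this is NOT ADL's model."""
    return 1.0 if "flagme" in text.lower() else 0.0


# Toy data (hypothetical): the 2017 item falls outside the window and is ignored.
reported_page = [
    (date(2020, 1, 1), "FLAGME example comment"),
    (date(2021, 1, 1), "ordinary comment"),
    (date(2017, 1, 1), "flagme but too old"),
]
control_page = [(date(2020, 1, 1), "ordinary comment")]

reported_rate = flagged_rate(reported_page, toy_score)
control_rate = flagged_rate(control_page, toy_score)
```

Computing the same rate for reported and control pages from the same areas is what allows the two sets to be compared on equal terms.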

ADL gratefully acknowledges the supporters who make the work of the Center for Technology and Society possible, including:

Anonymous

The Robert A. Belfer Family

Crown Family Philanthropies

The Harry and Jeanette Weinberg Foundation

Modulate

Quadrivium Foundation

Strear Family Foundation Inc.

The Tepper Foundation