Major Platforms’ Midterm Election Policies: Are They Enough?

Social Media Election Policies: The Good, the Bad and the Misinformed

The ADL Debunk: False Narratives Around the 2024 Presidential Election

Executive summary

False and misleading election narratives are surging on social media platforms in the lead-up to the US presidential election. Since 2022, at least three major platforms have weakened their rules against disseminating election misinformation. Of these, X/Twitter also appears to have rolled back enforcement against hateful election misinformation.

Ahead of this election, a flood of narratives rehashing antisemitic tropes and pushing anti-immigrant misinformation has surged. These include rumors that immigrants are voting illegally in large numbers, that Jews or Israel are rigging the election, and that Jews were behind the assassination attempt on Trump. More outlandish theories claim that the federal government (and/or Jews) created Hurricane Helene to suppress voting in swing states like North Carolina.

Rolling back rules against false election narratives

In 2022, ahead of the US midterm election, researchers at ADL analyzed the election misinformation policies of eight major platforms (then-Twitter, Meta’s Facebook and Instagram, TikTok, Reddit, Discord, Twitch, and YouTube). While all platforms had election misinformation policies in place, they failed to enforce them consistently. No platform addressed disinformation specifically (that is, intentionally false information, which platforms more often prohibit under policies against inauthentic behavior), and none allowed adequate access to independent researchers to audit their claims of increased security or misinformation detection. We also found that policy rules and updates were decentralized and difficult to find, often scattered across different pages and site sections.

Following the 2022 election, we conducted an audit of whether five of those platforms enforced their election misinformation rules (Meta’s Facebook and Instagram, TikTok, Twitter, and YouTube).

Our 2023 audit showed that:

Enforcement was inconsistent (even for identical pieces of content)

Rules were often inadequate (for example, against screenshots from rumors on fringe platforms or linking to unreliable content)

Election policies that only apply during elections allow denial narratives and other false election information to flourish between elections.

For 2024, we have updated our analysis to evaluate whether four of these platforms where we found hateful election misinformation (X/Twitter, Facebook, TikTok, and YouTube) implemented any of our recommendations or made other changes to their policies or enforcement.

We found that:

Most platforms continue to make exceptions for some kinds of misinformation, such as false allegations of past voter fraud.

Their policies are generally insufficient to prevent coordinated disinformation campaigns, such as users knowingly spreading fringe conspiracy theories, unless those campaigns interfere with voting.

No platform allows sufficient data access for independent researchers to study the extent of misinformation or rules enforcement. In fact, X/Twitter and Reddit curtailed data access for researchers in 2023.

We find it concerning that major platforms have rolled back policies against delegitimizing elections in two key areas: rules against claiming early victory and rules against false narratives about past elections. One positive change was that all four platforms we re-examined now appear to prohibit threats against election workers, including harassment and threatening violence.

Platform-specific findings

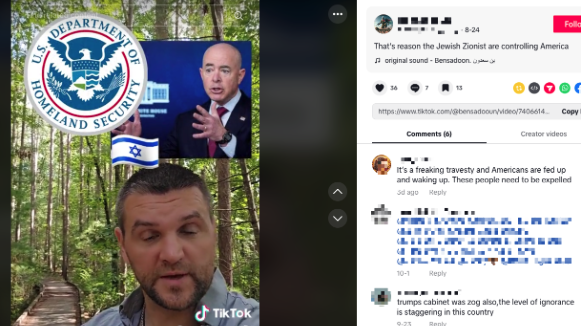

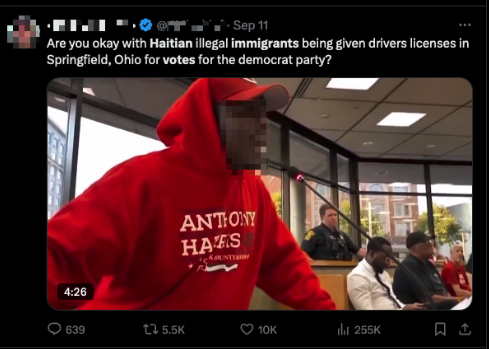

Post on X claiming Haitian immigrants are being given driver’s licenses in exchange for votes in Ohio. (Screenshot by ADL, Oct. 11, 2024)

Video titled “Proof That The Trump Assassination Was A Jewish Ritual” on YouTube. (Screenshot by ADL, Oct. 11, 2024)

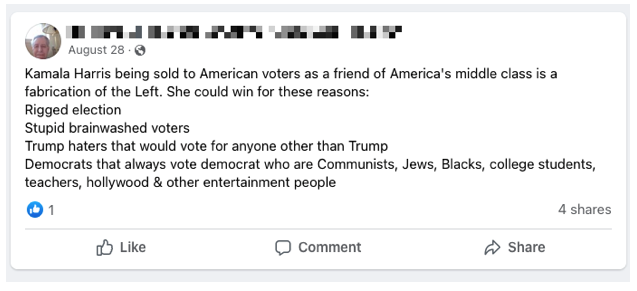

Post on Facebook claiming Kamala Harris could win the election because it could be rigged. (Screenshot by ADL, Oct. 14, 2024)

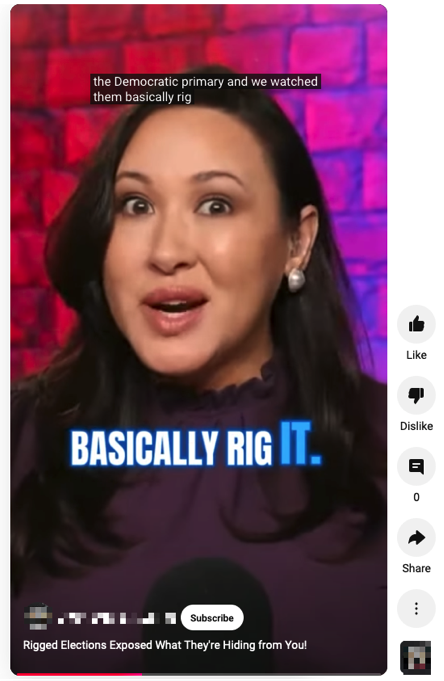

Video on TikTok claiming Democrats rigged the Democratic primary. (Screenshot by ADL, Oct. 14, 2024)

Hateful election misinformation surging

While many platforms weakened rules against false claims of election fraud since 2022, X/Twitter also appears to have rolled back its enforcement against hateful election misinformation. We identified five hateful election misinformation narratives based on research by the ADL Center on Extremism, and collected examples of each on X/Twitter, YouTube, Meta’s Facebook and Instagram, TikTok, and Reddit. We then reported these as regular users.

Our collection efforts were limited by the difficulty of collecting such data; no platform provides a comprehensive research API (application programming interface, a tool for accessing platform data) that allows random sampling of all content. As a result, we cannot know for certain how much false and misleading election information is actually on these platforms or how such content is being recommended to users.
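To illustrate why random sampling matters here, the following is a minimal sketch of how a uniform random sample of all content would let a researcher estimate how common violative content is, with a margin of error. It is not ADL's method and does not use any platform's API: the `posts` list is synthetic stand-in data, and `estimate_prevalence`, `wilson_interval`, and the `violative` field are hypothetical names chosen for this example.

```python
import math
import random


def wilson_interval(hits, n, z=1.96):
    """Wilson score interval for a proportion (z=1.96 is roughly 95% confidence)."""
    if n <= 0:
        raise ValueError("sample size must be positive")
    p = hits / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return max(0.0, center - half), min(1.0, center + half)


def estimate_prevalence(posts, is_violative, sample_size, seed=0):
    """Estimate the share of violative posts from a uniform random sample."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    sample = rng.sample(posts, sample_size)  # sampling without replacement
    hits = sum(1 for post in sample if is_violative(post))
    low, high = wilson_interval(hits, sample_size)
    return hits / sample_size, low, high


# Synthetic stand-in for platform data (assumption): 2% of posts are violative.
posts = [{"id": i, "violative": i % 50 == 0} for i in range(100_000)]

rate, low, high = estimate_prevalence(posts, lambda p: p["violative"], 2_000)
print(f"estimated prevalence: {rate:.3%} (approx. 95% interval: {low:.3%} to {high:.3%})")
```

Manual keyword searches, by contrast, return only content that matches the chosen terms, so counts from such searches cannot be turned into a platform-wide estimate like this one.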

We easily found false election rumors on X/Twitter, including ones targeting immigrants and/or Jews, by searching for terms such as “Jews rigged election,” “Jews Trump assassination,” or “Helene voting.”

Such narratives featured claims that:

Jews or Israel secretly control US elections

Jews were behind the assassination attempt against Trump

Haitian immigrants are illegally voting to help Democrats win the election

Jews in the US government are weaponizing (or even creating) monster hurricanes to interfere with voting

X/Twitter tries to fight misinformation through its "Community Notes" feature. A recent report from the Center for Countering Digital Hate found that "X's Community Notes are failing to counter false and misleading claims about the US election."

We also identified examples of hateful election misinformation on TikTok, YouTube, and Facebook, but they were more difficult to find.

Instagram was the only platform where we could not find obviously violative content through manual search. The only hit that came up in our search was an account with the following profile:

Rigged elections=communism

WATCHWOMAN - Romans 10: 9+10

MAIL IN VOTING will collapse USA

VOTER ID MUST BE ENACTED 2020

We were able to surface more examples of violative content through large-scale data analysis (rather than manual search), but in general, all of the platforms we examined except X appear to be detecting and restricting most hateful false and misleading election narratives. It is possible that such content is circulating among smaller networks or that our manual search terms were not adequate for finding it.

As of publication, no platform had taken action on the hateful election misinformation we reported. After identifying examples across each platform, we reported them as regular users. While ADL partners with platforms to escalate such content, user reporting can scale in a way that relying on watchdog and civil society organizations cannot.

We also found that the user reporting options in some cases do not match a given platform’s policies. This was especially evident on TikTok and on X/Twitter. TikTok’s election misinformation policy is extensive, but the reporting interface lists only two types of violative election content: misinformation about voting or running for office, and misinformation about final election results.

Based on our findings, we recommend that platforms:

Reinstate prior policies: Rolling back rules against false claims of past fraud or declaring early victory has enabled a resurgence of hateful narratives targeting immigrants and Jews. These rumors undermine electoral integrity and put targeted groups at risk, fueling antisemitism and potentially political violence.

Enforce rules consistently and equitably: Policies are only as good as their enforcement. Platforms must scale the resources necessary to identify and remove prohibited election misinformation, including automated and human review. High-profile accounts such as political officials or candidates should not be exempt; on the contrary, because of their influence, they should be held to higher standards.

Ensure user reporting matches policy: TikTok, X/Twitter, and others must make available to users the complete list of prohibited misinformation through the reporting interface. Streamlining the interface to simplify reporting is a worthwhile goal. But the interface is also a means of communicating the rules, and an incomplete list may dissuade users from reporting violative content that isn’t listed. A link to the full policy would address this gap.

Respond to user reports of election misinformation: Our recent research shows that platforms increasingly do not act on regular user reports, relying instead either on their own proactive detection (often automated) or on reports from reputable organizations like ADL, as part of “trusted flagger” programs. Users can play an important role in preventing the spread of hateful misinformation, especially narratives that require human interpretation to detect, such as those that depend on context or implicit information.

Apply election rules year-round: Election misinformation does not end after an election; on the contrary, false and misleading narratives about elections often grow and metastasize between elections.

Provide data access for researchers: Restore (or establish) free or low-cost research APIs for independent researchers that allow for random samples of all content, not just libraries of curated content or top posts. Archives of moderated content (with protections for user privacy) are also necessary to allow researchers to study hate and misinformation in depth, and to track extremist content.