2024 Online Antisemitism Report Card

Executive Summary

Platforms are still failing to take action on antisemitic hate reported through regular channels available to users. Researchers at the ADL Center for Technology and Society (CTS) tested how well five major platforms enforced their policies against hateful content in two areas: antisemitic conspiracy theories and the term “Zionist” used as a slur.

Most platforms only took action when ADL escalated the reports through direct channels, and even then, only on some of the content ADL deemed hateful. We recommend that tech companies conduct comprehensive audits of how their platforms moderate antisemitic content.

Introduction

ADL has documented a dramatic increase in antisemitic hate online since the October 7, 2023, attacks by Hamas on Israel. In many cases, the hateful language has also shifted, and new antisemitic conspiracy theories have taken shape alongside the resurfacing of old ones.

ADL has previously evaluated leading tech companies Meta (owner of Facebook and Instagram), TikTok, YouTube, and X on their policies and enforcement to curb antisemitism, finding that most platforms have appropriate policies in place but fall short on enforcing them. This scorecard similarly evaluates both policy and enforcement around antisemitism, but it focuses on more specific ways that antisemitism manifests online, especially following the October 7 attack on Israel.

Antisemitic conspiracy theories and the use of “Zionist” as a slur (often referring to all Jews) have long been widespread, especially among right-wing extremists. In the aftermath of the Hamas attacks, however, the term has become a more common way to denigrate Jews and Israelis. Meta recently announced that it was updating its policies to address this shift, a decision ADL applauded. Although other platforms may have similar policies, Meta’s very public statement helped push back against the growing acceptance of the term’s use as an antisemitic slur or in a manner that threatens or incites violence. ADL’s annual report on online hate and harassment found that 47% of Jews saw antisemitic content or conspiracy theories related to the Israel/Gaza war, compared to 29% of Americans overall. The ADL Center for Antisemitism Research has also found that belief in conspiracy theories correlates with antisemitic sentiment.

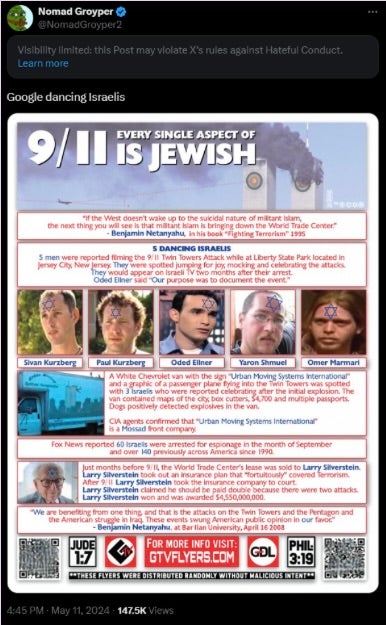

For this scorecard, ADL researchers reviewed the five platforms’ antisemitism policies, then identified potentially violative content in each category. For antisemitic conspiracy theories, we selected examples that combined false or misleading information with antisemitic sentiment, such as blaming Jews for September 11th. For use of Zionist as a slur, we identified examples where the term was used to refer to all Jews and/or Israelis in a hateful or derogatory way. We then reported this content (such as posts or comments), first as a regular user and then as a trusted flagger.

We evaluated each platform in the following six areas:

Policies concerning hateful conspiracy theories

Policies concerning slurs targeting marginalized groups

Enforcement against antisemitic conspiracy theory content reported by a regular user

Enforcement against antisemitic conspiracy theory content reported by a trusted flagger

Enforcement against Zionist used as a slur, reported by a regular user

Enforcement against Zionist used as a slur, reported by a trusted flagger

Method

To test each platform’s enforcement of its antisemitism policies, we first reported individual pieces of content using tools available to a regular user. After a week, we checked to see whether any action had been taken. For content where no action or partial action (such as limiting the content’s visibility) had been taken, ADL escalated the content to our direct points of contact at the companies, most often via our trusted flagger partnerships.

We also evaluated each platform’s policies on whether they appear to cover antisemitic conspiracy theories and slurs targeting marginalized groups. This generally encompassed a review of policies related to misinformation, hate speech, harassment, and cyberbullying, as well as specific language around conspiracy theories and slurs.

Comparing policies against actual content often surfaces nuances, making case-by-case determinations necessary for a large share of potentially violative content. Each piece of content we escalated through trusted flagger channels was evaluated by our internal experts against the platforms’ policies. CTS collected 391 pieces of content related to antisemitic conspiracy theories or using Zionist as a slur. After our internal evaluation, we included 195 (49.9%) of these in our research. We reported between 10 and 17 pieces of antisemitic conspiracy theory content, and between 13 and 37 pieces of Zionist as a slur content, to each platform.

Following the platforms’ reviews of the content we escalated through trusted flagger channels, we evaluated each platform on its rate of action against content we reported as a regular user and on its cumulative rate of action after trusted flagger escalations.

Platforms received full credit if they had policies that covered each type of antisemitic content, and none if they did not. In our scoring, we gave less weight to whether they had appropriate policies than to how well they enforced them, as policies are only as good as their application. We also wanted to ensure that the content we flagged would be considered violative by the platforms. Our internal experts evaluated each piece of content against the platforms’ policies before we escalated the report through trusted flagger channels.

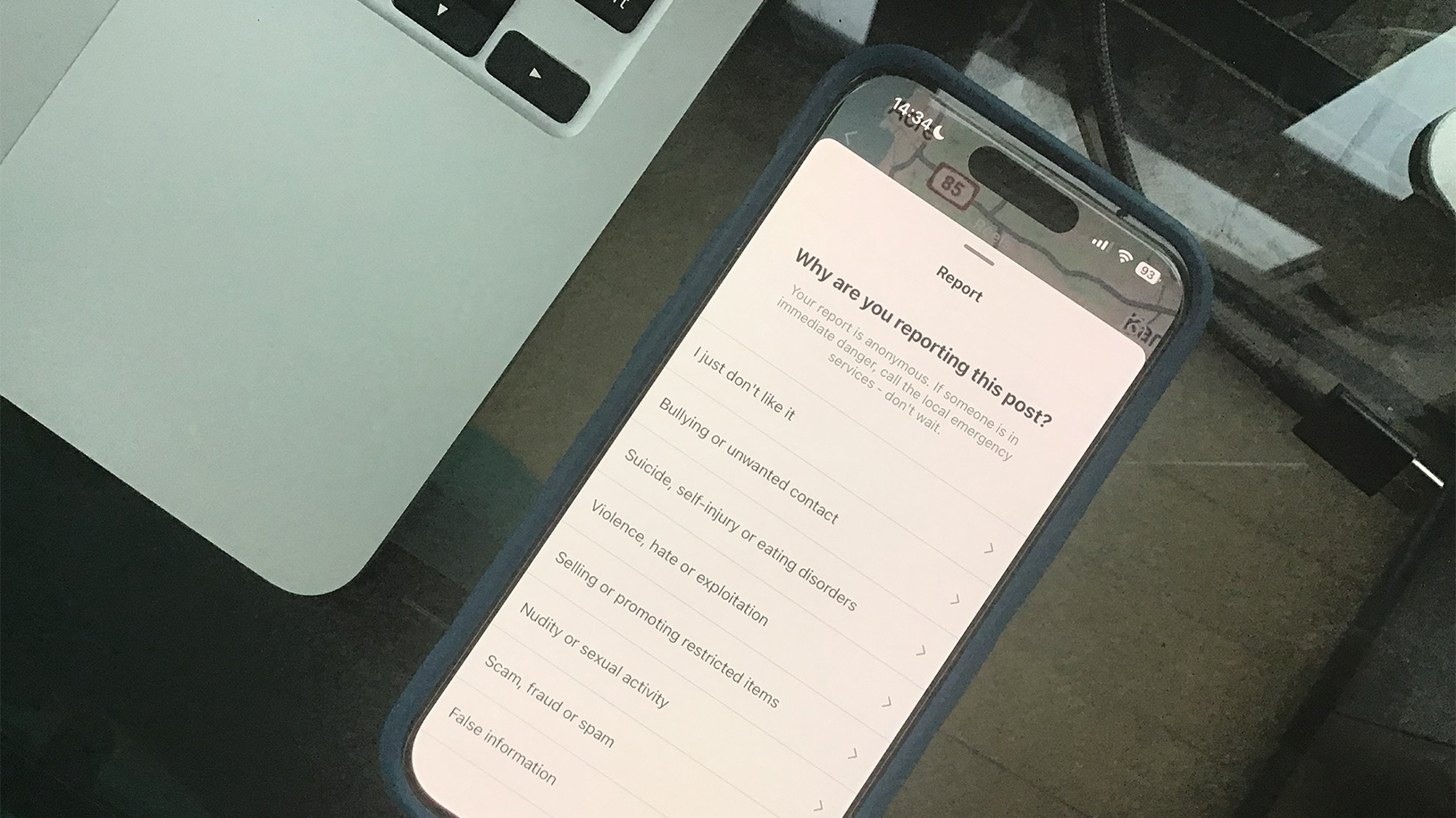

Action rate refers to the percentage of content we reported to a platform that was subsequently removed for violating its policies. X is the only platform treated as an exception to this formula: most of its actions limited the content’s visibility, as illustrated in the figure below. We credited X for taking action on these pieces of content, but in contrast with the other platforms, X did not remove the hateful content from the platform.

X’s notification for limiting the visibility of flagged content. Screenshot, September 13, 2024.

We awarded X ‘half credit’ for each post whose visibility it limited, to reflect the more muted impact of X’s actions compared with those of other companies.

Some platforms did eventually contact the regular user accounts we reported from to inform us of the action they took. But since these responses came outside the seven-day window between reporting as a regular user and reporting as a trusted flagger, we did not retroactively count these actions.
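The scoring rules described above (full credit for removal, half credit when content only had its visibility limited, and no credit for user-report actions that arrived after the seven-day window) can be sketched as a small calculation. This is a minimal illustration under stated assumptions, not ADL’s actual scoring code: the outcome labels, the `Report` record, and the helper names are hypothetical; the half-credit rule is applied to any limited-visibility outcome, although in this study it arose on X; the cumulative rate is assumed to take the better of the user-report and escalation outcomes; and the sample data is invented purely to show the arithmetic.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence

# Hypothetical outcome labels; these are not values from any platform API.
REMOVED = "removed"
LIMITED = "limited_visibility"
NO_ACTION = "no_action"

# Full credit for removal, half credit for limited visibility, none otherwise.
CREDIT = {REMOVED: 1.0, LIMITED: 0.5, NO_ACTION: 0.0}

# Actions observed after this many days do not count toward the user-report rate.
WINDOW_DAYS = 7


@dataclass
class Report:
    user_outcome: str = NO_ACTION             # result of the regular-user report
    days_to_action: Optional[int] = None      # days until that result appeared
    escalated_outcome: Optional[str] = None   # result after trusted flagger escalation


def user_credit(r: Report) -> float:
    """Credit for the regular-user report; late or missing actions score zero."""
    if r.days_to_action is None or r.days_to_action > WINDOW_DAYS:
        return 0.0
    return CREDIT[r.user_outcome]


def cumulative_credit(r: Report) -> float:
    """Assumed rule: best credit across the user report and any escalation."""
    escalated = CREDIT[r.escalated_outcome] if r.escalated_outcome else 0.0
    return max(user_credit(r), escalated)


def action_rate(reports: Sequence[Report], credit: Callable[[Report], float]) -> float:
    """Credit-weighted share of reported items that were actioned, in percent."""
    if not reports:
        return 0.0
    return 100.0 * sum(credit(r) for r in reports) / len(reports)


# Invented sample data, used only to illustrate the arithmetic.
reports = [
    Report(REMOVED, 3),                   # removed within the window
    Report(NO_ACTION, None, LIMITED),     # visibility limited only after escalation
    Report(REMOVED, 10, REMOVED),         # user-side action arrived too late to count
    Report(NO_ACTION, None, NO_ACTION),   # never actioned
]
print(action_rate(reports, user_credit))        # 25.0
print(action_rate(reports, cumulative_credit))  # 62.5
```

Keeping the credit table separate from the rate calculation makes the half-credit choice explicit and easy to adjust, and passing the credit function into `action_rate` lets the same arithmetic produce both the regular-user rate and the cumulative rate.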

Platforms: Failing to Act on User Reports

All five platforms prohibit hate speech against protected classes, such as religion, race, gender, or sexuality. These policies typically cover the instances we reported of antisemitic conspiracy theories and Zionist as a slur.

We found all of the platforms’ guidelines except one to be robust and broad enough to allow or require enforcement against both types of content in question. The exception is X, which does not have a policy that specifically prohibits misinformation. And while the company’s hate speech and extremism policies appear to provide broad protection against such violative content (for example, “you may not attack other people on the basis of ... religion”), in practice X’s enforcement is limited.

When it came to reporting antisemitic hate as a regular user, however, a majority of platforms (3 out of 5 in each category) took no action. This finding is similar to what we found in our prior research in 2021 and 2023 testing how well platforms enforce their policies against antisemitism. Facebook was the only platform to take action against more than one-tenth of the user-reported content in either category, removing 13.3% of the content that we reported for using Zionist as a slur.

Platforms only took meaningful action when ADL escalated flagged content through direct channels. This finding indicates that the reported content did violate the platforms’ policies, and that ADL’s interpretation of these policies aligns with how their internal experts understand and apply them (once brought to their attention). This outcome further underscores the inadequacy of the reporting process for regular users, whose reports are regularly rejected.

We found several instances of violative content shared on one platform that included links to other platforms. Multiple pieces of content, for instance, linked to X, which was less likely to remove antisemitic hate. This observation aligns with our previous research on how hatemongers share links and gather on YouTube channels with like-minded participants.

Recommendations for Platforms

Improve user reporting. Although we recognize the challenges of moderating at scale, and the fact that user reports are often misused, these platforms should be able to ingest reports, review content, and take action on clearly hateful content in less than a week. Improvements could take the form of platforms investing in better means of evaluating good faith user reports and giving good faith users the opportunity to provide more detail. They could also include investing in the identification of severe forms of antisemitism and hate, and creating new escalation pathways for users who experience them.

Fix the gap between policy and enforcement. We found that most platforms were not enforcing their anti-hate policies, especially on content reported by a regular user. Companies should begin by conducting an internal audit to assess 1) whether staff are prepared to recognize and act on antisemitic content; 2) whether current policies address the multidimensional nature of antisemitism; 3) their enforcement capacity and effectiveness; and 4) support mechanisms for users who encounter or are targeted by antisemitic hate and harassment. An independent audit would signal a serious commitment to stamping out antisemitic content by inviting independent experts to evaluate each platform and develop tailored recommendations to implement.

Provide independent researchers with data access, including to archives of moderated content. Companies should allow academic and civil society researchers to study their internal enforcement data. As this study demonstrates, researchers are limited to conducting enforcement testing manually (that is, by identifying individual pieces of violative content and reporting them). It would benefit both companies and the public if independent researchers could study content moderation decisions and apply their expertise to resolving gaps between policy and enforcement, including navigating context and other challenges.

Review reported content in context. Multiple reports or other signals on a single piece of content may provide context for content that otherwise does not appear violative. Platforms should use these signals to take into account additional information, such as username or profile details, or user activity, such as networked harassment, to inform moderation decisions.

Follow emerging trends and adversarial shifts. The language and tactics of hate shift over time; these changes have only accelerated for antisemitic hate since October 7th. Companies must keep track of these shifts and update their policies and enforcement accordingly. Are Jewish communities on the platform being targeted in novel ways? Are certain topics or keywords trending? Collaborating with civil society and other experts remains a vital pathway for sharing this information. However, companies must also increase resources, adjust policies, and build in extra guardrails or friction to mitigate the spread of hate, especially by bad actors who exploit the reach of mainstream platforms. In the context of both the war in Gaza and the domestic surge in antisemitism, tensions remain extraordinarily high. Companies must make the investments necessary to meet the moment.

Recommendation for Government

Increased Data Access for Researchers, Policymakers, and the Public

Platforms’ community guidelines, public-facing policies, and enforcement actions all play a role in mitigating antisemitic content. Policymakers, researchers, and the public need access to platform data to analyze and evaluate platforms. Without data access, we are limited to user surveys or manually collecting data (such as the content we identified and reported for this scorecard). Researchers, and, where possible, the public need governments to mandate access while preserving user privacy, so civil society and policymakers can work alongside, not at odds with, platforms to reduce hate online.

Fortunately, legislation has been proposed that would take a meaningful step towards providing necessary data for researchers: the Platform Accountability and Transparency Act (PATA), led in the Senate by Senator Coons. Congress should quickly enact PATA to jumpstart researcher access. Additionally, Congress should begin considering similar legislative frameworks that could be used to allow the public meaningful access to platforms’ data.

ADL gratefully acknowledges the supporters who make the work of the Center for Technology and Society possible, including:

Anonymous

The Robert A. Belfer Family

Crown Family Philanthropies

Modulate

Quadrivium Foundation

The Tepper Foundation

Examples

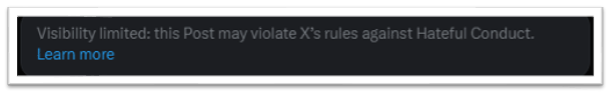

Figure 1. Example of content that X left up with its visibility limited. (Screenshot, September 13, 2024)

Figure 2. Example of content that X left up with its visibility limited. (Screenshot, September 13, 2024)

Figure 3. Example of content that X left up with its visibility limited. (Screenshot, September 13, 2024)

Figure 4. Example of a post responding to the controversial influencer Andrew Tate. This post was deemed non-violative by X after user reporting and escalation to the platform. (Screenshot, September 13, 2024)

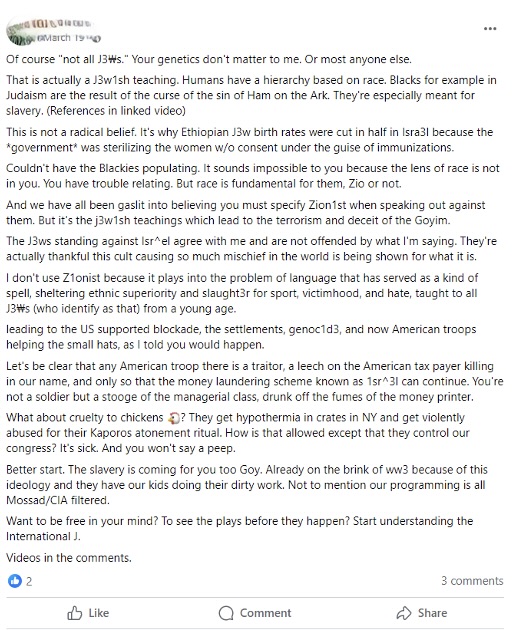

Figure 5. A post that Meta decided to leave up following user reporting and escalation to the platform, which concludes by encouraging people to start understanding “the international J,” which would appear to be a poorly veiled reference to The International Jew. (Screenshot, September 16, 2024)

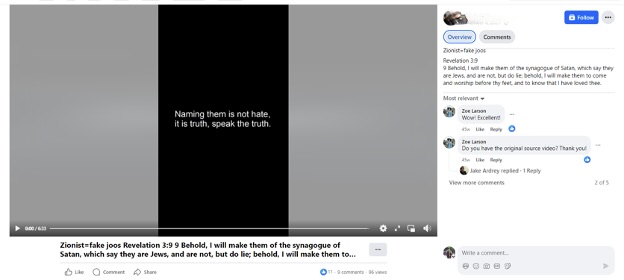

Figure 6. A video left up on Facebook following user reporting and escalation to the platform that states “Zionist=fake joos” (an intentional misspelling of “Jews”) and references the Synagogue of Satan. (Screenshot, September 16, 2024)

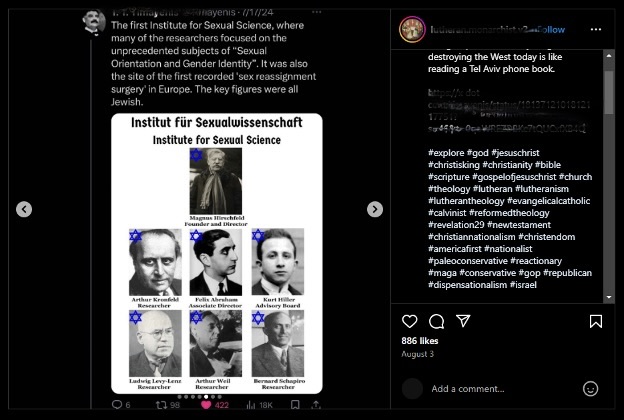

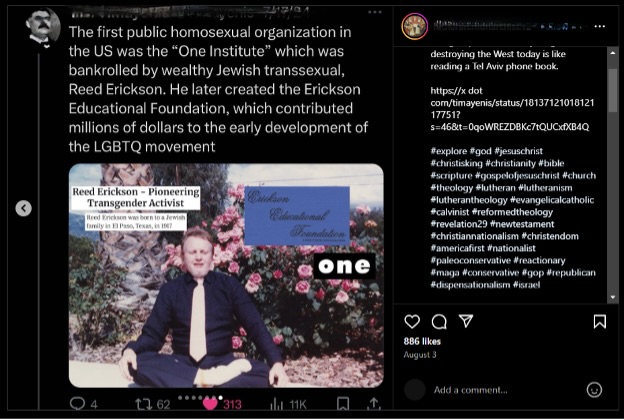

Figure 7. An Instagram slideshow alleging a connection between Jewish people and the “LGBTQ+ agenda” conspiracy theory. The caption links to a thread on X that builds upon the content included in the slideshow. This post is still on Instagram following user reporting and escalation to the platform. (Screenshot, September 16, 2024)

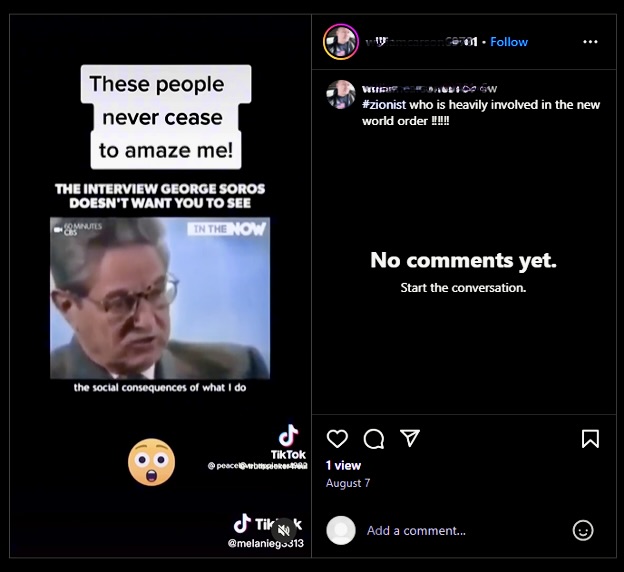

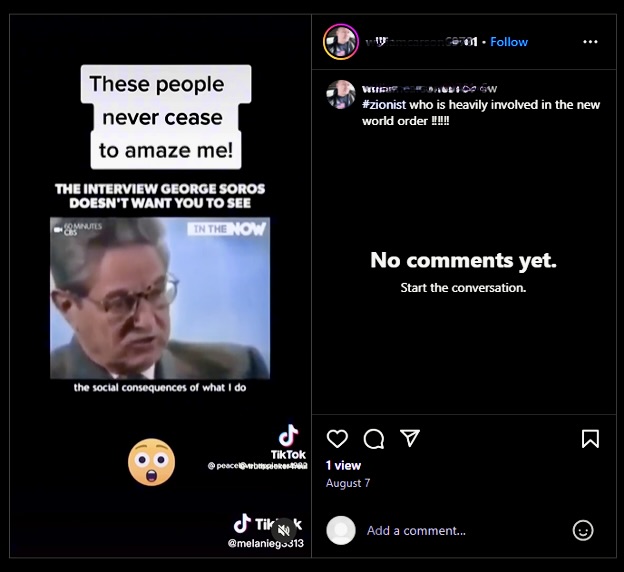

Figure 8. An Instagram post referring to George Soros as a Zionist “heavily involved” in the New World Order. It was removed only after further engagement with Meta following user reporting and escalation to the platform. (Screenshot, September 16, 2024)

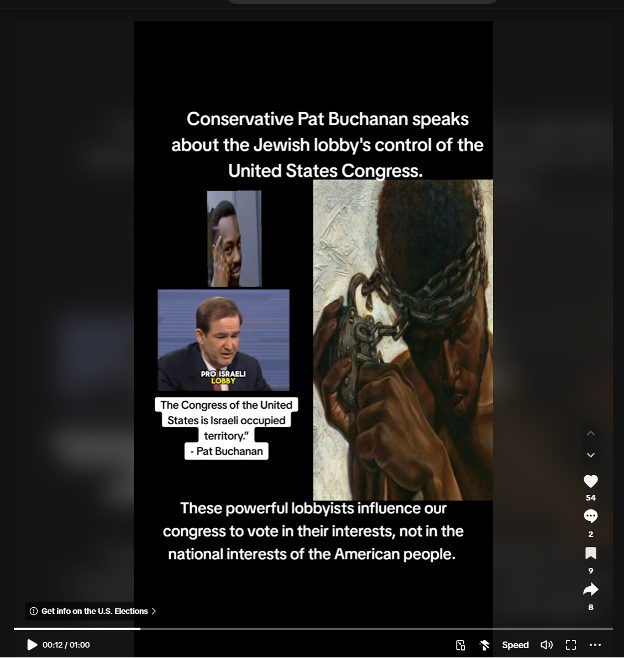

Figure 9. A TikTok video with text alleging the “Jewish lobby’s control of the United States Congress” and footage of a Pat Buchanan speech. It is still on the platform following user reporting and escalation to the platform. (Screenshot, September 16, 2024)

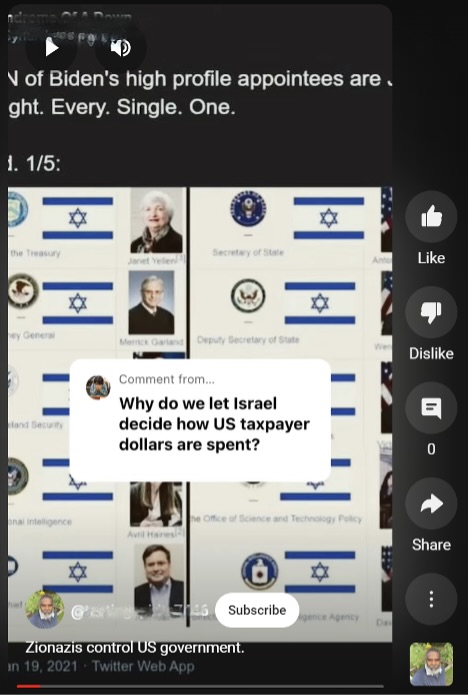

Figure 10. A YouTube Short with the caption “Zionazis control US government.” (Screenshot, September 16, 2024)

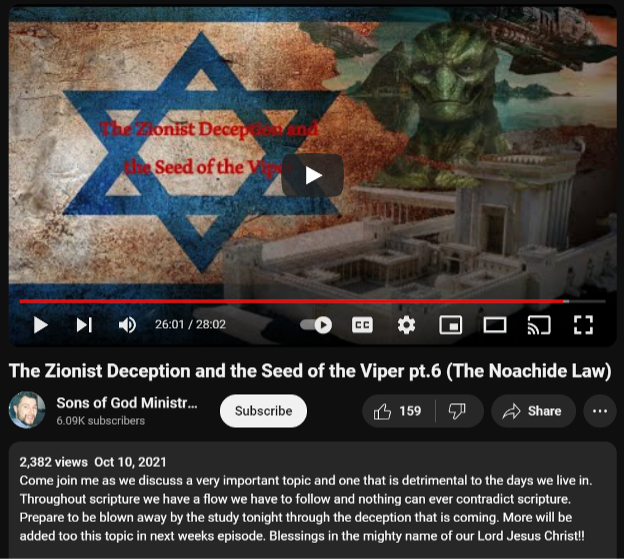

Figure 11. A video left up by YouTube. The creator uses the word Zionist in the title, but throughout the video they cite scripture referring to Jews, state that Jews are going to exterminate non-Jews, and conclude by equating Jews with Satan. While YouTube has very robust protections for users posting content grounded in their religious beliefs, further investigation into this video series and other content on the channel revealed a consistent and compounding narrative perpetuating harmful and hateful stereotypes. This is an example of how evaluating videos or posts as individual pieces of content can miss important context and lead to decisions that allow a larger pattern of harmful activity to persist. (Screenshot, September 16, 2024)