
In October 2022, ADL Center for Technology & Society listed five things we would monitor on Twitter following Elon Musk’s acquisition of the platform. A number of debates around Twitter’s policy and enforcement changes have flared up since then, and observers have been parsing their meaning. But with all the new developments happening on Twitter, which ones are worth paying attention to and have real consequences for users?

Six months later, we take stock of the policy and enforcement developments that have followed and explain what they may mean for Twitter’s enforcement of its hate speech policies and its commitment to protecting users.

1. Reinstating Deplatformed Users → Now: General Amnesty Policy

Last year, it was reported Musk wanted to eliminate permanent bans because he did not believe in lifelong prohibitions. We expressed concerns about the platform allowing back high-profile users who spread hate.

Twitter then announced it would grant “general amnesty” to suspended accounts that had not violated any laws. In practice, this is not quite true.

In December 2022, Twitter reinstated the account of notorious neo-Nazi Andrew Anglin, who has a warrant out for his arrest for ignoring a $14 million judgment against him for directing an antisemitic harassment campaign. Twitter suspended him again this month.

Twitter also reinstated the accounts of people who have incited violence or frequently posted hateful content.

Twitter must be careful to distinguish between users who occasionally make careless mistakes and those who consistently show malicious intent and, worse yet, inspire their followers to coordinate harassment campaigns, as Anglin did. Increasingly, Twitter is setting itself apart from mainstream platforms by implementing such amnesty without any clear process for evaluating the accounts.

2. Enforcement Against Election Misinformation → Now: Hazy Future

Twitter laid off many employees responsible for keeping misinformation off the platform, and on Election Day, false narratives about malfunctioning voting machines in Arizona’s Maricopa County spread. It is uncertain whether Twitter will continue to prioritize election integrity between cycles or even during them.

3. Protections for Marginalized Users → Now: Hateful Conduct Policy

On April 19, Twitter quietly rolled back the section of its Hateful Conduct Policy that prohibited users from abusing transgender people, drawing concern from civil society groups.

Previously, Twitter’s policy stated, “We prohibit targeting others with repeated slurs, tropes or other content that intends to degrade or reinforce negative or harmful stereotypes about a protected category. This includes targeted misgendering or deadnaming of transgender individuals.” The platform removed the second sentence earlier this month.

Of note, Twitter also removed a line from the policy specifically detailing certain groups of people who experience disproportionate abuse online, including “women, people of color, lesbian, gay, bisexual, transgender, queer, intersex, asexual individuals, and marginalized and historically underrepresented communities” without explanation of why this line was struck.

These changes fly in the face of what our annual Online Hate and Harassment survey has found every year: Hate-based harassment, which targets people who belong to a marginalized identity group, is high, with LGBTQ+ people being the most likely to experience harassment.

4. Hateful Users Emboldened → Now: Violent Speech Policy

Shortly after the deal to buy Twitter, ADL researchers noted several instances of users posting hateful content to the platform, testing whether Twitter would enforce its current policies around hateful conduct.

Twitter has drawn a line, but it is unclear where that line falls.

Twitter created a page for its Violent Speech Policy, separate from its Hateful Conduct Policy, that seems to have replaced its previous Violent Threats Policy. In other words, it is a rewrite of a former policy, but Twitter distinguishes between hate speech and speech that incites violence, with stricter rules against the latter. However, ADL has long reported on how unaddressed hate can escalate into offline violence.

This distinction leads to a number of questions about how Twitter will protect users from hateful speech and targeting, in particular: How is “violent speech” different from “hateful conduct”? Under its previous leadership, Twitter seemed to enforce hateful conduct policies against antisemitic tweets that had several hateful images or comments.

Under the new leadership, however, when we reported antisemitic tweets that violated the hateful conduct policy and contained numerous tropes, they did not appear to meet the new threshold. Twitter did not act on them and gave no clear explanation of how far the line had shifted.

Under the violent speech policy, Twitter also says it allows “expressions of violent speech when there is no clear abusive or violent context, such as (but not limited to) hyperbolic and consensual speech between friends, or during discussion of video games and sporting events.” It also allows “certain cases of figures of speech, satire, or artistic expression when the context is expressing a viewpoint rather than instigating actionable violence or harm.”

But extremists are known to weaponize irony and humor to spread hateful messages and to provide cover for their views. Extremists often use memes and pop culture references to promote edgy humor that can gradually become racist, tactics used particularly in online games. When “just kidding” can be used to explain away violent content, how are content moderators determining intent?

5. Platform Policy Changes → Now: Twitter Blue and “Freedom of Speech, Not Reach”

Twitter was more thoughtful than most major platforms in its hate speech policies, approach to content moderation, and efforts to combat misinformation. It was one of the top two platforms for meaningful data accessibility, which allowed for third-party measurement of antisemitism.

But the platform’s recent moves threaten to undo much of this work.

Musk announced the end of Twitter’s free blue check mark program, originally intended to verify the identity of users who could be subject to impersonation, such as public officials, journalists, and celebrities. Under the new program called Twitter Blue, anyone can pay $8 per month for the check mark.

This change has potentially dangerous consequences, including lending a false sense of credibility to hate groups and other bad actors. For example, the previously banned far-right political party Britain First and accounts associated with it have been given blue checks, lending unearned legitimacy to extremist groups.

Furthermore, giving the Britain First party and its supporters blue checks helps spread hate. Twitter Blue promotes an account’s tweets in its followers’ timelines and moves its replies to the top of a comment thread. In other words, it directly amplifies hateful content if the Blue-subscribed account is a hate account, like Britain First. From Twitter’s own description of Blue benefits: “Prioritized rankings in conversations and search: Tweets that you interact with will receive a small boost in their ranking. Additionally, your replies will receive a boost that ranks them closer to the top. Twitter Blue subscribers will appear in the Verified tab within other users’ notifications tab, which highlights replies, mentions, and engagement from Blue subscribers.”

However worrying Twitter Blue is, it is not the most significant change the platform has implemented. Twitter’s biggest development is also its murkiest: The platform has expanded its ability to limit visibility for tweets that violate its hate policies.

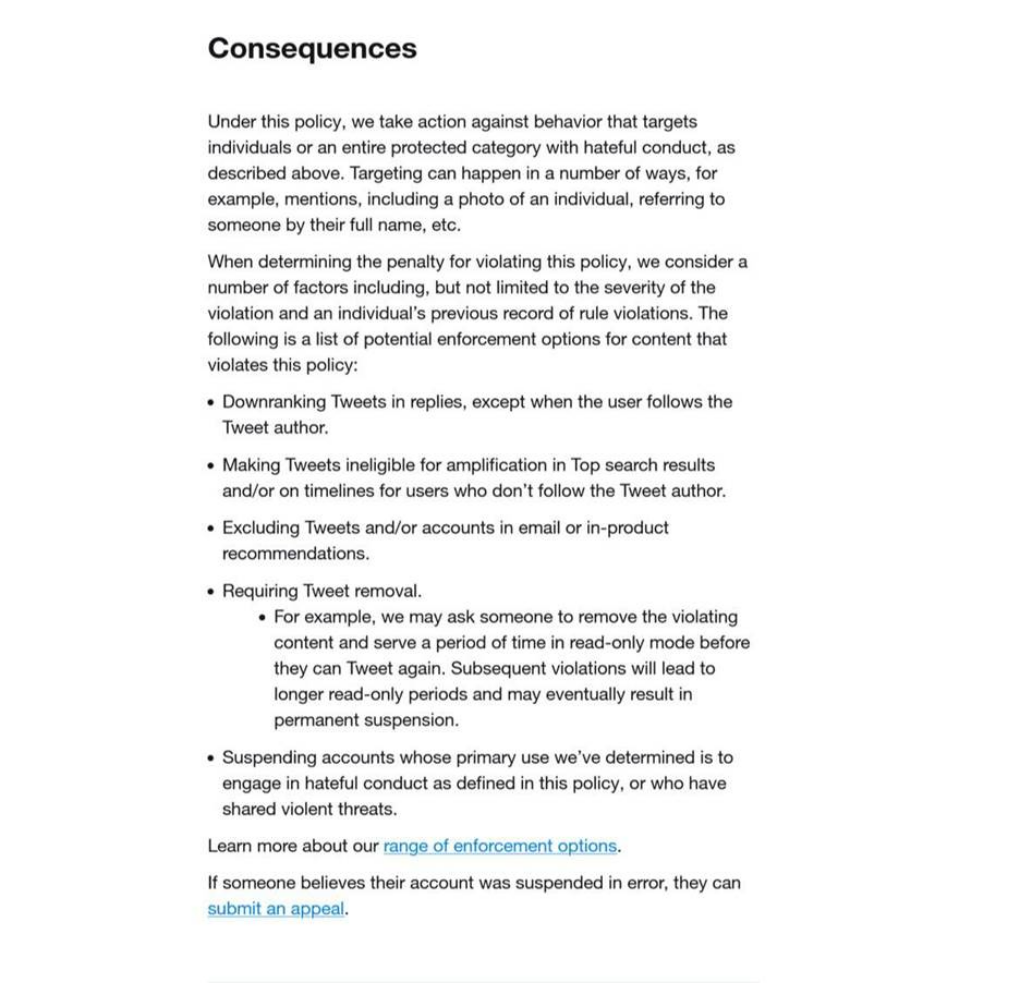

Twitter’s Consequences page from its Hateful Conduct Policy, October 2022.

From Twitter’s Hateful Conduct Policy, April 2023.

A comparison of the two versions shows that Twitter has whittled down its language on removal and suspension. The platform is moving to downrank violative content instead of removing it.

Downranking, or the de-amplification of certain types of content, is a key aspect of keeping online spaces safe and equitable. That said, the avoidance of removal and suspension raises substantive questions and has potentially serious ramifications for fighting hate and misinformation.

- What will the threshold be for what is taken down and what is downranked?

When downranking is emphasized, it implies that users are given opportunities to course correct and follow Twitter’s policies. But at what point have so many lines been crossed that a user must be suspended or permanently removed? For example, Twitter had a five-strike system to curb COVID-19 misinformation. Five or more strikes led to permanent suspension. Will there be an equivalent measure?

Twitter also announced it will begin adding a label to downranked tweets. According to Twitter, this will make the company’s enforcement actions more transparent. This leads to multiple questions:

- Other than trusting the label, how can researchers and civil society independently verify that downranking of a hateful tweet has occurred? How can researchers or civil society verify how many people saw a hateful tweet before it was downranked or labeled?

Given the current state of Twitter API access, this has become difficult. Twitter previously offered a generous free tier of its API, and its highest advertised subscription rate was $2,899 a month. Now, access starts at $500,000 a year and covers only 0.3 percent of the company’s tweets, putting it out of reach for the vast majority of researchers and civil society groups.

Opaque Changes and Discouraging Trends

Regardless of some tweaks and changes, policies are only as good as their enforcement. The ADL Center for Technology & Society (CTS) has found Twitter does not enforce its policies on antisemitism, even when flagged content openly incites violence, as we also found in our recent Holocaust Denial Report Card. And, of course, strong enforcement is only possible when teams responsible for trust and safety are adequately supported and resourced. Twitter, unfortunately, has eliminated most of the staff responsible for content moderation. It also disbanded its Trust and Safety Council, a volunteer group of independent civil society experts, including ADL, who advised the platform on curbing harms.

The changes Twitter has introduced are opaque and make it difficult for civil society groups to determine whether they will have a positive effect in reducing the amount of hate users see. With porous policies and the lack of basic mechanisms to verify accounts, Twitter runs the risk of steering users to harmful content. The trends we continue to document are not encouraging.