Executive Summary

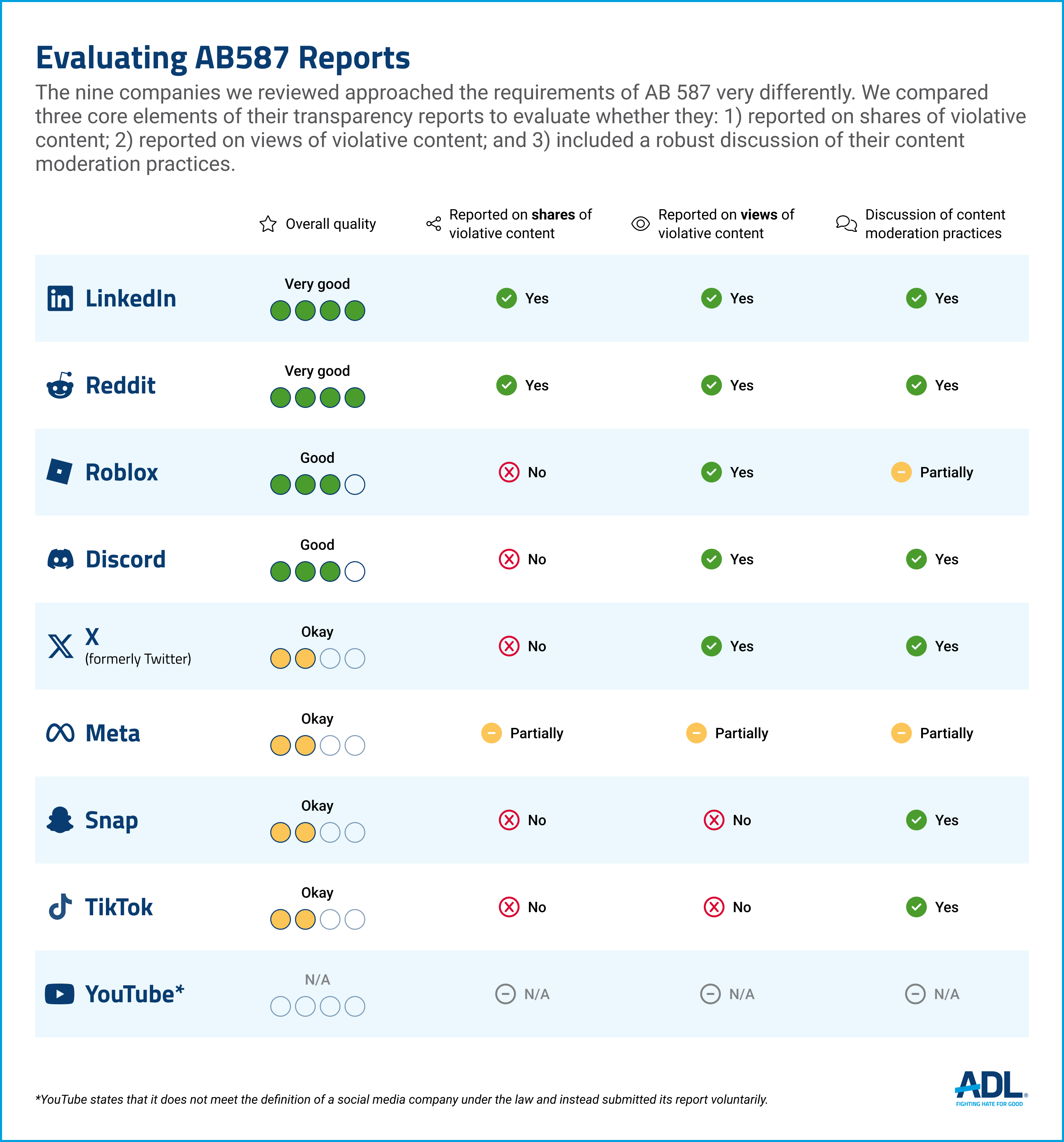

Most tech companies have rules against hate and harassment. But until a new law in California was enacted in 2022, they had not been accountable to the American public for enforcing them. The law that could change all this, California AB 587, requires social media companies over a certain size to publish their terms of service, including key definitions of terms, and report regularly on how they are adhering to their policies.

Such reports, known as transparency reports, used to be published voluntarily, on an ad-hoc basis. They were also largely inconsistent and far from transparent. Before the law went into effect, ADL evaluated nine major social media platforms for their degree of transparency in enforcing these rules (Platform Transparency Reports - Just How Transparent? February 2024).

We found that such reports were generally not comprehensive, often lacked key metrics, were hard to find for non-experts, were inconsistent from year to year, were not published at a standard cadence (such as quarterly) and were impossible for third-party researchers or auditors to verify independently.

Background

The new social media transparency law aims to address these shortcomings by standardizing transparency reporting and allowing the Attorney General to fine noncompliant companies up to $15,000 per day, or (as of publication) almost $3 million per company since the law went into force. ADL has reviewed the first and second rounds of reports that major social media companies submitted to the California AG on January 1 and April 1 of this year.
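As a rough check on the scale of that figure, the sketch below multiplies the maximum daily penalty cited above by a number of days of noncompliance. The function name and the 200-day input are illustrative assumptions, not values taken from the statute or from any company's report; the actual exposure would depend on how many days a court counts.

```python
# Maximum per-day fine cited in the text above (USD).
MAX_DAILY_PENALTY_USD = 15_000


def max_cumulative_penalty(days_noncompliant: int) -> int:
    """Upper bound on the total fine after a given number of days.

    Hypothetical helper for illustration only: it assumes the maximum
    daily amount is applied on every single day counted.
    """
    if days_noncompliant < 0:
        raise ValueError("days_noncompliant must be non-negative")
    return MAX_DAILY_PENALTY_USD * days_noncompliant


# 200 days is an assumed input, chosen because it reaches $3 million.
print(f"${max_cumulative_penalty(200):,}")  # prints $3,000,000
```

At the maximum rate, roughly 200 days of noncompliance reaches the $3 million mark, which is consistent with the "almost $3 million" estimate.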

Such reports should in theory now be easier to compare and make companies’ efforts to combat hate and harassment more transparent and visible to users. This update lays out the key findings from our in-depth review of each platform's mandated report.

We found that most companies are complying to some degree, and that where companies complied, the reports are more standardized and provide a clearer picture of harmful content on their platforms.

Some metrics remain difficult to compare because of the platforms' different features. In some cases, however, platforms may not be complying fully.

Without mandated transparency reporting, users have little insight into how well social media companies enforce their own rules, such as rules against hate speech, abuse, and identity-based harassment. When platforms report voluntarily on the enforcement of their rules, they often leave out key information, focusing more on what they get right than where they fall short. The major social media platforms prohibit most forms of hate, yet ADL’s research routinely finds most Americans experience hate and harassment online, including 61% who experienced hate or harassment on Facebook, and that some local Facebook groups are toxic sites of identity-based harassment.

In California, however, major social media companies with revenue of over $100 million that meet certain other criteria are now required by AB 587 to submit regular, standardized transparency reports. The first round was submitted in January 2024 and can be found here. A second round of reports, in some cases somewhat improved, was published on April 1.

Among those who determined they met the criteria were eight platforms we previously evaluated in our research: Discord, LinkedIn, Meta, Reddit, Roblox, Snap, YouTube, and X.

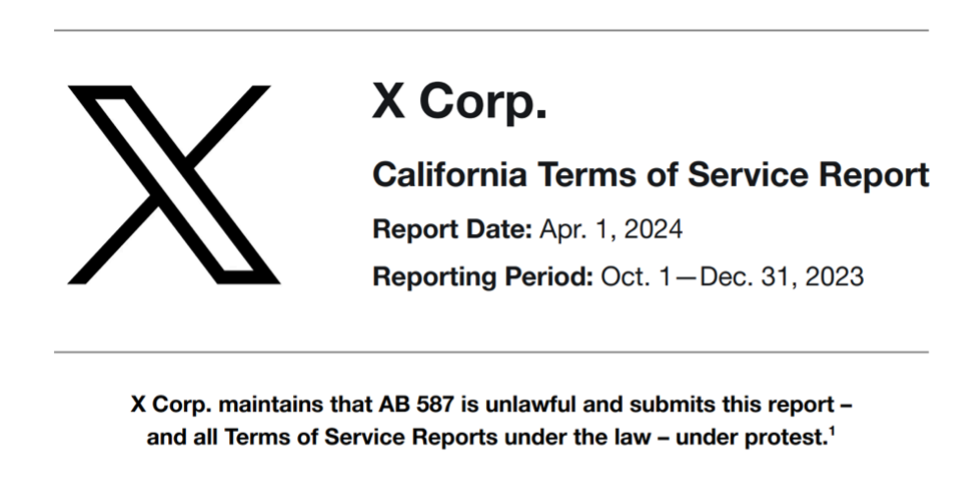

AB 587, codified at Business & Professions Code, sections 22675-22681, and subsequently amended by AB 1027, is being challenged in federal court in a case led by X that is currently on appeal. But X's first filing, in January 2024, made no mention of the suit. In its April report, the company stated:

Source: https://tinyurl.com/vf7rh8wf (Screenshot taken by ADL on July 22, 2024)

Similarly, YouTube maintains a disclaimer on both of its reports stating that it does not meet the definition of “social media” under the statute and is filing voluntarily.

Findings

A detailed review of these reports, and a comparison with earlier voluntary reports, makes clear both the benefits and the challenges of mandating transparency reporting. It can, however, be difficult to compare the actions companies take against violative content. Snap, for example, often moderates hate speech after it is flagged by users, while Discord issues “warnings” that can take place at different levels, such as the server level or user level. Reddit relies on its robust system of community moderators, but that community has faced challenges with the loss of Reddit’s public data access in June 2023. Many Reddit moderators used third-party moderation tools that depended on API (application programming interface) access, which is how developers collect and use platform data. Without the API’s public availability, these moderation tools became inoperable (as we assess in our 2023 data accessibility scorecard update).

Our analysis found that mandated reporting made it easier to find key data and compare definitions of key terms over time. It also showed the scope of hate and harassment on these platforms when companies answered all of the questions required by AB 587.

- Mandated reporting makes transparency data significantly easier to find. Other information, however, still eluded us, such as the context necessary to make sense of the numbers and metrics, including a true accounting of the violative view rate across platforms, views of removed or shared content, and the ability to verify reports independently (which requires data access for researchers).

- Standardizing definitions for reporting makes it easier to compare these definitions over time. Requiring platforms to define their terms pushes them to provide more straightforward explanations of how they define violative content and makes it easier to track changes in these definitions over time. However, when a company merely provides hyperlinks to its community guidelines and a “copy-and-paste” of its terms of service as an appendix, it is arguably not living up to the spirit of the law.

- Accurate reporting makes it easier to understand the scope of the problem, even when that scope is quite shocking. X’s report answered each of the required questions in line with the corresponding statute. This format made the report one of the easiest to read, but also at times the most bracing: readers are confronted, for example, with X’s lack of definitions of radicalism or extremism, or its stated technical inability to determine the number of times content was shared before X took action on it.

Multiple platforms committed to addressing hate put significant effort into their reports. Discord, LinkedIn, Reddit, and Roblox published detailed, informative reports.

In other ways, however, we found platforms avoided meaningfully answering the questions required by the law.

Some of the platforms may have failed to meet the statutory requirements of the law to publish shares of removed material or view counts of violative content.

- Meta, in its April report, uses percentages to display views and shares of violative content prior to being actioned, instead of raw numbers.

- YouTube, in keeping with its position that the law does not apply to its platform, provided links and charts that did not directly address the requirements of AB 587, rather than percentages or raw numbers of views or shares of violative content prior to being actioned.

- TikTok, while providing a narrative that is more substantive than YouTube’s or Meta’s, does not directly report on views of removed material. And although TikTok may partially address the issue in a footnote, their report could be strengthened by directly reporting these data.

Where platforms failed to list views and shares of actioned content, they could be in violation of the statute.

Platforms don’t provide the necessary context to make sense of their numbers.

It is easy to speculate as to why these platforms may not want to share that information: it does not make them look great. The numbers are big and could be taken out of context to imply that one platform has more of a toxic content problem than another platform. In contrast, Reddit did include these stats, and its “number of users that viewed actioned items of content before it was removed” for harassment reports/flags for the period between October and December 2023 is 45,463,766. This makes sense for a platform of its size, and Reddit should be given credit for being straightforward. For TikTok and its more than 170 million American users, the number of views and shares of violative content prior to removal may be very large.