Protect Facebook Users from Hate


Special thanks to Viktorya Vilk, Director of Digital Safety and Free Expression at PEN America, for providing expert review and feedback on this report.

Executive Summary

Online hate and harassment are at record highs, but targets of hate continue to struggle to receive the support they need from social media companies. To evaluate how and whether people are being protected from online hate and harassment, ADL Center for Technology and Society (CTS) reviewed how nine tech companies currently support people targeted by these harmful and traumatizing experiences on their platforms.

CTS reviewed recommendations from PEN America’s report on social media abuse reporting (released in 2023), ADL’s social pattern library (released in 2022), UNESCO and ICFJ’s study of online violence against women journalists (released in 2022), The World Wide Web Foundation report on Online Gender-Based Violence (released in 2021), PEN America’s report on platform mitigation features for the experience of targets of online harassment (released in 2021), ADL’s qualitative study of targets of online abuse (released in 2019), and thousands of responses from targets of online hate as reflected in the ADL Online Hate and Harassment surveys from 2019, 2020, 2021, 2022 and 2023.

Based on these reports, CTS compiled a list of eleven platform features necessary to protect targets of online hate, all of which were previously recommended in at least four of these resources. Each of these features is rooted in the experience of targets of online harassment and the ways targets wished they were supported when facing hate and harassment on social media.

Key Findings

- None of the nine platforms analyzed have all eleven fundamental features so often requested by targets of online hate and harassment, as shown in research from ADL, PEN America, the World Wide Web Foundation, UNESCO, and ICFJ between 2019 and 2023.

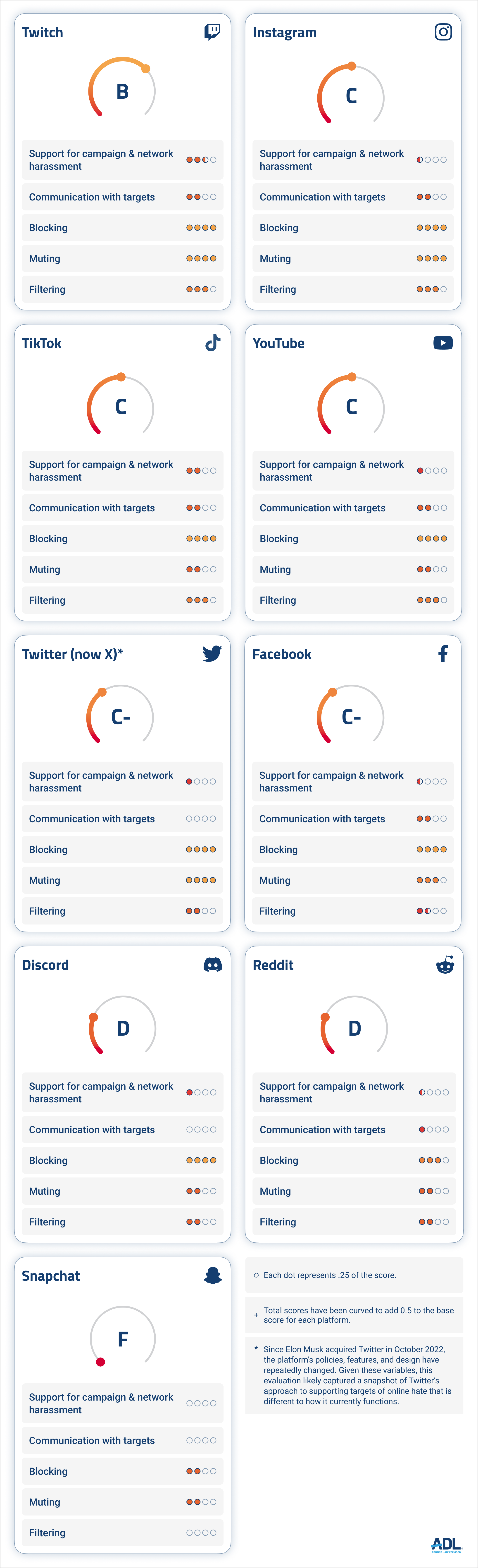

- Twitch received the highest score of any platform (B) for getting at least partial credit on ten out of eleven features.

- Snapchat received the lowest grade (F) overall for having two out of eleven features.

- Only three platforms allow batch reporting, the ability to flag multiple related comments or pieces of content simultaneously: TikTok, Twitter (now X), and Twitch.

- No platform offers the ability for a target of abuse to speak to a live person for support, regardless of the level of severity or urgency of abuse they’re experiencing.

ADL recommends that platforms:

- Center the experiences of people targeted by identity-based hate and harassment in platform and product development and design, especially the experiences of those disproportionately targeted for their identity.

- Increase investments in trust and safety to prioritize improvements to the experience of targets on platforms.

- Implement features such as those suggested in this report that will improve the user experience and support given to targets of online hate.

- Utilize resources and expertise from civil society and academia, including those cited in this report card.

Block/Filter/Notify: Support for Targets of Online Hate Report Card

How do these social media platforms score according to our criteria?

Introduction

Julie Gray runs the popular TikTok account The True Adventures with her life partner Gidon Lev, a Holocaust survivor born in former Czechoslovakia and imprisoned at six years old in the Theresienstadt concentration camp until he was 10. The account has over 420,000 followers and is devoted to Holocaust education. What started as a promotional strategy for a memoir has now become Julie’s life’s work, but it comes at a heavy price.

“If you're Jewish, you have to pay a fee to go on social, and that fee is trauma and hate,” she says.

Julie says TikTok leaves her ill-equipped to fight against the influx of hate accompanying her account’s popularity. Reporting abuse to the platform, particularly imagery and coded language that abusers deploy to evade content moderation, accomplishes little, she says:

“TikTok doesn't have antisemitism as a category to record hate… In terms of reporting, I would say it's effective only maybe 10% of the time because the person has to say, 'I hate Jews.' If they say—and this is a comment I really got—’How did you like the showers? [many Holocaust victims were murdered in gas chambers, some of which were equipped with fake showers]’ you can report it, but they can't compute that.”

Although Julie and Gidon’s experiences highlight the emotionally taxing experiences of Jewish content creators on TikTok, their plight reflects the problems faced by so many women, people of color, LGBTQ+ people, and religious and ethnic minorities across all social media platforms. ADL’s 2023 Online Hate and Harassment survey found that 33% of American adults and 51% of teens experienced harassment on social media just in the last year. Our 2023 survey also found that when users reported violent threats to a social media platform, the platform only took action to remove the threatening content 21% of the time.

To fill the gap left by social media companies, some organizations have stepped up to provide resources and services to support targets of online harassment. PEN America’s Field Manual Against Online Harassment is an invaluable resource to help targets understand and effectively respond to the abuse they face on social media. The Cyber Civil Rights Initiative has a safety center geared toward people targeted with nonconsensual intimate imagery (also known as “revenge porn”). The Vita Activa Hotline provides support for women and LGBTQ journalists and human rights defenders experiencing online violence. Equality Labs provides digital security resources that center South Asian Caste-Oppressed, Queer and Religious minority communities. ADL provides a reporting portal on its website, where constituents can report online and offline hate and harassment. ADL Center for Technology and Society (CTS) helps users who are harassed online, often escalating reports of hate and harassment to social media companies when the content violates platforms’ rules and users’ reports go unaddressed.

The research this report card draws on is also part of these efforts to support targets of online hate and harassment. In 2019, CTS released The Trolls are Organized and Everyone’s a Target: The Effects of Online Hate and Harassment. This study consisted of 15 in-depth interviews with people targeted by online hate and harassment because of their identity and synthesized their experiences into unique insights, recommendations, and user personas.

In 2021, PEN America released their report No Excuse for Abuse which pulled from over 50 interviews and insights from PEN America’s Online Abuse Defense Program, which has reached over 15,000 people targeted with online abuse. The report provided important insights into the toll that online harassment takes on individuals personally and professionally and detailed recommendations on what platforms could do to support targets and disarm perpetrators.

Additionally, in 2021, the World Wide Web Foundation released its report Online Gender-Based Violence Policy Design Workshops: Outcomes and Recommendations. The report was based on a series of design workshops the organization had convened to co-create product solutions to online gender-based violence and abuse, which included stakeholders from tech companies, civil society, government, and academia. The report contained robust recommendations around solutions for online gender-based violence, as well as design prototypes and suggestions for potential solutions to better support targets of online gender-based violence.

In 2022, based on these reports and other research, CTS released the Social Pattern Library. This resource showcases design patterns—some in collaboration with PEN America—for tech companies that implement ADL’s recommended “anti-hate by design” principles to mitigate hate and support targets of online harassment.

UNESCO and the International Center for Journalists (ICFJ) released their report, The Chilling: A Global Study On Online Violence Against Women Journalists, in 2022. Based on the experiences of more than 850 international women journalists, this report found that online violence is worse at the intersection of misogyny and other forms of discrimination, such as racism, religious bigotry, antisemitism, homophobia, and transphobia. The report had recommendations for tech companies to better support women journalists targeted with online violence, such as cross-platform collaboration around multi-platform harassment and building shields that enable users to proactively filter abuse for review and response, in addition to steps newsrooms and governments should take.

In 2023, PEN America and Meedan released their report Shouting into the Void, which focused on the abuse reporting process on social media platforms. This report was drawn from interviews with nearly two dozen experts, journalists, writers, human rights activists, and content creators who regularly experienced online harassment. It made key recommendations around allowing targets of networked harassment to report coordinated off-platform content and allowing users to empower trusted allies with access to their accounts to aid in abuse mitigation efforts.

From 2019 to 2023, CTS conducted an annual nationally representative survey of the experiences of targets of online hate and harassment in America. CTS has released the results of this survey each year, along with recommendations that tech companies and governments should take to reduce the proliferation of online hate and harassment.

Block/Filter/Notify: Support for Targets of Online Hate Recommendations

These reports over the years have detailed what is necessary to support targets of online hate. Below is a timeline of reports where the features in this report were recommended between 2019 and 2023.

While the efforts of civil society groups are important, they are filling a gap left by tech companies whose platforms do not meet the needs of targets. Tech companies should enforce their own rules and be responsive to the needs of targets, especially those from marginalized groups that are so often the targets of this kind of vitriol.

“Whether you're doing a Jewish account about the way you make matzo balls or your LGBTQ theater group, it doesn't matter,” Julie Gray says. “We pay dearly to be on these apps, and it's traumatic as heck.”

The Report Card Explained

The following nine social media platforms were chosen based on their number of active users (see the chart below) and ADL’s experience of working with them to address our concerns about online hate and harassment. Platforms without abuse mitigation tools were excluded from the sample. We also excluded platforms that were either not based in the US or did not have many US users, such as Douyin or Weibo.

| Social Media Platform | Global Active Users 2021 (in millions)[1] |
| --- | --- |
|  | 2,797 |
| YouTube | 2,291 |
|  | 1,281 |
| TikTok | 732 |
| Snapchat | 528 |
|  | 430 |
| Twitter (now X) | 396 |
| Twitch | 140[2] |
| Discord | 150[3] |

Figure 1: Social media platforms and their number of global active users

CTS identified eleven important features social media platforms should adopt to protect targets of online harassment and divided them up into five categories:

I. Communication with Targets

- Tools to communicate clearly with people reporting abuse

- Real-time support for those facing severe harassment

II. Support for Targets of Networked Harassment

- Reporting on related activity on an external platform

- Batch reporting of multiple related posts

- Delegated access to trusted individuals

III. Blocking

- Blocking accounts

- Blocking direct messaging

IV. Muting

- Muting accounts

- Muting keywords

V. Filtering

- Safety Mode

- Content Filter

I. Communication With Targets

Targets need consistent communication and feedback from platforms, through tools such as a ticketing system or reporting dashboard, so they can track their reports under review. Tech companies earned a score for the following:

Metric #1: Does the platform provide tools to stay in communication with targets reporting abuse?

This metric focused on whether a platform provided tools that allow it to communicate with targets. These tools include reporting portals or ticketing systems where users can see the status of their reports and understand the platform’s decision. Platforms were given a maximum score of .5 for this metric.

Metric #2: Does the platform provide real-time support for targets of severe online harassment?

At present, users cannot receive any real-time live human support from a social media platform, regardless of the severity of the abuse they’re facing. While some platforms have service accounts on other platforms (for example, TikTok and Twitch have support accounts on Twitter (now X)), it is unclear how responsive these accounts are, whether they are resourced to support targets of harassment, or if targets are even aware of the existence of these kinds of accounts.

Platforms should provide real-time support on their own platforms, ideally from live employees, to users experiencing severe forms of harassment such as physical threats, stalking, sexual harassment, discrimination based on identity, sustained harassment, doxing, and swatting. A trained employee can direct users to resources and then tell users what comes next after the event is over. Platforms were given a maximum score of .75 for this metric, though no platform currently has this feature in place.

II. Support for Targets of Networked Harassment

Platforms must also provide support for targets of harassment campaigns or harassment that spans multiple platforms and channels. Harassment campaigns provide unique challenges to incident reporting that are not addressed by basic reporting options. ADL has observed that in many cases, harassers coordinate campaigns on a different platform and then post multiple harassing comments or posts, often from different accounts, on a target’s page or profile. Users need the option to ban links from that platform to their page or profile, report related content, and report multiple pieces of content at a time. Because managing high volumes of harassment is also exhausting and harmful, targets should be able to delegate access to trusted individuals who can review this content for them. Tech companies earned a score for the following:

Metric #3: Does the platform allow reporting on related activity from an outside platform?

This metric analyzed if the platform’s reporting form allowed users to report harassment originating from an outside website. For example, if a user on Facebook identifies that a Discord server is organizing harassment, they should be able to report this activity. This allows the platform to provide more targeted action. Platforms were given a maximum score of .5 for this metric. ADL’s social pattern library shows how a related activity report might be implemented.

Metric #4: Does the platform allow batch reporting, and how many reports are allowed within one batch?

Batch reporting is the ability to report a number of abusive messages or comments in a bundle. An example of the process of batch reporting on TikTok is provided below.

Without batch reporting, users who are the targets of a harassment campaign are forced to click each message individually to report it. Users may receive hundreds or even thousands of comments or messages during a harassment campaign and must spend significant amounts of time reporting this content piecemeal and without the ability to indicate the harassment is coordinated. Platforms were given a maximum score of .5 for this metric.

ADL’s social pattern library shows how batch reporting might be implemented.
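As a rough illustration of the idea, a batch report can be thought of as a single record that bundles many content identifiers under one reason, with a flag indicating that the harassment appears coordinated. The sketch below is hypothetical: the `BatchReport` class, its field names, and the `bundle_reports` helper are assumptions made for illustration and do not describe any platform's actual reporting API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class BatchReport:
    """Hypothetical bundle of several abusive items reported together."""

    reporter_id: str
    reason: str
    content_ids: list[str] = field(default_factory=list)
    coordinated: bool = False  # lets the reporter say the abuse looks organized

    def add(self, content_id: str) -> None:
        # Skip duplicates so the same comment is not reported twice.
        if content_id not in self.content_ids:
            self.content_ids.append(content_id)


def bundle_reports(reporter_id: str, reason: str, content_ids: list[str],
                   coordinated: bool = False) -> BatchReport:
    """Collect many content IDs into one report instead of one report each."""
    report = BatchReport(reporter_id=reporter_id, reason=reason,
                         coordinated=coordinated)
    for content_id in content_ids:
        report.add(content_id)
    return report


if __name__ == "__main__":
    report = bundle_reports("user-1", "harassment",
                            ["comment-1", "comment-2", "comment-1"],
                            coordinated=True)
    print(len(report.content_ids), report.coordinated)
```

The point of the design is that the target submits once, and the platform receives the related items together along with the signal that they belong to one campaign.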

Metric #5: Does the platform allow for delegated access?

Delegated access gives trusted parties access to the targeted user’s account, including comments and direct messages, so these trusted allies can weed through the harassment instead of the user, freeing them from reading abusive messages. Platforms were given a maximum score of .25 for this metric.

ADL’s social pattern library shows how delegated access might be implemented.

III. Blocking

Blocking is one of the most basic abuse mitigation tools that online platforms have in their arsenal. Blocking allows a user to prevent another user from accessing their online space, such as posting on their page or directly messaging them. Doing so stops them from abusing a user directly. The picture below illustrates the process of blocking a user on Reddit.

All platforms should have blocking available to users. ADL’s social pattern library shows how blocking can be implemented. Tech companies earned a score for the following:

Metric #6: Does the platform allow blocking users?

This metric analyzed if platforms allowed users to block specific accounts. Platforms were given a maximum score of .5 for this metric.

Metric #7: Does the platform allow blocking direct messaging?

This metric analyzed if platforms allowed users to opt out of being direct messaged by other users. Platforms were given a maximum score of .5 for this metric.

IV. Muting

In addition to blocking, muting is another basic abuse mitigation tool. Muting — which is sometimes called “hiding” or “snooze” — allows users to prevent an abusive user’s comments from being seen without blocking or unfollowing them. For example, the picture below shows the difference between blocking and muting, called “Snooze,” on Facebook.

The abusive user will often have no knowledge that they have been muted. This is useful for preventing retaliation while the user being harassed figures out their next steps. It is also useful for de-escalation. Certain platforms, such as Reddit, operate less like personal social media spaces and more like community forums. When a user is muted, they are allowed to remain a part of the community but are prevented from spreading any further abuse or hate. Like blocking, all platforms should have muting available to their users. Tech companies earned a score for the following:

Metric #8: Does this platform allow accounts to be muted?

This metric focused on whether platforms allow users to mute accounts. Platforms were given a maximum score of .5 for this metric. ADL’s social pattern library shows how user muting can be implemented.

Metric #9: Does this platform allow keywords to be muted or hidden?

Sometimes users do not want to mute entire accounts. Platforms should allow users to have a set list of keywords that can be muted or hidden from comments or threads while still allowing users to see the rest of the account's content. For example, a user who reads book reviews can set keywords to mute for genres they do not enjoy. Platforms were given a maximum score of .5 for this metric.

ADL’s social pattern library shows how keyword muting can be implemented.
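A minimal sketch of keyword muting, assuming a user-maintained list of muted keywords and whole-word, case-insensitive matching. The function names and the matching rule are assumptions for illustration only; real platforms may match differently (for example on phrases, hashtags, or emoji).

```python
import re


def build_mute_pattern(keywords):
    """Compile one case-insensitive, whole-word pattern from the muted keywords."""
    escaped = [re.escape(k.strip()) for k in keywords if k.strip()]
    if not escaped:
        return None
    return re.compile(r"\b(?:" + "|".join(escaped) + r")\b", re.IGNORECASE)


def visible_comments(comments, keywords):
    """Return only the comments that contain none of the muted keywords."""
    pattern = build_mute_pattern(keywords)
    if pattern is None:
        return list(comments)
    return [c for c in comments if not pattern.search(c)]


if __name__ == "__main__":
    comments = [
        "Loved this thriller review",
        "Great romance pick",
        "Nice cover art",
    ]
    # A reader who does not enjoy romance mutes that keyword.
    for comment in visible_comments(comments, ["romance"]):
        print(comment)
```

Only the matching comments are hidden; other content from the same account stays visible, which is the distinction between keyword muting and account muting.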

V. Filtering

Online platforms must not only give users the ability to filter content but also provide comprehensive and proactive filtering tools. Filters are, at their most basic, tools to refine content so that users can avoid content they do not want to see. The picture below shows examples of different filtering options across platforms:

Filtering Options on Twitch

Online platforms, however, can and should do better than basic filtering. Users who face abuse need to be able to set which words or phrases they want to filter, such as slurs, and the ability to enable safety mode settings with filter presets. This metric focuses on user access to these kinds of filtering tools and does not consider if community moderators, for example, have access to filtering tools. Tech companies earned a score for the following:

Metric #10: Does the platform have a safety mode setting or safety toggle?

A safety mode setting or safety toggle is a feature that combines many different tools (filtering, muting, and blocking) to create a preset suite to moderate content on a user’s page. Platforms were given a maximum score of .25 for this metric. ADL’s social pattern library shows how safety mode can be implemented.

Metric #11: Is there a content filter that one can pre-set?

Filtering tools should allow users to filter not only accounts they do not want to see but content as well. For example, users may want only to ban profanity on their homepage without banning an entire account. Platforms were given a maximum score of .5 for this metric. ADL’s Social Pattern Library shows ways filters can be implemented.
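The per-metric maximum scores listed for the eleven metrics can be tallied as in the sketch below. The maximum values are taken from the metric descriptions; everything else is an assumption made for illustration. In particular, the `platform_score` function treats partial credit as a fraction between 0 and 1 of each metric's maximum, which is a hypothetical convention, and no mapping from scores to letter grades is attempted because the thresholds are not given here.

```python
# Maximum score per metric as stated in the metric descriptions:
# metric number -> (category, short metric name, maximum score).
METRIC_MAXIMA = {
    1: ("I. Communication with Targets", "Communication tools", 0.5),
    2: ("I. Communication with Targets", "Real-time support", 0.75),
    3: ("II. Support for Targets of Networked Harassment", "Related activity reporting", 0.5),
    4: ("II. Support for Targets of Networked Harassment", "Batch reporting", 0.5),
    5: ("II. Support for Targets of Networked Harassment", "Delegated access", 0.25),
    6: ("III. Blocking", "Blocking accounts", 0.5),
    7: ("III. Blocking", "Blocking direct messaging", 0.5),
    8: ("IV. Muting", "Muting accounts", 0.5),
    9: ("IV. Muting", "Muting keywords", 0.5),
    10: ("V. Filtering", "Safety mode", 0.25),
    11: ("V. Filtering", "Content filter", 0.5),
}


def category_maxima():
    """Sum the stated maximum scores within each of the five categories."""
    totals = {}
    for category, _name, maximum in METRIC_MAXIMA.values():
        totals[category] = totals.get(category, 0.0) + maximum
    return totals


def platform_score(credit):
    """Total score for a platform.

    `credit` maps metric number -> fraction of credit earned (0.0 to 1.0).
    The fractional-credit convention is an assumption, not the report's method.
    """
    total = 0.0
    for metric, (_category, _name, maximum) in METRIC_MAXIMA.items():
        fraction = credit.get(metric, 0.0)
        if not 0.0 <= fraction <= 1.0:
            raise ValueError(f"credit for metric {metric} must be between 0 and 1")
        total += fraction * maximum
    return total


if __name__ == "__main__":
    for category, maximum in category_maxima().items():
        print(f"{category}: {maximum}")
    print("Sum of stated maximums:", sum(category_maxima().values()))
```

Summing the stated maximums gives 5.25 across the eleven metrics, with real-time support (.75) weighted most heavily and delegated access and safety mode (.25 each) weighted least.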

Conclusion

ADL, our colleagues in civil society, and targets of abuse have recommended many of the features in this report card to tech companies since at least 2019. While some tech companies have taken steps to improve their support for targets since then, none have fully implemented these best practices. Moreover, ADL’s annual surveys of online harassment show that tech companies’ efforts have not meaningfully reduced the hate and harassment Americans experience online; on the contrary, online hate and harassment spiked in 2023.

Our evaluation shows that tech companies, for the most part, cannot be relied on to address the needs of targets of online harassment adequately. Tech companies must center the expertise and experience of groups and individuals who understand identity-based harassment firsthand, especially those from marginalized communities. They must invest in integrating these perspectives into how they design, maintain, and update their products. This kind of investment by tech companies is critically important in order to eliminate, as Julie Gray said, the “fee” of “trauma and hate” that people from vulnerable and marginalized communities pay every day when they use social media platforms, a high cost which drives many offline entirely.

Recommendations

1. Implement features recommended by academic and civil society experts to improve user experience and support for targets of online hate.

For example, the tools and strategies contained in ADL’s Social Pattern Library are designed to address and combat online harassment, make reporting easier, and strengthen the efficacy of platforms’ trust and safety efforts. Each feature has a particular function that, when integrated, contributes to a safer, more respectful digital environment for online users. The library provides a comprehensive roadmap of best practices for online safety, harm prevention, and effective support for victims of online hate and harassment. PEN America’s No Excuse for Abuse and PEN America and Meedan’s Shouting Into the Void reports similarly provide recommendations and examples of how abuse reporting can be improved on social media platforms and case studies that show examples of how these improvements can be implemented in practice.

2. Incorporate the experiences of targeted people and marginalized groups in product development and design.

To best protect online users from harm, platforms must be proactive in centering the experiences of targets of online hate and harassment, as well as communities most likely to experience identity-based hate and harassment. Targets of online harassment are necessary partners in developing products and policies that are anti-hate by design and that enable straightforward, effective reporting of online hate and harassment.

3. Invest in trust and safety to prioritize the experience of targets on platforms.

ADL has long maintained that moderation efforts are one of many necessary steps to combat online hate and harassment. Developing more robust moderation efforts is a starting point for building trust and creating safer online spaces. This should include hiring more staff to address issues of scale, investing further in training for human moderators, developing more accurate automated products to detect abusive content, and speeding up review of users’ reports of harmful content so that victims of online hate and harassment receive stronger support in real time.

[1] Data provided by Hootsuite and We Are Social https://datareportal.com/reports/digital-2021-april-global-statshot

[2] Data provided by https://earthweb.com/twitch-statistics/ and https://www.bankmycell.com/blog/number-of-twitch-streamers/

[3] Data provided by https://earthweb.com/discord-statistics/

Acknowledgements

The Robert Belfer Family

Craig Newmark Philanthropies

Crown Family Philanthropies

The Harry and Jeanette Weinberg Foundation

Righteous Persons Foundation

Walter and Elise Haas Fund

Modulate

The Tepper Foundation