Executive Summary

By Dr. Kat Schrier, Professor and Director of Games & Emerging Media at Marist College

Harassment is unfortunately far too common when people play online competitive multiplayer games (online games). The nature and extent of harassment vary according to the identity of the players and the type of game. This study examines how expressing a Jewish identity plays out in some online multiplayer games.

To do this research, 11 participants were asked to play three selected games (Valorant, Counterstrike 2, and Overwatch 2) using the username Proud2BJewish (or, for comparison, a control username) and to record their play sessions. 44% of their Proud2BJewish gameplay sessions resulted in some form of harassment, and between 25% and 41% of those sessions resulted in harassment on the basis of identity (hate-based harassment toward Jewish people).

Widespread though it is, hate-based harassment is not inevitable and should not be normalized. Jews and all other people must be able to fully express their identities without worrying that they will be harassed by other players.

This study suggests that we need to carefully design games and game communities with consideration toward inclusion, equity, and care. We outline strategies for industry, government, researchers, and civil society to enhance prosocial play while significantly reducing hate-based harassment.

Background

Over 215 million people in the U.S. play games, according to the Entertainment Software Association (ESA), and there are over 3 billion players worldwide. The eSports (online competitive gaming) market is growing, with an estimated market value of $1.72 billion in 2023. Many people also watch livestreams of competitive gaming on platforms like Twitch, and the global eSports audience is estimated at over 540 million.

Online games are communities where people exchange ideas, socialize, and share opinions on current events. As on social media platforms such as Facebook, Instagram, X, and TikTok, people express their identities in games and engage in civic life. They may also comment on and engage with others around world events such as the October 7 Hamas massacre, the war in Ukraine, or the Holocaust. Online games are also spaces where people learn about themselves and shape their own opinions, behaviors, and ideas about others.

Our research was conducted in the context of a massive spike in antisemitic incidents in the U.S. and beyond, following a steady increase over the past decade; in the aftermath of the October 7 Hamas massacre in Israel, the number of antisemitic incidents soared by 360%, according to ADL research.

ADL Online Gaming Survey

For the past few years, ADL has been investigating hate, harassment, and extremism in online multiplayer games, as well as prosocial behavior. In 2023, it found that an estimated 83 million of the 110 million online multiplayer gamers in the U.S. had been exposed to hate and harassment in the previous six months. 76% of adult participants (18-45 years old) in online multiplayer games reported experiencing some form of harassment. Types of harassment range from name-calling to more severe forms such as threats of violence, sexual harassment, and discrimination based on identity. The same study found that 75% of teens (ages 10-17) had experienced some type of harassment in online multiplayer games.

For adults, the three identities that reported the highest increase in levels of gaming hate from 2021 to 2022 were Jewish (from 22% to 34% of Jewish gamers experiencing identity-based harassment), Latino (25% to 31%), and Muslim (26% to 30%).[12] In 2023, the study found that 37% of teens ages 10-17 reported that they were harassed based on their identity (e.g., gender identity, racial identity). The ADL report defines identity or hate-based harassment as “disruptive behavior that targets players based at least partly on their actual or perceived identity, including but not limited to their age, gender, gender identity, sexual orientation, race/ethnicity, religion, or membership in another protected class.”

The 2022 ADL study also looked at harassment by game; the two online games with the highest percentage of adults reporting harassment were Counterstrike (86%) and Valorant (84%). Other games investigated in that study included Overwatch (73%), Roblox (69%), and Minecraft (53%).

Other studies have also investigated toxicity, harassment, and dark participation in games and gaming-adjacent spaces (e.g., Twitch). In their study of 432 online game players, Kowert et al. (2024) found that 82.3% reported experiencing toxicity and harassment firsthand and 88.1% reported witnessing it happen to someone else. 31% of respondents said they had participated in harassing others. Trash talking was the most common type of harassment or toxicity witnessed (87%) and reported (78.9%). Of the respondents, 47.9% said they had been the direct target of hate speech. Kowert et al. (2024) also describe the mental health impacts of being targeted by toxicity: over half of direct targets reported increased anxiety, and only around 10% of direct targets and witnesses reported no impact on their mental health.

Likewise, in a study of 2,097 Hungarian gamers, Zsila et al. found that 66% of respondents had been victims of toxic and harassing behaviors such as “hostile communication, sabotaging, and griefing (deliberate attempts to upset or provoke),” and that players who spent more time in competitive multiplayer games were more likely to be direct targets of these behaviors. Ghosh (2022) examined game-adjacent communities and conversations about popular games on X and Reddit and found that racism and sexism are rampant on these platforms.

Games, however, have also been sites of prosocial and positive behavior. A 2021 ADL report found that 99% of online multiplayer game players reported positive interactions in online games, such as mentorship, friendship, and the discovery of new interests. The ESA notes that most Americans also view play positively: as a source of joy, stress relief, and mental stimulation, as a way to build community and connection, and as a way to foster skills like conflict resolution, teamwork, and problem solving. Researchers like former ADL Belfer Fellow Kat Schrier and ADL Belfer Fellow Constance Steinkuehler have written extensively about the use of games for learning, empathy, care, and compassion.

The study

To understand the presence of anti-Jewish hatred, as well as prosocial behavior, in the wake of the October 7 attacks, 11 university students were asked to play three games (Valorant, Counterstrike 2, and Overwatch 2) in one-hour increments, using one of two kinds of usernames (Proud2BJewish or a control). This initial study is one part of a larger project that will look not only at the experience of Jewish users but also at the ways in which other identities are targeted by hate in online games. The broader project will be published later in 2024.

This report looks at three questions:

1. Does harassment occur in online multiplayer games while expressing a Jewish identity, as compared with a control (non-identity related) username?

2. If so, what types of harassment occur?

3. Is there any prosocial behavior happening in these games?

The three games we investigated:

Valorant (Riot): In this first-person tactical hero shooter, each player plays as an Agent and works with a team of four others to either attack or defend. Players in this study played the Swiftplay mode, which consists of shorter, unrated matches in which one team tries to plant a bomb-like device called the spike and the other tries to stop them. The first team to win five rounds (out of a maximum of nine) wins the match. Each Agent has a different set of special abilities.

Counterstrike 2 (Valve): This first-person tactical shooter replaced Counterstrike: Global Offensive (CS:GO) in late 2023. As in Valorant, players play on teams (terrorists and counter-terrorists) and must plant (or defuse) an explosive or eliminate all members of the opposing team. Players in this study played in the Casual mode, in which two teams of ten compete against each other.

Overwatch 2 (Blizzard): Like Valorant, Overwatch 2 is a hero shooter in which each player selects one of 35 hero characters. Characters fall into several classes, or roles, such as “damage” and “support.” Players play in teams of five versus five; in this study, players had to play as Moira, a support hero.

Results

Overall instances of harassment and identity-based harassment:

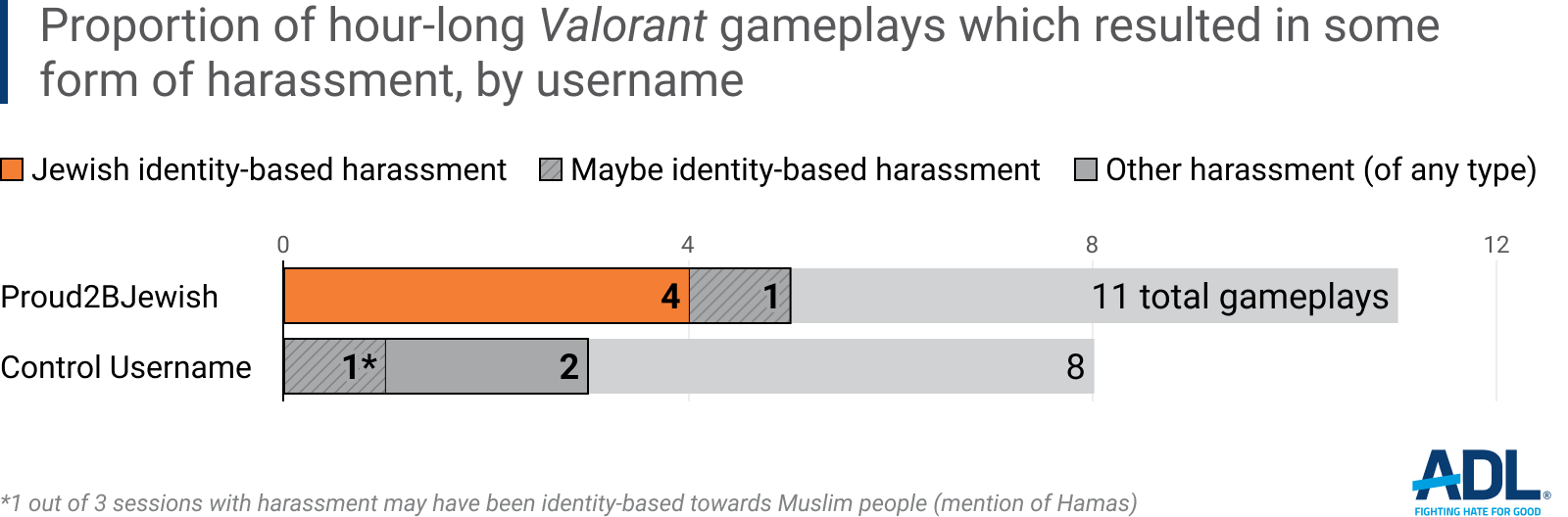

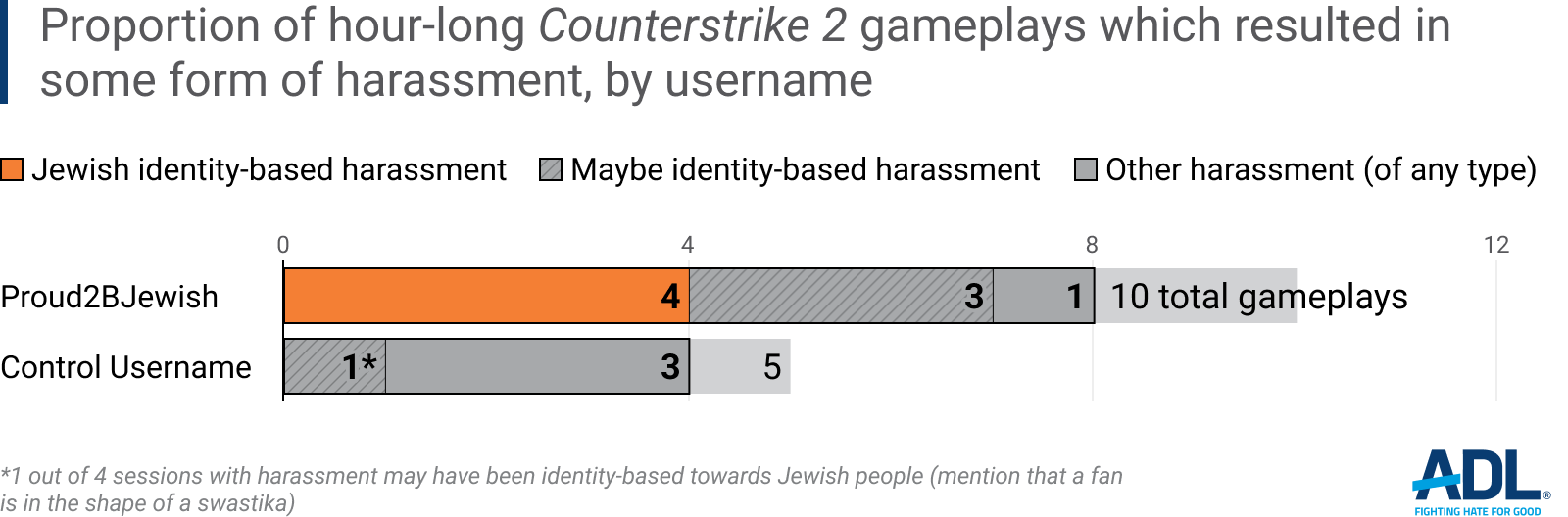

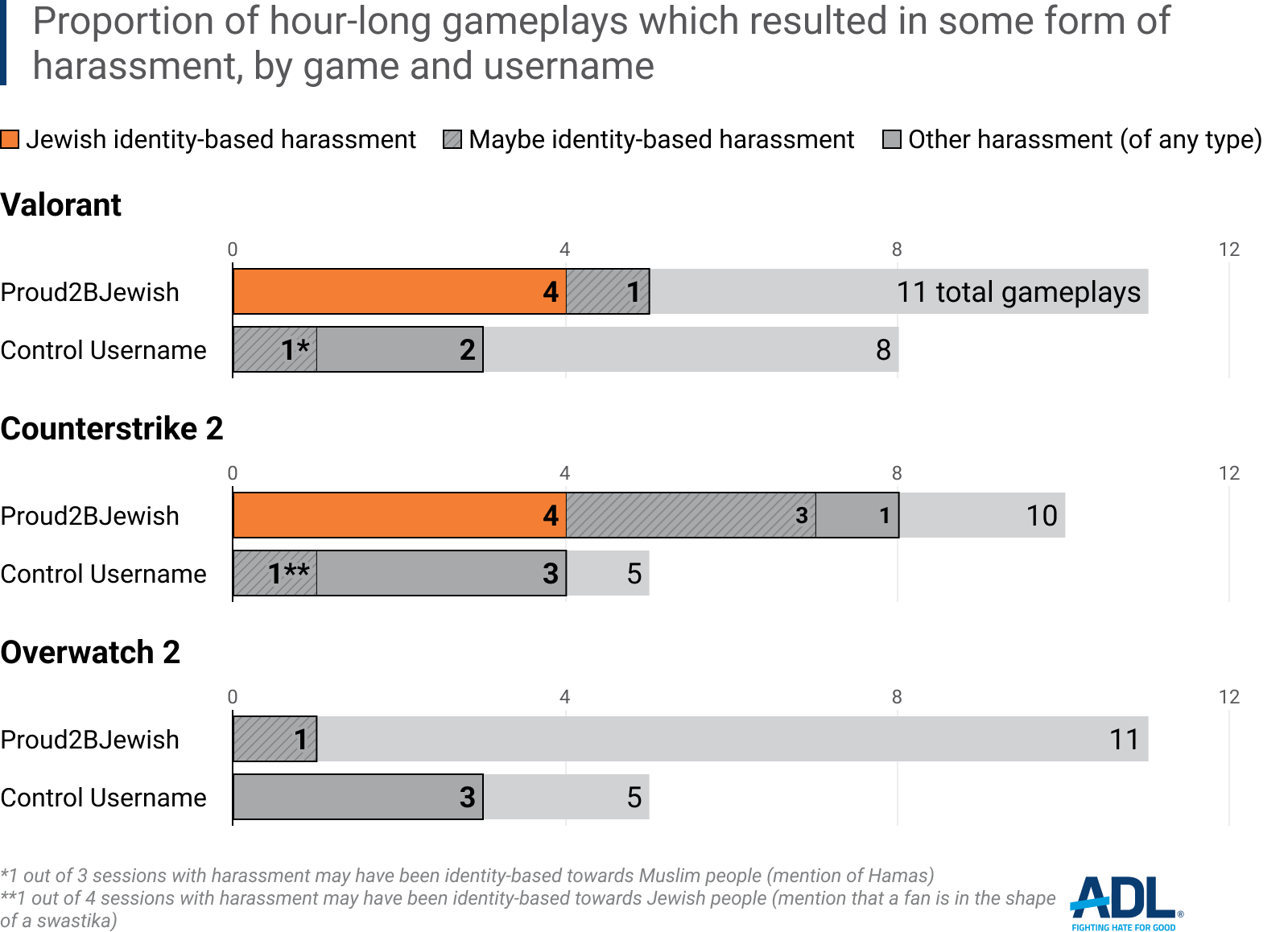

Players of Valorant, Counterstrike 2, and Overwatch 2 observed or experienced bias and harassment in many of their gameplay sessions. 44% of gameplay with Proud2bJewish usernames resulted in some form of harassment. 25% of those hours (8 hours in total) definitely involved harassment on the basis of identity (hate-based harassment toward Jews), and an additional 5 hours may have, bringing the total to 41% (see Figures 4, 5, and 6).

On the other hand, only one of the gameplay sessions with the control username may have involved hate-based harassment toward Jews: in that Counterstrike 2 session, one of the players kept stating that the fan on their computer was shaped like a swastika.

Play sessions in Valorant and Counterstrike 2 had more harassment overall than those in Overwatch 2. Looking only at Valorant and Counterstrike 2, over half of the gameplay sessions in these two games (59%) involved some type of harassment across both conditions. Around 55% of the gameplay sessions with the Proud2bJewish username in these two games included harassment that was definitely or possibly hate-based toward Jews. This ranged from being called a “Jew” in a derogatory manner, to hearing phrases like “gas the Jews,” to being intentionally shot at by teammates. Of the control-condition sessions, 38% in Valorant and 80% in Counterstrike 2 had some type of harassment (though only one control session possibly included identity-based harassment toward Jews).

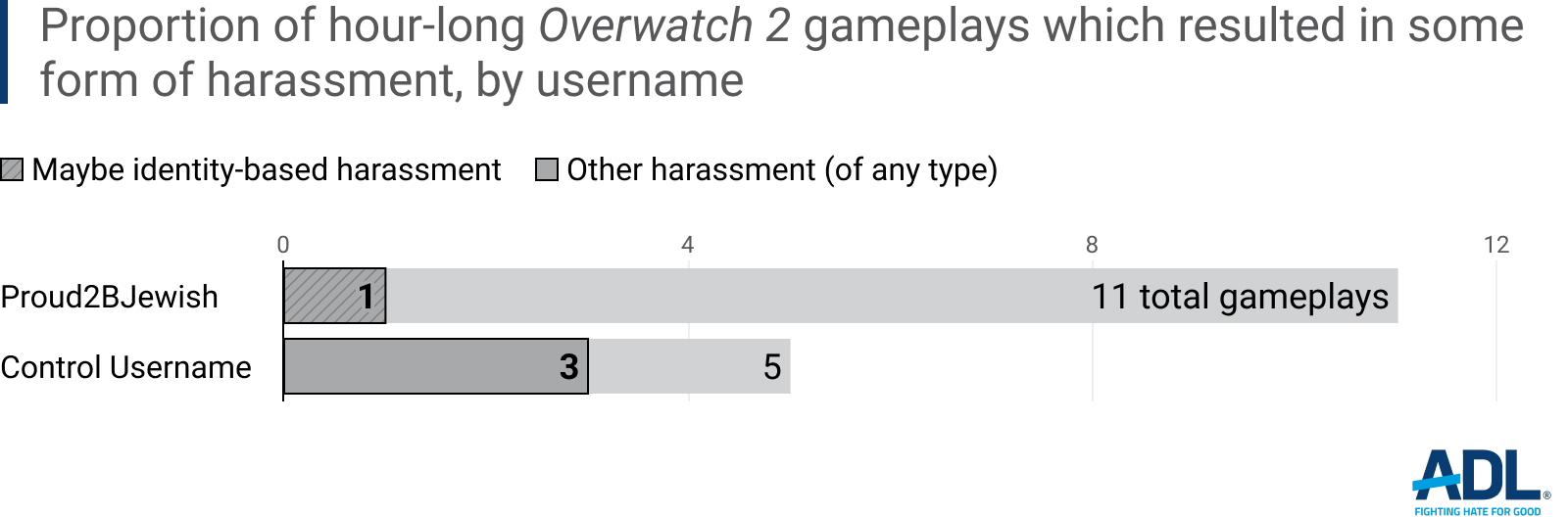

While some harassment was identified in both new and expert gameplay sessions of Overwatch 2, it was not as widespread as in the other two games in this investigation.

By comparison, harassment of any type was witnessed or experienced in 25% of gameplay sessions in Overwatch 2, and only one gameplay session (6%) may have involved harassment on the basis of Jewish identity.

In four of the sessions (8% of all hour-long sessions across games and conditions), players witnessed other forms of identity-based bias. For instance, one Counterstrike 2 session (with the control username) included identity-based harassment toward the LGBTQ+ community and people of color; in this case, Jews were not specifically targeted.

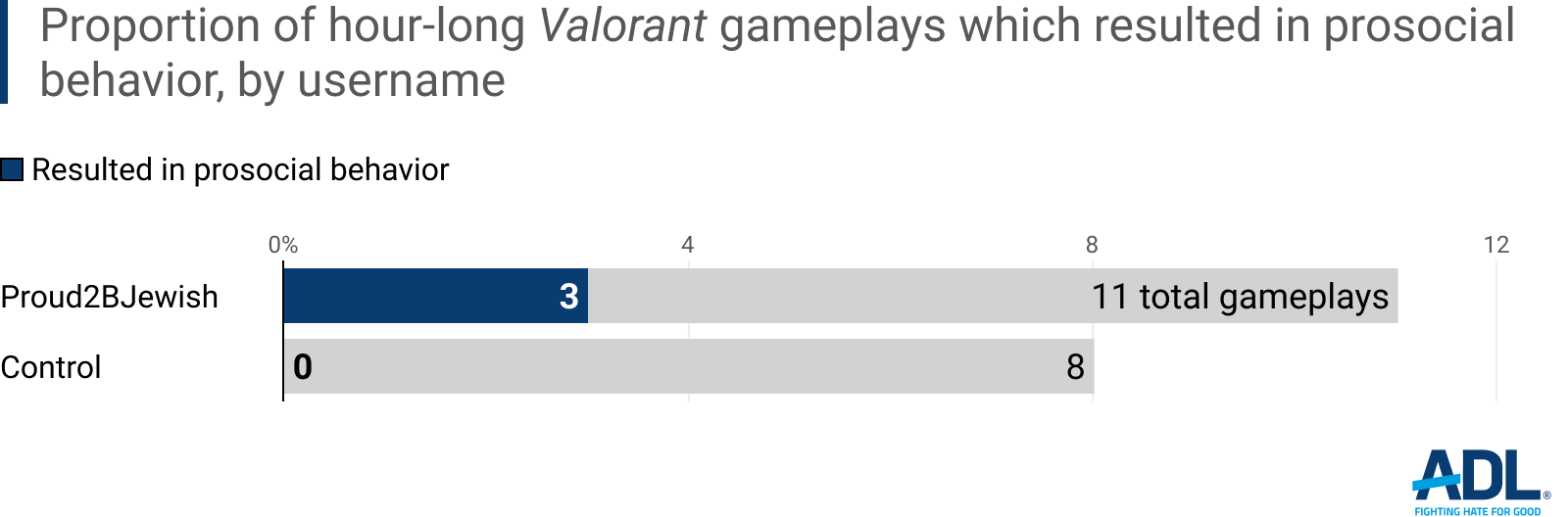

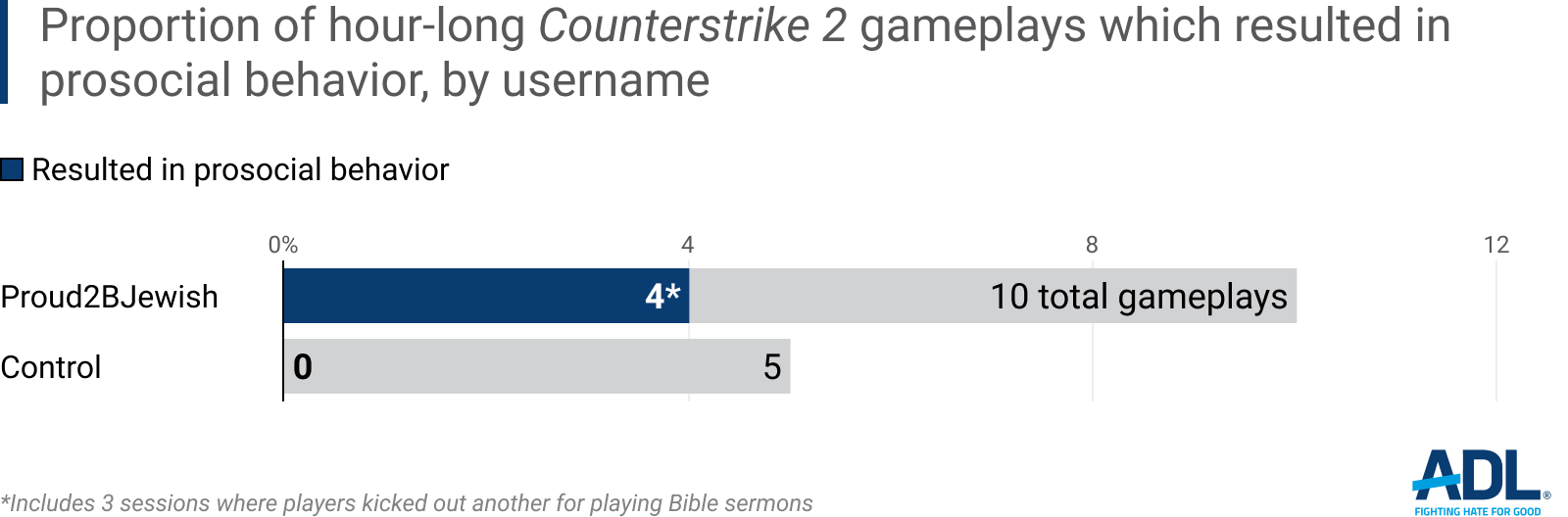

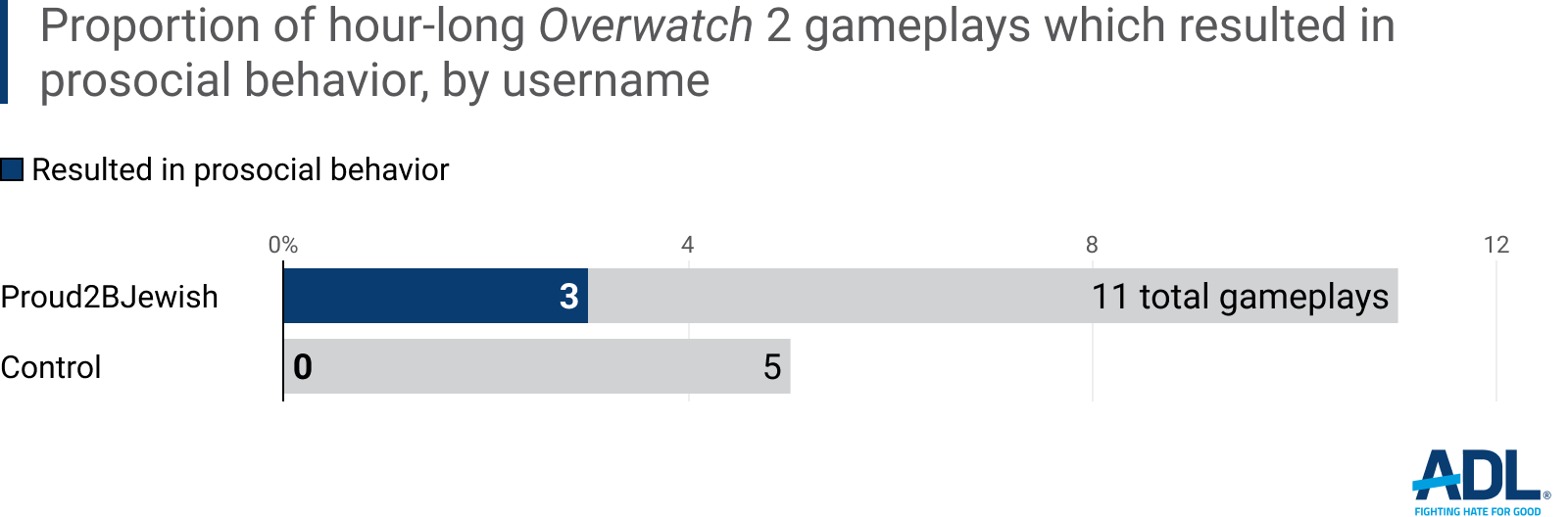

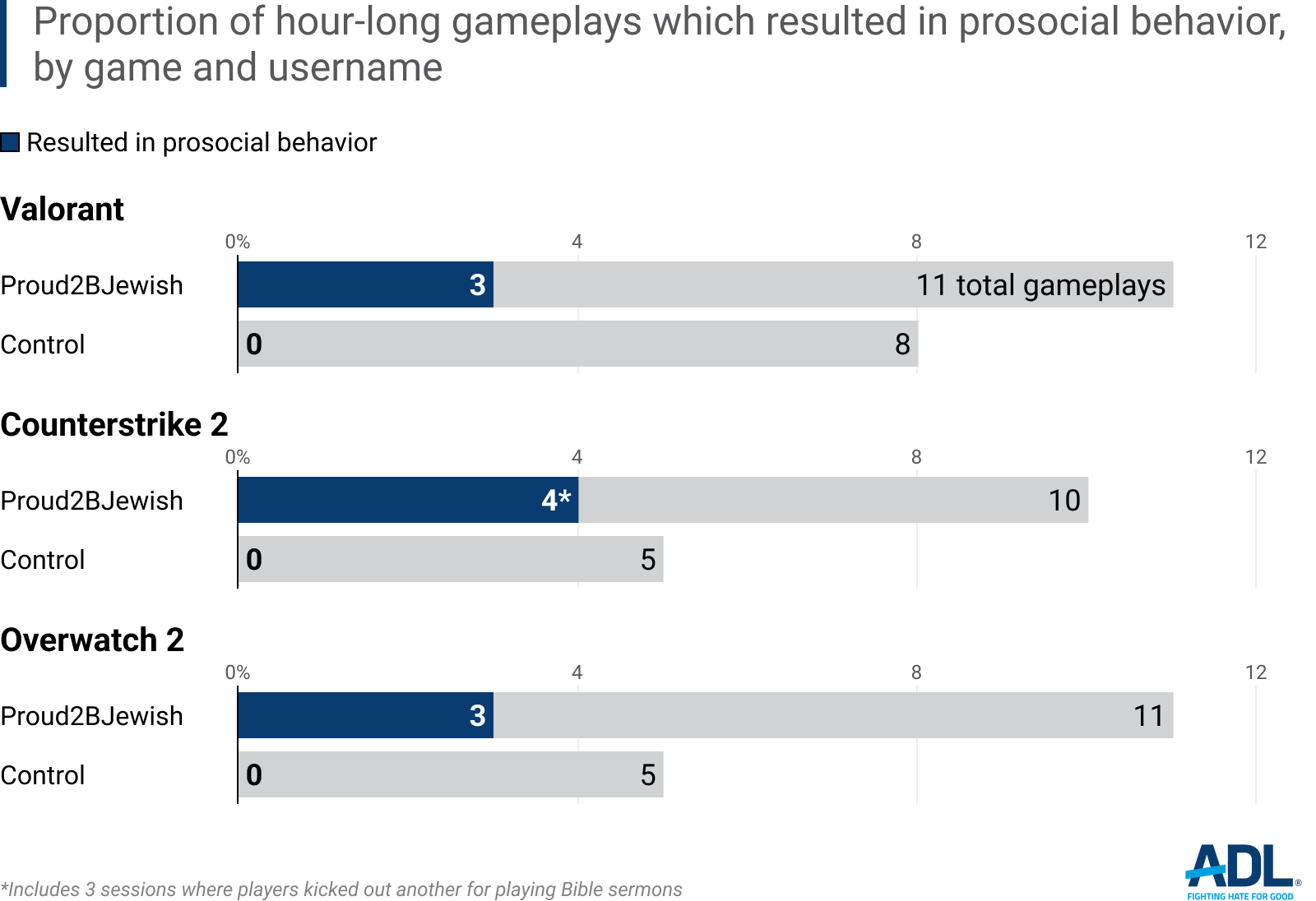

Prosocial behavior and positive play were also identified in these games: around 31% of the play sessions with the Proud2bJewish username included positive play, such as voting to kick out someone spouting racist slurs or disrupting gameplay, or receiving endorsements or friend requests from other players. Many sessions included both harassment and positive play within the same hour.

Examples of identity-based harassment of “Proud2BJewish” player in Valorant include:

“The Iso [character in Valorant] on the team yelled ‘fuck Jewish people’ at the very last second.”

“The person who picked Neon scoffed at the student’s name and said they should not be proud to be Jewish right now. Then he said, ‘whatever’ and did not continue speaking on the topic.”

“People kept saying ‘Free Palestine’ in the chat.”

“You have good aim hammaz wants to recruit” (Control)

Examples of identity-based harassment of “Proud2bJewish” in Counterstrike 2 include:

“One teammate followed me around and shot at me for a while.”

“Kept being called a ‘Jew’ for no reason.”

“A player on the other team was named Hitler.”

“People tried to vote to kick me at one point. I’m not sure why. Some people voted yes but it didn’t pass.”

“[One player] threatened to get antisemitic if I didn’t talk. [They] talked about Jew tunnels.”

When interviewed about playing the three games, the participants described what happened in the games and how they responded to any harassment. All of the interviewed players felt that there was less harassment and toxicity than they had expected; they were surprised that there was not even more hate and harassment in the games they played. All of them said that none of the harassment bothered them personally.

In a few instances, participants observed other players in the games reporting, muting, or banning another player. This was usually prompted by general cursing, slurs unrelated to Jews, or disruptive gameplay behavior (purposely not playing), rather than by Jewish slurs or antisemitic harassment. In a few of the sessions, players voted out a player who was reading sermons and generally annoying the others, rather than one who was harassing people. None of the participant players initiated a report or voted to block another player, except against players who were playing Bible sermons over their audio.

Overall instances of prosocial behavior

Prosocial behavior was also present, though it mostly related to gameplay rather than identity expression. Counterstrike 2 (27%), followed by Overwatch 2 (19%), had the highest rates of cited positive interactions. Most of these were either “good job” sentiments in chat or votes to kick out players who were sharing Bible sermons over voice chat (the sermons were seen as disruptive because they prevented players from hearing each other strategize or concentrating on the gameplay). By contrast, 16% of Valorant gameplay had some form of prosocial or positive behavior. Only a small share of the prosocial behavior involved people standing up to someone who was bullying or harassing another person. (Note: the student-players were told just to play, and not to talk or type about anything other than what was absolutely necessary for gameplay.)

The student-players noted that when they were harassed based on their username, no one else stood up for them. There was only one instance (out of all 50 gameplay sessions) where another player stood up to a harasser who was being racist, and none where someone was being antisemitic. One student said, “other players were either A. silent and ignored the general harassment I experienced, or B. were just as toxic/harassing as the other people.” Another student-player noted that the harassment seemed contagious: “The harassment actually spurred on other players to harass each other.”

Some of the gameplay experiences included both positive and negative experiences. However, many of the gameplay experiences had no notable antisocial or prosocial behavior at all—no speaking, no chats, no disruption, and no prosocial gameplay; there was just competitive gameplay.

Examples of prosocial behavior for “Proud2bJewish” in Valorant:

“Some games I do well or clutch a round, and then they say ‘nice/good job/woahhh, etc…’”

“Got some ‘nice jobs.’”

Examples of prosocial behavior for “Proud2BJewish” in Counterstrike 2:

“Teammates kicked out person who was using racist terms and slurs in general.”

“Teammates kicked out a person who was playing a religious sermon.”

Examples of prosocial behavior for “Proud2BJewish” identity in Overwatch 2:

“I [was] asked to stay as a team, so I didn’t have to find another queue and get into more games faster. And I was doing good, so people like playing with people who will help carry the team.”

“After one game, I believe my first one, one of my teammates gave me an endorsement after the game.”

Context Matters

The way actions or language were interpreted depended on context. Calling someone a “Jew” or “Jewish” in the game in a disparaging manner, for example, was considered problematic or harassing behavior. This was used as a slur in a few of the games. In addition, some student players dismissed slogans and words like “Free Palestine” or “Jew” as not bothering them, perhaps because they themselves are not Jewish, and/or are used to hearing such things in game environments. It was only after speaking to a Jewish friend, as one of the players noted, that they realized saying “Free Palestine” to a Jewish person might be viewed as an intentional insult.

Moreover, the same behavior can be seen as positive in some instances (teabagging after a victory) but as problematic or harassing in others (teabagging after a defeat, or teabagging while saying other words). Students commented on whether players were targeting them because of the gameplay (being shot at by the opposing team in a competitive first-person shooter) or because of their Jewish username (being shot at by their own teammates). Depending on the context within the game, being shot at could be interpreted in a variety of ways. One of the players, for instance, said that someone was shooting at their corpse, and another said others would not accept their weapons; both are gameplay indicators of aggression. Non-gamers unfamiliar with the cultural norms of these communities might not notice or look for such indicators.

These types of nuances might make it difficult for AI moderation tools solely based on large language models to pick up hateful behavior and marginalization. ADL Belfer Fellow Libby Hemphill echoes this view, explaining that “When content moderation is too reliant on detecting profanity, it ignores how hate speech targets people who have been historically discriminated against. Content moderation overlooks the underlying purpose of hate speech—to punish, humiliate and control marginalized groups.”

All of this suggests that moderators and researchers cannot simply listen to or watch gameplay to learn about hateful behavior and speech in online games. We need to understand the context of the game and the norms of a particular game’s culture to fully grasp how someone may or may not be harassed and marginalized in these spaces.

In the interviews, the student players framed expressing a Jewish identity as problematic, as likely to cause problems, or as undesirable. One student noted that they assumed other players thought they were “a troll account” because of the username and because they were not good at the game. Other students described their initial trepidation about using this username in these spaces. All of them, however, shared their pleasant surprise that the gameplay sessions “weren’t that bad.” A few of the students mentioned “having a good time playing the game” with the Jewish username. Despite witnessing antisemitic, Islamophobic, and other racist behavior and language, they seemed inured to its effects, and said that they often saw “much worse” behavior in previous play sessions with these games (with their usual username).

These results relate to other studies on the normalization of toxic culture. Adinolf & Turkay (2018) interviewed game players in a university sports club and found that participants seemed inclined to rationalize toxic behaviors and language as part of competitive gaming culture. Likewise, Beres et al. (2021) explored player perceptions of toxic behavior, noting that players may not report negative behavior because they think it is fine, typical, or not concerning. This indifference is not necessarily a sign of resilience, since identity-based hate and harassment in games and beyond have been shown to be damaging; it may instead reflect a feeling of helplessness. That players who observe hate may not be worried about hate speech and marginalization in games is itself worrying.

Conclusion and Recommendations

Nobody should have to hide their identity in their online or offline communities. As more people engage in online gaming spaces, and particularly competitive gaming spaces, we need to find ways to minimize toxicity. Players themselves may be directly affected, but those watching via other platforms such as Twitch or in Discord communities may also be indirectly affected by these negative behaviors. The impact of this type of behavior extends beyond the game itself. As Kowert et al. (2024) note, antisocial and negative behaviors like trash talking or hate speech also affect players’ mental health, just as they would in the classroom, on the playground, in the office, or at a town hall meeting. This is one reason many players stop playing and become completely marginalized from the game and its communities. Players may also respond by disrupting or interfering with others’ gameplay, reinforcing the notion that games are toxic and thereby continuing the cycle of toxicity.

Games do not have to be toxic, and most players do not want to play in toxic environments. This study’s investigation of three first-person shooters, each with different levels of harassment and prosocial behavior, suggests that design and community matter, not just the fact that the games are first-person shooters. Games can facilitate prosocial outcomes and wellbeing, and we should continue to advocate for our games and gaming communities to be more compassionate, rather than cruel.

Recommendations for industry

Hate speech, toxic discourse, and harassment happen in online competitive games—but they are not inevitable. It is crucial that company executives, game developers, community managers, and other people involved in designing game environments consider the following:

Implement industry-wide policy and design practices to better address how hate targets specific identities. Hate manifests differently based on the identity that is being targeted. Companies should look at their policy and design solutions taking into account this difference. They should work with trusted industry partners and experts that represent impacted communities, such as ADL, to ensure that these policies are effectively designed and implemented across their platforms.

Incentivize and promote prosocial behavior through design. In addition to countering hate, game companies must encourage players to positively engage with one another. For example, Overwatch 2 enables endorsements, which could be used to recognize not only another player’s excellent gameplay but also actions that make the community safer, such as standing up to hate. In the words of ADL: “Use social engineering strategies such as endorsement systems to incentivize positive play.”

Expand, not contract, resources. Rather than reducing Trust & Safety headcount, as many companies have done in 2023 and 2024 thus far, companies must expand resources in this important area. Failing to do so will eventually affect player retention and spending, as many players will leave a game that is too toxic, or never play it at all.

Improve reporting systems and support for targets of harassment. Games companies cannot stop at creating policies against online abuse: they must also enforce them comprehensively and at scale. Having effective, accessible reporting systems is a crucial part of this effort as it allows users to flag abusive behavior and seek speedy assistance. Companies must ensure they are equipping their users with the controls and resources necessary to prevent and combat online attacks. For instance, users should have the ability to block multiple perpetrators of online harassment at once, as opposed to having to undergo the burdensome process of blocking abusive users individually. Games companies must connect individuals reporting severe hate and harassment to human employees in real-time during urgent incidents.

Strengthen content-moderation tools for in-game voice chat. Often, bad actors in gaming spaces can evade detection by using voice chat to target other players in online games. To date, the tools and techniques that detect hate and harassment in voice chat lag behind those that moderate text communication (though some companies have made progress in the last year). Although this requires an investment of resources, companies must address this glaring loophole to ensure their users’ safety.

Release regular, consistent transparency reports on hate and harassment. Unlike mainstream social media companies, games companies—with few exceptions such as Xbox, Roblox and Wildlife Studios—have not published reports about their policies and enforcement practices. Transparency reports should include and share data from user-generated, identity-based reporting, aggregating data around how identity-based hate and harassment manifest on their platforms and target users. For guidance, companies can consult the Disruption and Harms in Online Gaming Framework produced by ADL and the Fair Play Alliance, which provides a set of common definitions for hate and harassment in online games.

Submit to regularly scheduled independent audits. Games companies should allow third-party audits of content-moderation policies and practices on their platforms so the public knows the extent of in-game hate and harassment. While transparency legislation efforts are still underway, games companies should voluntarily provide independent researchers and the public access to this essential data. In addition to promoting accountability, independent audits pave the way for games companies to assess areas for improvement with data-based findings.

Include metrics on online safety in the Entertainment Software Rating Board’s (ESRB) rating system of games. The ESRB provides information about a game’s content, allowing adults, parents, and caretakers to make informed decisions about the games that their children may play. Although game companies caution players that the gameplay experience may depend on the behavior of other players, no components related to the efforts of online games to keep their digital social spaces safe exist in the ESRB ratings at present. The ESRB should devise and implement these kinds of ratings across all the online games they review.

Recommendations for government

In February 2024, ADL released its annual publication Hate is No Game: Hate and Harassment in Online Games 2023. This whitepaper highlights a specific issue surrounding hate and harassment in games, and further expresses the need for governments to take a more active role in fighting hate and harassment in games. As in our February publication, ADL recommends:

Prioritize transparency legislation in digital spaces and include online multiplayer games. States are beginning to introduce, and in some cases pass, legislation requiring major social media platforms to be transparent about their content policies and enforcement (see California’s AB 587). Legislators at the federal level must prioritize the passage of equivalent transparency legislation and include specific considerations for online gaming companies. Game-specific transparency laws will ensure that users and the public can better understand how gaming companies are enforcing their policies and promoting user safety.

Enhance access to justice for victims of online abuse. Hate and harassment exist online and offline alike, but our laws have not kept up with increasing and worsening forms of digital abuse. Many forms of severe online misconduct, such as doxing and swatting, currently fall under the remit of extremely generalized cyberharassment and stalking laws. These laws often fall short of securing recourse for victims of these severe forms of online abuse. Policymakers must introduce and pass legislation that holds perpetrators of severe online abuse accountable for their offenses at both the state and federal levels. ADL’s Backspace Hate initiative works to bridge these legal gaps and has had numerous successes, especially at the state level.

Establish a National Gaming Safety Task Force. As hate, harassment, and extremism pose an increasing threat to safety in online gaming spaces, federal administrations should establish and resource a national gaming safety task force dedicated to combating this pervasive issue. As with task forces dedicated to addressing doxing and swatting, this group could promote multistakeholder approaches to keeping online gaming spaces safe for all users.

Resource research efforts. Securing federal funding that enables independent researchers and civil society to better analyze and disseminate findings on abuse is vitally important in the online multiplayer gaming landscape. These findings should further research-based policy approaches to addressing hate in online gaming.

Recommendations for researchers

There are many gaps in the research surrounding harassment and hate in online games. We need more research as to what is happening in these games, and also how to design interventions to promote more prosocial play.

Investigate these games more deeply, with more players and more play sessions.

Consider expanding this investigation to other online games (including non-competitive ones, or ones for other demographics). For instance, how do Roblox games, or games that are not first-person shooters, compare to the games in this study in terms of harassment?

Research different variables such as:

Longer gameplay (2 hours, not 1) or other increments of data (30-minute increments or by game)

Playing well (intentionally)

Playing poorly (intentionally)

Expert levels for each of the games (or at least higher than new)

Focusing on only the lobbies vs. gameplay matches

Speaking aloud as players of different gender identities

Try out additional usernames and/or conditions

Consider names like Proud2bMuslim and Proud2bMexican, which are based on additional ethnicities, religions, and nationalities.

Use voice chat with non-English languages and non-U.S. accents, such as Hebrew language or Israeli accents.

Study the specific elements (design, moderation, diversity level, game environment, players) that influence how much a game community does or does not encourage hate or harassment versus prosocial behavior.

Recommendations for civil society

We all need to help advocate for safe, moderated, and caring communities in our virtual civic spaces.

Everyone should consider the impact of toxic discourse and harassment in our spaces, including online multiplayer games.

We should hold government and industry accountable. We should demand safety and care in our online multiplayer spaces and ask government and industry leaders to protect us and our families.

As more and more people spend a significant part of their social and civic lives online in environments like online multiplayer spaces, we need to prioritize having up-to-date policies and practices to ensure that we are protected from harassment.

We need to not be complacent that “games will be games” and toxic cultures are to be expected. Hate and harassment are not inevitable. We should urge game companies to do better with their communities and game designs.

Parents of online competitive game-playing children (whether 8 or 18-years old) should consider the types of interactions they might be having in these games. They should have engaged discussions around issues of safety online, such as around what their child should do if they are harassed. But they should also consider how to encourage their kids to stand up to harassers (such as by reporting any harassment), and not propagating harassment.

Games can support people's well-being, but they can also harm mental health. We need to advocate for games that promote good health rather than cause harm.

The public should encourage and incentivize resilient and caring communities, both offline and online, to model prosocial civic behavior wherever people interact.

Appendix

Methodology

For two months in late 2023 and early 2024, 11 university students participated as game players in three online competitive first-person shooter games (Valorant, Counterstrike 2, and Overwatch 2). The students recorded their gameplay and filled out a short checklist after each hour of play.

Choice of Games

These three games were chosen because they are popular multiplayer first-person shooter games (Counterstrike is the most-played game on Steam, for instance), they are all free-to-play, and all three appear in the 2022 ADL report. Two of them, Counterstrike and Valorant, were the two games with the highest reported harassment and online hate (86% and 84%). For comparison, Overwatch 2, which also appears in the report but ranks slightly lower on the list, had harassment reported by 73% of respondents.

Usernames

Students played each game in hour-long increments, using one of these usernames:

Proud2BJwish or Proud2BJewish or ProudToBeJewish or Proud2bJewish

Proud2B4399 or User2258999 or User4399 (“control” usernames with randomized numbers)

Usernames were sometimes shortened due to character limit constraints for one of the games, and sometimes altered if the game wouldn’t let them use that exact username.

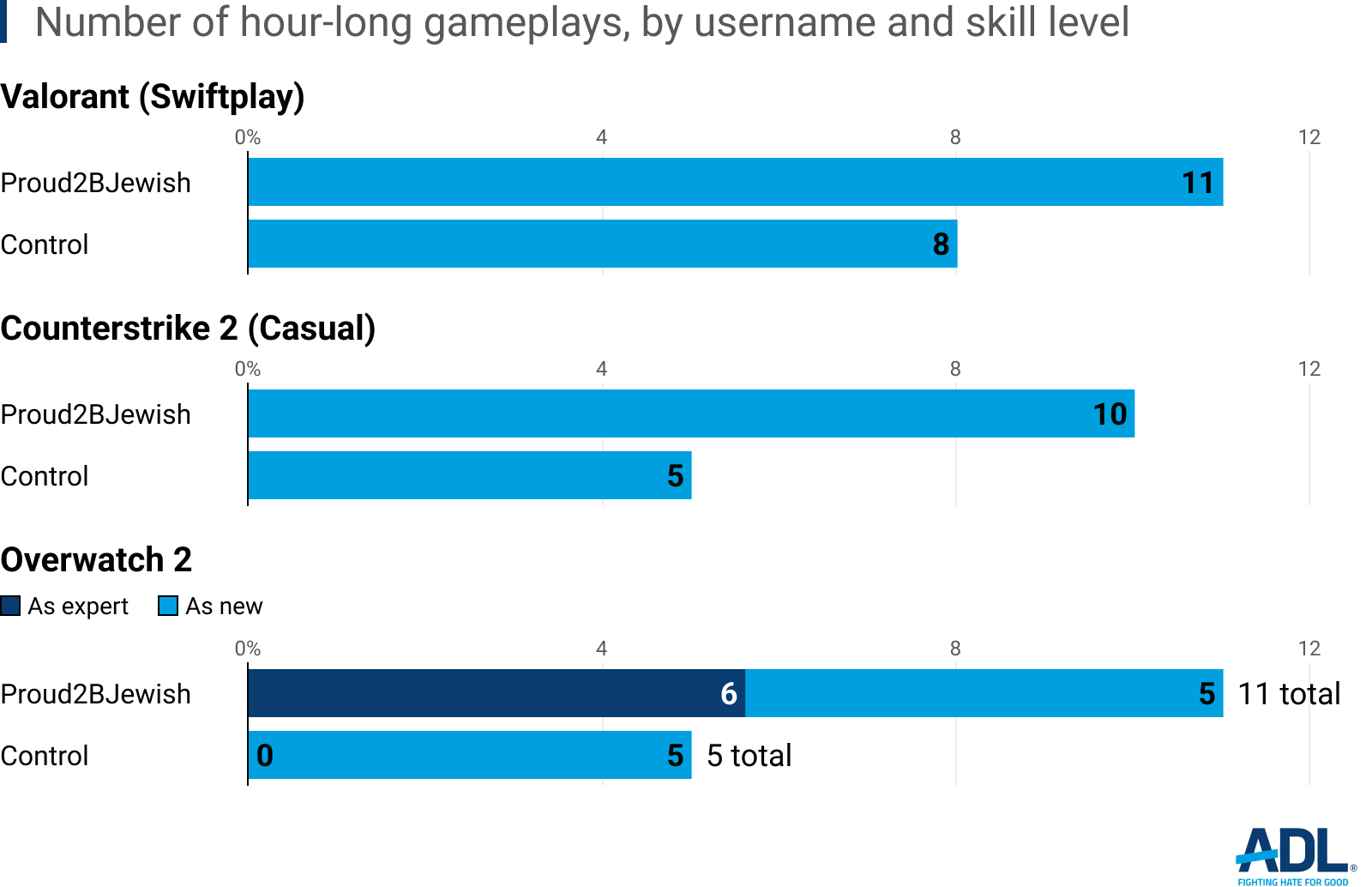

For Valorant and Counterstrike 2, participants played as “new” players. For Overwatch 2, they played as either new or expert players. Please see Figures 1, 2, and 3 for more information on the different conditions.

Playing as a “new” player meant that the player created a brand-new account for that game and started out with one of the three usernames. Playing as an “expert” for Overwatch 2 meant that players used a Platinum 1 level account, which was shared among the “expert” players. See the specific number of hours of gameplay per game and per username in Figures 1, 2, and 3.

In every game, players neither spoke nor typed, to ensure that the results were not affected by other players' perception of intersectional identities (such as gender or racial identity, alongside the username). They enabled team voice chat and recorded both what was happening on the screen and what was said in the team voice chat.

Procedures

Players filled out a checklist for each game-hour they played, noting which game they played, which username they used, and what types of behaviors and chats (voice, text) occurred during that hour. For each one-hour increment, they wrote down all instances of harassment, including name-calling, problematic virtual gestures, general slurs, and other toxic behavior. For instance, they recorded cases where someone called another person a “Jew” in a derogatory way, or where a player purposely attacked their own teammate by shooting at them or their corpse.

Players wrote down all instances of prosocial behavior, including friendship, mentorship, and praise from teammates. For instance, players noted if they got endorsements from other players, or if others stood up against misbehavior and toxicity.

Each player also took part in a short interview with the researcher. All completed checklists and interviews were coded using an iteratively developed coding scheme. Each instance of problematic or prosocial behavior reported by the players was assigned one or more codes, such as “slurs (to the player)” or “other players endorsed a player.”

Participants

All participants in this study are university students. Participants varied in age and gender identity, with about half identifying as male and the other half identifying as female and/or non-binary. Students volunteered for the study in response to an advertisement shared with all students on Handshake, a career platform, and an advertisement on a Discord server (Discord is a social media platform made up of many public and private communities, or “servers”). Students had a range of experience levels (novice to expert) with the games played and with online competitive multiplayer games in general.