
Executive Summary

Social media content creators on sites like YouTube are suffering due to severe harassment. Members of minoritized groups, such as women, Jews, people of color, and LGBTQ+ creators, are especially at risk for identity-based harassment. This harassment is typically experienced in the form of hateful comments creators receive on their content. In an original user research study, ADL finds that YouTube creators who face harassment, especially identity-based harassment, are stressed and overwhelmed moderating these comments. To help alleviate this burden, we propose a user-centered, collaborative tool that enables creators to moderate their comments automatically, using customizable templates they can share.

Key Findings

Harassment is highly distressing and damaging to content creators. Dealing with hateful and unwanted comments is emotionally draining and undermines creators’ ability to engage with their audience. This is especially the case for targets of identity-based harassment.

Content moderation for creators is onerous. Moderating hateful comments is labor-intensive and stressful. Creators fear under-moderating, which leads to more hateful comments and possibly penalties from the platform, or over-moderating, which may reduce engagement with their content or create backlash from viewers.

Word filters are inadequate and time-consuming. One of the few tools that content creators have for automating comment moderation is a word filter, which lets creators specify phrases that get automatically removed. Word filter tools on most major platforms are rudimentary, however. This leads to significant frustration and manual effort.

Content creators want collaborative filters they can adapt to their needs. Platforms could significantly reduce the effort and stress that harassment causes content creators by enhancing their automated comment moderation tools to be more controllable, customizable, and shareable.

Recommendations

For Platforms

Content creators perform vital moderation work to reduce the toxicity of comments and protect themselves against harassment. Platforms should build out their existing word filter tools to provide features that alleviate stress and manual effort while allowing creators to assist each other.

For Developers

Because harassers use targeted and shifting strategies, creators emphasize the importance of being able to customize what they automatically filter and understand why something is removed. Developers implementing automation capabilities should build in support for authoring filters, such as templates and suggestions, as well as features for explaining and tracking automated actions, including visualizations and an activity log.

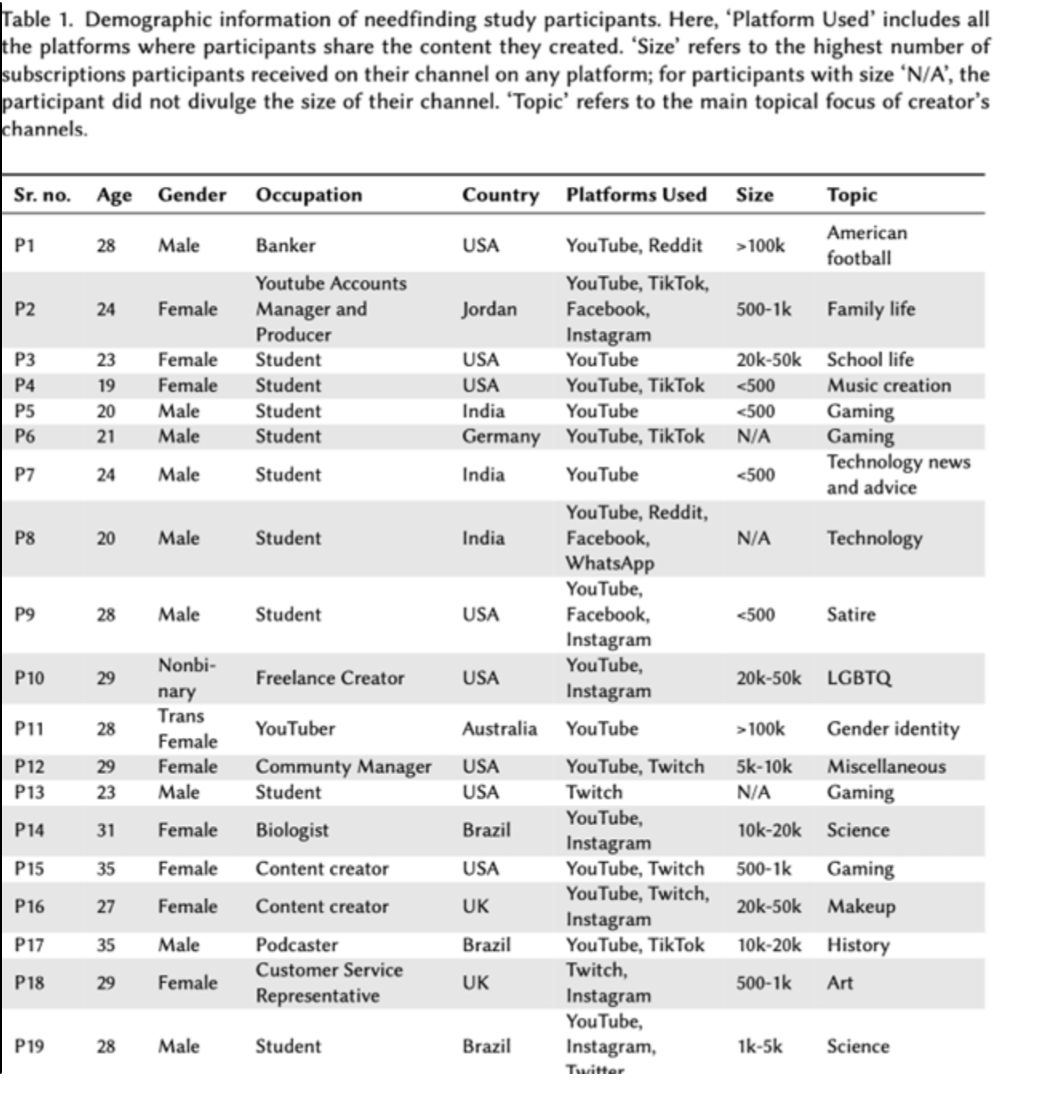

How Content Creators Experience and Combat Harassment

To understand their experiences with harassment and frustrations with current content moderation tools provided by platforms, we interviewed 19 content creators active on platforms such as YouTube, Twitch, TikTok, and Instagram between October 2020 and April 2021. Creators had anywhere from under 500 to over 100,000 subscribers, and created content on a host of topics, including gaming, sports, science, technology, history, art, makeup, LGBTQ and gender-related content, and everyday life.

People who create content for online platforms such as social media are a fast-growing group as more people seek to gain influence and make money on social media. Their work requires cultivating and engaging with an audience. This opens them up to harassment that can threaten their ability to build an audience or earn income.

“I actually enjoy reading comments because they give you a sense of achievement, and it’s really rewarding honestly, especially on videos where it’s building awareness and seeing how people are reacting to that. For example, we did a video about gender equality, and it was really, really nice to see how people are reacting to that and their own experiences.” - A content creator who produces content about family life for YouTube, TikTok, Facebook, and Instagram.

Creators invest a great deal of time, emotional energy, or money to address harassment and suffer occupational stressors contributing to burnout, PTSD, and mental health risks. Especially at risk for identity-based harassment are members of minoritized groups, such as women, Jews, people of color, and LGBTQ+ creators, including women and LGBTQ+ streamers on Twitch.1 By putting more of themselves out in public to build an audience, creators become more exposed to identity-based attacks.

“[We received comments about this] friend of mine that is a trans woman about – What is she doing? What is she wearing? Why is she talking like that? Why is she there? But she also is a scientist, she also has a Ph.D., and she is an amazing paleontologist.” – A YouTuber with a science-focused channel discussing what happened when she invited a trans woman to be a guest speaker on their video series.

Not only are creators directly targeted, but when they receive harassment via comments, they must also contend with the impact on their fans, who can see the hateful comments and may be targeted if they comment.

“It feels almost kind of embarrassing for someone to say, like, ‘You’re ugly,’ or, ‘You’re dumb,’ and then for other people to see that comment. I want to form a positive community, and if I don’t control those sorts of language, then people will think it’s okay, and then slowly the community will become a lot more negative overall.” – A YouTuber with a channel about school life.

Many creators turn to platform tools to moderate comments. While platforms do carry out their own moderation via a centralized Trust & Safety team, much of this moderation catches comments that are the most egregious or obviously harmful according to site policy. As a result, creators often go further to address the remaining harassing comments left up on their content. They must conduct significant voluntary labor themselves or pay for their own moderators to take down harassing comments.

Creators also worry that if they don’t adequately moderate their comment sections, platforms may demonetize or demote their content algorithmically. This is not a remote concern: in the past, YouTube has penalized creators who don’t remove harassing comments, placing further burden on those who are already disproportionately targeted for harassment (this policy is no longer in place today).2 But when creators do take down comments, their content may get demoted due to the appearance of lower engagement. Creators who moderate heavily also fear pushback from their audience. Five of the content creators that we spoke with discussed receiving backlash from their audience for perceived censorship.

1. Freeman, Guo, and Donghee Yvette Wohn. "Streaming your Identity: Navigating the Presentation of Gender and Sexuality through Live Streaming." Computer Supported Cooperative Work (CSCW) 29.6 (2020): 795-825.

2. https://www.thewrap.com/youtube-inappropriate-comments-demonetization/

Filtering Hate

Word filters that enable creators to configure a list of key phrases are the main tool for creators across major platforms to moderate comments. Comments containing one of the configured phrases are automatically removed or held for manual inspection. As a creator’s audience outgrows manual human moderation, rule-based automated moderation tools such as word filters offer a means for moderating at scale. Word filters reduce the time-consuming work and emotional energy required by automatically removing large volumes of inappropriate content, while still being customizable, as creators can understand the logic behind how the filter operates.
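The rule-based logic described above can be sketched in a few lines of Python. This is a minimal illustration and not any platform's actual implementation: the `WordFilter` class, the `Action` values, and the choice of case-insensitive substring matching are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from enum import Enum


class Action(Enum):
    PUBLISH = "publish"
    HOLD_FOR_REVIEW = "hold_for_review"
    REMOVE = "remove"


@dataclass
class WordFilter:
    """Hypothetical word filter: maps each configured phrase to an action."""
    rules: dict[str, Action] = field(default_factory=dict)

    def add_phrase(self, phrase: str, action: Action = Action.HOLD_FOR_REVIEW) -> None:
        # Phrases are stored lowercased so matching ignores case (an assumption).
        self.rules[phrase.lower()] = action

    def decide(self, comment: str) -> Action:
        text = comment.lower()
        matched = [action for phrase, action in self.rules.items() if phrase in text]
        # If several phrases match, apply the strictest configured action.
        if Action.REMOVE in matched:
            return Action.REMOVE
        if matched:
            return Action.HOLD_FOR_REVIEW
        return Action.PUBLISH


word_filter = WordFilter()
word_filter.add_phrase("you're ugly", Action.REMOVE)
word_filter.add_phrase("dumb")  # held for manual inspection by default

print(word_filter.decide("Great video!"))          # Action.PUBLISH
print(word_filter.decide("That was a DUMB take"))  # Action.HOLD_FOR_REVIEW
```

Because the sketch uses plain substring matching, a harmless comment such as "nice dumbbell routine" would also be held for review, which is the kind of false positive creators describe later in this report.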

But how good are existing word filter tools at addressing creators’ needs when it comes to combating harassment? How could they do better?

To answer these questions, we followed a human-centered, iterative design process consisting of three parts:

User research to understand creator needs, together with an inspection of the user interfaces of existing word filter tools

A design exploration, conducted in parallel, using mockups and feedback sessions with creators

A new prototype word filter tool implementing designs that better address the needs uncovered in our user research

We find that existing word filter tools on major platforms are rudimentary, offering little more than a textbox for users to input phrases, and pay little attention to usability or creator needs.

This limitation leads creators to expend significant manual effort setting up, auditing, and monitoring their word filters, a task that many find emotionally draining. From our design exploration, we present four major features that word filters should implement to better support creators, none of which are available on major platforms today. Finally, our prototype word filter tool demonstrates a way to implement all of these features in a working tool.

FilterBuddy, the tool we have developed, allows creators to log in via their YouTube account to create, manage, and share word filters. In feedback sessions with eight creators, the tool was well received, suggesting that platforms as well as third-party tool developers could easily and immediately improve the experiences of creators facing harassment by providing our proposed features.

What’s Wrong with Existing Creator Tools for Comment Moderation?

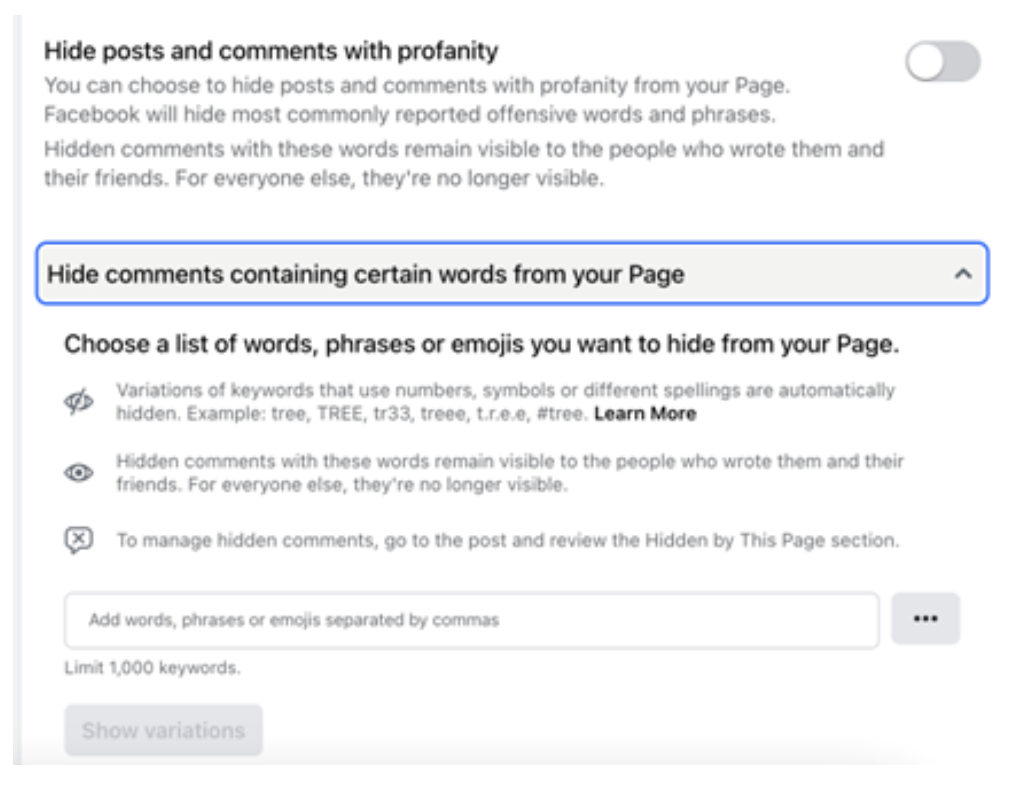

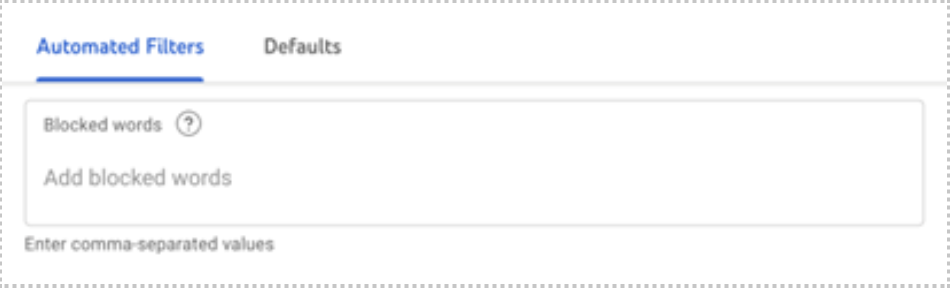

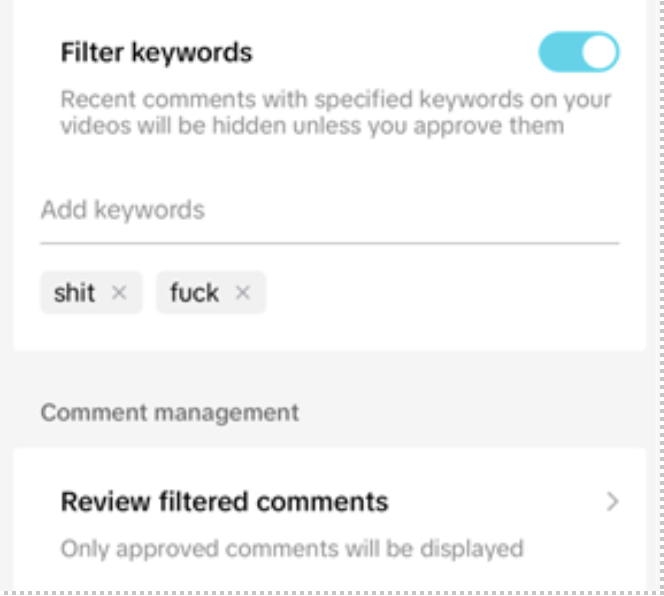

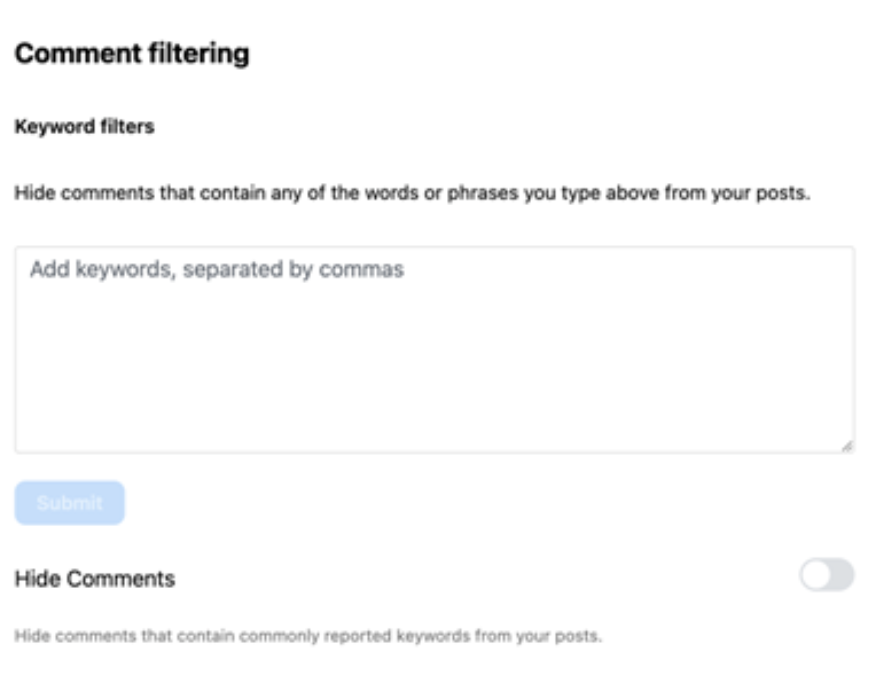

Facebook pages (screenshot)

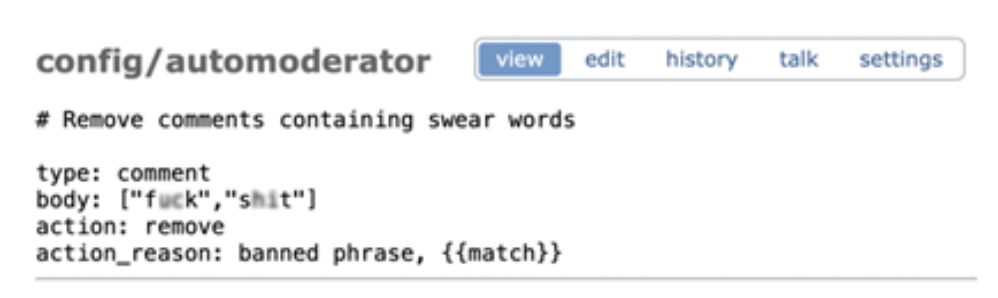

Reddit AutoModerator (screenshot)

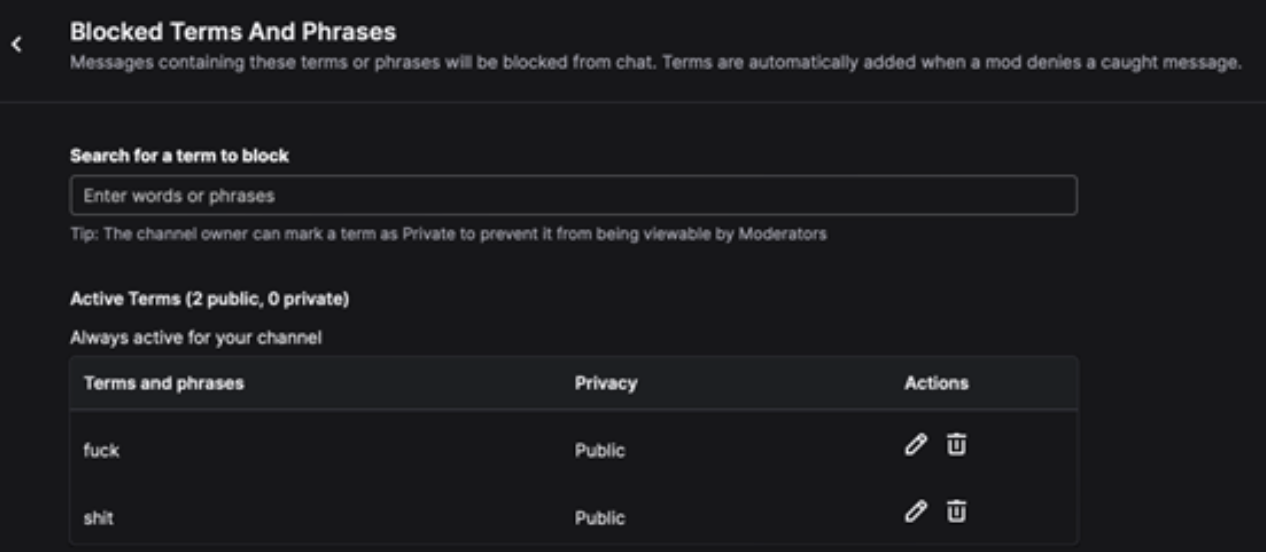

Twitch (screenshot)

YouTube (screenshot)

TikTok (screenshot)

Instagram (screenshot)

Fig. 1: Implementations of word filters on six popular platforms—Facebook, Reddit, Twitch, YouTube, TikTok, and Instagram.

(a) This setting appears on Facebook Pages, public Facebook profiles that allow “businesses, brands, celebrities, causes and organizations to reach their audience”.

(b) This community-specific setting is accessible only by the volunteer moderators of each Reddit community.

(c) This setting is available to Twitch creators on their Creator Dashboard, a webpage that allows creators to organize content and improve safety preferences.

(d) This setting is available as part of Community Settings on YouTube Studio, an official YouTube site where creators can grow their channel, interact with audiences, and manage earnings.

(e) This is available to all TikTok creators in their Settings menu, under Privacy.

(f) This is an option for all Instagram creators under the Hidden Words setting in every account’s Settings and activity menu.

In each case, the interfaces are the only information sources available for viewing and configuring word filters; there are no accompanying feedback or visualization mechanisms to assist configuration or track how the filters are performing.

Customizing moderation

Word filter moderation by content creators is just one aspect of the landscape of content moderation on social media. When it comes to handling comments, there can be a great deal of custom moderation that creators need to perform beyond what the platforms moderate. This is an example of what we consider to be a multi-level content moderation system, where a portion of the content moderation workload is on users such as content creators to establish and enforce rules.

Content creators often prefer this multi-level system, as they may have rules and norms specific to their audience or personal preferences that would not make sense platform-wide. Content moderation is not always one-size-fits-all, and it should combine top-down policies with community-based tools. Platform-wide tools may not always catch certain forms of harassment, like comments exposing creators' personal information such as address or phone number with the intent to cause certain types of harm (a practice known as doxing); or comments that misgender trans creators or reference their deadname (a former name from before they transitioned).

A few platforms offer automated, machine-learning tools for creators, such as Twitch’s AutoMod (short for automated moderation), but these are often too opaque or too general.

AutoMod blocks messages under four categories:

discrimination and slurs

sexual content

hostility

profanity

Because these tools rely on machine learning models, their workings are often opaque to creators. Several creators we spoke with did not trust such models and felt that general-purpose ones would not accurately filter out harmful content.

“I would prefer to just do it myself manually. I almost wouldn’t want that power taken away from me because moderation is so personal and difficult and very sensitive, so I think I would want complete control over it.” – A YouTuber with a channel about school life.

Without better tools, creators are fumbling in the dark, improving their filters only through a slow process of trial and error. The challenges compound as creators develop longer lists of filtered terms that they must then maintain. There are no publicly available statistics on how many terms creators typically add to their lists, but one creator shared with us a YouTube word filter list containing over 100 phrases, some of which were alternative spellings added to catch harmful or harassing content.

Four Features to Improve How Creators Moderate Comments

Word filters should demonstrate how a new filter will work using past examples. For creators we interviewed, filters often catch too many false positives. Many were nervous about adding new filters for this reason, given the pressures they face to demonstrate high engagement with their content. Three interviewees, for example, chose not to use word filters at all out of concern that many innocuous comments would be caught.

“Sometimes, like in the last few months, I would post a video and then like an hour after, I would just go check the [comments held for review], and there would just be people talking about normal stuff...There’s probably a bunch of nice comments caught in there but I don’t really have the brain space to go back and look through them and approve all of them, so I just kind of leave them there, which sucks.” – A YouTuber with over 100k followers who makes content discussing gender identity topics.

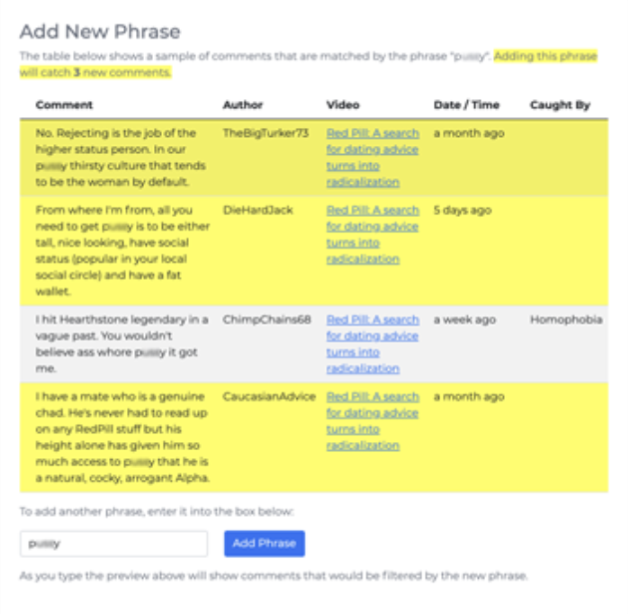

Showing creators examples of past comments that a filter would have removed helps them understand how the filter will behave and avoid false positives. Using our prototype word filter tool, one participant considered adding a swear word as a phrase but changed her mind when she saw that the preview included amusing comments posted by her friend.

“I think that [seeing examples of caught comments] is actually very helpful because it helps you understand how many of your word choices are incredibly common. For instance, if you were to use a word that’s a super common phrase and this table shows that a lot of people are going to end up using it generally in any comment, that helps me understand...how much I should narrow it down compared to what I was doing.” – A content creator on YouTube, Twitter, and Twitch who makes content about makeup.
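The preview idea can be sketched as a dry run of a candidate phrase against comments a creator has already received. The following Python is a minimal sketch under stated assumptions: `preview_matches` is a hypothetical helper, the sample comments are invented, and matching is a simple case-insensitive substring test rather than whatever a real tool would use.

```python
def preview_matches(phrase: str, past_comments: list[str], limit: int = 5) -> tuple[int, list[str]]:
    """Return how many past comments contain `phrase`, plus up to `limit` examples."""
    needle = phrase.lower()
    matches = [comment for comment in past_comments if needle in comment.lower()]
    return len(matches), matches[:limit]


# Invented sample data standing in for a creator's comment history.
past_comments = [
    "This recipe is so damn good",
    "Damn, I wish I had seen this sooner",
    "Nobody cares about your channel",
    "Loved the editing on this one",
]

count, examples = preview_matches("damn", past_comments)
share = count / len(past_comments)
print(f"'damn' would catch {count} of {len(past_comments)} past comments ({share:.0%})")
for comment in examples:
    print("  -", comment)
```

Seeing that half of the sampled comments are friendly uses of the word is the signal that would lead a creator to narrow or drop the phrase before enabling it.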

Creators should be able to audit and monitor the activity of word filters over time. Language norms or a creator’s content and audience may change over time. Harassers may also change their language to evade word filters, so creators wanted to periodically review the activity of their filters and make adjustments. Twelve out of the 19 creators we interviewed wanted to see how many comments were caught by each word filter and be able to inspect the caught comments for each filter. This way, they could audit what each filter took down to catch issues such as false positives, and even delete or refine a filter that created too many false positives. Creators also wanted graphs to show caught comments over time to spot unusual spikes or longer-term trends.

“Obviously, something like adding word filters could potentially have a negative impact if 75% of your viewers are saying a phrase that you filtered out. And now because you have an automatic delete function, now your comments have dropped 80% or something like that. So it probably would be cool to have statistics for stuff like that.” – A content creator on YouTube, Facebook, and Instagram who makes satirical content.
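The statistics creators asked for can be derived from a simple activity log. The sketch below is illustrative only: the log format (one date and phrase per caught comment), the function names, and the "at least double the previous week" spike rule are assumptions, and weeks with no caught comments are simply absent from the series.

```python
from collections import Counter
from datetime import date

# Hypothetical activity log: one (date, phrase) entry per caught comment.
activity_log = [
    (date(2021, 3, 1), "phrase-a"),
    (date(2021, 3, 3), "phrase-b"),
    (date(2021, 3, 8), "phrase-a"),
    (date(2021, 3, 9), "phrase-a"),
    (date(2021, 3, 10), "phrase-a"),
    (date(2021, 3, 11), "phrase-a"),
]


def caught_per_phrase(log: list[tuple[date, str]]) -> Counter:
    """Count caught comments for each configured phrase."""
    return Counter(phrase for _, phrase in log)


def caught_per_week(log: list[tuple[date, str]]) -> dict[tuple[int, int], int]:
    """Count caught comments per (ISO year, ISO week), in chronological order."""
    weeks: Counter = Counter()
    for day, _ in log:
        iso = day.isocalendar()
        weeks[(iso[0], iso[1])] += 1
    return dict(sorted(weeks.items()))


def spike_weeks(weekly: dict[tuple[int, int], int], factor: float = 2.0) -> list[tuple[int, int]]:
    """Flag weeks whose count is at least `factor` times the preceding recorded week."""
    weeks = list(weekly.items())
    return [week for (_, prev), (week, cur) in zip(weeks, weeks[1:]) if cur >= factor * prev]


weekly = caught_per_week(activity_log)
print(caught_per_phrase(activity_log))  # Counter({'phrase-a': 5, 'phrase-b': 1})
print(weekly)                           # {(2021, 9): 2, (2021, 10): 4}
print(spike_weeks(weekly))              # [(2021, 10)]
```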

Word filters need to be easy to categorize and support spelling variations. Interviewees wanted a better way to organize their word filters instead of just having a long list of phrases. Fifteen of the 19 creators we spoke with wanted to group phrases into different categories and monitor what was taken down at the category level. Creators also wanted to group spelling variations under a single phrase to be able to monitor or make changes to the group as a whole. For example, creators wanted to be able to configure different resulting actions, such as taking down the caught comment, blocking the author, or writing a custom automated response, for an entire group of filters.
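One way to picture this grouping is a small data model in which spelling variants hang off a phrase and the action is set once per category. The Python below is a sketch under stated assumptions: the `Phrase` and `Category` classes, the action labels, and the sample phrases are hypothetical and are not taken from any existing tool.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Phrase:
    text: str
    # Spelling variations grouped under one phrase so they can be managed together.
    variants: list[str] = field(default_factory=list)

    def matches(self, comment: str) -> bool:
        lowered = comment.lower()
        return any(form.lower() in lowered for form in [self.text, *self.variants])


@dataclass
class Category:
    name: str
    action: str  # assumed labels, e.g. "remove", "hold", "block_author", "auto_reply"
    phrases: list[Phrase] = field(default_factory=list)

    def first_match(self, comment: str) -> Optional[Phrase]:
        """Return the first phrase in this category that matches, or None."""
        return next((p for p in self.phrases if p.matches(comment)), None)


def moderate(comment: str, categories: list[Category]) -> Optional[tuple[str, str, str]]:
    """Return (category name, phrase text, action) for the first match, else None."""
    for category in categories:
        phrase = category.first_match(comment)
        if phrase is not None:
            return category.name, phrase.text, category.action
    return None


appearance = Category("Appearance insults", "remove", [Phrase("ugly", ["ugl1", "u g l y"])])
spam = Category("Spam", "hold", [Phrase("free followers")])

print(moderate("so UGL1 lol", [appearance, spam]))
# ('Appearance insults', 'ugly', 'remove')
print(moderate("Loved this video", [appearance, spam]))
# None
```

Reporting the canonical phrase rather than the variant that matched means takedowns can be counted per phrase and per category, and changing a category's action affects every phrase and variant in it at once.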

Creators want opportunities to collaborate on pre-made word filters with trusted peers. Our interviewees were excited about the prospect of being able to share word filters with other trusted creators and to work together on lists of phrases. Several creators said they expected that creators of a similar identity would come up with similar phrases to add to their word filter. They also wanted to import lists from trusted third-party organizations. Doing so would reduce the workload and the emotional strain of having to repeatedly look through the harassing comments one has received and think of offensive phrases to add.

“Having [a pre-made list to build off of] is just perfect for the creator, because they don’t even have to like spend the time, just like brewing over what words might be used towards them, which is like the most messed up thing to have to think about in the first place, so having a tool like this is just amazing. Honestly, it’s a lifesaver!” – A content creator on YouTube, Twitter, and Twitch who makes content about makeup.

Black and women creators who received racist and sexist comments wanted the option to enable pre-made categories of terms, such as lists of well-known racist and sexist terms, instead of having to create such commonly needed categories from scratch. However, interviewees only trusted pre-made lists from individuals or groups that they felt would understand the language norms of their community and the kind of harassment they face. While many public lists of different kinds of unwanted terms exist online, some can unintentionally censor marginalized groups. For instance, the widely used “List of Dirty, Naughty, Obscene and Otherwise Bad Words (LDNOOBW),” first created in 2012 by Shutterstock and used by developers and researchers at companies such as Slack and Google, contains terms commonly used and reappropriated in an affirming way by LGBTQ+ people.3

“If you guys created some sort of like—‘we’ve gathered together a panel of these five people to create our lists for this year and then every year it was edited’—right, because culture is constantly evolving, then I’d know that you guys have taken the time to select each of these people, and then I’m more likely to trust you guys as thought leaders in the space and use the lists.” – A gaming content creator on YouTube and Twitch.
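Sharing and importing lists could work roughly as follows. This is a minimal sketch, assuming a JSON exchange format, a creator-maintained set of trusted authors, and an exclusion list for terms a community uses in an affirming way; the function names, field names, and placeholder terms are all hypothetical.

```python
import json
from typing import Iterable


def export_category(name: str, phrases: Iterable[str], author: str) -> str:
    """Serialize a category so it can be shared with another creator."""
    return json.dumps({"name": name, "author": author, "phrases": sorted(set(phrases))})


def import_category(shared_json: str, trusted_authors: Iterable[str],
                    exclude: Iterable[str] = ()) -> dict:
    """Load a shared category from a trusted author, dropping locally excluded phrases."""
    shared = json.loads(shared_json)
    if shared["author"] not in set(trusted_authors):
        raise ValueError(f"untrusted list author: {shared['author']}")
    excluded = set(exclude)
    kept = [phrase for phrase in shared["phrases"] if phrase not in excluded]
    return {"name": shared["name"], "source": shared["author"], "phrases": kept}


# Placeholder terms stand in for real phrases.
shared = export_category("Misogyny", ["term-b", "term-a", "term-c", "term-a"], author="trusted_creator")
mine = import_category(shared, trusted_authors={"trusted_creator"}, exclude={"term-c"})
print(mine["phrases"])  # ['term-a', 'term-b']
```

Keeping the source author on the imported copy lets a creator see where each list came from, and the exclusion step reflects the concern that generic lists can sweep up reappropriated terms.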

3. Dodge, J., Marasović, A., Ilharco, G., Groeneveld, D., Mitchell, M., & Gardner, M. (2021). Documenting Large Webtext Corpora: A Case Study on the Colossal Clean Crawled Corpus. EMNLP.

Reimagining Word Filter Tools

To demonstrate how these four features could behave in a real application, we built a tool we have called FilterBuddy; it is a web application for creators to moderate their comments and manage their word filters. FilterBuddy currently works with YouTube, such that any YouTube creator can connect their account and start using it to moderate comments on their videos. FilterBuddy is a working prototype, albeit built by our research lab as opposed to a large product team, and is open source so that anyone can run it on their own server or add to its code to make it work for additional creator platforms, for instance.

Using a scenario based on a fictional YouTuber named Ariel, we illustrate how FilterBuddy can be deployed to reduce the workload and stress incurred by receiving harassing comments while letting creators retain control.

Ariel is a YouTube creator who likes to post videos of herself cooking kosher recipes. She recently posted a video that went viral, and as a result her channel has begun to receive thousands of new subscribers and comments. While Ariel appreciates many of the encouraging comments, she also receives a few comments that criticize her appearance. Other comments criticize her Jewish heritage, while a few are blatantly antisemitic.

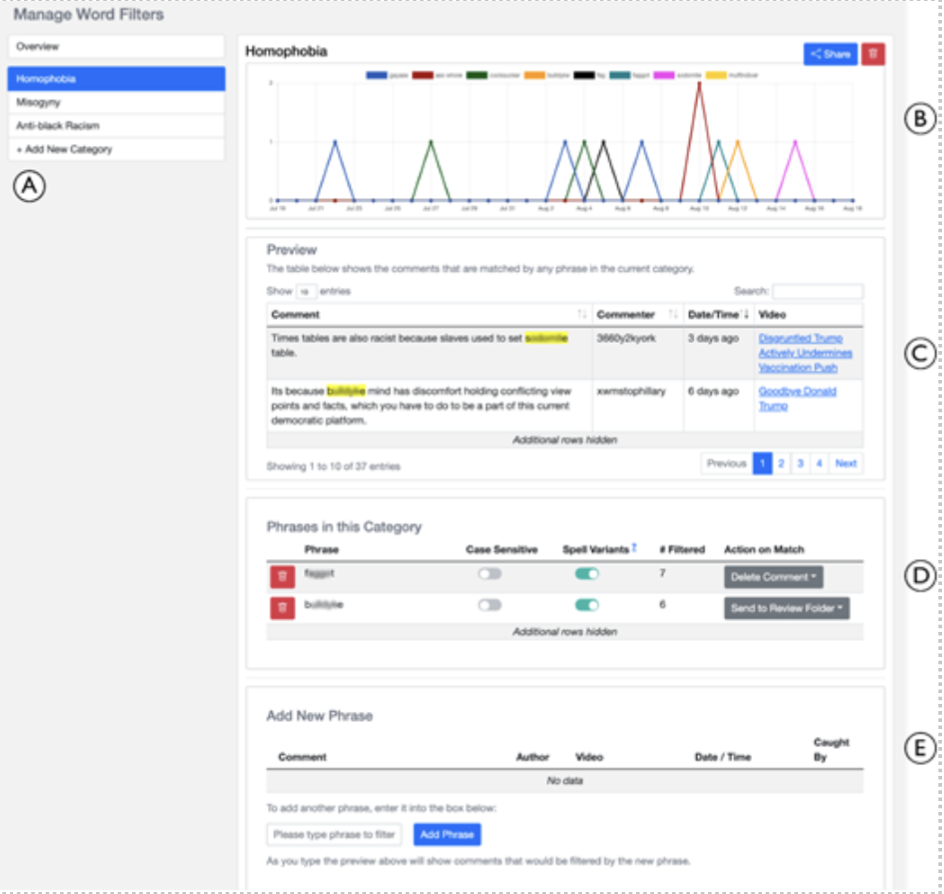

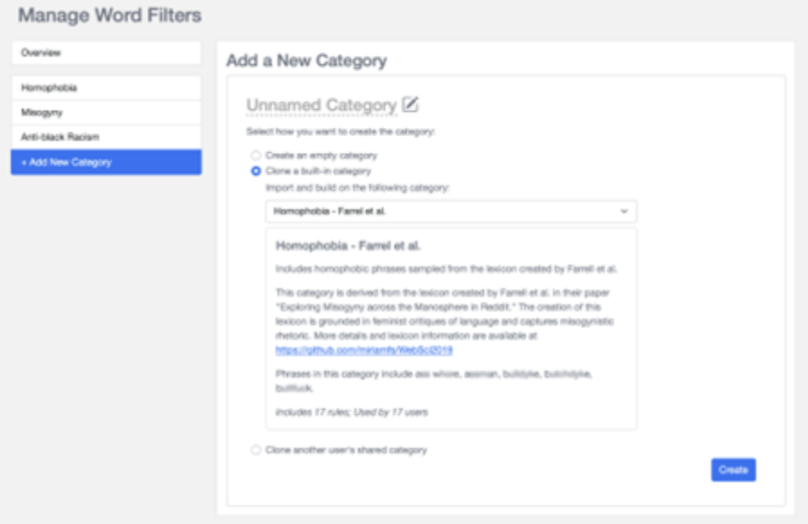

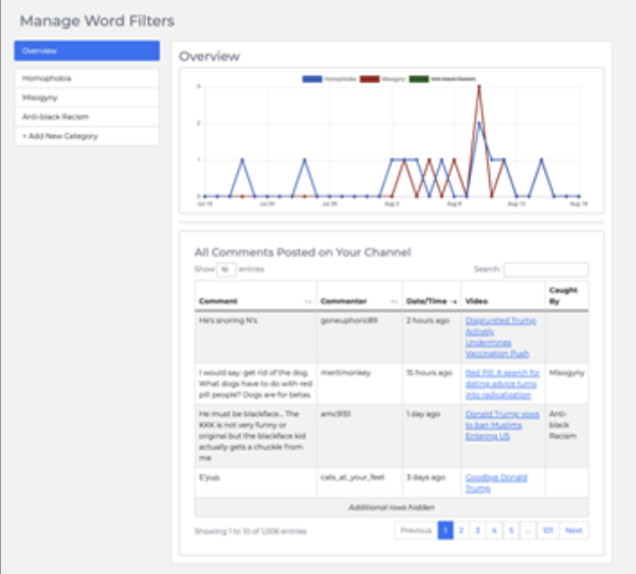

To reduce her stress and time spent removing these hurtful comments, Ariel logs in to FilterBuddy with her YouTube credentials. She notices that FilterBuddy already provides several pre-made categories of harmful terms (Figure 2), including a category for Misogyny started by a fellow YouTuber whom she trusts. She imports the category, and now she can see its full list of misogynistic phrases as well as the comments caught by each phrase (Figure 1).

Fig. 1: A screenshot of FilterBuddy’s category page showing (A) a sidebar with links to the overview page, each configured category page, and Add new category page; (B) a chart showing the number of comments caught by each category phrase in the past month; (C) a paginated table previewing all comments caught by the category; (D) a table of phrases configured in the category with options to include/exclude spelling variants and determine action on match for each phrase; and (E) a section to add new phrases in the category. Note that we limit the number of table rows we show in all the figures for brevity.

Figure 2. FilterBuddy’s ‘Add a New Category’ Page. Users can either create an empty category, import one of the built-in categories, or clone a category shared by another user to quickly set up their configurations. We show here the details of the built-in ‘Homophobia’ category selected in the dropdown.

Noticing that her channel also contains antisemitic comments, she creates a new category from scratch called Antisemitism. When she goes to add a new phrase to this category, FilterBuddy shows her prior comments on her videos that would have been caught by that phrase (Figure 1E, Figure 3). She reviews these comments to help her decide whether to keep or remove the phrase until she is satisfied with her list.

Fig. 3. Zooming in on the ‘Add New Phrase’ section on the FilterBuddy Category Page. As the user types in a phrase, the comments caught by that phrase are auto-populated. Comments not already caught by any configured phrases have a yellow background so that they are easily distinguished.
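The highlighting described in this caption amounts to asking, for each comment the new phrase would catch, whether any already-configured phrase catches it too. The sketch below illustrates that check and is not FilterBuddy's actual code: `annotate_preview`, the sample phrases, and the substring matching are assumptions for the example.

```python
def annotate_preview(new_phrase: str, existing_phrases: list[str],
                     comments: list[str]) -> list[tuple[str, bool]]:
    """For each comment the new phrase would catch, report whether it is newly caught."""
    new = new_phrase.lower()
    existing = [phrase.lower() for phrase in existing_phrases]
    rows = []
    for comment in comments:
        text = comment.lower()
        if new in text:
            already_caught = any(phrase in text for phrase in existing)
            # True means no configured phrase catches this comment yet,
            # so a UI could highlight the row.
            rows.append((comment, not already_caught))
    return rows


comments = ["go back to the kitchen", "the kitchen looks great", "nobody asked"]
rows = annotate_preview("kitchen", ["go back to"], comments)
for comment, is_new in rows:
    print("NEW " if is_new else "    ", comment)
```

In this example the only newly caught comment is a friendly one, which suggests the candidate phrase adds false positives without catching anything the existing filter misses.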

A week later, Ariel logs in to FilterBuddy, and her home page (Figure 4) shows her a temporal graph detailing how many comments were caught by her Misogyny and Antisemitism filters. To her relief, she notices that the number of antisemitic comments has decreased in the past week.

The home page also contains a table of her recent comments that have not been filtered, and she notices some misogynistic comments made that were not caught by the Misogyny category. To remove these going forward, she decides to add new phrases to the category. While inspecting the Misogyny category, she also sees a few recent innocuous comments caught by one of the terms, and she manually reinstates those comments. Because she doesn’t see too many false positives, she feels confident that her word filter isn’t unduly affecting her engagement metrics. She resolves to check back in a few more weeks to make additional adjustments.

Figure 4. FilterBuddy’s Home Page. It shows (a) a time-series graph for number of caught comments aggregated at the category level and (b) a paginated table of all comments posted on the user’s channel.

This scenario demonstrates how a creator can quickly ramp up moderation in the wake of a wave of harassment by bootstrapping from existing lists. From there, they can monitor the performance of their filters over time and make minor adjustments to the filters to better fit their particular context. The ability to monitor and audit the activity of their filters also means that they can become aware of and recover from either over- or under-moderating.

Takeaways and Recommendations

Our interviews with creators show that what they need most are tools that provide automation to speed up moderation work, coupled with monitoring and auditing capabilities to reduce concerns of over- or under-moderating. Pre-made filters from trusted peers and organizations can further reduce the work required while still providing fine-grained control and customization to fit their audience’s language norms and catch personalized harassment.

Positive feedback from creators on FilterBuddy suggests that the features we propose would be an improvement over the existing word filter tools offered on major platforms today. This research was conducted over the course of a year, with a few months of development work by a team of one postdoc and one graduate student to build a prototype. Many of the design ideas we implemented are not technically sophisticated, nor are they wildly creative user interfaces. The question remains why major platforms, which have considerably more resources than we do to design and develop such features, have not done so yet.

We call upon platforms to dedicate more resources towards supporting creators who face harassment through comments on their content. When creators moderate their comments, they also perform an important voluntary service that improves overall platform experience. If platforms won’t take on or pay for this stressful and time-consuming labor, they should improve the tools they offer creators. Platforms could also dedicate technical and human resources to foster an ecosystem of third-party tools such as FilterBuddy.

Recommendations

For Tech Companies

Improve tools for creator-led comment moderation. As a one-size-fits-all model for content moderation is insufficient, platforms need to consider the tools they provide to creators so they can customize their moderation beyond what is caught at the platform level. The current tools provided are underpowered and lack usability, particularly for content creators who receive many comments or are being targeted for harassment.

Make transparent how comment moderation impacts recommendation and demonetization. Greater transparency is needed into how platforms make decisions regarding recommendation algorithms that can punish or reward creators. Currently, these decisions are opaque, leading to significant stress for creators, many of whom are competing to earn more money on the platform.

In particular, content creators are anxious about how their actions to moderate content could have negative ramifications: being sanctioned for not moderating enough, or being downranked for moderating too much.

Provide training and guidance around comment moderation. Greater clarity around what is expected of content creators in terms of moderation and guidelines or training on how to best moderate, including how to best use existing tools, would help alleviate creators’ concerns. Discord’s Moderator Academy exemplifies this approach. However, care should be taken to ensure that responsibilities placed on creators for moderating do not penalize those who receive disproportionate harassment.

Create plugin ecosystems for third-party moderation. If platforms prefer not to develop additional moderation tools, they could instead open up a plugin ecosystem to support content creators, and allow third parties to develop a diversity of more powerful moderation tools. Reddit, for example, supported a range of third-party moderation tools before it began charging for access to its API in 2023, and Bluesky is currently experimenting in this area. Platforms could encourage a rich ecosystem by providing developer or financial support to tool builders and by clearly advertising the existence of these plugins on their platform.

For Developers and Designers of Moderation Tools

Design automated tools that enable control, customizability, and collaboration. While word filters seem like a simple tool, there are many features that can improve their usability when it comes to authoring and monitoring; in particular, examples of what would be caught or what has recently been caught are helpful explanations. Many participants expressed hesitation when it came to integrating more sophisticated automation such as machine learning into their toolkit. This is due to their lack of trust in such models and inability to understand them. But they were excited about the idea of importing and collaborating on pre-existing word filters created by organizations or individuals they trust.

For Content Creators

Be mindful of burnout and get support from trusted peers. Many volunteer moderators of large communities, such as on Reddit and Facebook, express feelings of burnout. The same goes for content creators and the labor they perform to keep their comments safe. Content creators should make use of existing automated tools, lean on trusted individuals to lower their burden, and know when to take a step back.

For Civil Society Organizations

Develop and publish pre-made word filters for different marginalized groups. We also found that people were interested in using pre-made word filter lists created by a trusted organization. Civil society organizations with expertise on harassment against specific marginalized groups could publish lists as a valuable service to people doing their own moderation.

For Government

Mandate transparency and data access for researchers. As with creators, too often researchers don’t have adequate access to platforms, and platforms themselves take approaches to transparency that are hard to compare across the industry.

Federal and state lawmakers should follow California’s example and enact policies similar to A.B. 587, a landmark bill requiring publication of aspects of platforms’ terms of service and the actions they take against content that violates those terms. Additionally, federal lawmakers should pass S. 1876, the Platform Accountability and Transparency Act, which would require social media companies to share more data with the public and researchers.

Pass federal legislation, such as anti-doxing and swatting laws, to hold perpetrators accountable for severe online abuse. Our laws have not kept up with increasing and worsening forms of digital abuse, and policymakers should enhance access to justice for victims of serious online harms. Many forms of severe online misconduct, such as doxing and swatting, currently fall under the remit of extremely generalized cyberharassment and stalking laws. These laws often fall short of securing recourse for victims of these severe forms of online abuse. Policymakers must introduce and pass legislation that holds perpetrators of severe online abuse accountable for their offenses at both the state and federal levels. ADL’s Backspace Hate initiative works to bridge these legal gaps and has had numerous successes, especially at the state level.

Appendix

Method

We conducted semi-structured interviews with 19 content creators on sites like YouTube, Twitch, and TikTok between October 2020 and April 2021. Although platforms have made some changes in the roughly three years since then, we believe our findings remain relevant today. We sought to recruit a diverse set of participants, ensuring that our sample included creators with channels of different sizes focused on a variety of topics and residing in multiple countries. Prior research has shown that BIPOC, LGBTQ+, Jewish, Muslim, and women users experience more frequent instances of online harassment. We therefore oversampled certain gender, racial, and LGBTQ+ groups, since we expect that these groups would especially benefit from upgraded moderation tools. Our selection criteria included choosing only those creators who had received at least a few dozen comments on their channels, so that they would understand the challenges of content moderation.

In parallel with conducting interviews, we made mockups of new features that could be added to a word filter tool and shared them with interviewees for feedback. As the interviews progressed, our iterative design exploration led us to distill a series of core features that addressed creator needs. We then built a working tool, FilterBuddy, that implements these features along with feedback we received from interviews. The tool allows a YouTuber to connect with their YouTube account to create new word filters over the comments they’ve received, monitor the impact of their filters over time, and collaborate on word filters with others. Finally, we recruited an additional eight participants who are creators on YouTube to explore the tool using their YouTube comments and provide additional feedback.

Participant Demographics

Below, we detail the demographic information of the participants of our interview study.

Author Bios

Shagun Jhaver

Shagun Jhaver is a social computing scholar whose research focuses on improving content moderation on digital platforms. He is currently studying how internet platform design and moderation policies can address societal issues such as online harassment, misinformation, and the rise of hate groups. Jhaver is an assistant professor at Rutgers University’s School of Communication & Information in the Library & Information Science Department. Before joining Rutgers, he completed his PhD in Computer Science at Georgia Tech and served as a postdoctoral scholar at the University of Washington.

Amy X. Zhang

Amy X. Zhang is an assistant professor at University of Washington's Allen School of Computer Science & Engineering, where she leads the Social Futures Lab, dedicated to reimagining social and collaborative systems to empower people and improve society. Previously, she was a 2019-20 postdoctoral researcher in Stanford CS after completing her Ph.D. at MIT CSAIL in 2019, where she received the George Sprowls Best Ph.D. Thesis Award at MIT in computer science. She received an M.Phil. in Computer Science at the University of Cambridge on a Gates Fellowship and a B.S. in Computer Science at Rutgers University, where she was captain of the Division I Women's tennis team.